Virtual Reality Systems

CSE 493V | Spring 2023

Visit the Winter 2025 website for the latest updates.

Overview

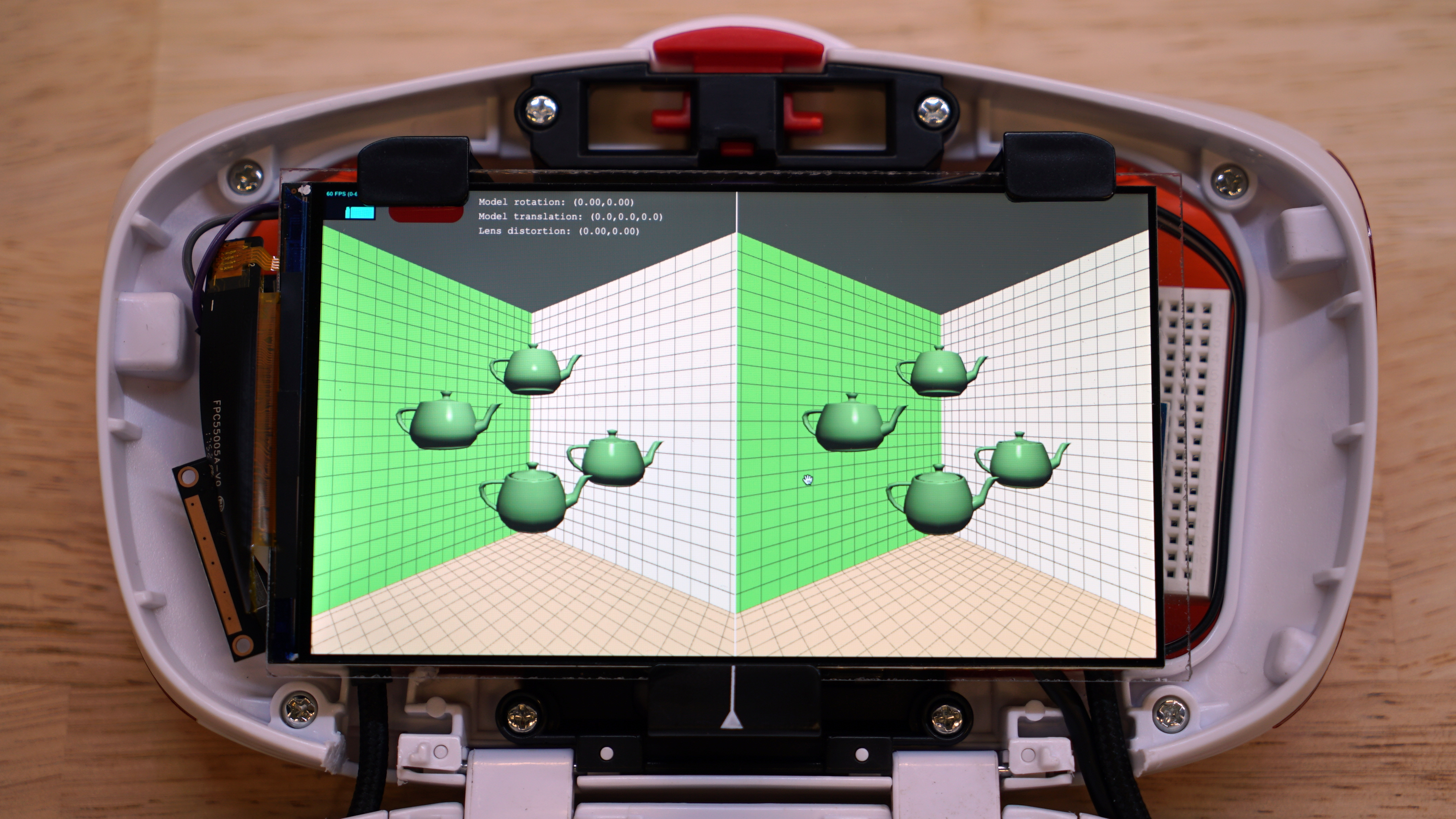

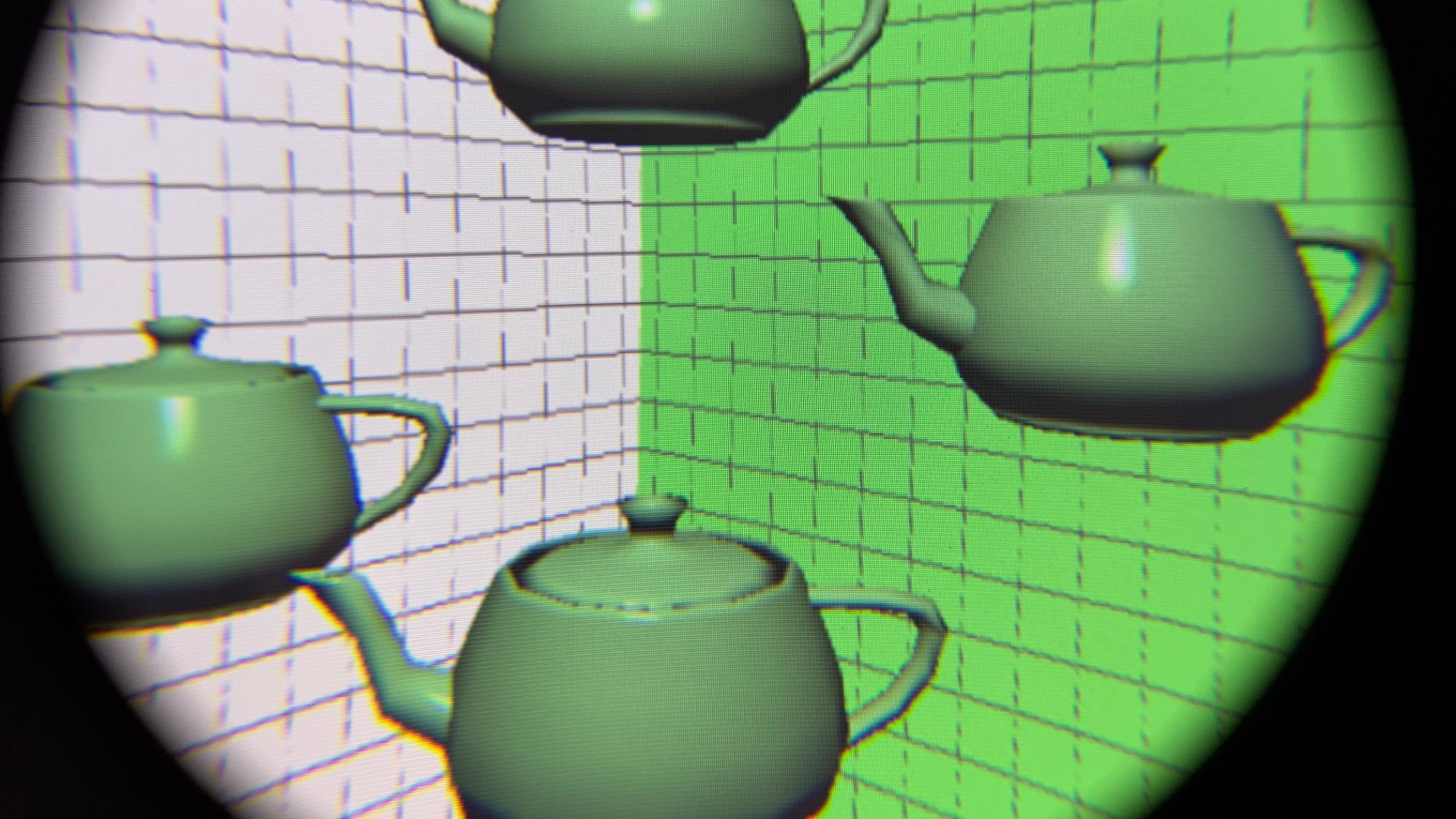

Modern virtual reality systems draw on the latest advances in optical fabrication, embedded computing, motion tracking, and real-time rendering. In this hands-on course, students will develop similar cross-disciplinary knowledge by building a head-mounted display. This overarching project spans hardware (optics, displays, electronics, and microcontrollers) and software (JavaScript, WebGL, and GLSL). Each assignment builds toward this larger goal. For example, in one assignment, students will learn to use an inertial measurement unit (IMU) to track the orientation of the headset. In another assignment, students will apply real-time computer graphics to correct lens distortions. Lectures will complement these engineering projects, diving into the history of AR/VR and relevant topics in computer graphics, signal processing, and human perception. Guest speakers from leading AR/VR companies will participate, including by hosting field trips.

For a summary of the 2020 edition of CSE 493V, including interviews with the students, please read "New Virtual Reality Systems course turns students into makers", as published by the Allen School News.

Acknowledgments

This course is based on Stanford EE 267. We thank Gordon Wetzstein for sharing his materials and supporting the development of CSE 493V. We also thank Brian Curless, David Kessler, John Akers, Steve Seitz, Ira Kemelmacher-Shlizerman, and Adriana Schulz for their support.

Requirements

This course is designed for senior undergraduates and early MS/PhD students. No prior experience with hardware is required. Students are expected to have completed Linear Algebra (MATH 308) and Systems Programming (CSE 333). Familiarity with JavaScript, Vision (CSE 455), and Graphics (CSE 457) will be helpful, but not necessary. Registration is limited to 40 students.

Teaching Staff

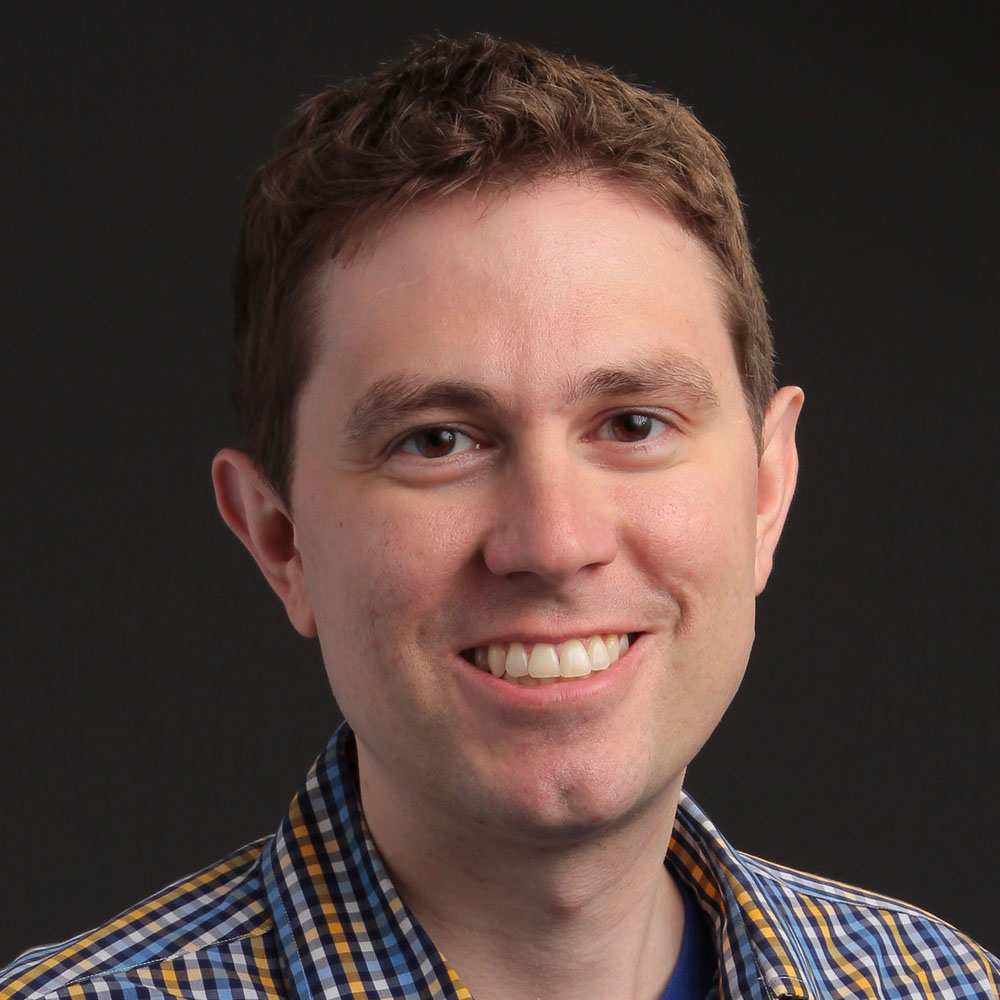

Douglas Lanman

Affiliate Instructor, University of Washington, CSE

Senior Director, Display Systems Research, Reality Labs Research

Douglas is the Senior Director of Display Systems Research at Reality Labs Research, where he leads investigations into advanced display and imaging technologies. His prior research has focused on head-mounted displays, glasses-free 3D displays, light field cameras, and active illumination for 3D reconstruction and interaction. He received a B.S. in Applied Physics with Honors from Caltech in 2002 and M.S. and Ph.D. degrees in Electrical Engineering from Brown University in 2006 and 2010, respectively. He was a Senior Research Scientist at Nvidia Research from 2012 to 2014, a Postdoctoral Associate at the MIT Media Lab from 2010 to 2012, and an Assistant Research Staff Member at MIT Lincoln Laboratory from 2002 to 2005. His recent work focuses on passing the visual Turing test with AR/VR displays.

Teerapat (Mek) Jenrungrot

PhD Student, University of Washington, CSE

Mek is a fourth-year PhD student in the UW Reality Lab and the UW Graphics and Imaging Laboratory (GRAIL). His current research interests are at the intersection of audio and computer vision, with a focus on voice calling and telecommunications-related technologies. He has previously worked on 3D audio separation, neural audio codecs with large language models, and spatial audio.

Diya Joy

BS/MS Student, University of Washington, CSE

Diya is a fifth-year BS/MS student interested in computer graphics and game development. Her current research is focused on developing games for educational purposes. Outside of campus, she likes bouldering, board games, and reading.

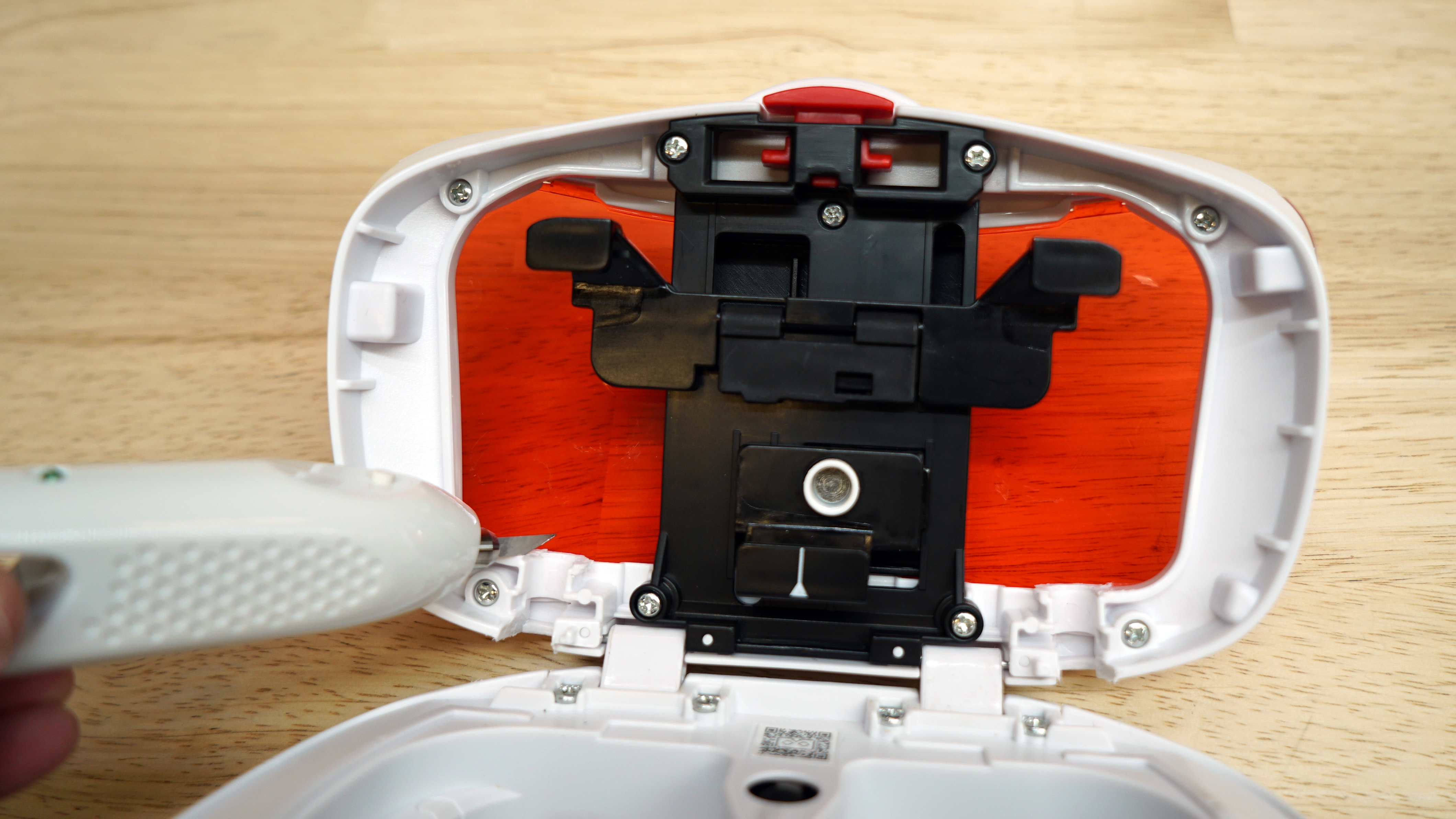

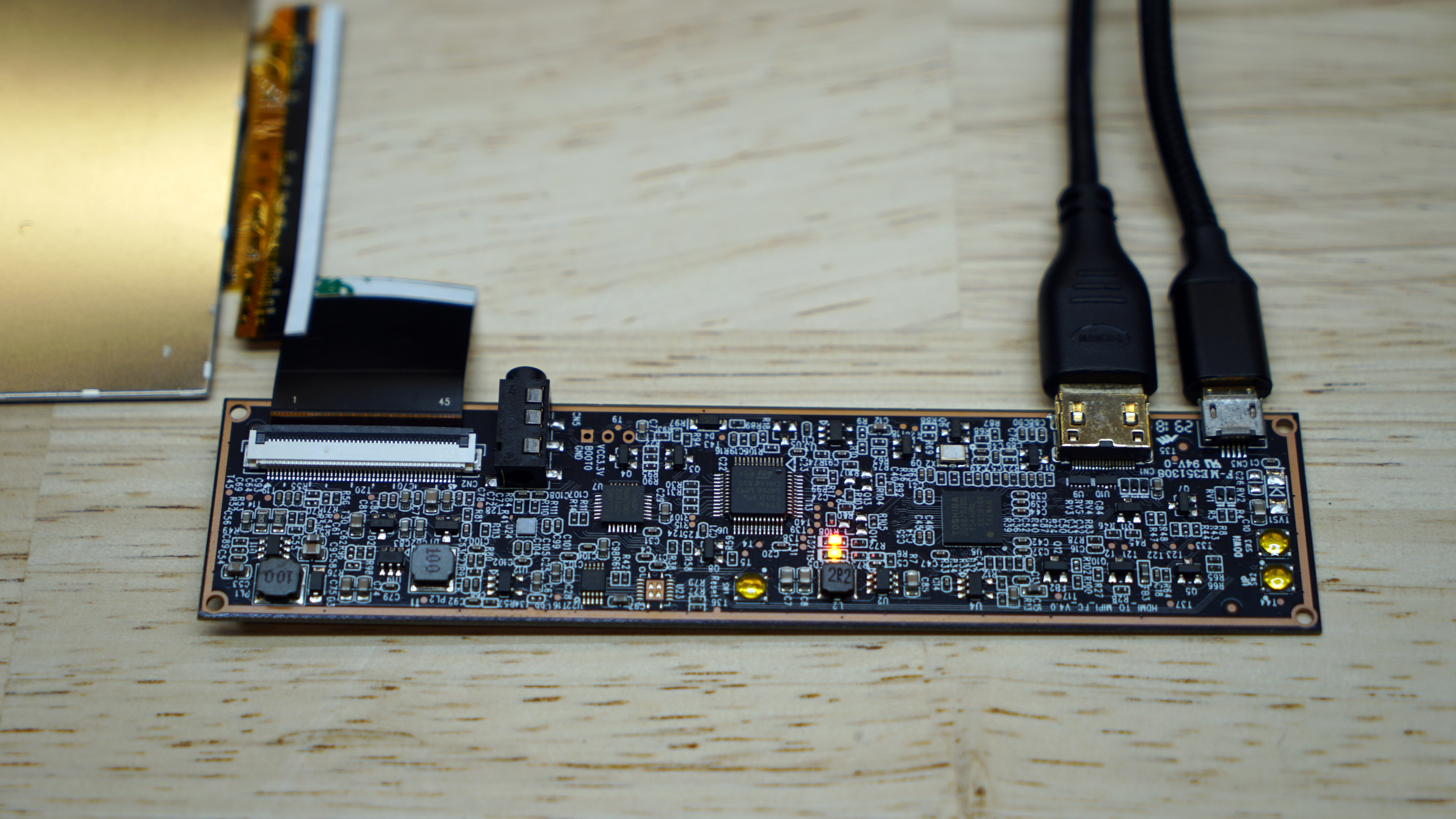

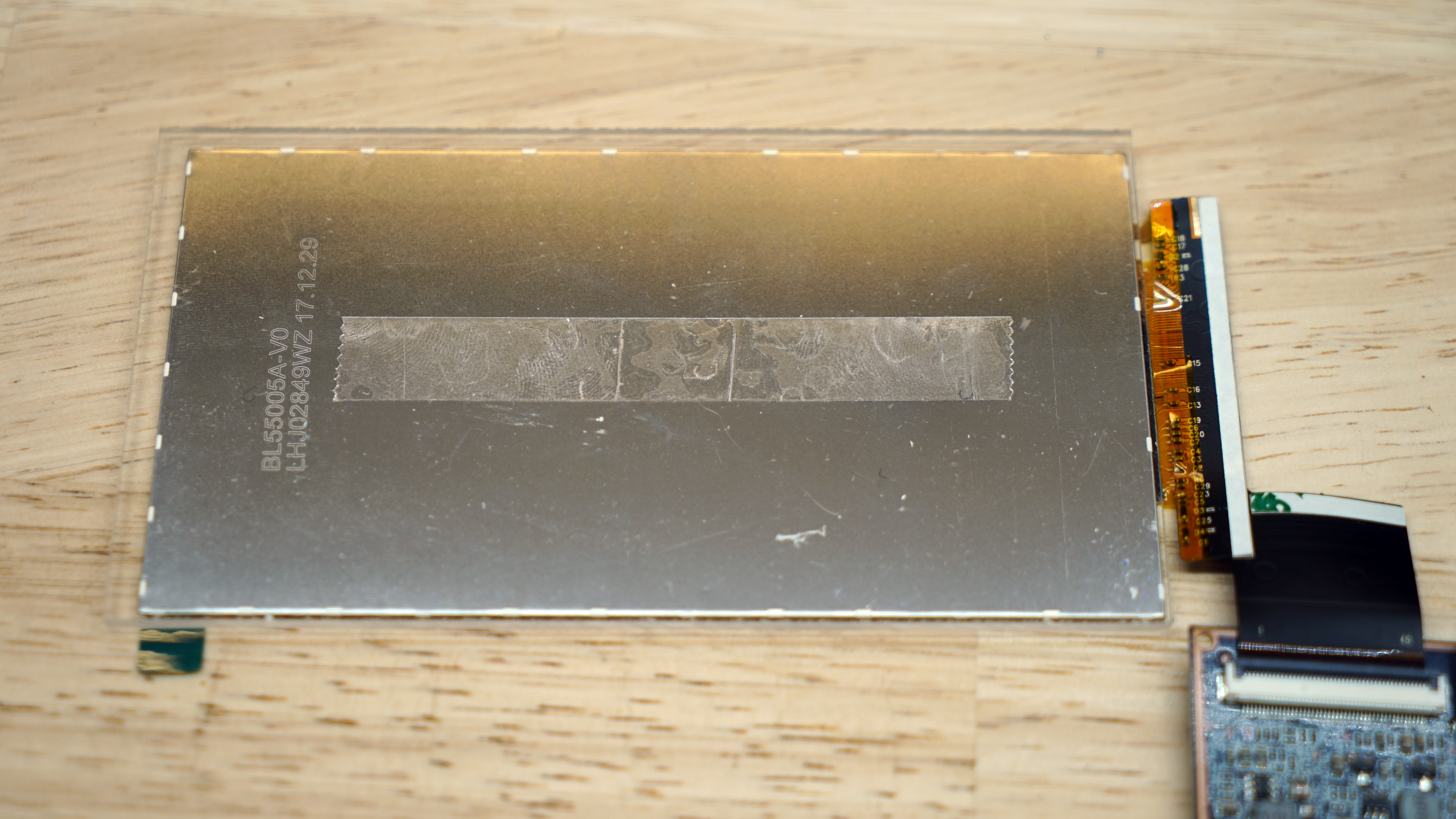

VR Headset Development Kit

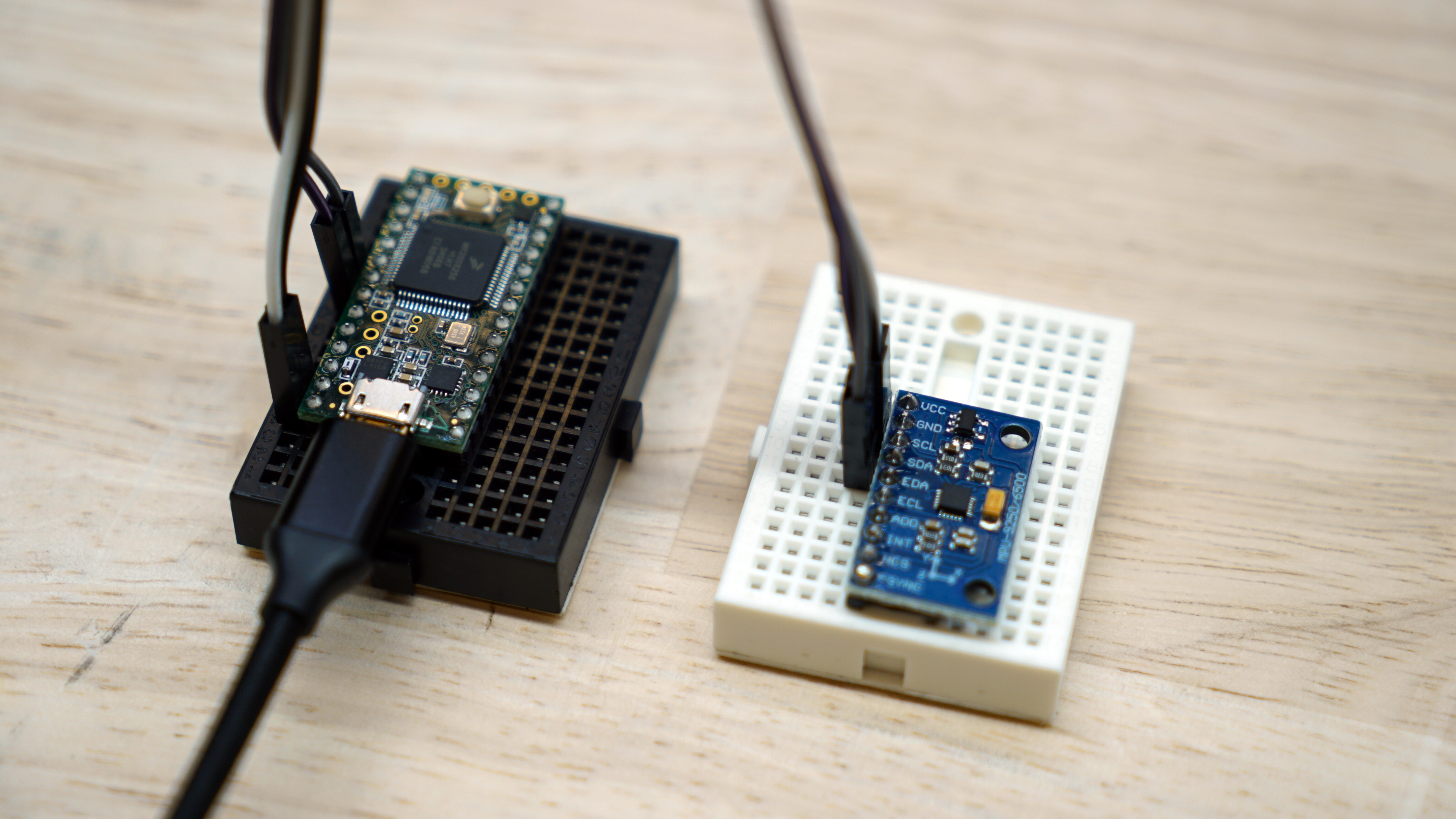

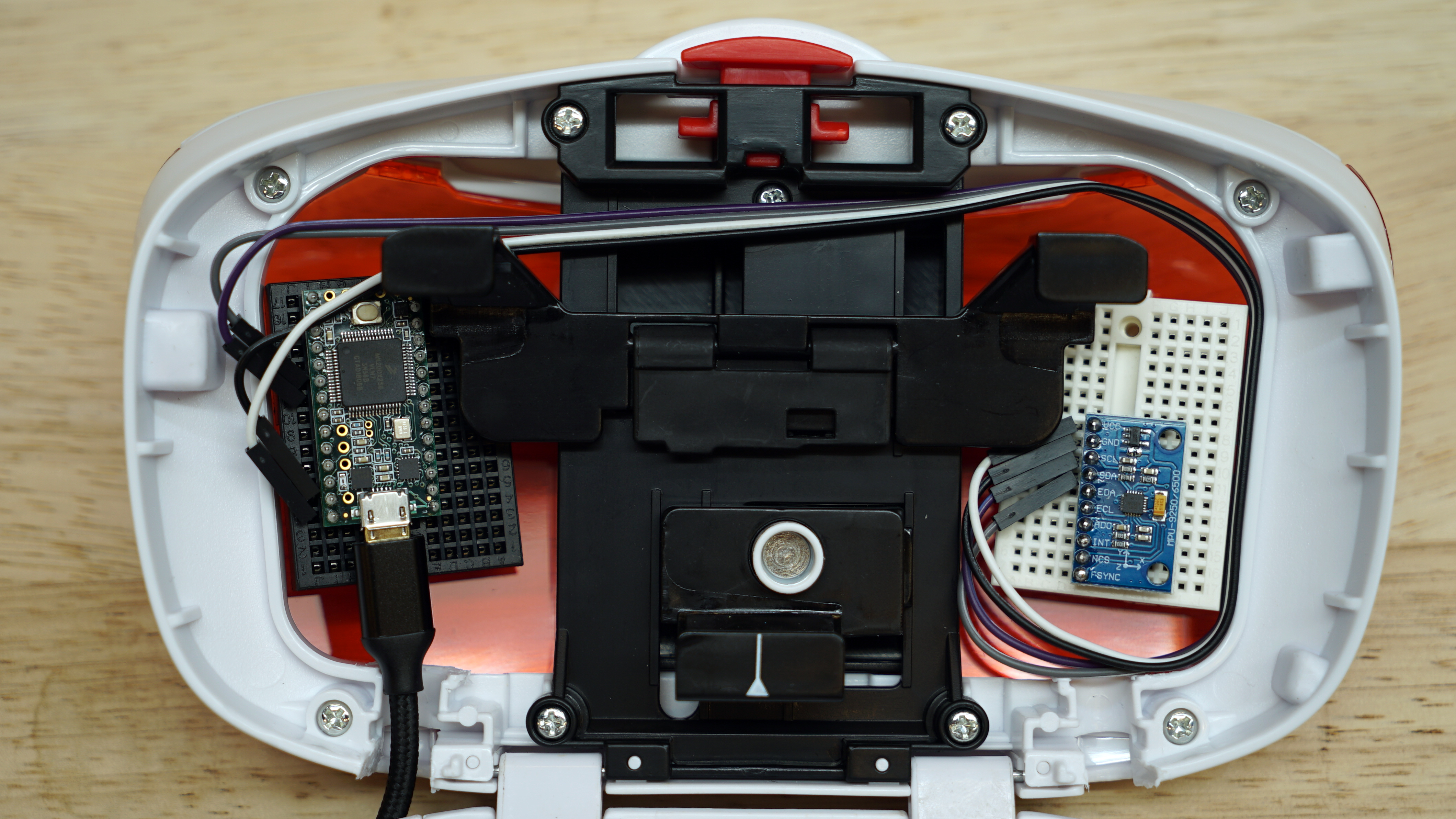

Students will be provided a kit to build their own head-mounted display, including an LCD, an HDMI driver board, an inertial measurement unit (IMU), lenses, an enclosure, and all cabling. Kits must be returned at the end of the course. All software will be developed through the homework assignments. Component details are listed below.

| Component | Model | Details |
|---|---|---|
| HMD Enclosure | View-Master Deluxe VR Viewer | Mattel |
| Display Panel | Wisecoco 6″ 2560×1440 LCD | Wisecoco |
| Display Mount | Acrylic Sheet (140 mm × 82 mm × 2.5 mm) | TAP Plastics |
| Microcontroller | Teensy 4.0 | PJRC |
| IMU | InvenSense MPU-9250 | HiLetgo |
| Breadboards | Elegoo Mini Breadboard Kit | Elegoo |
| Jumper Wires | Edgelec 30 cm Jumper Wires (Male to Male) | Edgelec |
| HDMI Cable | StarTech 6′ High Speed HDMI Cable | StarTech |
| USB Cables | Anker 6′ Micro USB Cable (2-Pack) | Anker |
| Tape | Scotch Permanent Double-Sided Tape | Scotch |
| Velcro | Strenco 2″ Adhesive Hook and Loop Tape | Strenco |

Final Projects

| Description | Materials |

|---|---|

| Hardware | |

| High Spatial Acuity Haptics | Report and Poster |

| An Autostereoscopic Display Prototype | Report |

| Physically Based Interaction in Virtual Reality | |

| Interactive Fluid Simulations in VR | Website and Poster |

| Jelly Physics: Soft-Body Dynamics | Report and Poster |

| Computer Vision, Eye Tracking, and SLAM | |

| Exploring Pupil Tracking | Report |

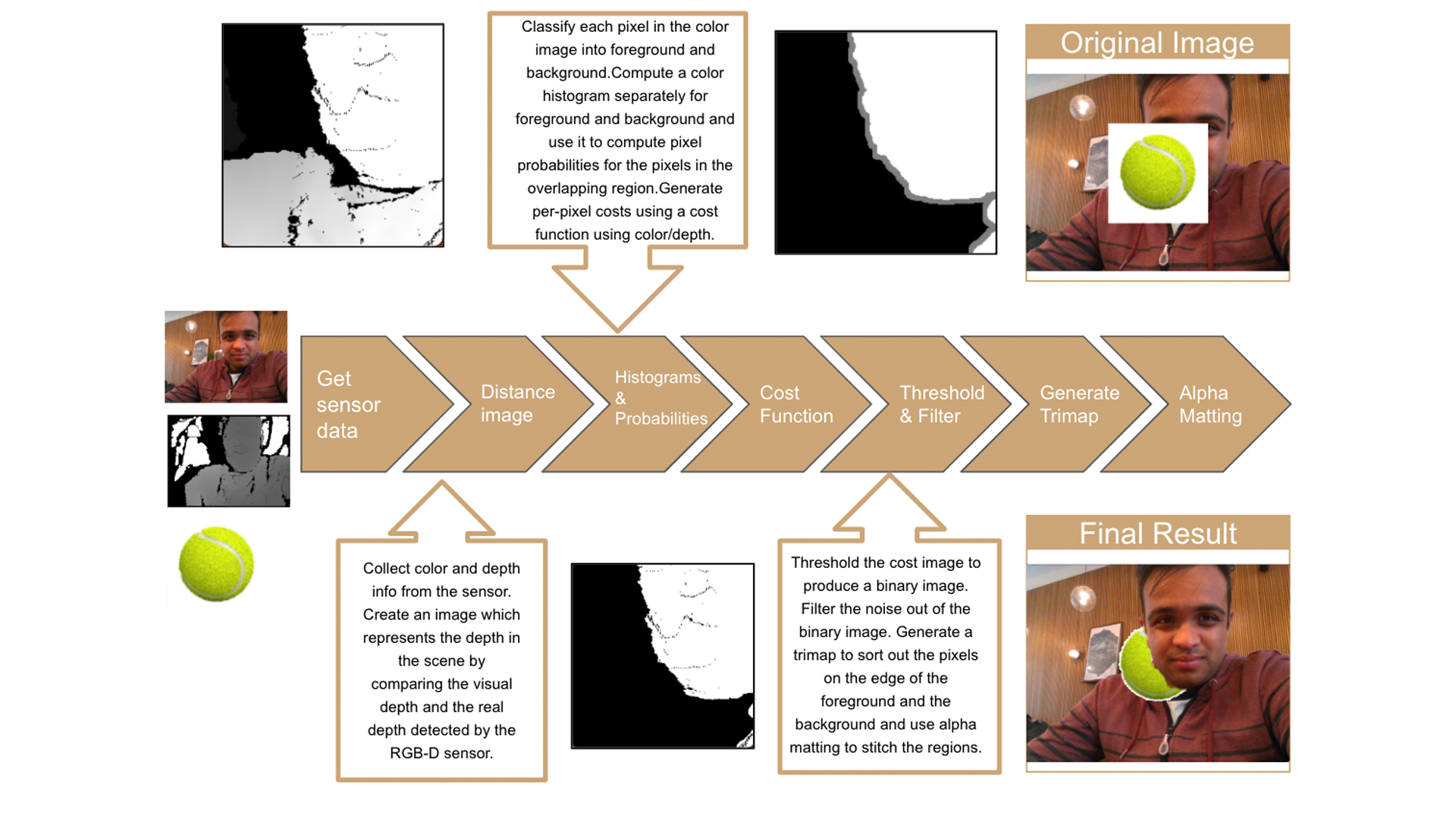

| Occlusion Handling in Augmented Reality | Report and Poster |

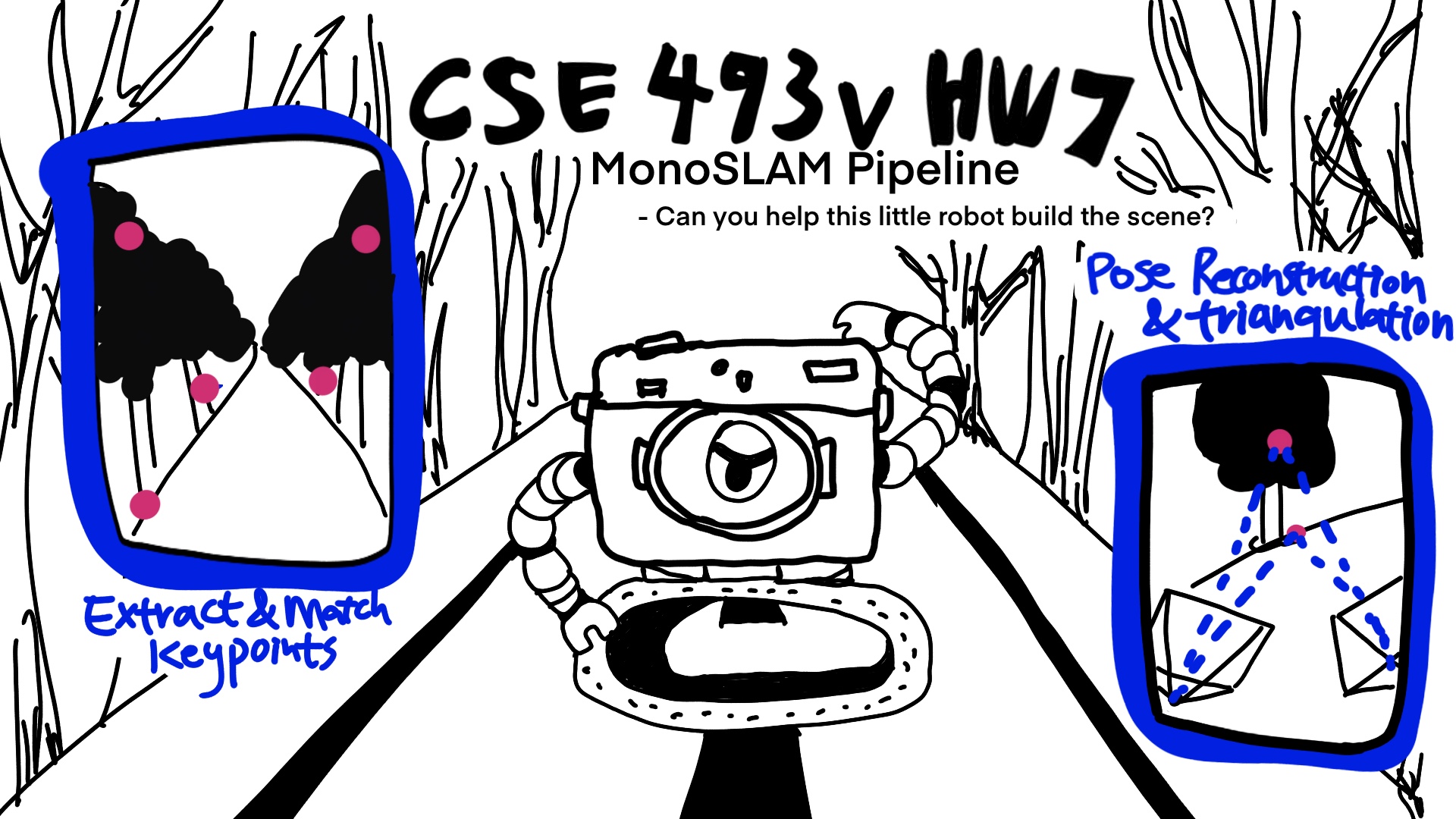

| Homework 7: Learning SLAM | Report and Poster |

| Converting 2D Images to 3D Anaglyphs | Report and Poster |

| Rendering Physical Objects in VR | Report and Poster |

| Vision Science | |

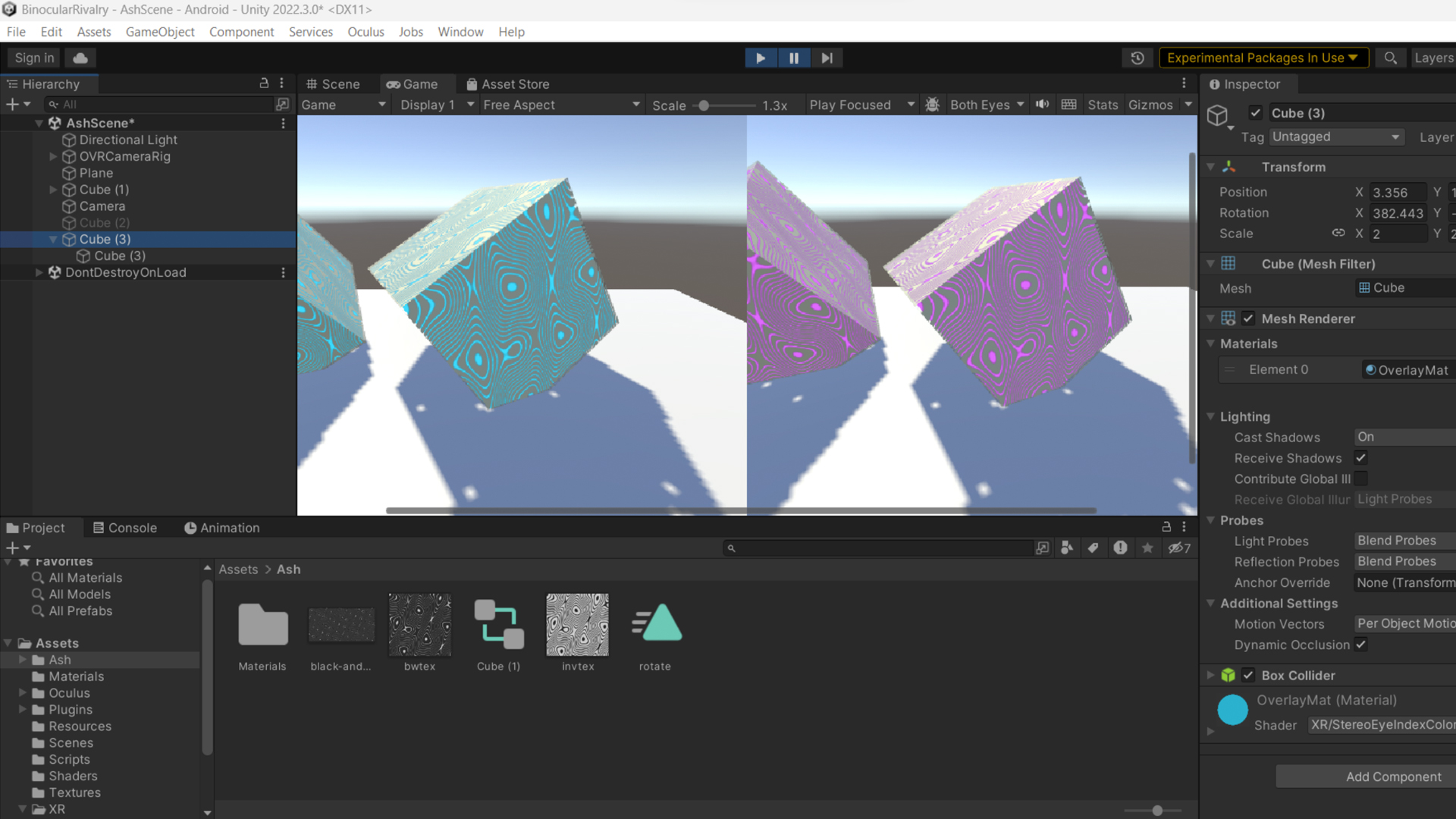

| Studying Binocular Rivalry in VR | Report and Poster |

| Audio | |

| Spatial Audio with Any Headphones | Report and Poster |

| Generative AI for Virtual Reality | |

| Ethereal: AI-Powered VR Adventure | Website and Poster |

| Cornucopia of Stories | Website and Poster |

| Creating VR Content using Generative AI | Report and Poster |

| Design Tools and Interactive Computing | |

| Holographic Whiteboard | Website and Poster |

| Creating Game Scenes in VR | Report and Poster |

| Arranging Furniture using Mixed Reality | Report and Poster |

| Games and Training Applications | |

| Bird Hunt VR | Website and Poster |

| The T.R.U.S.T. Game | Report and Poster |

| Introducing Chess En Garde | Report and Presentation |

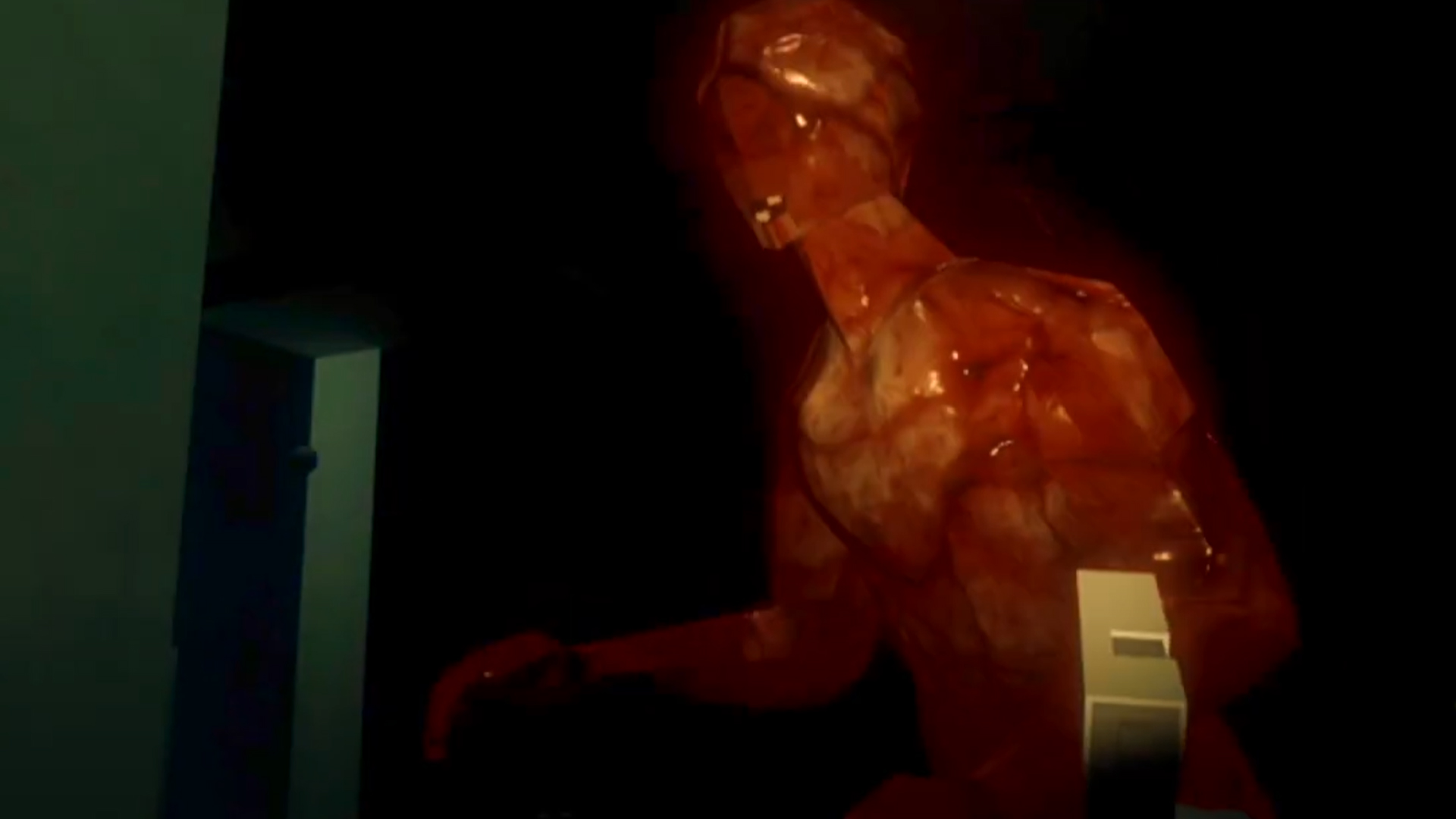

| A VR Horror Experience Utilizing Eye Tracking | Report and Poster |

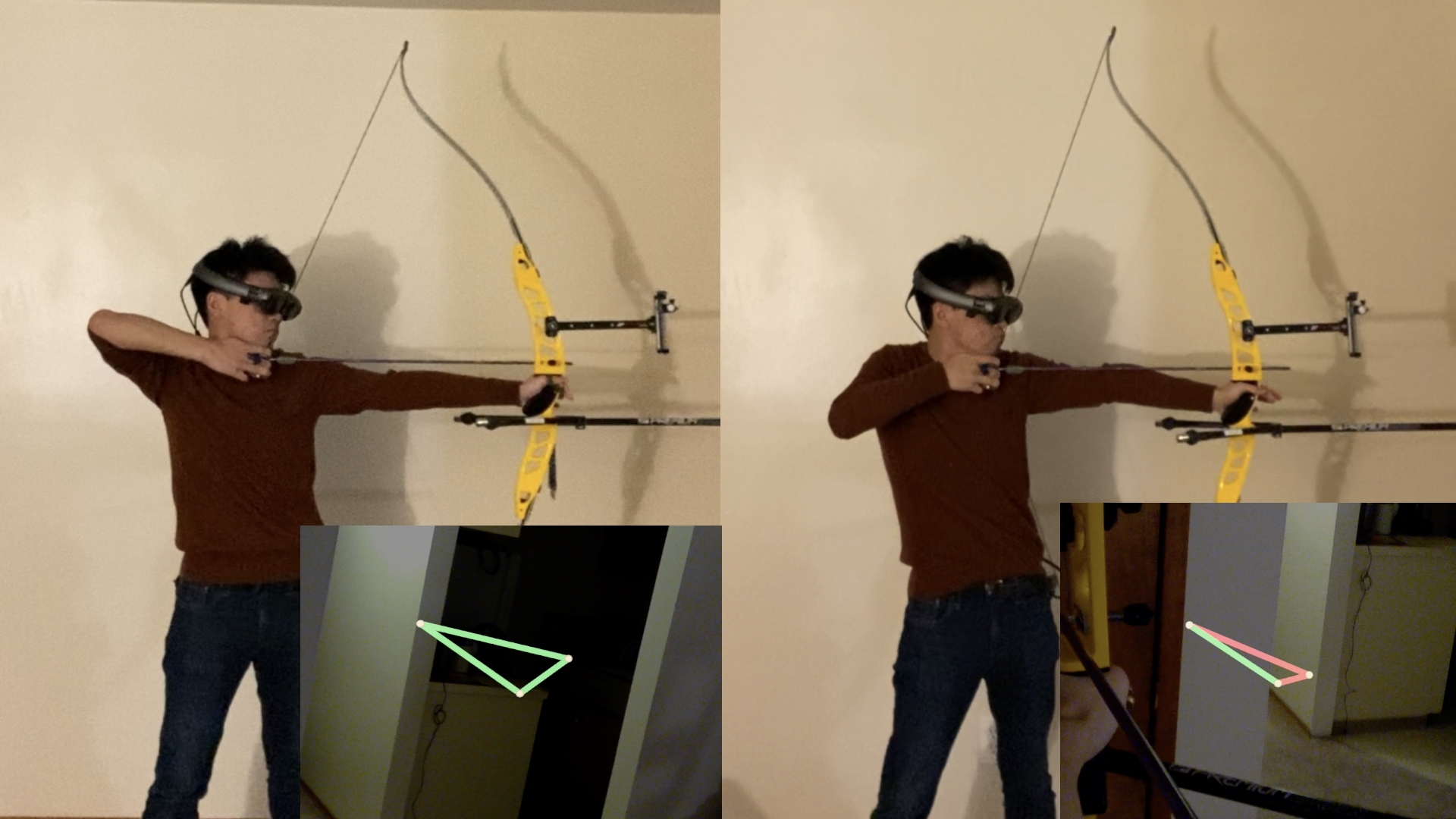

| Training Archery Form using Augmented Reality | Report and Poster |

Schedule

Lectures are on Wednesdays and Fridays from 4:30pm to 5:50pm in CSE2 G001.

| Date | Description | Materials | Readings |
|---|---|---|---|
| Wednesday March 29 | Introduction to VR/AR Systems | Slides | Sutherland [1968] |
| Friday March 31 | Head-Mounted Displays Part I: Conventional Optical Architectures | Slides | Kore [2018] |
| Wednesday April 5 | Head-Mounted Displays Part II: Emerging Optical Architectures | Slides | |
| Friday April 7 | The Graphics Pipeline and OpenGL Part I: Overview and Transformations | Slides and Notes | Marschner (Ch. 6 & 7) |
| Wednesday April 12 | The Graphics Pipeline and OpenGL Part II: Lighting and Shading | Slides and Video | Marschner (Ch. 10 & 11) |
| Friday April 14 | The Graphics Pipeline and OpenGL Part III: OpenGL Shading Language (GLSL) | Slides and Video | |
| Wednesday April 19 | The Human Visual System | Slides and Video | LaValle (Ch. 5 & 6) |
| Friday April 21 | The Graphics Pipeline and OpenGL Part IV: Stereo Rendering | Slides and Video | |
| Wednesday April 26 | Inertial Measurement Units Part I: Overview and Sensors | Slides, Video, and Notes | LaValle (Ch. 9.1 & 9.2) |
| Friday April 28 | Inertial Measurement Units Part II: Filtering and Sensor Fusion | Slides and Video | |
| Wednesday May 3 | Positional Tracking Part I: Overview and Sensors | Slides, Video, and Notes | |
| Friday May 5 | Positional Tracking Part II: Filtering and Calibration | Slides | |
| Wednesday May 10 | Advanced Topics Part I: Spatial Audio, Antje Ihlefeld (Meta) | Slides | LaValle (Ch. 11) |
| Friday May 12 | Advanced Topics Part II: Engines and Emerging Technologies | Slides and Video | |
| Wednesday May 17 | Advanced Topics Part III: VR Video Capture | Slides and Video | |
| Friday May 19 | Advanced Topics Part IV: Direct-View Light Field Displays | Slides and Video | |
| Wednesday May 24 | Final Project Working Session | | |
| Friday May 26 | Industry Presentation: Valve Corporation, Jeremy Selan | Video | |
| Wednesday May 31 | Industry Presentation: Google Project Starline, Dan B. Goldman | | |
| Friday June 2 | Final Project Working Session | | |
| Monday June 5 | Final Project Demo Session (Open to the Public), 1:00pm to 3:00pm in CSE 100 Microsoft Atrium | | |

Assignments

Students will complete six homeworks and a final project. Each homework is accompanied by a lab (a tutorial video). Labs must be completed before starting the homeworks. We encourage formatting written portions of homework solutions using the CSE 493V LaTeX template. Students must submit a one-page final project proposal and a final report. Final reports may take the form of a website or a conference manuscript.

| Due Date | Description | Materials |
|---|---|---|
| Thursday April 13 | Homework 1: Transformations in WebGL | Lab 1 and Video; Assignment and Code Solutions |
| Thursday April 20 | Homework 2: Lighting and Shading with GLSL | Lab 2 and Video; Assignment and Code Solutions |
| Monday May 1 | Homework 3: Stereoscopic Rendering and Anaglyphs | Lab 3 and Video; Assignment and Code Solutions |
| Friday May 5 | Homework 4: Build Your Own HMD | Lab 4 (2023, 2020) and Video; Assignment and Code Solutions |
| Monday May 8 | Final Project Proposal | Directions and Template; Example |
| Monday May 15 | Homework 5: Orientation Tracking with IMUs | Lab 5 and Video; Assignment and Code Solutions |
| Monday May 22 | Homework 6: Pose Tracking | Lab 6; Assignment and Code Solutions |
| Wednesday June 7 | Final Project Report | Template |

Grading and Collaboration

The grading breakdown is as follows: homeworks (70%) and final project (30%).

Projects are due by midnight on the due date. Late assignments are marked down at a rate of 25% per day: an assignment turned in late is worth 75% during the first 24 hours after the deadline, 50% during the next 24 hours, 25% during the 24 hours after that, and nothing thereafter. Exceptions will be granted only with prior instructor approval.
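As an illustration of the late policy, the following minimal JavaScript sketch computes the fraction of credit an assignment retains. The function name `lateCreditMultiplier` and its `hoursLate` parameter are hypothetical and not part of the course code. The sketch also assumes that a submission exactly 24 hours late still falls in the first late day; the teaching staff's actual handling of boundary cases may differ.

```javascript
// Hypothetical helper (not part of the course materials): returns the
// fraction of credit retained for a submission that is `hoursLate` hours
// past the deadline, following the 25%-per-day markdown described above.
function lateCreditMultiplier(hoursLate) {
  // On-time (or early) submissions keep full credit.
  if (hoursLate <= 0) {
    return 1.0;
  }
  // Each started 24-hour period after the deadline costs 25%.
  // Assumption: exactly 24 hours late still counts as the first late day.
  const daysLate = Math.ceil(hoursLate / 24);
  // Credit never drops below zero.
  return Math.max(0, 1 - 0.25 * daysLate);
}

// Example usage: an assignment scored 90/100 and submitted 30 hours late.
const rawScore = 90;
const adjustedScore = rawScore * lateCreditMultiplier(30);
console.log(adjustedScore); // 45
```

Under these assumptions, a submission 30 hours late falls in the second late day and keeps 50% of its score.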

While the headset development kits will be shared, students are expected to individually write their homework solutions. Students may collaborate to discuss concepts for the homeworks, but are expected to be able to explain their solutions for the purposes of grading by the instructor and TAs. Final project groups can be as large as three students, subject to instructor approval.

Textbooks and Resources

Lectures are supplemented by course notes, journal articles, and textbook chapters. The following textbooks, which are freely available to University of Washington students via the links below, will be used for CSE 493V.

- Marschner and Shirley. Fundamentals of Computer Graphics. 4th Edition. CRC Press, 2015.

- LaValle. Virtual Reality. Cambridge University Press, 2016.

All software will be developed using JavaScript, WebGL, and GLSL. Students should review the following tutorials and online resources to prepare for the labs, homeworks, and final projects.

- w3schools.com: JavaScript Tutorial

- WebGL using three.js: Slides, Video, Documentation, and Source

- khronos.org: WebGL 1.0 API Quick Reference Card

Office Hours and Contacts

We encourage students to post their questions to Ed Discussion. The teaching staff can also be contacted directly. The instructor and TAs will hold weekly office hours at the following times.

- Douglas Lanman (Wednesdays, 5:50pm to 6:30pm, CSE2 G001)

- Teerapat (Mek) Jenrungrot (Thursdays, 2:30pm to 3:30pm, CSE2 121; also available by appointment)

- Diya Joy (Tuesdays and Thursdays, 11:30am to 12:30pm, CSE2 323)