Abstract

"The Cornucopia of Stories" is an innovative project aimed at providing a captivating 3D experience for children. Through our virtual reality (VR) software, users are transported into a mesmerizing world of immersive storytelling. The software brings realistic 3D scenes to life through the user's headset while a narrator weaves enchanting tales.

Introduction

The current version of "The Cornucopia of Stories" tells the tale of Hansel and Gretel through fully built 3D landscapes that the user can explore while the narrator continues the story. Interactive buttons take the user to the next or previous part of the story and let them replay any narration they missed. As VR technology advances, we wanted to develop a product that gets children interested in classic stories and fairy tales by bringing the world's rich body of lore, written by great authors, into this new medium.

Contributions

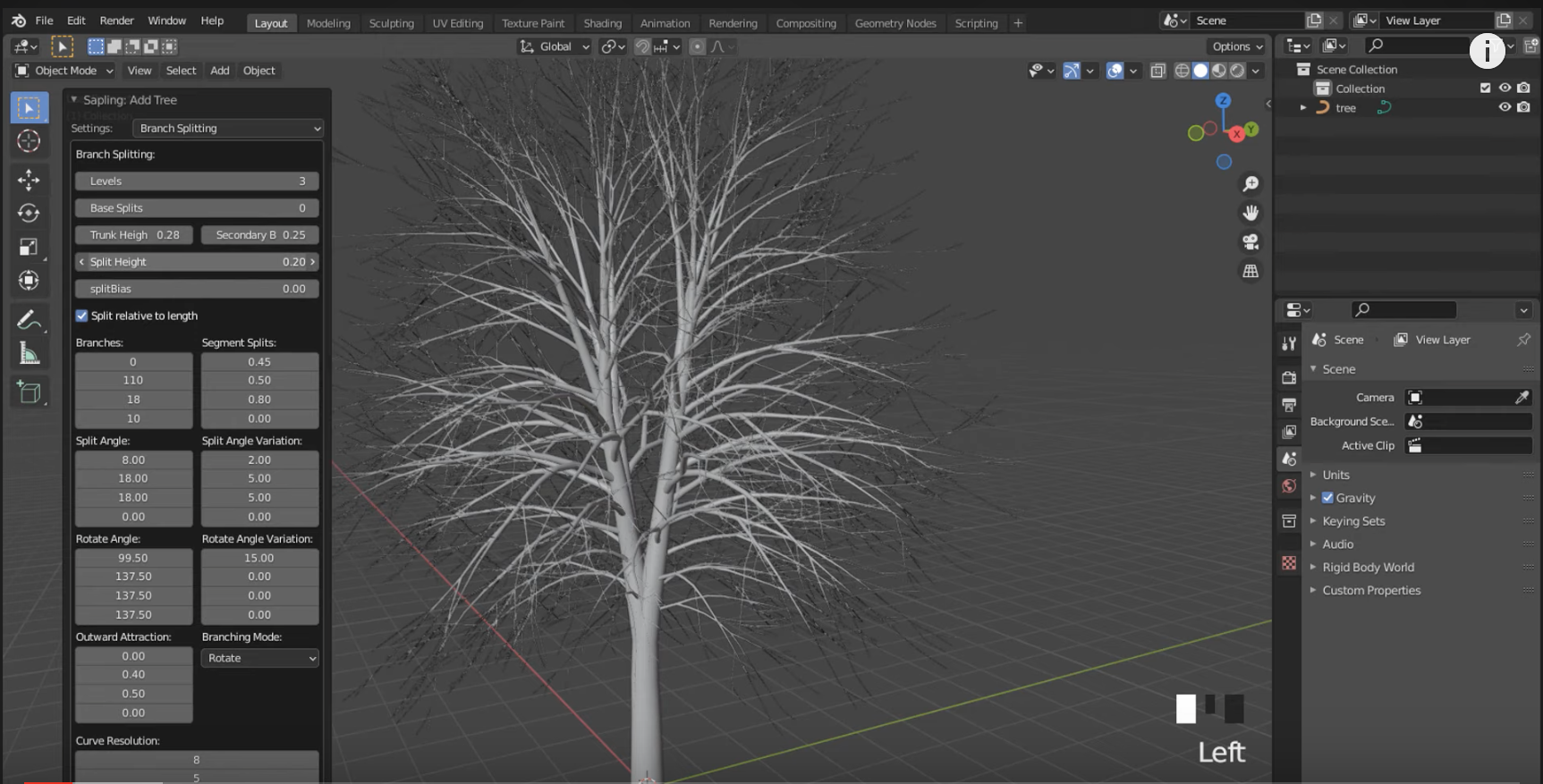

- We constructed multiple scenes and 3D models for the storyline and used online models for more complex objects and textures.

- Using our 3D models from Blender, we created multiple interactive VR scenes in Unity, each with its own narration.

- We added several UI components, such as buttons for replaying the narration and for moving to the next or previous scene. We also enabled continuous movement through the controllers so that the user doesn't have to walk in the real world to explore the virtual scenes.

Method

We started by creating the 3D scenes for the VR headset in Blender and then ported them to Unity. Once the scenes rendered correctly, we worked on switching between scenes as the story progresses.

Our final goal was to narrate the story through the headset speakers, relying on an online text-to-speech product. Overall, we built the project with the aim of immersing children in an enchanting new world of adventure and magic, created with the power of virtual reality.

Implementation Details

We began by using ChatGPT to generate a concise fairy tale. To make it easier to build visual scenes from the story, we gave ChatGPT detailed instructions to break the narrative into manageable parts. We then generated a reference image for each part with Adobe Firefly, a new beta program from Adobe for generating images and designs.

Next, we learned Blender, a professional 3D modeling program, by following online tutorials and YouTube videos. Using the generated images as references, we built most of the 3D models for each scene in Blender. We created initial models with Blender's mesh tools, refined landscapes, characters, and other objects with the sculpting tool, and overlaid each virtual scene onto its background reference image to replicate the depicted scene accurately. For more complex structures, such as the log cabin and intricately detailed characters, we sourced free models from platforms such as Blender Swap and BlenderKit.

Once the scenes were complete in Blender, we imported them into Unity to construct the VR experience. Within Unity, we added user interface (UI) elements, including buttons for navigating between scenes and replaying the narration, and implemented their behavior in C# scripts. Using the Unity XR Plugin and the XR Interaction Toolkit, we enabled continuous movement so that users can explore and interact with the scenes through the controllers while remaining stationary in the real world. We then implemented scene transitions, learning C# scripting in Unity along the way. With transitions working, the navigation buttons let users move between scenes whenever they choose, so they can explore a single scene at their leisure.

Lastly, we added narration. We used an AI text-to-voice converter to turn the written story into audio clips. Following the divisions provided by ChatGPT, we split the narration into parts and added the corresponding clips to our Unity assets. Following online tutorials, we set each clip to play when its scene loads, after a brief delay for a smoother experience. We also added a button that lets users replay the narration at any time. With all of these components integrated, the final product is an immersive and colorful rendition of the Hansel and Gretel story, complete with interactive exploration.
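The navigation and narration behavior described above can be summarized as a small state machine. The following is a minimal, engine-agnostic sketch in Python rather than the project's actual Unity C# scripts. The names `StoryPart` and `StoryNavigator`, the action tuples, and the delay value are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoryPart:
    scene_name: str      # hypothetical scene identifier
    narration_clip: str  # hypothetical audio asset name


class StoryNavigator:
    """Tracks the current story part and reports which actions to perform."""

    def __init__(self, parts, narration_delay_s=1.0):
        if not parts:
            raise ValueError("a story needs at least one part")
        self.parts = list(parts)
        # Brief pause before narration starts; the value is an assumption.
        self.narration_delay_s = narration_delay_s
        self.index = 0

    def _enter(self):
        # Entering a part loads its scene, then plays its clip after the delay.
        part = self.parts[self.index]
        return [
            ("load_scene", part.scene_name),
            ("play_after", self.narration_delay_s, part.narration_clip),
        ]

    def start(self):
        return self._enter()

    def next(self):
        # Stay on the last part instead of wrapping around.
        if self.index < len(self.parts) - 1:
            self.index += 1
            return self._enter()
        return []

    def previous(self):
        # Stay on the first part instead of wrapping around.
        if self.index > 0:
            self.index -= 1
            return self._enter()
        return []

    def replay(self):
        # Replaying restarts the current clip immediately, without reloading.
        return [("play_after", 0.0, self.parts[self.index].narration_clip)]
```

In an engine, each returned action would map to a scene load or an audio playback call. Keeping the index clamped at both ends means the next and previous buttons never leave the story.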

Evaluation of Results and Discussion of Benefits and Limitations

Throughout the course of our project, we encountered numerous valuable learning experiences that we hadn't anticipated at the project's outset. One significant realization was the daunting and time-consuming nature of crafting flawless 3D scenes. Even when utilizing pre-existing models from online sources, we discovered a scarcity of freely available options, with the truly exceptional ones commanding exorbitant prices, often exceeding $100 per asset. Furthermore, we observed that relying solely on physical movement within the real world to explore the virtual scenes proved impractical. Consequently, we introduced controller-based navigation as an alternative. However, we acknowledged that this solution was less than optimal. The artificial-looking red rays utilized in this control mechanism felt unnatural and detracted from the overall experience. In our exploration of other applications on the headset, we encountered alternative approaches that employed hand gestures and triangulation for movement. If we were to enhance this application further, we would consider incorporating such functionality, enabling users to "teleport" to various locations within the scene for a more seamless and immersive experience.
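To make the controller-based navigation discussed above concrete, here is a rough Python sketch of how continuous movement is commonly computed each frame: the thumbstick input is rotated by the direction the user is facing and scaled by speed and frame time. This is not the XR Interaction Toolkit's implementation. The function name, the head-relative convention (yaw 0 faces +z, positive yaw turns toward +x), and all parameters are assumptions made for illustration.

```python
import math


def continuous_move(position, head_yaw_rad, stick, speed_m_s, dt_s):
    """Return the new (x, z) ground position after one frame of movement.

    position:     current (x, z) position in meters
    head_yaw_rad: facing direction; 0 faces +z, positive turns toward +x
    stick:        thumbstick (x, y), each in [-1, 1]; y is forward
    """
    sx, sy = stick

    # Clamp the input magnitude to 1 so diagonal input is not faster.
    magnitude = math.hypot(sx, sy)
    if magnitude > 1.0:
        sx, sy = sx / magnitude, sy / magnitude

    # Rotate the stick vector by the head yaw so "forward" follows the gaze.
    sin_y, cos_y = math.sin(head_yaw_rad), math.cos(head_yaw_rad)
    dx = sx * cos_y + sy * sin_y
    dz = -sx * sin_y + sy * cos_y

    # Scale by speed and frame time to get this frame's displacement.
    x, z = position
    return (x + dx * speed_m_s * dt_s, z + dz * speed_m_s * dt_s)
```

A teleport-style alternative would skip the per-frame integration and set the position directly to a chosen target point.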

Future Work

For future work, we would aim to create an unlimited number of AI-generated stories and 3D scenes from a user's spoken query, using the ChatGPT API and an AI image generation API such as DALL-E 2. We would build an end-to-end pipeline that starts from the user's spoken query and ends with the following outputs: (1) the written story, and (2) generated 3D scenes with timestamps for scene changes. An example excerpt from a generated story and an AI-generated scene for the story is given below:

Once upon a time, in a faraway land, there lived a young and brave adventurer named Ethan. Ethan had a heart filled with wanderlust and a thirst for extraordinary experiences. One fine evening, as the sun began to set, he set out on a journey to explore the enchanted forests that lay beyond his village. As Ethan ventured deeper into the forest, the trees seemed to come alive, their branches forming intricate patterns that reached out to him, beckoning him to follow. A soft, shimmering mist surrounded him, casting an otherworldly glow upon his face. It was as if the forest itself was guiding him, whispering secrets of hidden wonders yet to be discovered. ...
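One piece of the proposed pipeline, deriving scene-change timestamps from a written story, can be sketched without any external service. The Python sketch below is only an illustration under stated assumptions. The `generate_story` callable is a hypothetical stand-in for a language-model call. Grouping by a fixed number of sentences, reusing each part's text as its image prompt, and estimating narration time from a fixed speaking rate are all simplifications, not the method used in this project.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class ScenePlan:
    text: str          # narration text for this scene
    image_prompt: str  # prompt that an image or scene generator could use
    start_s: float     # estimated time at which this scene begins


def plan_scenes(story: str, sentences_per_scene: int = 2,
                words_per_minute: float = 150.0) -> List[ScenePlan]:
    """Split a story into scenes and estimate when each scene starts."""
    # Naive sentence split on ., !, or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", story.strip()) if s]
    plans: List[ScenePlan] = []
    elapsed_s = 0.0
    for i in range(0, len(sentences), sentences_per_scene):
        text = " ".join(sentences[i:i + sentences_per_scene])
        plans.append(ScenePlan(text=text, image_prompt=text, start_s=elapsed_s))
        # Assumed constant speaking rate; real audio durations would replace this.
        elapsed_s += len(text.split()) * 60.0 / words_per_minute
    return plans


def build_experience(query: str,
                     generate_story: Callable[[str], str]
                     ) -> Tuple[str, List[ScenePlan]]:
    """Run the sketch end to end: text query -> story -> timed scene plans."""
    story = generate_story(query)  # hypothetical language-model call
    return story, plan_scenes(story)
```

In a full pipeline, speech recognition would produce `query`, and the measured length of each generated audio clip would replace the word-count estimate.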

Conclusion

Overall, we strongly believe that this project holds immense potential for further development and eventual launch as a full-fledged product. Its ultimate aim would be to offer children the opportunity to immerse themselves in a vast collection of boundless stories accompanied by vibrant scenes. Although creating, transitioning, and seamlessly integrating these scenes with narration presented formidable challenges, we remain optimistic that with continued research in this field, it could evolve into a unified and sophisticated pipeline. Such a pipeline would leverage the power of existing state-of-the-art AI models to generate captivating and imaginative stories. It could even allow users to inject their own unique elements, such as a child requesting a story featuring a unicorn mermaid. With this application, the possibilities for limitless and personalized storytelling experiences would abound, effectively transforming it into the "Cornucopia of Stories" we envisioned.

Acknowledgments

We would like to thank Douglas Lanman for providing us with resources to explore for our project. We would also like to thank John Akers and the UW Reality Lab for providing us with the Oculus Quest 2 headset.

References

[1] Blender Guru. 2021. Blender Beginner Tutorial. Blender Guru. Retrieved from https://www.youtube.com/watch?v=nIoXOplUvAw&ab_channel=BlenderGuru

[2] Valem. 2020. Introduction to VR in Unity - UNITY XR TOOLKIT. Valem. Retrieved from https://www.youtube.com/watch?v=gGYtahQjmWQ&list=PLrk7hDwk64-a_gf7mBBduQb3PEBYnG4fU&ab_channel=Valem