Affordance Analysis

Table of contents

- Do abstractions have politics?

- Speculative design

- Affordance analysis

- Infrastructural speculation

- Priority queues

- Binary trees

- Autocomplete

- Content moderation

Do abstractions have politics?

Asymptotic analysis examines the efficiency of an algorithm in terms of resources such as running time. As an approach to algorithm analysis, it was popularized in the 1960s by academic computer scientists at Stanford University who began their careers as mathematicians, and it quickly became the primary focus of the field. The connection is so strong that the term “analysis of algorithms” is often assumed to be synonymous with asymptotic analysis. Entire courses (including this one) are the result of this assumption of the centrality of asymptotic analysis. Historically, computer scientists have focused on the question, “How efficient is this algorithm?” and justified their answers using mathematics.

Questions about the runtime efficiency of an algorithm are not limited to mathematical answers. They can also have critical answers: answers that question underlying assumptions that are often taken for granted, particularly around issues relating to social relations. Recently, OpenAI published DALL·E, a neural network that creates images from English language text captions. The results are impressive. But training these deep neural networks on billions of images takes on the order of millions of dollars of computational power, not to mention a team of machine learning scientists building on decades of US military funding for artificial intelligence projects since World War II. The development of machine learning technologies (and computer science more broadly) exists in this political context. Not everyone has equal access to computation because it is a scarce resource, and computation is developed for the benefit of certain people over others.

In 1980, the science and technology studies scholar Langdon Winner offered a critique of the social determination of technology in his article, “Do artifacts have politics?” Winner argued that technology is more than a byproduct of its political context: “some kinds of technology require their social environments to be structured in a particular way,” and some technology could not exist “unless certain social as well as material conditions were met.” The design of a deep neural network necessitates a multi-million dollar computational budget, which requires centralized institutions that can coordinate the intellectual and financial resources needed to develop, operate, and manage this technology. Deep neural networks are not only a byproduct of this political context, but also reinforce and uphold that context by centralizing power and decision-making authority within the techno-social elites who fund, design, and maintain these systems.

- Social determination of technology

- Our accustomed way of thinking that, “What matters is not technology itself, but the social or economic system in which it is embedded.”

It is obvious that technologies can be used in ways that enhance the power, authority, and privilege of some over others, for example, the use of television to sell a candidate. To our accustomed way of thinking, technologies are seen as neutral tools that can be used well or poorly, for good, evil, or something in between. But we usually do not stop to inquire whether a given device might have been designed and built in such a way that it produces a set of consequences logically and temporally prior to any of its professed uses.

Winner argues that technologies are not just neutral tools that are wielded by “good” or “bad” actors. Consider the following example drawn from the real-life design of vehicle overpasses in New York.

… it turns out … that some two hundred or so low-hanging overpasses on Long Island are there for a reason. They were deliberately designed and built that way by someone who wanted to achieve a particular social effect. Robert Moses, the master builder of roads, parks, bridges, and other public works of the 1920s to the 1970s in New York, built his overpasses according to specifications that would discourage the presence of buses on his parkways.

The design of this built environment structured racism by encoding a greater value for personal vehicles over public transportation, marginalizing the poor and the Black population of New York. Now that computation plays such a large role in the design of our personal environments, how does the design of programming abstractions produce a set of consequences even before an abstraction is used by anyone?

Speculative design

Affordance analysis

Affordance analysis identifies which actions and outcomes an abstraction makes more likely and then evaluates the effects of these affordances on society. Affordances are relational properties of objects that make specific outcomes more likely through the possible actions that they present to users. In the context of designed physical objects, for example, a chair affords sitting because of its shape and a gun affords killing. “Enabling killing, however, is a negative final value and we have moral reasons to oppose it.” [1] (Certainly, someone could use a chair for killing, but the design of a chair does not afford killing.)

- Affordance analysis

- Analyze an API to identify the affordances of a programming abstraction.

- Evaluate the affordances through a critical examination of social relations.

Affordance analysis is a two-step process. For step 1, the affordances of a programming abstraction are defined by its application programming interface.

- Application programming interface (API)

- In Java, a class or interface defines public methods that afford certain ways of using an object.

For example, the set and map abstract data types afford access to the elements corresponding to a particular key such as with the contains or containsKey methods. In Java, lists share the same affordances as sets but also provide additional affordances for access to elements corresponding to a particular index with the get method. On their own, these affordances might not seem to have any associated negative or positive final value. But when programmers apply them toward solving problems, they encode assumptions about the nature of the problem and the form of its solution.
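As a minimal sketch of these affordances, the following Java example calls contains, containsKey, and get on the standard java.util collections. The class name, helper method names, and sample data are hypothetical and chosen only for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;

class CollectionAffordances {
    // Sets afford asking whether an element is present
    static boolean isMember(Set<String> set, String element) {
        return set.contains(element);
    }

    // Maps afford asking whether a key has an associated value
    static boolean hasEntry(Map<String, Integer> map, String key) {
        return map.containsKey(key);
    }

    // Lists additionally afford access by position, which assumes the order is meaningful
    static String atIndex(List<String> list, int index) {
        return list.get(index);
    }

    public static void main(String[] args) {
        // Hypothetical sample data
        Set<String> tags = Set.of("news", "sports");
        Map<String, Integer> views = Map.of("news", 3, "sports", 5);
        List<String> ranked = List.of("sports", "news");

        System.out.println(isMember(tags, "news"));      // true
        System.out.println(hasEntry(views, "weather"));  // false
        System.out.println(ranked.contains("news"));     // true: lists share the set affordance
        System.out.println(atIndex(ranked, 0));          // sports
    }
}
```

Choosing a list over a set here already encodes an assumption: that there is a meaningful "first" element to retrieve.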

For step 2, we need to consider how these affordances affect social relations. There are many methods in the social sciences that could work. Some methods, such as algorithmic ethnography, involve the researcher in fieldwork to learn how people use algorithms in practice. For simplicity, we will focus on the framework of infrastructural speculation: rather than consider only the design of the program in isolation, infrastructural speculation examines how the program interacts with the rest of the world by imagining a projected lifeworld, or “the things that must be true, common-sense and taken-for-granted in order for the design to work.” How might we fill in the projected lifeworld surrounding the program?

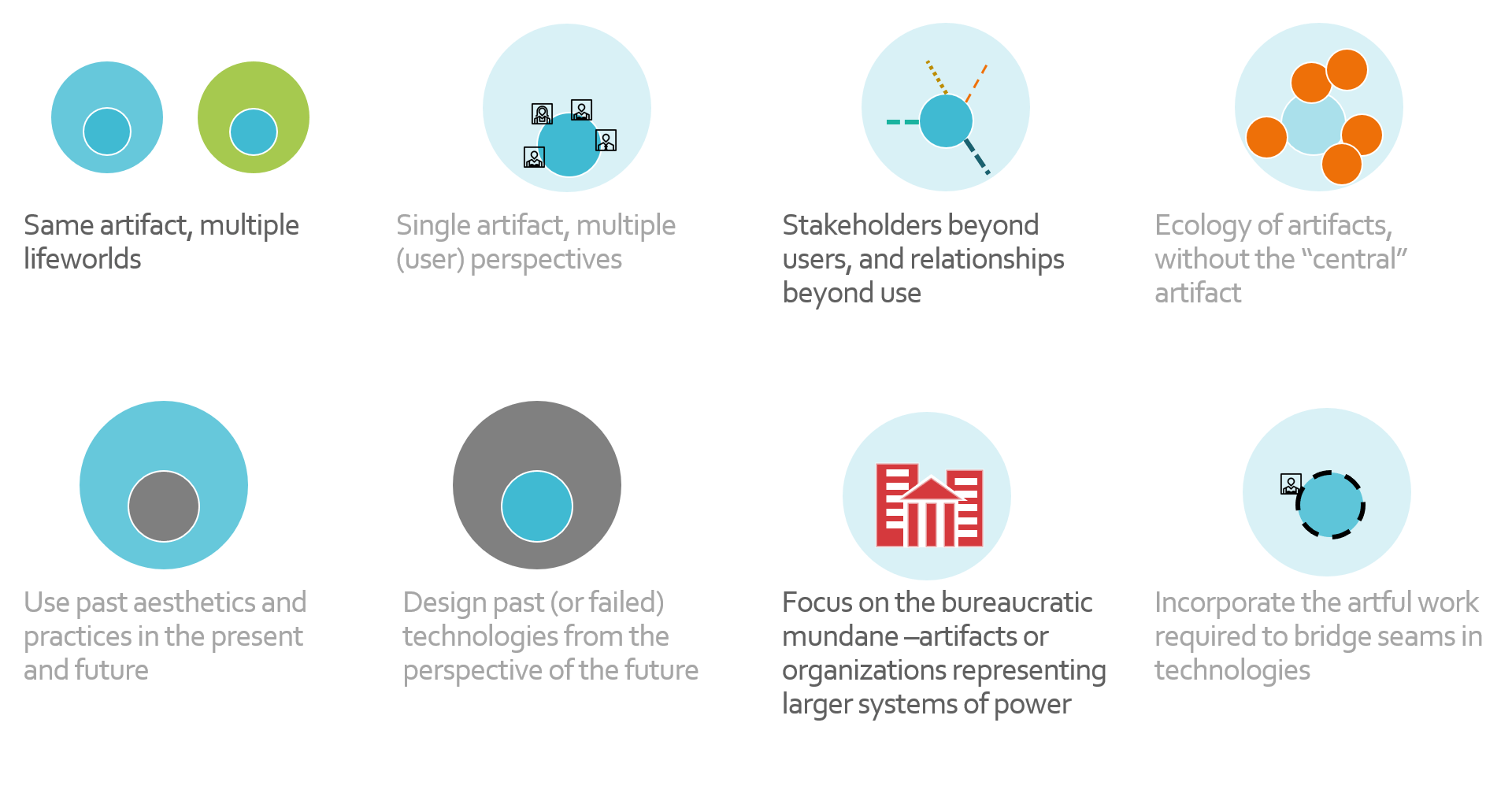

The full paper [2] presents eight design tactics for creating infrastructural speculations, but we’ll focus on the three that Richmond makes most accessible.

- Place the same speculative artifact in multiple lifeworlds.

- Examine stakeholders beyond users and relationships beyond immediate use.

- Focus on institutions, organizations, and larger systems of power.

[1] Klenk, M. 2020. How Do Technological Artefacts Embody Moral Values? Philosophy & Technology. https://doi.org/10.1007/s13347-020-00401-y

[2] Richmond Y. Wong, Vera Khovanskaya, Sarah E. Fox, Nick Merrill, and Phoebe Sengers. 2020. Infrastructural Speculations: Tactics for Designing and Interrogating Lifeworlds. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–15. https://doi.org/10.1145/3313831.3376515

Infrastructural speculation

Priority queues

A priority queue is an abstract data type where elements are organized according to their associated priority value. A max-oriented priority queue serves highest-priority elements first while a min-oriented priority queue serves lowest-priority elements first. As an example, consider social media platforms, which rely on algorithms to draw attention to the most personally engaging user-generated content. Content moderation plays an understated but integral role in determining which pieces of content will be shown to the platform’s users. A content moderation system might use a max-oriented priority queue to order flagged content for review by human moderators, prioritizing the most severe, obscene, or otherwise toxic content.
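One way such a moderation queue might look is sketched below using java.util.PriorityQueue. The ModerationQueue and FlaggedPost classes, the method names, and the numeric severity score are all assumptions made for illustration; a real platform would determine severity by some process not described here.

```java
import java.util.PriorityQueue;

class ModerationQueue {
    // Hypothetical flagged item; the severity score is an assumed input to this sketch
    static class FlaggedPost {
        final String id;
        final double severity;

        FlaggedPost(String id, double severity) {
            this.id = id;
            this.severity = severity;
        }
    }

    // Max-oriented: the comparator orders higher severity first
    private final PriorityQueue<FlaggedPost> queue =
            new PriorityQueue<>((a, b) -> Double.compare(b.severity, a.severity));

    void flag(String id, double severity) {
        queue.add(new FlaggedPost(id, severity));
    }

    // Removes and returns the id of the most severe post awaiting review, or null if none remain
    String nextForReview() {
        FlaggedPost next = queue.poll();
        return next == null ? null : next.id;
    }

    public static void main(String[] args) {
        ModerationQueue moderation = new ModerationQueue();
        moderation.flag("post-a", 0.2);
        moderation.flag("post-b", 0.9);
        moderation.flag("post-c", 0.5);
        System.out.println(moderation.nextForReview()); // post-b
        System.out.println(moderation.nextForReview()); // post-c
    }
}
```

The interface affords only "what is the most severe item right now?" As long as more severe items keep arriving, a low-severity item can wait indefinitely, and the moderator always sees the worst content first.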

What are the consequences of this affordance? We might ask questions that help us dig deeper into the sociopolitical context. Consider how the affordance connects to broader questions such as:

- History and Context

- What are the historical and cultural circumstances in which the technology emerged? When was it developed? For what purpose? How has its usage and function changed from then to today?

- Power Dynamics and Hegemony

- Who benefits from this technology? At the expense of whose labor? How is this technology sold and marketed? What are the economic and political interests for the proliferation of this technology?

- Developing Effective Long-Term Solutions

- What solutions are currently being implemented to address this labor/benefit asymmetry? In what ways do they reinforce or challenge the status quo? Who receives benefits/harms?

Binary trees

Binary trees are the foundation for data structures such as binary search trees that implement sets and maps and binary heaps that implement priority queues. Beyond implementing abstract data types, binary trees can also be used on their own to represent hierarchical relationships between elements based on their position in the recursive tree structure. For example, a hiring algorithm could represent its decision-making process as a binary tree. Each internal node in the binary tree represents a hiring question with a yes/no answer that corresponds to proceeding to the left/right child. Each leaf node represents a final yes/no hiring decision.
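The hiring tree described above can be sketched as follows. This is only an illustration: the HiringTree class, the two questions, and the representation of a candidate as a map from question to yes/no answer are all hypothetical.

```java
import java.util.Map;

class HiringTree {
    static class Node {
        final String question;  // non-null for internal nodes, null for leaves
        final boolean hire;     // meaningful only for leaves
        final Node left;        // followed on a "yes" answer
        final Node right;       // followed on a "no" answer

        // Internal node: a yes/no question
        Node(String question, Node left, Node right) {
            this.question = question;
            this.hire = false;
            this.left = left;
            this.right = right;
        }

        // Leaf node: a final yes/no hiring decision
        Node(boolean hire) {
            this.question = null;
            this.hire = hire;
            this.left = null;
            this.right = null;
        }

        boolean isLeaf() {
            return question == null;
        }
    }

    // Walks from the root to a leaf, going left on "yes" and right on "no".
    // A question the candidate did not answer is treated as "no" (a choice of this sketch).
    static boolean decide(Node root, Map<String, Boolean> answers) {
        Node node = root;
        while (!node.isLeaf()) {
            boolean yes = answers.getOrDefault(node.question, false);
            node = yes ? node.left : node.right;
        }
        return node.hire;
    }

    // Hypothetical two-question tree
    static Node exampleTree() {
        return new Node("Has the required certification?",
                new Node("Has prior experience in the role?", new Node(true), new Node(false)),
                new Node(false));
    }

    public static void main(String[] args) {
        Map<String, Boolean> candidate = Map.of(
                "Has the required certification?", true,
                "Has prior experience in the role?", true);
        System.out.println(decide(exampleTree(), candidate)); // true
    }
}
```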

On one hand, designing a hiring algorithm could be a step towards de-biasing a hiring process through formalizing requirements for the position. But it also risks dehumanizing the process, which is particularly problematic given that algorithms can make decisions that reproduce social injustice. How do binary tree affordances affect this balance of values, and what are the risks of designing an algorithm modeling data as a binary tree?

A binary tree affords questions that encode hard requirements for the position because those questions can be answered yes or no. But other characteristics might be harder to represent. It might be hard to say exactly how much prior experience (and what kind of prior experience) is needed for the job beyond the core requirements, especially when there are many candidates with diverse backgrounds to consider. Creating a more nuanced binary tree decision-making system requires acknowledging this design constraint when planning which questions to ask. A design that affords only questions that can be answered yes/no precludes open-ended questions that allow a broader diversity of ways for a candidate to demonstrate suitability, rather than just the most prevalent candidate experiences. By narrowly prioritizing design for dominant groups while marginalizing others as edge cases, sociotechnical systems risk reinforcing social injustice.

It’s possible to design a binary tree that is not limited to questions that have strictly yes/no answers. In the same way that we can rewrite an if-else-if-else conditional into several nested if-else structures, we can represent any multiway tree as a binary tree. But because binary trees afford binary questions, algorithms that rely on binary trees may tend towards solutions modeled with purely binary questions. Affordance analysis suggests that the decision to represent an algorithm as a binary tree may preclude questions incompatible with binary conditional logic.

```java
if (a)
    ...
else if (b)
    ...
else if (c)
    ...
else
    ...
```

```java
if (a)
    ...
else
    if (b)
        ...
    else
        if (c)
            ...
        else
            ...
```
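The same rewrite can be applied to a decision tree. The sketch below, under assumed names, folds a hypothetical three-way question (how many years of experience?) into two nested binary nodes, mirroring the conditional rewrite above. The MultiwayAsBinary class, the chain helper, and the experience bands are illustrative assumptions.

```java
import java.util.List;
import java.util.function.IntPredicate;

class MultiwayAsBinary {
    static class Node {
        final IntPredicate test;  // null for leaves
        final String label;       // null for internal nodes
        final Node ifTrue;
        final Node ifFalse;

        Node(IntPredicate test, Node ifTrue, Node ifFalse) {
            this.test = test;
            this.label = null;
            this.ifTrue = ifTrue;
            this.ifFalse = ifFalse;
        }

        Node(String label) {
            this.test = null;
            this.label = label;
            this.ifTrue = null;
            this.ifFalse = null;
        }
    }

    // Builds nested binary nodes from ordered branches plus a default outcome,
    // the tree equivalent of rewriting if / else-if / else as nested if / else
    static Node chain(List<IntPredicate> tests, List<String> labels, String defaultLabel) {
        Node node = new Node(defaultLabel);
        for (int i = tests.size() - 1; i >= 0; i--) {
            node = new Node(tests.get(i), new Node(labels.get(i)), node);
        }
        return node;
    }

    static String classify(Node root, int value) {
        Node node = root;
        while (node.test != null) {
            node = node.test.test(value) ? node.ifTrue : node.ifFalse;
        }
        return node.label;
    }

    // Hypothetical three-way question encoded as two binary questions
    static Node experienceBands() {
        List<IntPredicate> tests = List.of(years -> years < 2, years -> years < 5);
        return chain(tests, List.of("under 2 years", "2 to 4 years"), "5 or more years");
    }

    public static void main(String[] args) {
        System.out.println(classify(experienceBands(), 3)); // 2 to 4 years
    }
}
```

Even here, the designer still has to pick cutoffs that turn a continuous quantity into yes/no tests, which is the pull toward binary questions that the affordance analysis points to.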

Autocomplete

The Autocomplete interface defines two methods.

- addAll: Adds all of the given terms to the autocompletion dataset.

- allMatches: Returns a list of all terms that begin with the same characters as the given prefix.

Terms are represented with the CharSequence interface, which is an ordered sequence of characters.
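A minimal sketch of what this interface could look like in Java follows. The exact parameter and return types are assumptions, since only the method names and their descriptions are given above, and ListAutocomplete is a hypothetical, deliberately naive implementation.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;

// Assumed signatures; only the method names and descriptions come from the text
interface Autocomplete {
    void addAll(Collection<? extends CharSequence> terms);

    List<CharSequence> allMatches(CharSequence prefix);
}

// Hypothetical implementation that scans every stored term
class ListAutocomplete implements Autocomplete {
    private final List<CharSequence> terms = new ArrayList<>();

    @Override
    public void addAll(Collection<? extends CharSequence> terms) {
        this.terms.addAll(terms);
    }

    // Returns matches in the order the terms were added
    @Override
    public List<CharSequence> allMatches(CharSequence prefix) {
        List<CharSequence> result = new ArrayList<>();
        for (CharSequence term : terms) {
            if (startsWith(term, prefix)) {
                result.add(term);
            }
        }
        return result;
    }

    // Exact character-by-character comparison: "george" does not match "George"
    private static boolean startsWith(CharSequence term, CharSequence prefix) {
        if (prefix.length() > term.length()) {
            return false;
        }
        for (int i = 0; i < prefix.length(); i++) {
            if (term.charAt(i) != prefix.charAt(i)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        Autocomplete autocomplete = new ListAutocomplete();
        autocomplete.addAll(List.of("George III", "George the 3rd", "Malcolm X"));
        System.out.println(autocomplete.allMatches("George")); // [George III, George the 3rd]
    }
}
```

Even this small sketch makes choices the interface leaves open: matching is case-sensitive, and results come back in insertion order.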

Question 1

Suppose we’re using Autocomplete to improve the user experience for a search engine. How do the affordances shape meaning and value in society with respect to different cultures?

- What is the order of the allMatches results? How does that prioritize certain values? If no order is explicitly specified, what might an implementation implicitly prioritize?

- How does it deal with different meanings for a term based on culturally-specific context? For example, a search for “George the 3rd” would probably be similar to “George III” but the same analogy doesn’t work for “Malcolm the 10th” and “Malcolm X”. Come up with other examples of complexity in language, and describe how the Autocomplete affordances shape meaning.

- How do these affordances translate to other languages (non-English) or cultures (non-US)?
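To make the ordering question concrete, suppose (as an assumption) that an implementation keeps its terms sorted using Java's default String ordering. String.compareTo compares UTF-16 code units, so the resulting order is not the alphabetical order a reader might expect. The class name and sample terms below are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class ImplicitOrder {
    // Sorts a copy of the terms using String's natural ordering (String.compareTo)
    static List<String> sortedNaturally(List<String> terms) {
        List<String> copy = new ArrayList<>(terms);
        Collections.sort(copy);
        return copy;
    }

    public static void main(String[] args) {
        // "\u00e9lan" is "élan", written with an escape so the source file stays ASCII
        List<String> terms = List.of("zoo", "Zebra", "apple", "\u00e9lan");

        // Uppercase letters sort before lowercase, and the accented term sorts last
        System.out.println(sortedNaturally(terms)); // [Zebra, apple, zoo, élan]
    }
}
```

Under this default, terms that begin with accented or non-Latin characters are pushed to the end of the results, even though nobody explicitly decided that they should be.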

Explanation

See Safiya Noble’s Algorithms of Oppression: How Search Engines Reinforce Racism, especially chapter 1. Amy Ko offers a book review.

Content moderation

Social media platforms rely on algorithms to draw attention to the user-generated content that is most likely to engage. Content moderation is about determining whether or not a piece of user-generated content should be published at all. In designing a content moderation system, social media platforms make editorial decisions that impact public opinion.

Question 1

Suppose a social media platform doesn’t want to publish “toxic” content: user-generated content that is rude, disrespectful or otherwise likely to make someone leave a discussion. How does a priority queue approach to moderating toxic content benefit or harm different people around the platform?

- Users of the platform, particularly the ways in which certain identities may be further marginalized.

- Content moderators who are each reviewing several hundred pieces of toxic content every day.

- Legal teams who want to mitigate government regulation and legislation that are not aligned with corporate interests.

- Users who want to leverage the way certain content is prioritized in order to shape public opinion.