Ethics in Artificial Intelligence

UW CSE 582, Spring 2024

M/W 3:00-4:20pm, CSE2 G04

Instructor: Yulia Tsvetkov

Teaching Assistant: Orevaoghene Ahia

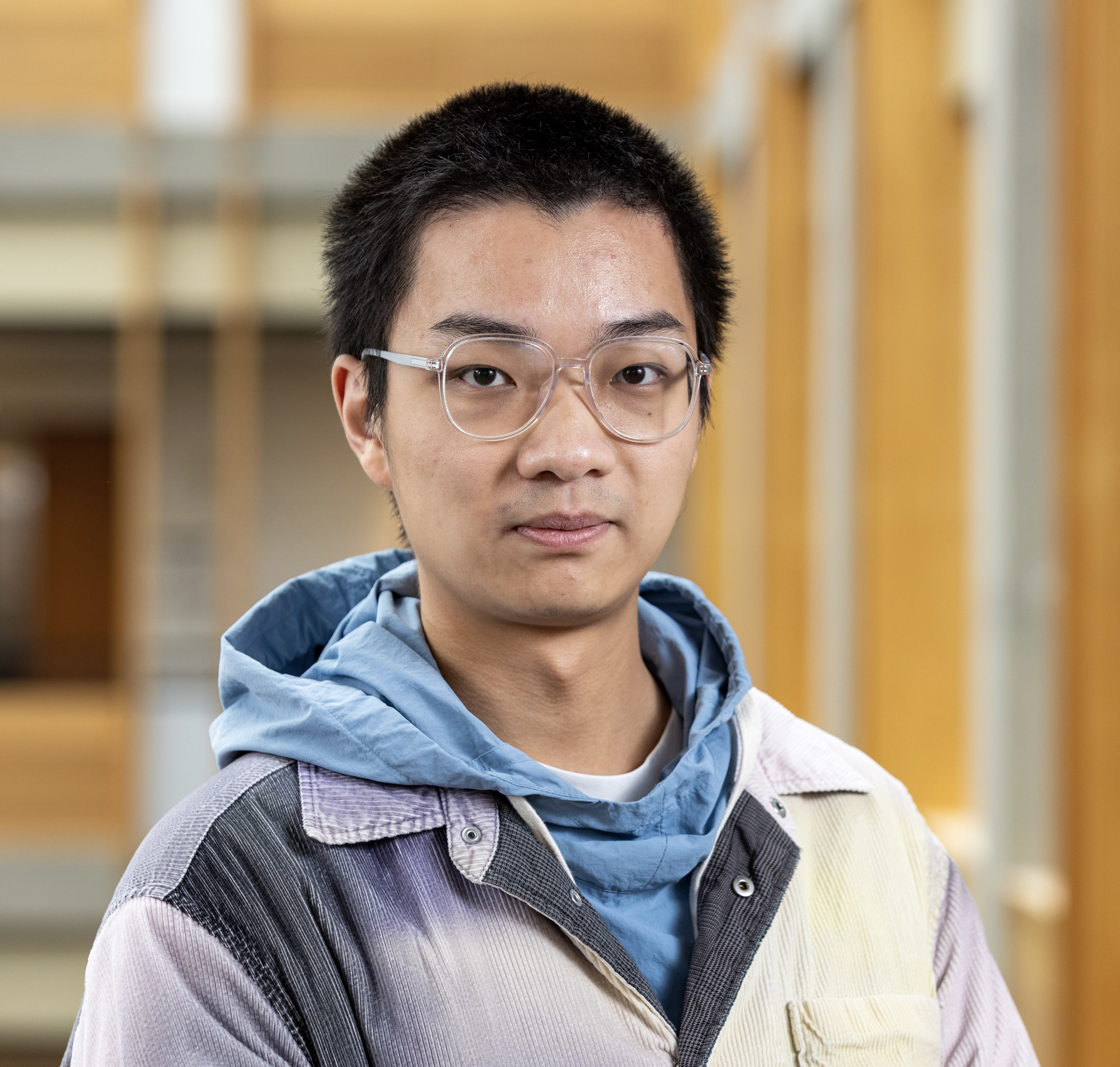

Teaching Assistant: Shangbin Feng

Summary

As AI technologies have become increasingly prevalent, there is a growing awareness that decisions we make about our data, methods, and tools are often tied up with their impact on people and societies. This course introduces students to real-world applications of AI, potential ethical implications associated with their design, and technical solutions to mitigate associated risks.

The class will study topics at the intersection of AI, ethics, and computer science for social good, with applications to Natural Language Processing, Speech, Vision, and Robotics. Centered on classical and state-of-the-art research, lectures will cover the philosophical foundations of ethical research along with concrete case studies, ethical challenges in the development of intelligent systems, and machine learning approaches to address critical issues.

Methodologically, we will discuss how to analyze large-scale datasets generated by people or aggregating information about people, and how to reason about them ethically and technically through data-, network-, and people-centered models. From an engineering perspective, students will apply and extend existing machine learning libraries (e.g., scikit-learn, PyTorch) to text and vision problems. There will be an emphasis on the practical design and implementation of useful and ethical AI systems, with annotation and coding assignments, a course project, and an ethics paper-reviewing assignment. Discussion topics include:

- Philosophical foundations: what ethics is, its history, medical and psychological experiments, IRB and human subjects, ethical decision making, and moral dilemmas and ethical frameworks to reason about them.

- Fairness and bias: fairness in machine learning, algorithms to identify social biases in data, models, and learned representations, and approaches to debiasing AI systems.

- Civility in communication: techniques to monitor trolling, hate speech, abusive language, cyberbullying, and toxicity.

- Misinformation and propaganda: approaches to identifying propaganda and manipulation in news, detecting fake news, and analyzing political framing.

- Privacy: privacy protection algorithms against demographic inference and personality profiling.

- Green AI: energy and climate considerations in building large-scale AI models.

- Optional additional topics: AI for social good (low-resource AI and its applications to disaster response and epidemic monitoring); fairness in decision support systems; ethical design and more careful experimental methods in AI; intellectual property; digital preservation.

Calendar

Calendar is tentative and subject to change. More details will be added as the quarter continues.

| Week | Date | Theme | Contents | Work due |
|---|---|---|---|---|
| 1 | 03/25 | Introduction | Motivation, course overview and requirements. Examples of projects in computational ethics [slides] [readings] | |
| | 03/27 | Human Subjects Research | History: medical, psychological experiments, IRB and human subjects. Participants, labelers, and data. [slides] [readings] | |
| 2 | 04/01 | Human Subjects Research | Paper discussion [readings] | |
| | 04/03 | Philosophical Foundations | Ethical frameworks, benefit and harm, power, automation [slides] [readings] | Project teams due Friday (04/05) |
| 3 | 04/08 | Social Bias in AI Models | Psychological foundations of bias; social bias and disparities in NLP models [slides] [readings] | |
| | 04/10 | Project Workshopping | | |
| 4 | 04/15 | Mitigating Harms from LLMs | Practical/technical approaches to mitigate harms from large language models, including bias detection and removal, factuality, etc. [slides] [readings] | |
| | 04/17 | Social Bias & Fairness in AI Models | Paper discussion [readings] | Project proposal due Friday (04/19) |
| 5 | 04/22 | Privacy Auditing and Protection in LLMs | Privacy auditing and protection in large language models [slides] [readings] | |
| | 04/24 | Privacy Auditing and Protection in LLMs | Paper discussion [readings] | |
| 6 | 04/29 | AI for Content Moderation | Identifying and countering hate speech/toxicity/abuse [slides] [readings] | |
| | 05/01 | AI for Content Moderation | Paper discussion [readings] | Ethics review due Friday (05/03) |
| 7 | 05/06 | Hallucinations & Information Reliability in LLMs | Hallucinations & information reliability in LLMs [slides] [readings] | |
| | 05/08 | AI Ethics in Industry | Self-driving cars and recommender systems: AI ethics in industry | |
| 8 | 05/13 | Misinformation & Bots | Fact-checking and fake news detection. Computational propaganda and political misinformation. Detection of generated text. | |
| | 05/15 | Safety of LLMs | Paper discussion [readings] | |
| 9 | 05/20 | Individual Meetings with Instructors on Projects | | |
| | 05/23 | Poster Presentations | | Final project report due 05/29, 11:59pm |

Resources

- EdStem. Course communication will be via EdStem.

- Google Drive. Course materials, including lectures, reading lists, etc., are in a Google Drive folder that has been shared with all students. You will also use Google Drive to submit your ethics reviews and project proposals.

Assignments/Grading

Course grades have three components.

- Paper reading, discussion, and written artifacts (20%).

    - 10%: 1 regular role-play

    - 10%: 2 written artifacts

- Ethics review (30%). Read the ethics reviewing guidelines and write an ethics review of a research paper.

- Project (50%). Work on a research project in ethical AI.

    - 10%: project proposal

    - 30%: final project report

    - 10%: final project presentation

Course Administration and Policies

- Late policy. Students have 4 late days that may be used for the final project and ethics review deliverables. You can use at most 3 late days per deadline.

- Academic honesty. Homework assignments are to be completed individually. Verbal collaboration on homework assignments is acceptable, as is re-implementation of relevant algorithms from research papers, but everything you turn in must be your own work. You must note the names of anyone you collaborated with on each problem and cite the resources you used to learn about the problem. The project proposal is to be completed by a team. Suspected violations of academic integrity rules will be handled in accordance with UW guidelines on academic misconduct.

- Accommodations. If you have a disability and have an accommodations letter from the Disability Resources office, I encourage you to discuss your accommodations and needs with me as early in the quarter as possible. I will work with you to ensure that accommodations are provided as appropriate. If you suspect that you may have a disability and would benefit from accommodations but are not yet registered with the office of Disability Resources for Students, I encourage you to apply here.

Note to Students

Take care of yourself! As a student, you may experience a range of challenges that can interfere with learning, such as strained relationships, increased anxiety, substance use, feeling down, difficulty concentrating, and/or lack of motivation. All of us benefit from support during times of struggle. There are many helpful resources available on campus, and an important part of having a healthy life is learning how to ask for help. Asking for support sooner rather than later is almost always helpful. UW services are available, and treatment does work. You can learn more about confidential mental health services available on campus here. Crisis services are available from the counseling center 24/7 by phone at 1 (866) 743-7732 (more details here).