Description of Feature Descriptor:

My feature descriptor takes in a feature at pixel (x,y). It determines an angle of rotation by looking at the eight pixels surrounding the feature (in the gray version of the image) and finding the one with the greatest difference in intensity (a hacky stand-in for finding the dominant orientation of the image window). It then rotates a 5x5 color window centered on the feature by that angle, using the WarpGlobal method with nearest-neighbor sampling. To rotate the window about its own center instead of the origin, I used a matrix that translates the center to the origin, rotates, and then translates back (thanks Ankit). The feature descriptor is then just the color values of the rotated 5x5 window.
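
The orientation and rotation steps above can be sketched roughly as follows. This is a minimal pure-Python illustration, not the project code: the names `dominant_angle`, `rotation_about`, `rotated_window`, and `describe` are hypothetical, the sketch assumes the "greatest difference" is measured against the feature pixel itself, it clamps samples that fall outside the image, and it does its own nearest-neighbor sampling rather than calling WarpGlobal.

```python
import math

def dominant_angle(gray, x, y):
    """Angle (radians) toward the 8-neighbor whose intensity differs most
    from the pixel at (x, y). Assumes (x, y) is not on the image border."""
    best, best_diff = (1, 0), -1.0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dx == 0 and dy == 0:
                continue
            diff = abs(gray[y + dy][x + dx] - gray[y][x])
            if diff > best_diff:
                best, best_diff = (dx, dy), diff
    return math.atan2(best[1], best[0])

def rotation_about(cx, cy, theta):
    """3x3 homogeneous matrix T(c) * R(theta) * T(-c): translate the center
    to the origin, rotate, then translate back."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, cx - c * cx + s * cy],
            [s, c, cy - s * cx - c * cy],
            [0.0, 0.0, 1.0]]

def rotated_window(image, x, y, theta, size=5):
    """Sample a size x size window around (x, y) through the rotation matrix,
    using nearest-neighbor sampling and clamping at the image border."""
    m = rotation_about(x, y, theta)
    half = size // 2
    h, w = len(image), len(image[0])
    out = []
    for v in range(y - half, y + half + 1):
        row = []
        for u in range(x - half, x + half + 1):
            sx = int(round(m[0][0] * u + m[0][1] * v + m[0][2]))
            sy = int(round(m[1][0] * u + m[1][1] * v + m[1][2]))
            sx = min(max(sx, 0), w - 1)
            sy = min(max(sy, 0), h - 1)
            row.append(image[sy][sx])
        out.append(row)
    return out

def describe(color, gray, x, y):
    """Flatten the rotated color window into one list of channel values.
    Assumes each color pixel is a tuple such as (r, g, b)."""
    window = rotated_window(color, x, y, dominant_angle(gray, x, y))
    return [channel for row in window for pixel in row for channel in pixel]
```

Whether the window should be rotated by the angle or by its negative depends on the warp convention in use, so the sign here is also an assumption.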

I also tried taking a plain 5x5 window centered on the feature and outputting its intensities as a sorted list (for both gray and color images). Surprisingly, this performed better than the simple window descriptor, but not as well as the rotated window.
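
For illustration, the sorted-intensity variant might look like the sketch below (gray version only; `sorted_intensity_descriptor` is a hypothetical name). Sorting throws away where each intensity sits in the window, so the result is unchanged by any rearrangement of the same pixels, including a 90 degree rotation.

```python
def sorted_intensity_descriptor(gray, x, y, size=5):
    """Sorted list of the intensities in the size x size window at (x, y).
    Assumes the whole window lies inside the image."""
    half = size // 2
    values = [gray[v][u]
              for v in range(y - half, y + half + 1)
              for u in range(x - half, x + half + 1)]
    return sorted(values)
```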

Design Choices:

To compute the Harris values, I convolved the image with the Sobel matrices to approximate the partial derivatives and used a 5x5 Gaussian mask as the weight matrix. I varied the feature threshold (the Harris value above which a pixel counts as a feature) from image set to image set. I had to vary it a lot, because for some of the sets I would otherwise not get enough matches, and it had a pretty big impact on my results. For the values listed under Performance, I tried to keep the threshold as constant as possible.
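
A rough sketch of this computation in plain Python is below. Several details are assumptions, since they are not stated above: the Gaussian sigma of 1.0, the border handling (coordinates are clamped), and the response formula det(H) / trace(H), which is only one of the common choices. The helper names are hypothetical. The filter is applied as a correlation (no kernel flip), which only changes the sign of the Sobel derivatives and so does not affect the products used here.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def gaussian_kernel(size=5, sigma=1.0):
    """Normalized size x size Gaussian weights (sigma is an assumption)."""
    half = size // 2
    k = [[math.exp(-(i * i + j * j) / (2 * sigma * sigma))
          for j in range(-half, half + 1)]
         for i in range(-half, half + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]

def filter2d(img, kernel):
    """Correlate img with kernel, clamping coordinates at the border."""
    h, w = len(img), len(img[0])
    half = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for j, krow in enumerate(kernel):
                yy = min(max(y + j - half, 0), h - 1)
                for i, kv in enumerate(krow):
                    xx = min(max(x + i - half, 0), w - 1)
                    acc += kv * img[yy][xx]
            out[y][x] = acc
    return out

def harris_response(gray, sigma=1.0, eps=1e-12):
    """Per-pixel det(H) / trace(H) of the Gaussian-weighted gradient matrix."""
    h, w = len(gray), len(gray[0])
    ix = filter2d(gray, SOBEL_X)
    iy = filter2d(gray, SOBEL_Y)
    g = gaussian_kernel(5, sigma)

    def product(a, b):
        return [[a[y][x] * b[y][x] for x in range(w)] for y in range(h)]

    sxx = filter2d(product(ix, ix), g)
    syy = filter2d(product(iy, iy), g)
    sxy = filter2d(product(ix, iy), g)
    return [[(sxx[y][x] * syy[y][x] - sxy[y][x] ** 2)
             / (sxx[y][x] + syy[y][x] + eps)
             for x in range(w)] for y in range(h)]

def detect_features(gray, threshold):
    """(x, y) of every pixel whose response exceeds the threshold."""
    response = harris_response(gray)
    return [(x, y) for y, row in enumerate(response)
            for x, value in enumerate(row) if value > threshold]
```

With this response, a flat region and a straight edge both score zero, while a corner scores well above zero, which is why the threshold mainly controls how weak a corner still counts as a feature.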

I would have liked to compute the eigenvalues to determine the dominant orientation of the image window, but I had trouble implementing that and did not get it done in time.

Performance:

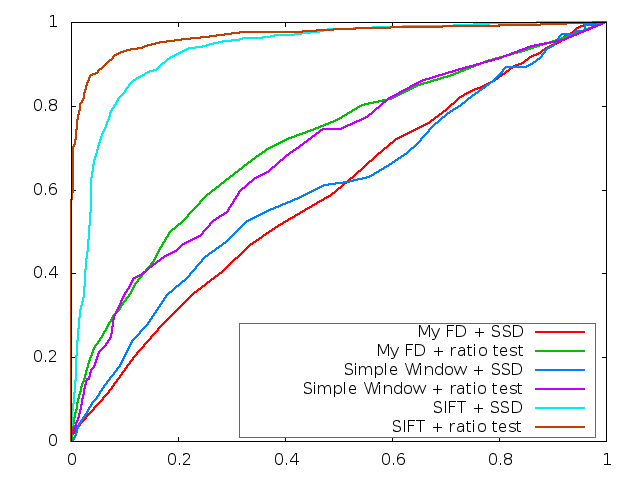

ROC curve and AUC values for the SSD and ratio distance tests for the simple window, my Feature Descriptor, and SIFT on the graf set. SIFT is obviously the best; my Feature Descriptor is slightly better than the simple 5x5 window for the ratio test (but not for SSD, oddly). The threshold for determining if something was a feature from the Harris matrix for this image was 0.005.

| | SSD | Ratio |
|---|---|---|
| Simple Window | 0.601 | 0.689 |
| My Feature Descriptor | 0.587 | 0.701 |

ROC curve for the SSD and ratio distance tests for the simple window, my Feature Descriptor, and SIFT on the yosemite set. SIFT is obviously the best; my Feature Descriptor is slightly better than the simple 5x5 window with the ratio match, but not with the SSD match. The threshold for determining if something was a feature from the Harris matrix for this image was 0.005.

| | SSD | Ratio |
|---|---|---|
| Simple Window | 0.84 | 0.88 |
| My Feature Descriptor | 0.79 | 0.90 |

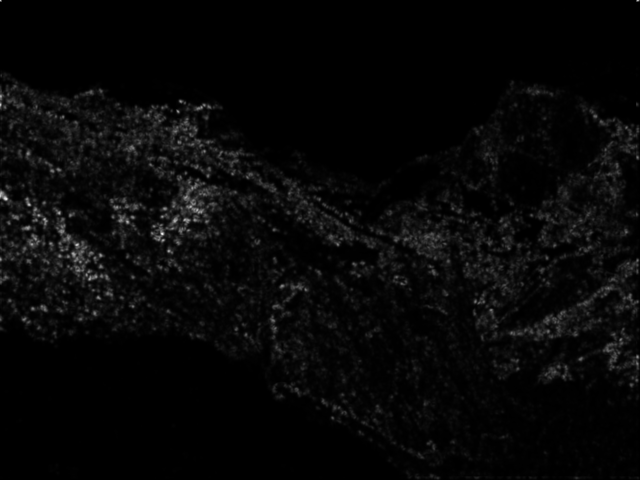

Harris operator for Yosemite:

Harris operator for graf:

| | Window SSD | Window Ratio | My FD SSD | My FD ratio |
|---|---|---|---|---|
| graf | 0.595 | 0.604 | 0.602 | 0.642 |
| bikes | 0.261 | 0.510 | 0.40 | 0.578 |
| wall | 0.300 | 0.655 | 0.415 | 0.641 |
| leuven* | nan | 0.639 | 0.124 | 0.664 |

*Note that the threshold used for the leuven benchmarks was 0.0001. The threshold for bikes was 0.0025. The threshold for graf and wall was 0.05.
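
For reference, an AUC value like the ones in the table can be computed from a list of match scores and ground-truth labels roughly as follows. This is a generic sketch, not the benchmark code used for the numbers above: `roc_auc` is a hypothetical name, and it assumes a lower score means a more confident match. It returns nan when there are no correct matches or no incorrect matches, since the ROC curve is undefined in that case; that is one way a nan can arise, though not necessarily the cause of the leuven result.

```python
def roc_auc(scores, is_correct):
    """Area under the ROC curve by the trapezoid rule.
    scores: match distances (lower = more confident, an assumption).
    is_correct: ground-truth booleans, one per match."""
    positives = sum(1 for c in is_correct if c)
    negatives = len(is_correct) - positives
    if positives == 0 or negatives == 0:
        return float("nan")  # true or false positive rate is undefined
    tp = fp = 0
    auc = 0.0
    prev_fpr = prev_tpr = 0.0
    # Sweep the acceptance threshold from strictest to loosest.
    for _, correct in sorted(zip(scores, is_correct)):
        if correct:
            tp += 1
        else:
            fp += 1
        tpr, fpr = tp / positives, fp / negatives
        auc += (fpr - prev_fpr) * (tpr + prev_tpr) / 2
        prev_fpr, prev_tpr = fpr, tpr
    return auc
```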

Strengths and Weaknesses:

The simple window descriptor actually worked fairly well. That is not really reflected in the AUC scores above, because I had to set my thresholds quite low for my own feature descriptor to get enough matches. I had a lot of trouble with the leuven images (I got a nan value for AUC at many threshold levels). I think this was because my algorithm handles rotation (somewhat) but does little to compensate for changes in intensity, although I noticed that I got slightly better values when I used a color window instead of just the gray values. I think matching on the color information helped dampen the effects of the different intensities. On the bikes set, I also had trouble getting enough features on the really blurry image. I had to set my threshold quite low to pick up features, which I think resulted in a low AUC value.

In general my feature descriptor did terribly with the SSD matching algorithm, but did better with the ratio algorithm. I am not totally sure why that is. Perhaps with the rotated feature descriptor there were many more candidates that were close matches, which would lead to more mismatches under SSD.
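
To make the difference between the two matchers concrete, here is a small sketch (hypothetical function names, descriptors as plain lists of numbers). SSD scores a match by its raw distance, while the ratio test scores it by the best distance divided by the second-best distance, so a match with a nearly as good runner-up gets a poor score even when its raw distance is small. That is consistent with the guess above, though it does not prove it.

```python
def ssd(a, b):
    """Sum of squared differences between two equal-length descriptors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

def match_ssd(descs1, descs2):
    """For each descriptor in descs1: (index of nearest in descs2, SSD)."""
    matches = []
    for d in descs1:
        dists = [ssd(d, e) for e in descs2]
        best = min(range(len(dists)), key=dists.__getitem__)
        matches.append((best, dists[best]))
    return matches

def match_ratio(descs1, descs2, eps=1e-12):
    """Same nearest neighbor, scored by best SSD / second-best SSD.
    Requires at least two descriptors in descs2."""
    matches = []
    for d in descs1:
        dists = [ssd(d, e) for e in descs2]
        order = sorted(range(len(dists)), key=dists.__getitem__)
        best, second = order[0], order[1]
        matches.append((best, dists[best] / (dists[second] + eps)))
    return matches
```

In both cases a lower score means a more trustworthy match, so the same thresholding can be applied to either output.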

One thing I definitely should have done is to remove the features along the edges of the image. I think that threw off some of the feature matching.
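
A filter along these lines would do it. This is a sketch with a hypothetical function name; the default margin of 3 pixels is an assumption, chosen because the corners of a rotated 5x5 window can reach about 2.83 pixels from its center.

```python
def drop_border_features(features, width, height, margin=3):
    """Keep only (x, y) features at least `margin` pixels from every edge."""
    return [(x, y) for x, y in features
            if margin <= x < width - margin
            and margin <= y < height - margin]
```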

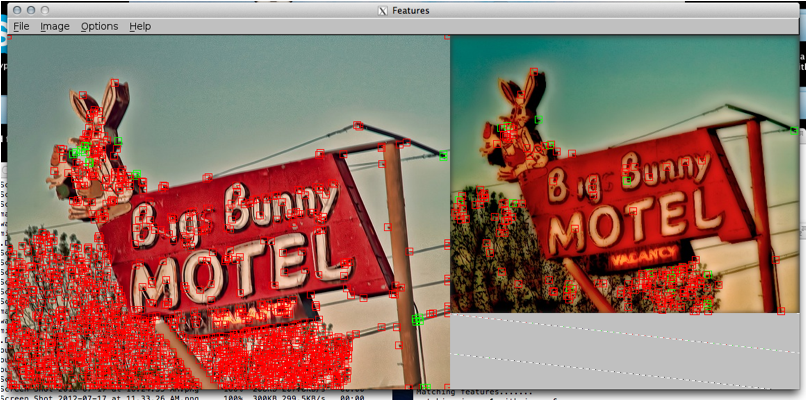

My Images:

This is a screenshot of my algorithm running with ratio matching on an image that has been blurred and whose color intensities have changed. I tried to select a few features that were distinctive. It does appear that some of the matches got lost in the trees.