Project 5: Monte-Carlo Tree Search for POMDPs

Adapted from the Berkeley Pac-Man Projects originally created by John DeNero and Dan Klein.Table of Contents

Introduction

In this project, like the last, you will design Pacman agents that use sensors to locate invisible ghosts.However, instead of hunting them, you will avoid them in pursuit of food. You will model this planning problem as a POMDP and write algorithms to solve this POMDP.

The code for this project contains the following files, available as a zip archive.

| Files you'll edit: | |

bustersAgents.py |

Agents for playing Pacman. |

answers.txt |

Write short answers to the questions in this assignment here. |

| Files you will not edit: | |

inference.py |

Inference Code |

busters.py |

The main entry to Ghostbusters (replacing Pacman.py) |

bustersGhostAgents.py |

New ghost agents for Ghostbusters |

istanceCalculator.py |

Computes maze distances |

game.py |

Inner workings and helper classes for Pacman |

ghostAgents.py |

Agents to control ghosts |

graphicsDisplay.py |

Graphics for Pacman |

graphicsUtils.py |

Support for Pacman graphics |

keyboardAgents.py |

Keyboard interfaces to control Pacman |

layout.py |

Code for reading layout files and storing their contents |

util.py |

Utility functions |

Files to Edit and Submit: You will fill in portions of bustersAgents.py during the assignment. You will also answer any questions posed to you in the problems in answers.txt You should submit these files with your code and comments. Please do not change the other files in this distribution or submit any of our original files other than these files.

Evaluation: Unlike the previous assignments, your code will only partially autograded for technical correctness. The autograderis there for you as a guide so that you can see if your agents have behavior that make sense. Because of randomness, correct code may not always pass the autograder. However, as before, pleasedo not change the names of any provided functions or classes within the code, or you will wreak havoc on the autograder. Do not use python autograder.py by itself (without the -t flag) to evaluate your code correctness. Use the specified autograder commands in the questions below. Using python autograder.py without the -t flag may cause you pain and sadness.

Academic Dishonesty: We will be checking your code against other submissions in the class for logical redundancy. If you copy someone else's code and submit it with minor changes, we will know. These cheat detectors are quite hard to fool, so please don't try. We trust you all to submit your own work only; please don't let us down. If you do, we will pursue the strongest consequences available to us.

Getting Help: This is an untested assignment! You are the first to ever do it! If you have any doubts or questions, please contact us! If anything is unclear, please contact us! If you think something is wrong, please contact us! We hope this assignment will be fun and teach you something cool.

Discussion: Please be careful not to post spoilers.

Background

Like in Project 4, Pacman is equipped with sonar (ears) that provides noisy readings of the Manhattan distance to each ghost. However, there are several differences. Unlike in project 4, the game ends when Pacman has eaten at least one of the food available on the board. The blocks of color again indicate where the each ghost could possibly be, given the distance readings provided to Pacman. However, although Pacman believes its sensors to be noisy, the Manhattan distance is receives will always be correct. Thus, Pacman uses the same emission model from Project 4 to do inference, where the probability of a distance reading decreases exponentially with its difference from the true distance, but the world will provide distance readings that are correct.

Question 1 (5 points): Maximum Likelihood UCT

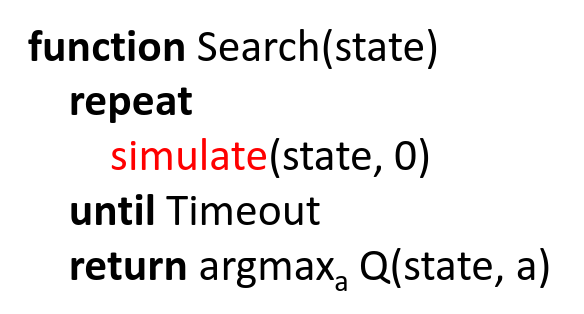

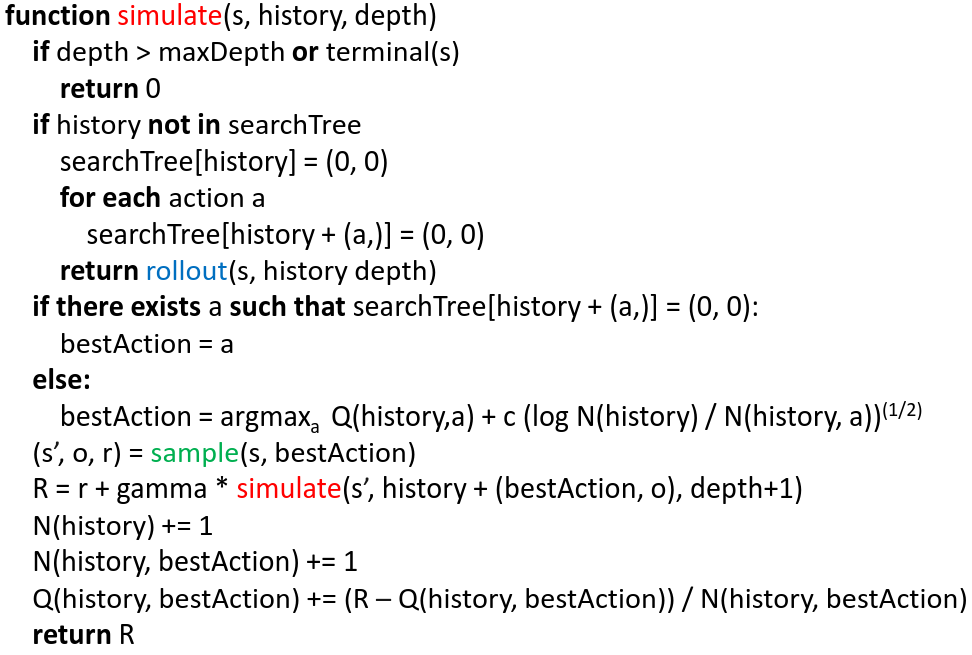

In this question, like in Project 4, you will write an agent that at each time step, first assumes the maximum likelihood position of the ghosts, making the environment fully-observable. Then, departing from Project 4, instead of taking the greedy action that minimizes the distance from Pacman to the nearest ghost, the agent will model this full-observable problem as a Markov Decision Process and run a Monte-Carlo tree search algorithm to solve for the optimal action. In particular, you will implement UCT (Kocsis and Szepesvari 2006), an online algorithm, meaning that unlike something like Value Iteration, it will be run at every time-step to determine the next best action. You will fill in the simulate and rollout methods in MDPAgent class of bustersAgents.py. UCT works in the following manner. The outermost loop of UCT simply repeatedly calls a simulate function to simulate trajectories in the MDP:

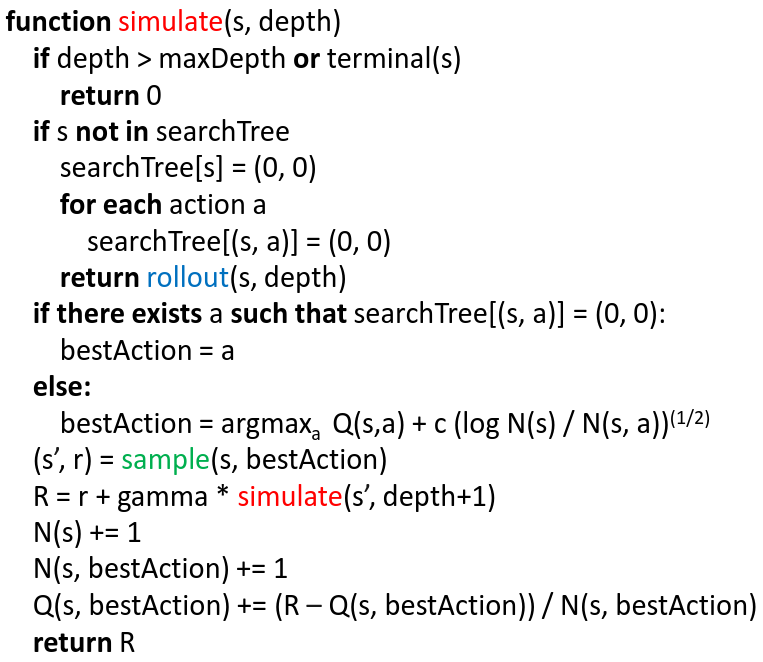

simulate() simulates a trajectory by picking actions according to the UCB criterion. It builds out a searchTree that maps states and state-action pairs to their values as well as the number of times they have been visited. It also uses a rollout policy to evaluate leaf nodes:

where c is an appropriately chosen constant and sample() is a function that takes a state and an action and samples a new state and reward from the MDP's transition and reward functions.

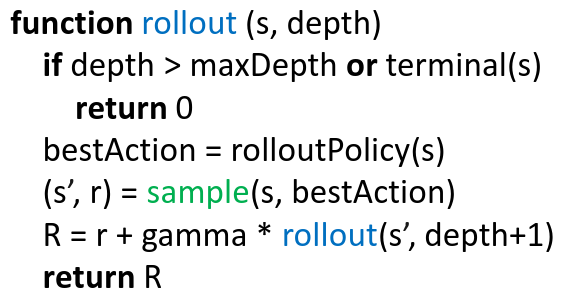

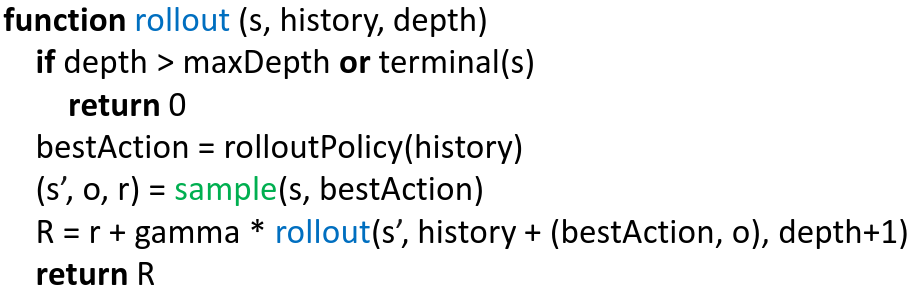

rolloutPolicy() is a function that, for now, chooses an action uniformly from the set of legal actions given the current state.

Let's try running our agent!

python autograder.py -t test_cases/q1/mdpTest

If you have written your agent correctly, Pacman should be able to win most times. Pacman does well in a large world with a lot of uncertainty! It seems like fancy POMDP algorithms are unnecessary!

Now let's try our agent in a world where there is a single ghost that does not move, and Pacman begins with a prior belief that the ghost could uniformly be in one of two positions. Unlike the previous world, Pacman now only receives observations when he takes the STOP action. The four other actions (WEST, EAST, NORTH, and SOUTH) do not produce any observations. To run the autograder for this question and visualize the output:

python autograder.py -t test_cases/q1/mdpStaticTest

Now the agent doesn't do very well. Many times, it will run into the ghost. Why?

The agent will also thrash around a lot. Why?

Question 2 (5 points): Partially Observable UCT

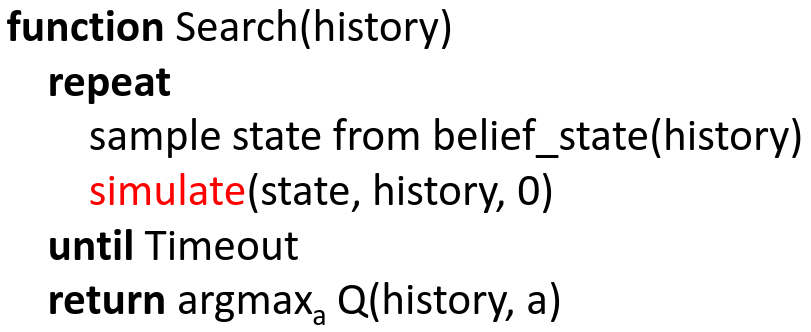

In the previous question you wrote an agent that modeled the world as an MDP and saw how this can lead to disastrous results when the world is, in reality, only partially observable. In this question, you will implement a simple version of POMCP, an extension of UCT to POMDPs. POMCP "applies a UCT search to a history-based MDP while using state-based simulator to efficiently sample states from current beliefs." As you can see in the psuedocode below, POMCP looks very similar to UCT, but now the search tree's nodes contain histories instead of states.

Implement the simulate and rolloutUniform methods in the POMDPAgent class of bustersAgents.py. Let's try running your new agent in the world from Question 1.

python autograder.py -t test_cases/q2/pomdpStaticTest

This agent does much better! Why?

Now, let's try running your agent in a bigger world, BigTest. Note that watching 10 runs may take a long time. You don't need to run this test to completion if you are sure your code is correct.

python autograder.py -t test_cases/q2/pomdpBigTest

Pacman does eventually reach the goal, but it takes forever. Why?

Side note: if you wish, you can try adjusting parameters in your agent to see how it affects performance. When the settings are set to the default, Pacman gains 60 points when he eats a food, and loses 1500 when he runs into a ghost. To change the amount of points Pacman loses, change line 483 in busters.py. To change the amount of points Pacman wins, change line 421 in busters.py. If you want to change the depth of the search, the number of simulations/trajectories, or the exploration constant, change the corresponding parameters in the __init__ function in the BustersAgent class in bustersAgents.py

Question 3 (5 points): A Better Rollout Policy

In Monte Carlo Tree Search methods, the rollout policy is similar to a heuristic. A good rollout policy uses domain knowledge to help guide search, so that the agent doesn't waste time on clearly suboptimal actions.

The pacman agents you have written have up to now used a rollout policy that uniformly picks an action from the legal actions. This rollout policy is pretty stupid, and in domains in which rewards are very far away, this rollout plicy can lead to extremely slow convergence to the optimal policy.

Can you think of a better rollout policy that will help Pacman reach the goal faster? Implement the rolloutBetter method in POMDPAgent in bustersAgents.py. Your agent should be able to clear the BigTest layout much faster and achieve at least a 50 point gain in average score:

To run with your new rollout policy

python autograder.py -t test_cases/q3/pomdpBigTest

To run with your old rollout policy

python autograder.py -t test_cases/q2/pomdpBigTest