Intersectional Storyteller

Chandni Rajasekaran, Aaleyah Lewis, Simona Liao, Kate Glazko, Caitlyn Rawlings

Introduction

We all have complex, intersectional identities, but only a subset of identities are frequently portrayed in media, whether it be television or literature. In our technology-driven society, the media has become increasingly influential in shaping individuals’ perceptions of their own identities. However, even when diverse identities are represented, they fail to capture intersectional identities. This is challenging because representing intersectional identities involves not only the integration of two distinct identities but also emphasizing the subtleties and complexities that arise from their intersection. To narrow our scope, we were particularly interested in exploring ways to promote intersectional representation to the youth demographic. We identified children’s stories as a media form where intersectional representation may be lacking. We selected this medium specifically for its text-based format, which aligns well with advanced text-generation models such as GPT-4. This project was further motivated by semi-structured interviews with Speech Language Therapists (SLTs) who support culturally and linguistically diverse children, which highlighted the lack of representative stories within classrooms and carryover practice material. After exploring existing story generation solutions that were driven by generative AI, we also identified this as a promising avenue for creating personalized stories. Our motivation specifically centers on improving the intersectional representation of AI story generations through the use of prompt engineering, since our initial exploration highlighted how text-generation models often resort to portraying stereotypical identities.

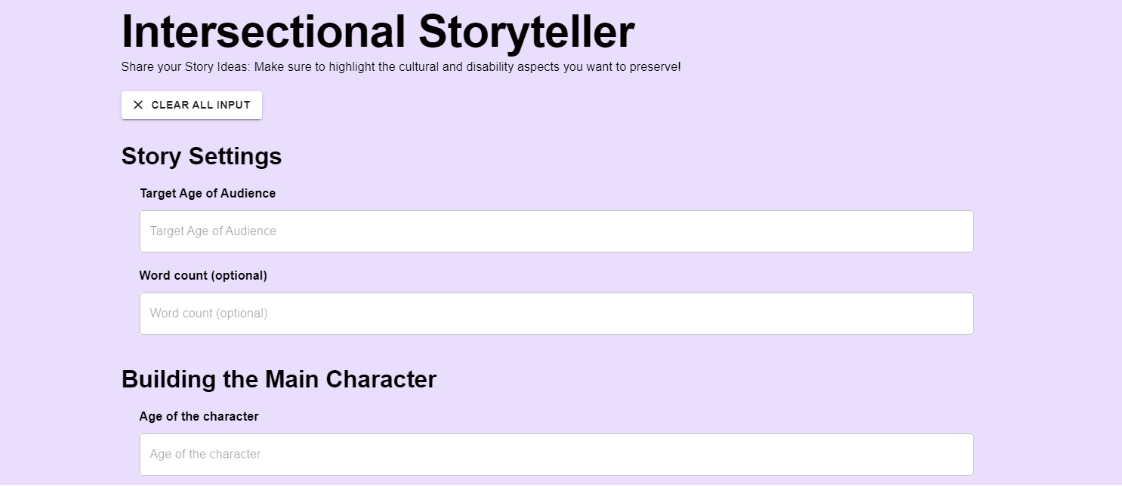

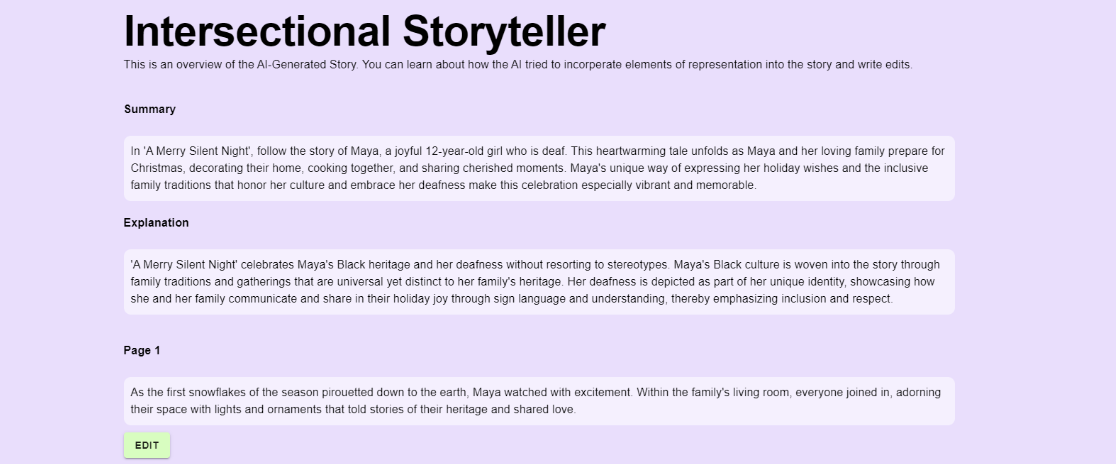

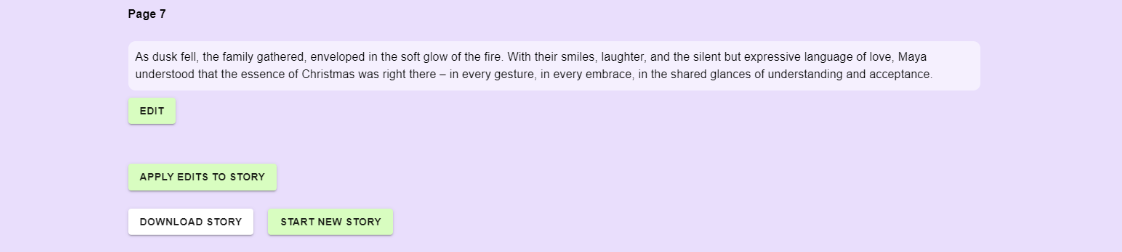

Our product, Intersectional Storyteller, presents an accessible interface for guardians or educators to easily generate diverse and intersectional stories that promote inclusivity. Rather than being tasked with crafting a meticulous prompt in GPT in the hope of getting a story that does not perpetuate stereotypes, users answer a set of simple questions, and Intersectional Storyteller produces a story that avoids many of the default stereotypes that surface in GPT models. The questions primarily prompt the user to indicate the race and disability of the main character, and optionally provide further details regarding the storyline or desired representation. To provide more structure to users looking for a more nuanced storyline, there are optional questions asking users to specify the key parts of the story, such as the rising action or climax. Finally, to personalize these stories for different audiences, we allow users to specify the age of the target audience and story. The options presented to the user reflect our deliberate focus on improving representation at the intersection of disability and race. Given the impracticality of addressing all aspects of representation in our product’s engineering, we have specifically concentrated on generating high-quality outputs at the intersection of race (Chinese and Black identities) and disability (autism and cerebral palsy). After the user answers the questions outlined above, a full story is returned, broken into pages. To give users the opportunity to continue refining their story, we let them specify edits to particular pages. When the edits are submitted, the generated story is updated to reflect these changes. Our vision for a polished version of this product would be to generate a file containing the generated story split across pages, along with AI-generated illustrations.
Since exploring representation in generative AI illustrations is its own challenge, we currently give users the option to simply download a .docx file containing the final story.

Our solution stands out from existing tools because of its focus on disability representation and, more specifically, intersectionality. While we were able to find existing storybook generation tools such as Storybook AI and Childbook AI, these tools focus on customizing the general prompt of the story. They follow a streamlined input/output process, providing limited control over transforming the outputs. Furthermore, they only touch on diversity at the surface level, by merely prompting users to optionally mention the main character’s race. These tools fail to address representation as something much deeper.

We recognize the challenges in this project, since AI models acquire inherent biases through the training process. These biases would be difficult to eliminate with a prompt engineering approach alone and would require more sophisticated investigation into the models’ development and training processes. With these challenges in mind, we developed our project as a first step toward understanding the cultural and disability representations desired by our target audience and categorizing LLMs’ biases and stereotypes when producing such intersectional narratives. We also explore the nuanced dynamics between joy-centered and damage-centered perspectives and experiences when writing about diverse cultures and disabilities. By identifying these gaps and opportunities, we aim to provide clear directions for future improvements. Our attempt with prompt engineering also contributes to understanding whether biases can be mitigated by prompts, even partially, without more costly approaches such as training an LLM.

Positive Disability Principles

1. Is it ableist?

This project, Intersectional Storyteller, seeks to be anti-ableist. In our related works, we detail several examples of the biases prevalent in LLM outputs, including disability bias. LLM storytelling products and apps already exist; however, none of them actively address or seek to dismantle the biases present in the outputs of their base technologies (e.g., GPT). Our storytelling platform, and the meticulous prompt engineering we have engaged in, seeks to avoid tropes such as disabled suffering, inspiration porn, or lack of agency in the outputs. Additionally, by giving the end user opportunities to customize the story and their own representation in it, we embed agency as a value in our product design as well.

2. What parts of the work are accessible and what are not (for example, are both design tools, and their outputs accessible?)

To ensure we created an accessible experience, we analyzed the accessibility of the interface through the lens of various assistive technologies. We started with screen readers, which drove our design of the “Edit” button under each page of the generated story. We originally planned to have this button toggle to become a “Close Edits” button followed by an input box on click. However, we realized that a screen-reader user could easily miss this button transformation, since they would already have tabbed past the button, and so miss out on important functionality. We instead decided that clicking the “Edit” button first generates a new input box, which is then followed by a “Close Edit” button. Thus, a user tabbing through the screen encounters the input and then learns about the appearance of the new button, making the edit experience more accessible. Similarly, we thought through the placement of the rest of the buttons on both pages of the website. We also thoroughly tested that every element of the page (including AI-generated text that appears upon interaction) is reachable via the Tab key.

We also considered the visual accessibility of the page, specifically making sure the color scheme was colorblind-friendly. After doing some research, we found that purple and green contrast well, making them a suitable pairing for a color palette. To further provide an accessible experience for blind and low-vision users, we implemented a feature where an announcement is made following a button press, describing the action that resulted from the interaction. For instance, when the user activates the “Generate Story” button, which navigates the user to the edit page, this navigational change is immediately announced so that the user is fully aware of large interface changes. Finally, we rethought the hover functionality presented in our initial mockup. The original idea was that hovering over an icon next to each page’s content would provide information on the disability representation on that page. When thinking about other assistive technologies, we considered how this flow may not be ideal for someone using an eye-tracking device. After reading more about people’s experiences with eye-tracking devices, we learned that hover is not very accessible: a user of an eye-tracking device would have to continuously shift their gaze between triggering the hover target and viewing the page content, which prevents viewing both at once. This explains our final design choice to move the discussion of disability representation into a summary near the top of the page rather than a hoverable text blurb associated with each page, which also reduces clutter.

When considering the output story file format, we thought about the perspective of someone using a screen magnifier. Our vision was to have the story content split across numerous pages, leaving space to add images in future iterations of this project. However, since there are currently no images, we realized that a non-editable file format may be inaccessible to someone using a screen magnifier, for example, as they would have to frequently scroll through many pages, each containing a limited amount of text. This poses a potential navigation challenge, particularly when screen magnification tools necessitate frequent movement across blocks of text. Thus, although it was an additional implementation challenge, we worked out how to provide a downloadable .docx file for the story. This gives screen magnifier users and users of other assistive tools the opportunity to leverage the suite of accessibility features provided by enterprise products like Microsoft Word, while also giving them the flexibility to conveniently reformat the story output if desired.

3. Are people with disabilities engaged in guiding this work? At what stages?

Multiple members of the Intersectional Storyteller project team identify as having a disability. They were involved in the project from the formative ideation stages, throughout the development process, and ultimately in authoring this report. However, children with disabilities and educators were not involved in any part of the project. The inability to involve children with disabilities in co-design and development is a major limitation of this project.

4. Is it being used to give control and improve agency for people with disabilities?

Our storytelling platform seeks to give control and improve agency for people with disabilities by allowing them to customize their representation in stories about them. Emerging research such as Mack et al.’s “They only care to show us the wheelchair” [26] has highlighted the narrow representation of people with disabilities in generative AI outputs, which show them primarily as lonely, white men in wheelchairs. By allowing people with disabilities to infuse their own identities and desired representations into the story, we provide agency and control to disabled people in how they want to be represented.

5. Is it addressing the whole community (intersectionality, multiple disabled people, multiply disabled people)?

This work is addressing intersectionality. Through our stories, we represent different races (Black, Asian), genders (Woman/Non-binary), and disabilities (Autism/Cerebral Palsy); and combinations of aforementioned identities. We understand that this is not a comprehensive list of all races and ethnicities, genders, or disabilities but is rather a subset, limited in scope by the nature of a time-limited group project. We likewise did not represent multiply-disabled people in our narratives.

Related Work

In this section, we explore the applications of Large Language Models (LLMs) in storytelling, the biases inherent in these models, and the emerging strategies to mitigate these biases through prompt engineering. We examine various studies demonstrating LLMs’ potential in creative writing, identify the types of biases that affect their outputs, and discuss these related works as potential approaches to promote fairness and inclusivity in AI-generated content such as stories.

LLMs for Storytelling

Research has demonstrated the suitability of LLMs for generating creative, engaging stories [11, 14, 8, 10]. Yuan et al. demonstrated how the use of an LLM-powered text editor enabled the co-creation of compelling narratives, even “unblocking” authors stuck in creative ruts [14]. Indeed, it is the ability of LLMs to imagine novel content that does not exist (referred to as “hallucination” when it occurs in an undesired fashion) that brings about their strength in creating stories and narratives, as described in Yotam et al.’s MyStoryKnight, a story generation system built on creative hallucinations [10]. The hallucinations built into MyStoryKnight foster opportunities for building action and interactivity into the narrative, resulting in an engaging experience for children [10]. Other research has sought to leverage LLM-based storytelling to likewise engage with children, even targeting specific purposes and contexts such as education. The Mathemyths project, led by Zhang et al., showcased an LLM-based storytelling agent that co-creates stories with children in order to teach them mathematical concepts, with results comparable to stories created with a human partner [9]. As an example of their use in accessibility, Zhou et al. describe blind parents who used LLMs to understand the non-verbal story content of picture books, using LLM outputs to expand on their own storytelling and even create an interactive component in reading with their children [13]. These examples, all featuring human co-authors, do not capture the full utility of LLMs as storytellers, however: Duah et al.’s work demonstrated that human graders preferred LLM-generated narratives over narratives generated collaboratively by humans and LLMs [12]. This breadth of research highlights the promise and utility of LLMs for generating stories that are well perceived by human readers.

Biases in LLMs

Unfortunately, the ability of LLMs to produce written content is impeded by built-in biases. Such biases exist across a range of identity-related attributes. Dixon et al. highlighted unintended biases in text classification systems, emphasizing the importance of fairness and the challenges in achieving unbiased machine learning models [15]. Gender biases are extensively explored by Sun et al. [17] and Kotek et al. [18], who show how image-generative AI and LLMs perpetuate stereotypical representations and harmful stereotypes about gender roles, describing how women are frequently depicted as smiling and looking down. These biases extend even to the types of careers with which genders are associated: Spillner et al. found that women were rarely depicted in career roles most associated with men [19]. LLMs also exhibit racial biases, resulting in potential real-world harms. Bowen et al. discuss how LLMs used in mortgage underwriting can perpetuate racial disparities, highlighting the need for robust mitigation techniques to ensure fairness [20]. Wan et al. uncover intersectional biases in language models, particularly how gender and racial biases manifest in language agency, emphasizing the need to address multiple dimensions of bias simultaneously [22]. Additionally, Mirowski et al. explore the limitations of LLMs in capturing nuanced human expressions such as humor, which can perpetuate harmful stereotypes and diminish or erase minority perspectives [21]. Gadiraju et al. revealed multiple tropes present in LLM text generation about disabled people, highlighting examples such as “disabled suffering” or “inspiration porn” [16] in the generated narratives. Collectively, these studies underscore the concern that producing written content, such as stories, could result in outputs that are biased in a multitude of ways, presenting negative stereotypes and diminishing the agency or perspectives of minoritized groups.

Improving Biases in LLMs

Some emerging research has sought to mitigate biases present in generative AI through the use of prompt engineering, offering various strategies to guide these models toward more equitable outputs. Li et al. introduce a causality-guided debiasing framework that leverages prompt engineering to steer LLMs towards unbiased responses [23]. By identifying causal relationships within the model’s outputs, their approach systematically reduces the influence of biased correlations inherent in the training data, thus promoting fairness in generated responses. Similarly, Glazko et al. focus on debiasing ableist outputs from ChatGPT-based resume screening. They identify disability bias in GPT-based systems and employ targeted, anti-ableist prompt engineering to mitigate these biases, creating a custom GPT built on disability justice and non-discrimination [24]. Dwivedi et al. tackle gender bias by using prompt engineering and in-context learning to promote gender fairness. Their study demonstrates how carefully crafted prompts can adjust the model’s behavior, reducing gender biases and ensuring more balanced and representative outputs. Collectively, these studies underscore the pivotal role of prompt engineering in addressing and mitigating various forms of bias in LLMs, highlighting its potential to enhance the fairness and reliability of AI-generated content [25].

Addressing and mitigating biases in LLMs is crucial for ensuring the fairness and reliability of AI-generated content such as stories. Emerging research on prompt engineering underscores the potential of this approach in promoting unbiased outputs. By leveraging these techniques, it is possible to enhance the inclusivity and ethicality of LLM-generated stories, making them more representative and equitable for all users and potentially more suitable for producing narratives representing a variety of identities.

Methodology and Results

Prior research and first-person accounts have found that LLMs perpetuate negative stereotypes about marginalized social groups and hallucinate inaccurate facts. In order to create an AI storyteller that could produce unbiased and intersectional stories, we needed to develop validation metrics that define the unbiased stories desired by our audiences. These metrics would also help us create AI prompts to refine our Intersectional Storyteller tool. We also built a website interface to support accessible user interaction. To evaluate Intersectional Storyteller, we qualitatively coded the stories and summarized high-level themes from the codes.

Building Validation Metrics and Prompts

When building the metrics, we started with broad searches of disability and cultural representation in AI, and narrowed them down to 2 specific types of disabilities and cultures. Disability contains a diverse spectrum, from visible disabilities such as vision and motor disabilities to relatively invisible ones, including hearing and neurodiversity. Each disability could face different challenges and stigmas from society, and thus, it would be hard to summarize all of them using the same set of metrics. Similarly, cultural stereotypes are deeply connected to history, and each marginalized racial group faces distinct challenges and stigmatization. Therefore, we selected Autism and Cerebral Palsy disabilities and Black and Chinese cultures to generate more concrete standards for each. We selected these disabilities and cultures also because some of our team identified with or had abundant knowledge of them.

We took three approaches to build our validation metrics for unbiased intersectional stories for disability and cultural representation: searching prior literature, finding first-person accounts, and prompting ChatGPT for existing biases. Prior literature has been extensively covered in the Related Work section above, and thus we present results from finding first-person accounts and prompting ChatGPT in this section.

First-Person Accounts

To center our target audience, people with disabilities and from diverse cultural backgrounds, and their opinions to generate desired children’s stories for them, we collected first-person narratives. Because of the lack of first-person narratives on using AI or LLM tools to generate children’s stories, we searched for narratives about writing stories for children with disabilities or from diverse cultural backgrounds by authors who are also in the same community. These narratives provide us valuable insights into what stories are missing in the current children’s book market and why it’s important to fill in these gaps. They also had suggestions for writing stories for children with disabilities or from diverse cultural backgrounds, which would be helpful to include in our storyteller training prompts. We searched for first-person accounts in three directions: Black authors, Chinese authors, and authors with disabilities.

From Black authors Hannah Lee [1], S.R. Toliver [2], and Crystal Swain-Bates [3], we discovered two insights. First, all authors noted that the majority of Black children’s storybooks center on topics such as the history of slavery and liberation in the United States. Despite the importance of these histories and of teaching them to younger generations, these Black authors also expressed the need for stories that portray Black culture and people in a joyful way, beyond the harm and wrongs in history. For example, Toliver mentioned that she would have loved to read stories of Black girls being detectives or Black girls with dragons when she was a little girl, but these types of stories only became available when she was in college. Second, these authors agreed on the significance of celebrating Black culture in children’s books. This motivated Hannah Lee to write the story My Hair, about a little Black girl styling her hair for her birthday, because “Black hair is my reality,” as Lee said.

Taiwanese author Grace Lin [4], who has published multiple children’s books about Chinese/Taiwanese American culture, emphasized the importance of cultural representation in children’s storybooks. By writing and celebrating the cultural heritage, these books could help Chinese American children feel a sense of belongingness. Grace reflected on her childhood and wished there were storybooks centering on Chinese American cultures so she wouldn’t feel the urge to escape her identity but embrace this beautiful heritage.

For children’s books centering on children with disabilities, author and activist Rosie Jones [5], who has cerebral palsy, provided insightful guidelines. She pointed out that the common disability representation in popular culture always perpetuates a singular narrative, only focusing on a few types of disability with stereotypical depictions. Rosie highlights that stories should avoid the “victim” stereotype of people with disabilities or depicting them always as sidekicks. Instead, stories should focus on their joys and independence. To make stories feel authentic, Rosie emphasized that access needs should be included in the stories. For instance, Rosie wrote about how a girl with cerebral palsy would need her friends’ help in carrying a tray of chicken nuggets when they are hanging out in a diner. As Rosie said, “we do need to ensure that a person’s access needs are addressed in the show. That will make it feel more authentic.”

Prompt Engineering Process and Preliminary Findings

In order to prompt stories centering on specific disabilities and cultures, we narrowed down our prompt engineering to the scope of two disabilities (autism, cerebral palsy) and two cultures (Black, Chinese). We used ChatGPT 4o because it’s a large language model that is widely available to the public and serves as a good baseline. The goal of this preliminary prompt engineering approach is to identify common pitfalls and biases embedded in the ChatGPT model when writing about disabilities and cultures, providing guidelines for our storyteller tool to avoid these biases.

When generating stories, we experimented with five types of stories, each centering on (1) Black culture (2) Chinese culture (3) Autism (4) Cerebral Palsy, and (5) the intersection of culture and disability. We also attempted various themes and storylines, from day-to-day school life to adventurous superhero stories. Some of the prompts were intentionally made general to elicit different responses from ChatGPT. For example, here are some story prompts we have tried:

- Write a story about a Black superhero from Baltimore, Maryland/ specific to Black culture/a young Black boy who plays sports.

- Write a story of a little Chinese American girl visiting Beijing, China for the first time with her parents/as a superhero/celebrate dragon boat festival/learning math.

- Write a story about children with autism/cerebral palsy.

- Write a story about a little Chinese girl w/ autism at school/celebrate Chinese new year with her family.
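The prompt families above can be enumerated programmatically. The following is a minimal sketch, assuming simplified prompt templates; the function name `story_prompts` and the exact wording of the templates are illustrative and are not the prompts we actually submitted.

```python
from itertools import product

# The two cultures and two disabilities in scope for this project.
CULTURES = ["Black", "Chinese"]
DISABILITIES = ["autism", "cerebral palsy"]


def story_prompts():
    """Return one simplified prompt per culture, disability, and intersection.

    The templates are hypothetical simplifications of the prompts listed above.
    """
    prompts = [f"Write a story specific to {c} culture." for c in CULTURES]
    prompts += [f"Write a story about children with {d}." for d in DISABILITIES]
    # Intersection of culture and disability: every pairing of the two lists.
    prompts += [
        f"Write a story about a little {c} girl with {d} at school."
        for c, d in product(CULTURES, DISABILITIES)
    ]
    return prompts


if __name__ == "__main__":
    for prompt in story_prompts():
        print(prompt)
```

Enumerating the combinations this way makes it easy to check that every culture, disability, and intersection in scope has at least one prompt.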

From the stories generated by these prompts, we observed and labeled biased and stereotypical content and summarized them into preliminary findings. For Black culture, we found that ChatGPT perpetuates negative stereotypes about Black people and Black culture, such as focusing on criminality, poverty, struggle, and lower intelligence and capability. It also produced negative depictions of regions associated with the Black community. For example, given the prompt, “Write a story about a Black superhero in Baltimore”, ChatGPT generated a Black superhero that used “his powers to blend into the shadows.” The story included racial bias in naming the superhero: “But by night, he transformed into the city’s protector, a black superhero known as ‘Shadowhawk.’” “Shadow” has historically been used in a derogatory manner to refer to Black people, emphasizing their skin color in negative contexts. The story also included regional stereotypes for Baltimore, which was described as “plagued by crime and neglect,” and “He [Shadowhawk] tackled drug dealers.” For Chinese culture, we found that ChatGPT tokenizes the culture by superficially representing it through symbols such as dragons, pandas, bamboo, and jade without deeper engagement with the culture. For instance, given the prompt, “Write a story for children who are 5 year old, the story is about a Chinese superhero,” ChatGPT writes about a Chinese boy and a jade amulet that gives him his superpower when he says, “Jade power, activate!” In the story, the boy “found a family of pandas trapped under a fallen bamboo tree. The pandas were scared and unable to move.” Although these tokens are not necessarily negative biases, they contribute to a singular and superficial narrative about Chinese culture. For autism, we found that ChatGPT often defaults to a male main character, depicting them as lonely, lacking empathy, being a burden for others, and lacking leadership.
For cerebral palsy, ChatGPT portrays it as a disease, depicting people with cerebral palsy as always having an intellectual disability, always in a wheelchair, and dependent on others. ChatGPT’s depiction of people with these disabilities reductively centers on their disability and does not recognize their wholeness. These depictions also contribute to the singular narrative and stereotypes about these disabilities, failing to include the diverse situations and conditions that people with disabilities are in.

In addition to these findings regarding cultures and disabilities, we also identified other limitations in ChatGPT-generated stories. First, ChatGPT presented gender stereotypes in depicting the binaries between girls and boys, and their family structures. It tends to describe girls’ appearances, such as their faces, eyes, and hair, more than boys’, and to describe boys’ hobbies and interests more than girls’. When writing about families, ChatGPT assigns traditional gender roles to dads and moms, and all families are depicted as heteronormative. Additionally, ChatGPT used stiff language when writing children’s stories, with repetitive words and short phrases. For example, the stories always open with the same phrase, “Once upon a time in a bustling city/a small village/a cozy neighborhood”, which feels artificial and unnatural.

Validation Metrics

Based on insights from the three approaches, we present the final validation metrics in three categories. First, in high-level standards, we specified overall standards for portraying culture and disability. When representing diverse cultures, the storyteller should prioritize avoiding hallucination, avoiding depictions of people of color as lacking agency, and describing the culture in a celebratory way rather than focusing on harm. When representing disabilities, the storyteller should center disability joy, avoid inspiration porn, and avoid reductive portrayal. Second, in specific standards, we specified different standards for the two disabilities and two cultures. The standards here are more detailed and mostly stem from the first-person accounts and the preliminary prompt engineering results. Finally, we included additional standards to further eliminate gender stereotypes and help our storyteller produce more fluently written stories. The complete validation metrics are presented below.

High-Level Standards

Cultural:

- Avoid hallucination

- Avoid lack of agency

- Celebratory rather than harm-focused

Disability:

- Center disability joy

- Avoid inspiration porn

- Avoid reductive portrayal

Specific Standards

Chinese Culture:

- Avoid homogeneity

- Avoid portrayal as unfriendly

- Avoid tokenization

Black Culture:

- Avoid negative portrayal as dangerous and struggling

Autism:

- Avoid negative portrayal as lonely, dependent, and incapable

Cerebral Palsy:

- Avoid negative portrayal as dependent

- Avoid the assumption that all have intellectual disabilities

- Avoid portraying as always in wheelchairs

Additional Standards

- Avoid gender stereotypes

  - Traditional gender roles

  - Gender binaries

  - Heteronormativity

- Avoid stiff language
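One way to make these metrics usable when assembling a prompt is to store them as plain data and select the subset that applies to a given character. The sketch below is illustrative only: the dictionary layout and the `standards_for` helper are assumptions, not the structure used in our implementation. The standard names are copied from the lists above.

```python
# Hypothetical encoding of the validation metrics listed above.
HIGH_LEVEL = {
    "cultural": [
        "Avoid hallucination",
        "Avoid lack of agency",
        "Celebratory rather than harm-focused",
    ],
    "disability": [
        "Center disability joy",
        "Avoid inspiration porn",
        "Avoid reductive portrayal",
    ],
}

SPECIFIC = {
    "Chinese": [
        "Avoid homogeneity",
        "Avoid portrayal as unfriendly",
        "Avoid tokenization",
    ],
    "Black": ["Avoid negative portrayal as dangerous and struggling"],
    "Autism": ["Avoid negative portrayal as lonely, dependent, and incapable"],
    "Cerebral Palsy": [
        "Avoid negative portrayal as dependent",
        "Avoid the assumption that all have intellectual disabilities",
        "Avoid portraying as always in wheelchairs",
    ],
}

# Each of these names something the storyteller should avoid.
ADDITIONAL = [
    "Gender stereotypes",
    "Traditional gender roles",
    "Gender binaries",
    "Heteronormativity",
    "Stiff language",
]


def standards_for(culture=None, disability=None):
    """Collect the standards that apply to one main character."""
    selected = []
    if culture is not None:
        selected += HIGH_LEVEL["cultural"] + SPECIFIC[culture]
    if disability is not None:
        selected += HIGH_LEVEL["disability"] + SPECIFIC[disability]
    return selected + ADDITIONAL
```

For example, `standards_for("Chinese", "Autism")` returns the high-level cultural and disability standards, the Chinese-culture and autism-specific standards, and the additional standards, in that order.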

Developing the Web Interface

When designing our website, we chose to build a React.js web interface from the ground up rather than adapt a pre-existing website template. Since accessibility was a core priority, we felt that designing the page layout from scratch would give us more control over it. We started development by creating a mockup of the design, considering how to present the necessary content in a clean and accessible manner. Next, we scaffolded the input boxes, which were identified from the findings of the prompt engineering process. After the user inputs were set up, we implemented the functionality of the buttons, which required connecting the frontend to the backend. We had already written a Python script to query GPT-4, so we used the Flask framework to let the frontend make API calls to this backend. We split the interface into two pages: a home page and an edits page. On the home page, the user enters text into the inputs and presses a button to generate a story. The inputs are sent to the backend through an API call and formatted into a carefully crafted prompt that gives GPT more structure on the desired approaches to race and disability representation. The user is then directed to the edits page, where the generated story is displayed in blocks, each representing one page of the story. We also wanted users to be able to scan the story quickly, so the API response includes a short summary of the story as well as a detailed description of how race and disability are highlighted in it. Finally, we implemented page-level editing by generating an input box whenever a user requests to edit a page.
Since a user may want to edit multiple pages, the user can apply a set of edits at once, which calls a second API endpoint. This endpoint updates the original story based on the edits specified for each page. To let users download the story as a .docx file, we used the docx package from npm.
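The request flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the project's actual code: the `StoryRequest` fields, the prompt wording, the blank-line page separator, and the helper names `build_prompt`, `split_pages`, and `build_edit_prompt` are all assumptions made for the example, and the Flask routes and the GPT-4 call are left out so the sketch runs on its own.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class StoryRequest:
    """Answers collected on the home page (field names are hypothetical)."""
    race: str
    disability: str
    audience_age: Optional[int] = None
    storyline: Optional[str] = None
    representation_notes: Optional[str] = None


def build_prompt(req: StoryRequest) -> str:
    """Format the form answers into one user prompt; optional fields are skipped."""
    lines = [
        f"Write a children's story whose main character is {req.race} "
        f"and has {req.disability}."
    ]
    if req.audience_age is not None:
        lines.append(f"The target audience is {req.audience_age} years old.")
    if req.storyline:
        lines.append(f"Storyline: {req.storyline}")
    if req.representation_notes:
        lines.append(f"Representation notes: {req.representation_notes}")
    # Assumed convention: the model is asked to separate pages with blank lines.
    lines.append("Split the story into pages separated by a blank line.")
    return "\n".join(lines)


def split_pages(story_text: str) -> list[str]:
    """Split generated text into pages on blank lines, dropping empty pages."""
    pages = [page.strip() for page in story_text.split("\n\n")]
    return [page for page in pages if page]


def build_edit_prompt(pages: list[str], edits: dict[int, str]) -> str:
    """Combine the original pages with per-page edit requests (1-based page numbers)."""
    for page_no in edits:
        if not 1 <= page_no <= len(pages):
            raise ValueError(f"No page {page_no} in a {len(pages)}-page story")
    parts = ["Revise the story below. Change only pages that have an edit request."]
    for number, page in enumerate(pages, start=1):
        parts.append(f"Page {number}: {page}")
        if number in edits:
            parts.append(f"Edit request for page {number}: {edits[number]}")
    return "\n".join(parts)
```

In a setup like the one described, the first endpoint would pass the output of `build_prompt` to the model and return the split pages, and the second endpoint would pass the output of `build_edit_prompt`. Sending all edits in one request keeps the unedited pages in context, so the model can keep the revised story consistent.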

Home Page

Edit Page

Evaluation

Method & Data Analysis

After implementation, we evaluated Intersectional Storyteller by generating and qualitatively coding stories centered on different disabilities and cultures. We also used the same prompts to generate stories with ChatGPT 4o and compared them with the ones generated by our storyteller. We focused on the following eight main-character demographics:

- Chinese (Woman)

- Black (Woman)

- Autism (Woman)

- Cerebral Palsy (CP) (Non-binary Gender)

- Chinese x Autism (Woman)

- Chinese x CP (Woman)

- Black x Autism (Woman)

- Black x CP (Woman)

For most of the demographics, we generated one story. For the Black x Autism and Cerebral Palsy demographics, we generated two stories each to explore different storylines and word counts. Each user prompt included at least one cultural and one disability representation as a starting point for the tools to expand on. For qualitative coding, we created a spreadsheet with all the stories, each with one version generated by our storyteller and one by ChatGPT 4o. Three of us labeled passages that violated the validation metrics, as well as passages with positive and specific portrayals. We then compared and discussed our notes across stories to derive high-level evaluation results and themes.

Results

Our storyteller presents positive representations of disability and culture.

Overall, stories generated by our storyteller tool followed the validation metrics and produced positive cultural and disability representation. For cultural representations, all 7 stories involving Black or Chinese cultures followed the validation metrics and represented those cultures positively. In portraying the city of Baltimore, for example, our storyteller centered the joy in the culture and community instead of negative stereotypes: “Zara adored the spirited vibes of Baltimore, with its vibrant streets alive with music and laughter.” In contrast, the story generated with ChatGPT 4o described Baltimore as “plagued by crime and neglect.” When depicting Chinese culture, the storyteller included specific details about cultural festivals instead of relying only on common tokens. For example, when writing about Zong Zi, a Chinese food eaten to celebrate the Dragon Boat Festival, the story described the ingredients used to make it: “Mia’s mom had prepared sticky rice, sweet red beans, and juicy dates. They were all set to wrap them in soft bamboo leaves.” In contrast, stories generated with ChatGPT 4o from the same user prompts still showed problems such as tokenization, a lack of detail, and the perpetuation of singular narratives.

For disability representations, 7 out of 8 stories followed the high-level disability validation metrics: centering disability joy, avoiding reductive portrayals, and avoiding inspiration porn. Some stories recognized the wholeness of the experiences of people with disabilities by acknowledging existing challenges without centering them. For example, one story about a Black girl with autism states, “Though sometimes the world’s bustling nature can be overwhelming, Maya has learned to navigate these challenges with grace.” Similarly, another story about a Black girl with autism portrays her sensory experience positively, with emphasis on access needs: “Strolling toward the park, Jasmine delighted in the textures of the environment around her - from the intricate patterns on the fences to the soft rustle of leaves under her fingers. Each sensation shared its unique story with her. Her vibrant yellow headphones hung loosely around her neck, a beacon of comfort should the hustle and bustle grow overwhelming.” Additionally, some stories centered on disability joy and integrated the character’s access needs into their positive experience: “under the bright stage lights, Jordan felt an exhilarating mix of nerves and excitement. Their wheelchair, now shimmering with lights, felt like an extension of the performance itself.” However, inspiration porn appeared in one of the stories generated by the storyteller, in which the main character, Jordan, was a non-binary teenager with cerebral palsy. The story ended by describing Jordan as a symbol of strength who inspires change: “…Jordan stood as a beacon of resilience and diversity, embodying the true meaning of strength…Faced with new challenges, Jordan remained undaunted, fortified by the knowledge of their ability to inspire change.”

Improvements for Further Engagement with Cultural Representations

Although our storyteller represented Black and Chinese cultures in more detail, we observed that the tool relies heavily on user inputs and has room for improvement. During our evaluation, we started with very generic prompts that specified no cultural elements beyond the main character’s race/ethnicity. The generated stories dropped the cultural aspect and did not engage with the main character’s race/ethnicity. This may be a side effect of the system prompts, in which we specified that the storyteller should not represent culture in stereotypical ways. Later, when we added a particular way to engage with culture in the user prompt, such as specifying a cultural festival or practice, the storyteller built the story around it with vivid details. One improvement for the storyteller would be to generate stories that engage deeply with culture without hints or directions from the user input. This highlights the limits of prompt engineering: many of these patterns appear to stem from a systemic lack of representation in training data.

One of the specific validation metrics for Chinese culture was to avoid tokenizing, such as representing the culture only through symbols like pandas, bamboo, jade, dragons, and dumplings. The storyteller successfully avoided these common symbols but adopted another token in 2 out of 3 stories generated with Chinese culture: including a “grandma” in the story narrative. For example, in the story about making Zong Zi together to celebrate the Dragon Boat Festival, the grandma did not take part in making it with the family and was only mentioned in passing at the end of the story, when the family is eating Zong Zi: “Each bite was a delicious link to the stories her grandmother recounted about past festivals.” The “grandma” could be interpreted as a symbol of respect for elders in Chinese culture, but she was not meaningfully engaged in the storyline and became another token. Thus, the storyteller does not appear to understand the concept of tokenization and needs prompts that explain it with detailed examples.

LLM Shows a Lack of Knowledge of Accessible Technology

Beyond the high-level validation metrics for disability, both the storyteller and ChatGPT 4o failed to meet one specific metric for cerebral palsy: to avoid always portraying people with cerebral palsy as wheelchair users. In all four cerebral palsy stories generated by the storyteller, the main character defaulted to being a wheelchair user even though the user prompts never mentioned wheelchairs. Indeed, one user prompt was intentionally worded in general terms to cover the wide range of mobility devices, “Show the use of mobility devices in a positive and self-expressive way,” but the storyteller and ChatGPT 4o still equated mobility devices with wheelchairs. Despite efforts in both the system prompts and the user prompts, these models failed to represent people with cerebral palsy in diverse ways and perpetuated a reductive narrative. According to the Cerebral Palsy Research Network, about 40 to 50% of people with cerebral palsy are wheelchair users, and they also use other mobility devices, such as crutches, canes, or rollators, that the LLM did not portray [27]. This gap between reality and the LLM’s narrative of people with cerebral palsy could be due to bias embedded in the LLM training process, and removing it would require more intentional effort from researchers.

The LLM also provided few details when portraying diverse accessible technologies. For example, in the Chinese x CP story, the LLM mentions the creation of a new accessible technology for making Zong Zi: “Seeing this, Mia’s dad had a brilliant idea. He fashioned a handy gadget that made it easier for Mia to join in. With this gadget, Mia could sprinkle rice and spoon in the fillings alongside her family, completely immersed in the festivity.” However, the gadget was described only in very general terms, with no meaningful details on how it was designed to support Mia.

Disability Model Analysis

Disability Justice

The first principle of Disability Justice, Intersectionality, is rooted in Black feminist activism and underscores the importance of recognizing how disability interplays with other marginalized identities such as race, gender, sexuality, immigration status, and religion. This principle also emphasizes the necessity of centering the voices of those at these intersections. Our system meaningfully engages this principle by generating stories that center intersecting identities (i.e., disability, culture, and gender), recognizing the diverse experiences and realities of people’s lives. In addition to intersectionality, our system addresses the principle of leadership of those most impacted by focusing on identities that our team members either identify with or are familiar with. Moreover, in creating our system and developing validation metrics, we centered the voices of Black, Chinese, and disabled storytellers such as Hannah Lee [1], S.R. Toliver [2], Crystal Swain-Bates [3], Grace Lin [4], and Rosie Jones [5]. This approach helped us understand their values and standards for creating representative children’s stories. Lastly, we address the principle of recognizing wholeness. We believe it is important for the stories our system generates to portray characters as multifaceted, with diverse experiences, and not solely defined by their disabilities or cultural backgrounds. We also explore this principle by generating stories that balance depictions of joy with acknowledgment of challenges, without reducing characters’ experiences to their struggles alone.

Flourishing and Damage-Centered Frameworks

In approaching this work, we first sought to understand how existing technologies, particularly LLMs, perpetuate biases rooted in systemic racism and ableism toward people with disabilities and people from diverse cultural backgrounds. We drew on previous literature that documented instances of harm in LLMs and on first-person accounts that documented harm in storytelling. Understanding these damage-centered, or deficit-based, perspectives was essential so that we could approach our design with a focus on centering the joy of these marginalized communities and reducing the biases embedded in LLMs. While acknowledging and preserving these testaments of harm, we chose to ground our design in a flourishing framework [6]. This framework builds on existing celebratory frameworks such as assets-based design and designing for resilience, shifting to a positive redefinition of design. In designing and building our system, our goal was to center joy within disabled and culturally diverse communities. We pursued this through prompt engineering techniques with ChatGPT 4o, aiming for generated narratives that reflect a positive and holistic picture of these communities.

Design A11yhood

The final model we adopt to guide our work is Design A11yhood, which places emphasis on user agency and control. Our system empowers users to generate personalized stories that center and resonate with their unique backgrounds and experiences, whether for themselves, their child, or their students. On the first page of our interface, users are given the agency to customize different aspects of the storyline, plot, and character details, empowering them to shape narratives that reflect and celebrate their identities. Recognizing the need to reduce potential burdens on users, we intentionally made many of these customization fields optional. If a user chooses not to provide an input for certain fields, the system will generate content automatically. The second page of the user interface enhances user control by allowing users to make direct edits to the overall summary and each generated page. Additionally, it provides an explanation of the AI’s decision-making process to support transparency. As on the first page, these features are optional in order to reduce potential burden on users. By prioritizing user agency and minimizing complexity, our system aims to make the creation process both empowering and inclusive.

Learnings and Future Work

One of our major learnings from this project was the tension between joy-centered and damage-centered perspectives and experiences, which surfaced both when developing the validation criteria and when analyzing the generated stories. When conducting validation tests, it was sometimes difficult to determine with confidence which culture and disability representations generated by the system were appropriate and which were not. Cultural and disability experiences vary widely: when constructs such as race and disability intersect, experiences can become more complex and amplified, leading people to conceptualize and identify with disability differently. It is essential to recognize that all perspectives are valid and should be honored. This complexity underscores the importance of a nuanced and flexible approach to validation that can accommodate the diverse realities of individuals’ lives. Additionally, when assessing our generated stories against the validation criteria, we had to carefully distinguish interdependence from a lack of independence, because the difference can be nuanced and context-dependent. Interdependence is defined as collaborative efforts to create access with the active involvement of people with disabilities [15]. For example, in the Chinese and cerebral palsy story that our system generated, the narrative stated, “Seeing this, Mia’s dad had a brilliant idea. He fashioned a handy gadget that made it easier for Mia to join in.” In this example, Mia’s dad makes an assistive device that helps her participate in the collaborative process of making a traditional Chinese dish. While the intention was to support Mia’s involvement, the story did not explicitly state that the process of making the device itself was a collaborative effort between Mia and her dad.
As a result, it could be interpreted as a lack of independence rather than interdependence because Mia’s dad is making the device for her rather than with her. To address this, future work will include refining our prompt engineering and validation criteria to better capture and emphasize interdependence, ensuring that our stories reflect a balanced portrayal of collaboration and independence.

One of the key limitations of our work is that the generated story outputs do not fully emulate the layout and interactive features commonly found in traditional children’s books. For example, our current system cannot produce illustrations to accompany the text or recreate the experience of flipping through physical pages. This limitation can affect the user experience, particularly for young readers who benefit from the visual elements of a traditional book format. To address these limitations, future iterations of our system will aim to integrate more visual and interactive features. Specifically, we plan to incorporate image generation capabilities to accompany the text, enhancing the visual appeal and engagement of the stories. This development will involve querying OpenAI’s DALL-E 3 to generate high-quality illustrations that complement the narrative content and convey intersectional identities.

Recognizing the importance of accessibility, future work will also include generating alt text descriptions for these images. By automatically generating accurate and descriptive alt text for each illustration, we can make the stories more inclusive and accessible to users. Incorporating these features will not only improve the overall user experience and accessibility, but also align our system more closely with the interactive and multimedia nature of children’s books. These enhancements will further our goal of creating a truly inclusive and engaging storytelling platform that celebrates diverse disability and cultural backgrounds. Lastly, as noted in the Results section, the system was too reliant on the user’s input to produce stories that meaningfully engaged with culture and disability representation. Future work will include additional iterations of prompt engineering so that the story outputs engage more deeply with these identities. Another avenue would be to experiment with building a custom GPT model, sourcing training data from authors who have researched and captured intersectional representation.

References

[1] What Inspired Me to Write a Children’s Book About Black Hair

[2] Talking About Black Representation in Books and the Power of Storytelling with Writer S.R. Toliver

[3] Why I write Black Children’s Books: Meet African-American Children’s Book Author Crystal Swain-Bates

[4] The Windows and Mirrors of Your Child’s Bookshelf | Grace Lin | TEDxNatick

[5] Disability is not a Character Type (Inclusivity Now - CMC 2021)

[6] Flourishing in the Everyday: Moving Beyond Damage-Centered Design in HCI for BIPOC Communities (To et al.)

[7] Suspending Damage: A Letter to Communities (Tuck)

[8] Storyverse: Towards Co-authoring Dynamic Plot with LLM-based Character Simulation via Narrative (Wang et al.)

[9] Mathemyths: Leveraging Large Language Models to Teach Mathematical Language through Child-AI Co-Creative Storytelling (Zhang et al.)

[10] MyStoryKnight: A Character-drawing Driven Storytelling System Using LLM Hallucinations (Yotam et al.)

[11] From Playing the Story to Gaming the System: Repeat Experiences of a Large Language Model-Based Interactive Story (Yong et al.)

[12] More human than human: LLM-generated narratives outperform human-LLM interleaved narratives (Duah et al.)

[13] Which AI Models Create Accurate Alt Text for Picture Books? (Zhou et al.)

[14] Wordcraft: Story Writing With Large Language Models (Yuan et al.)

[15] Measuring and Mitigating Unintended Bias in Text Classification (Dixon et al.)

[16] “I wouldn’t say offensive but…”: Disability-Centered Perspectives on Large Language Models (Gadiraju et al.)

[17] Smiling women pitching down: auditing representational and presentational gender biases in image-generative AI (Sun et al.)

[18] Gender bias and stereotypes in Large Language Models (Kotek et al.)

[19] Unexpected Gender Stereotypes in AI-generated Stories: Hairdressers Are Female, but so Are Doctors (Spillner et al.)

[20] Measuring and Mitigating Racial Bias in Large Language Model Mortgage Underwriting (Bowen et al.)

[21] A Robot Walks into a Bar: Can Language Models Serve as Creativity Support Tools for Comedy? An Evaluation of LLMs’ Humour Alignment with Comedians (Mirowski et al.)

[22] White Men Lead, Black Women Help: Uncovering Gender, Racial, and Intersectional Bias in Language Agency (Wan et al.)

[23] Steering LLMs Towards Unbiased Responses: A Causality-Guided Debiasing Framework (Li et al.)

[24] Identifying and Improving Disability Bias in GPT-Based Resume Screening (Glazko et al.)

[25] Breaking the Bias: Gender Fairness in LLMs Using Prompt Engineering and In-Context Learning (Dwivedi et al.)

[26] “They Only Care To Show Us The Wheelchair” (Mack et al.)

[27] Cerebral Palsy Facts (Cerebral Palsy Research Network)