EmailAR

Towards Supporting Eyes and Hands-Free Email Responses Using Wearable Augmented Reality

Hi, I am Jae from the University of Washington. In this article, I will discuss our attempt at designing an on-the-go email composition system for wearable augmented reality (AR). The goal of this project is to empower people with musculoskeletal disorders to write email responses and maintain productivity, while also helping to prevent such disorders by reducing the necessity for poor posture when composing emails.

Introduction

For many people, sitting or standing at a desk and looking at computer or smartphone screens can have detrimental health effects [1, 2]. Moreover, looking down at screens and typing on keyboards may be inaccessible to those with musculoskeletal disorders [3, 4]. Musculoskeletal disorders are characterized by impairments in the muscles, bones, joints and adjacent connective tissues leading to temporary or lifelong limitations in functioning and participation [5]. Globally, 1.7 billion people have musculoskeletal conditions, encompassing over 150 different diseases and conditions, including neck pain, back pain, carpal tunnel, muscular dystrophy, and paralysis [5]. Today, one of the most common causes of musculoskeletal disorders is prolonged sitting and technology use in workplace environments [6].

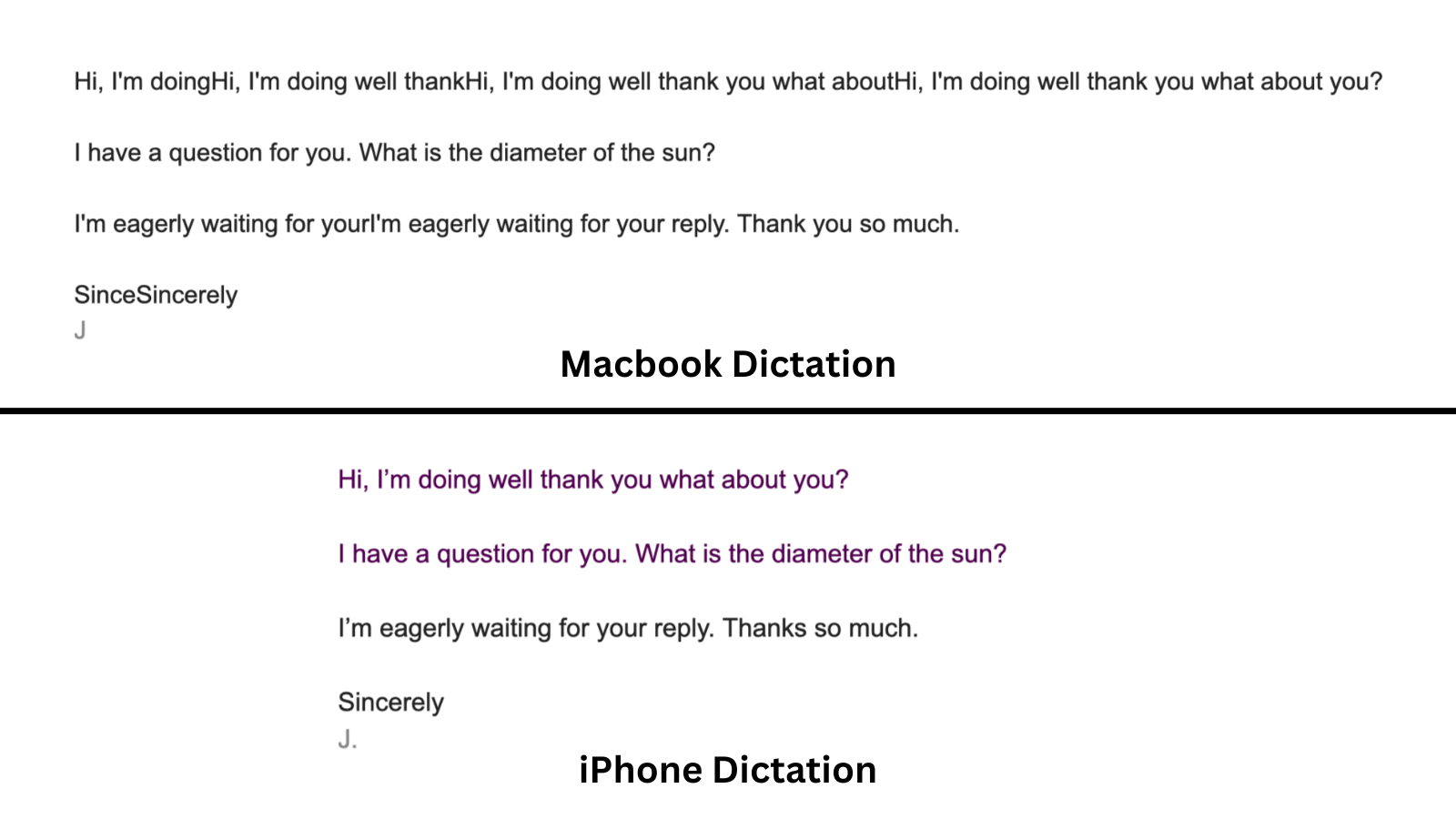

Traditional email composition involves looking down at a laptop or smartphone screen and typing on a physical keyboard. However, for people with musculoskeletal disorders such as carpal tunnel syndrome, muscular dystrophy, paralysis, and amputation, these tasks can be inaccessible. A commonly used technology for transforming typing tasks into speech tasks is Dictation. Dictation, available on many current devices like iPhones and MacBooks, enables users to fill in text boxes on a screen by speaking. For instance, users can select the email body, enable the Dictation feature, then speak their email content. Dictation is often used by blind or low vision (BLV) people and people with musculoskeletal disorders for tasks such as taking notes and searching the web. We attempted to compose an email using only Dictation. While it is often accurate, it sometimes writes duplicate sentences and struggles with punctuation and proper nouns.

To address the aforementioned limitations of traditional email writing tools and accessibility technology, we propose leveraging a pair of augmented reality (AR) glasses and a large language model (LLM) for email writing. We introduce EmailAR, a speech-based email response solution for wearable AR that enables hands- and eyes-free email writing. With EmailAR, users can see unread emails in an always-available scrollable UI panel. Users can select an email, read it on an expanded UI panel, and respond via speech. Users only need to provide a brief summary of their email content, which an LLM uses to construct a full email response. In this class project, we implement an initial EmailAR prototype and evaluate the quality of AI-generated emails against human-written responses. Results indicate that EmailAR is capable of writing human-like responses when given a few email samples from the user. However, EmailAR must be carefully designed, as it may not follow key disability models, including Language Justice, Leadership of Those Most Impacted from the 10 Principles of Disability Justice, and Disability Dongle.

The key question is as follows: How can we design an email composition app for wearable AR that supports hands- and eyes-free operation for individuals with upper-limb musculoskeletal disorders?

Related Work

Prior research has used interview-based studies to gather the opinions of individuals with upper-body motor impairments regarding wearable AR technology. For instance, Malu and Findlater conducted two interview studies with people with upper-body motor impairments and found that they prefer wearable AR over traditional technologies, as they don’t have to hold the device while interacting with it, look down to see the screen, or worry about dropping their devices [7]. Additionally, McNaney et al. conducted a five-day field study of Google Glass with four participants with Parkinson’s. While participants appreciated wearable AR glasses, they expressed concerns regarding the social stigma of wearing such technology [8]. Furthermore, Li et al. conducted semi-structured interviews with 12 participants with upper-body motor impairments and found that they prefer voice or simple touch-based interactions with mobile technology [4]. Results from these interview studies suggest that wearable AR with speech input is a viable solution to support those with upper-body motor impairments.

We also reviewed YouTube videos created by Ryan Hudson Peralta, an amputee and a popular YouTuber who reviews the usability and accessibility of the latest AR technologies. After trying out the latest wearable AR headsets, including the Meta Quest 3 and Apple Vision Pro, he said wearable AR can empower people with upper-body motor impairments to work better and live more freely using voice and eye gaze [9]. He emphasizes the importance of voice input such as speech-to-text, as this technology allows people who cannot type to bypass physical keyboards [9]. EmailAR follows Ryan’s vision of a speech-driven productivity tool.

Prior studies also indicate that AI technologies such as LLMs provide an opportunity to support augmentative and alternative communication (AAC) device users by improving the quality and variety of text suggestions [10]. Most closely related to our work, in a study with 19 adults with dyslexia, Goodman et al. suggested that an LLM can support email composition tasks such as rewriting parts of an email body and coming up with an email subject line [11]. In this work, we aim to understand whether an LLM-based email writing solution for wearable AR, an AAC device receiving increasing interest [12], can support users with upper-limb musculoskeletal disorders.

Methodology

A common technology used to transform typing tasks into speech-based tasks is Dictation, a speech-to-text service available on Apple devices like iPhones and MacBooks. Similar features exist on Android devices as well. To activate Dictation, users first have to select a text box, then press the microphone button available on the digital and physical keyboards. Users can then speak into their devices, which will populate the selected text box with speech transcriptions. Most first-person account videos of disabled people using Dictation were of blind people taking notes and searching the web. We tried to compose an email response ourselves relying only on Dictation. We observed that while Dictation is often accurate, it tends to repeat text. For instance, when we said “Hi, I’m doing well”, Dictation typed parts of this sentence multiple times. This was more frequent on a MacBook than on an iPhone. Additionally, Dictation had trouble with punctuation, often missing or misplacing commas, periods, exclamation marks, and question marks. Furthermore, Dictation struggled to spell proper nouns such as people’s names. We believe these limitations can be addressed by leveraging an LLM. With an LLM, users also no longer need to speak their entire email response aloud. They can instead provide a summary of the email content and ask an LLM to construct a full email.

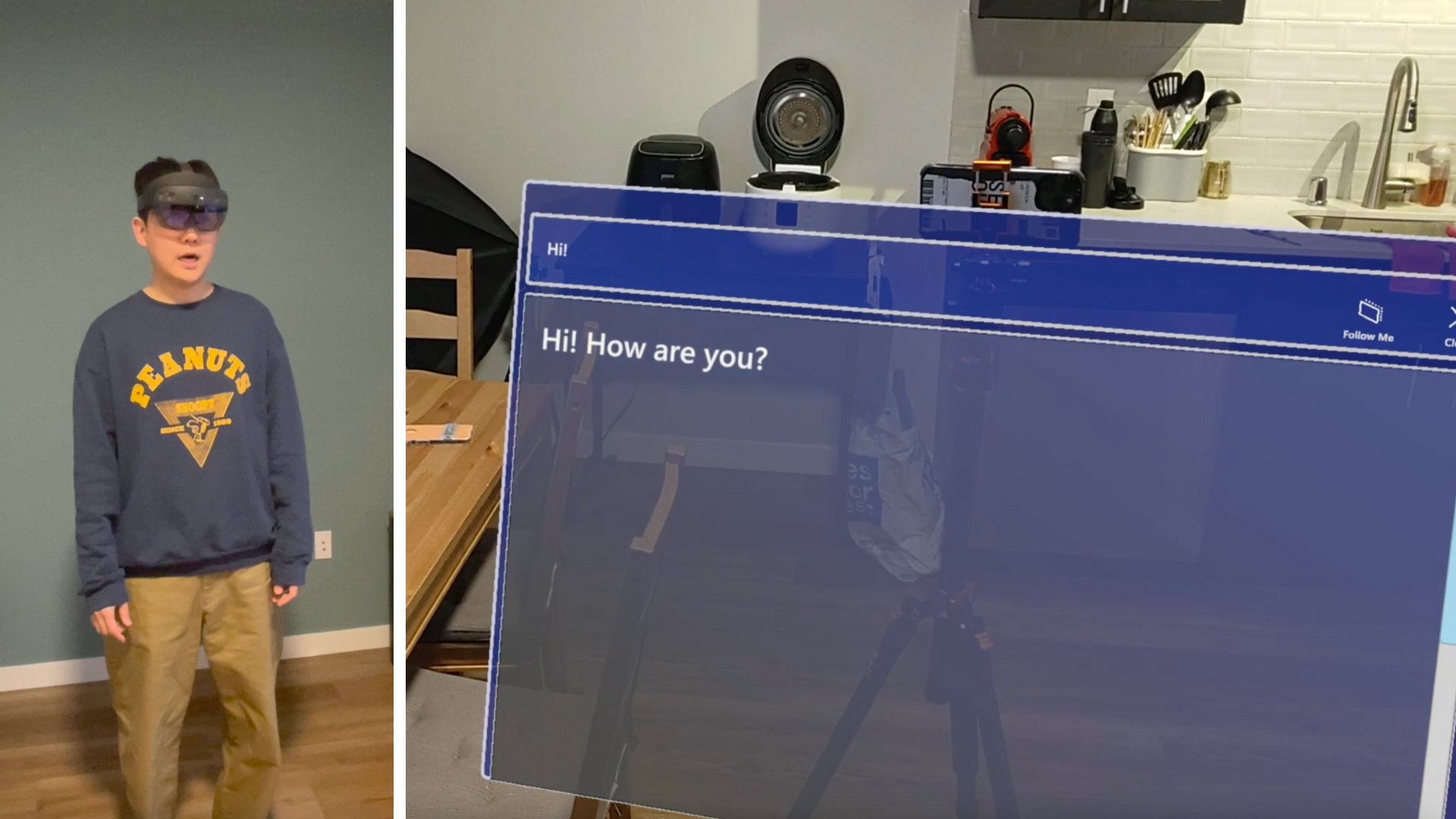

We designed and developed EmailAR using the HoloLens 2 as our wearable AR headset and GPT-4o as our LLM. To build the EmailAR software, we used Unity 2022.3.25f1 and the Mixed Reality Toolkit (MRTK) 2.8.3, both of which provide tools for rapidly creating AR experiences. Our first step was to connect the HoloLens 2 to Gmail through the Gmail API. This turned out to be complex, as the HoloLens 2 does not natively support the OAuth 2.0 protocol required for authenticating users and requesting permissions. To resolve this issue, we built a custom solution around a simple Node server. We exposed the server’s localhost endpoint via ngrok and used the resulting public URI as the redirect URI for the Gmail API. By doing so, we were able to establish a custom OAuth 2.0 authentication loop for the HoloLens 2.
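The two ends of such an authentication loop can be sketched roughly as follows. This is an illustrative Python sketch, not the Node server used in the project: it builds the Google consent URL that points back at a publicly tunneled redirect URI, then pulls the authorization code out of the redirect request. The function names, the example tunnel URL, and the choice of the read-only Gmail scope are assumptions made for illustration.

```python
from urllib.parse import urlencode, urlparse, parse_qs

# Google's OAuth 2.0 authorization endpoint.
AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"
# Assumption: a read-only Gmail scope. The scope EmailAR requested is not stated.
GMAIL_SCOPE = "https://www.googleapis.com/auth/gmail.readonly"


def build_auth_url(client_id: str, redirect_uri: str, state: str) -> str:
    """Return the consent-screen URL the user opens to grant access."""
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,  # the publicly reachable tunnel URL
        "response_type": "code",       # authorization-code flow
        "scope": GMAIL_SCOPE,
        "state": state,                # echoed back so forged redirects can be rejected
    }
    return f"{AUTH_ENDPOINT}?{urlencode(params)}"


def extract_code(redirect_request_url: str, expected_state: str) -> str:
    """Pull the authorization code out of the redirect the server receives."""
    query = parse_qs(urlparse(redirect_request_url).query)
    if query.get("state", [""])[0] != expected_state:
        raise ValueError("state mismatch: redirect did not originate from our request")
    if "code" not in query:
        raise ValueError("redirect did not include an authorization code")
    return query["code"][0]


# Hypothetical usage with a made-up tunnel hostname:
# url = build_auth_url("my-client-id", "https://example.ngrok.app/callback", "abc123")
# code = extract_code("https://example.ngrok.app/callback?code=4%2FxyZ&state=abc123", "abc123")
```

In a full flow, the server would then exchange the returned code for access and refresh tokens at Google's token endpoint and hand the access token to the headset; that step is omitted here.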

Once users grant appropriate permissions, EmailAR searches for unread emails in their inbox and displays the subject and sender of each unread email within a scrollable, always-available UI panel. Users can select any email they wish to read, which will then expand into a larger UI panel showing the sender, subject, and body of the email. Moreover, users are able to respond via speech commands, dictating a brief summary of their response. This summary is sent to GPT-4o, which generates an appropriate email reply.
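As a rough illustration of the unread-email lookup, the sketch below builds Gmail API request URLs for listing unread messages and fetching only their `From` and `Subject` headers, then reduces a returned message resource to the two fields shown in the panel. It is written in Python for brevity rather than in the Unity code the prototype uses; the helper names, the `in:inbox` filter, and the fallback strings are assumptions.

```python
from urllib.parse import urlencode

# Base path of the Gmail API messages collection for the signed-in user.
GMAIL_BASE = "https://gmail.googleapis.com/gmail/v1/users/me/messages"


def unread_list_url(max_results: int = 10) -> str:
    """URL for messages.list, restricted to unread mail in the inbox."""
    params = {"q": "is:unread in:inbox", "maxResults": max_results}
    return f"{GMAIL_BASE}?{urlencode(params)}"


def metadata_url(message_id: str) -> str:
    """URL for messages.get that returns only the From and Subject headers."""
    params = [
        ("format", "metadata"),
        ("metadataHeaders", "From"),
        ("metadataHeaders", "Subject"),
    ]
    return f"{GMAIL_BASE}/{message_id}?{urlencode(params)}"


def panel_row(message: dict) -> dict:
    """Reduce a Gmail message resource to the fields the scrollable panel shows."""
    headers = {
        h["name"].lower(): h["value"]
        for h in message.get("payload", {}).get("headers", [])
    }
    return {
        "id": message["id"],
        "sender": headers.get("from", "(unknown sender)"),  # hypothetical fallback text
        "subject": headers.get("subject", "(no subject)"),  # hypothetical fallback text
    }
```

Each request would carry the OAuth access token in an `Authorization: Bearer` header. Fetching headers only keeps the always-available panel lightweight; the full body can be requested once the user selects an email.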

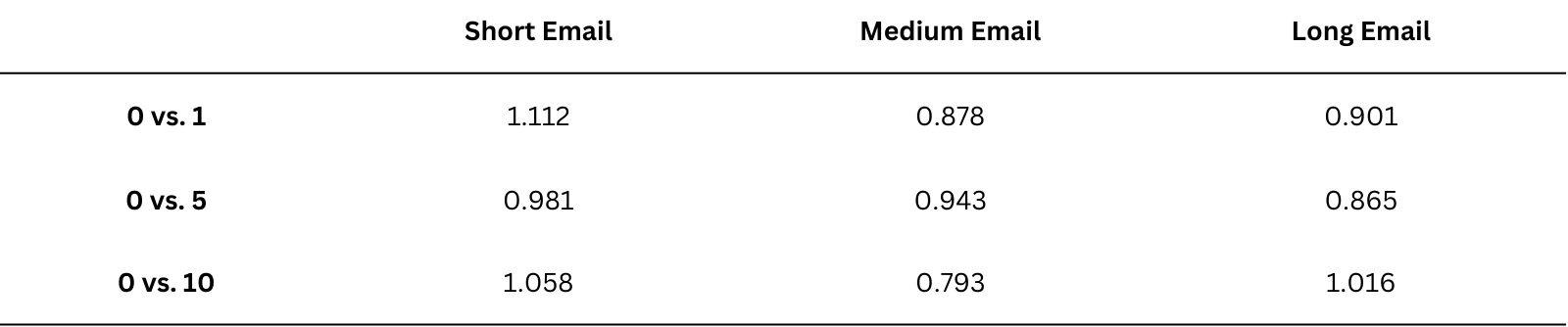

We wanted to understand whether an LLM can generate email responses that mimic users’ writing styles. To do so, we computed the BERTScore, a common metric for evaluating AI-generated text [13]. It measures the similarity between AI-generated text and a baseline text. We sampled the 13 most recent email-response pairs from five participants, in which they responded to someone’s email. The participants were the authors of this work, because uploading emails to an LLM can pose privacy risks. We randomly selected three of these email-response pairs as test cases to see whether an LLM can generate accurate responses to these emails. The three test emails vary in length: one short response, one medium, and one long. The other ten email-response pairs were used as contextual samples from which the LLM could infer the authors’ email writing styles. Initially, we supplied the LLM with zero email examples and gradually added them one at a time. Each participant asked EmailAR to compose email responses. A significant change in BERTScore would suggest that the addition of email examples enabled the LLM to write more author-like email responses.
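To give a feel for what the metric compares, here is a toy Python sketch of the greedy token matching at the core of BERTScore [13]. Each token of the generated text is matched to its most similar token in the reference text (precision) and vice versa (recall), and the two are combined into an F1 value. The two-dimensional vectors stand in for contextual BERT embeddings and are purely hypothetical. The optional idf weighting and baseline rescaling of the full metric are omitted, and this is not the evaluation code used in the project.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def greedy_match_f1(candidate, reference):
    """F1 of greedy matching between two non-empty lists of token embeddings."""
    # Precision: each candidate token takes its best match in the reference.
    precision = sum(max(cosine(c, r) for r in reference) for c in candidate) / len(candidate)
    # Recall: each reference token takes its best match in the candidate.
    recall = sum(max(cosine(r, c) for c in candidate) for r in reference) / len(reference)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy vectors: the candidate covers only one of the two reference "tokens".
reference = [[1.0, 0.0], [0.0, 1.0]]
candidate = [[1.0, 0.0]]
print(round(greedy_match_f1(candidate, reference), 3))  # prints 0.667
```

In practice one would more likely rely on the reference implementation published with the BERTScore paper than on a hand-rolled version like this.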

System Demo

We include a link to a YouTube Shorts video demonstrating how EmailAR works.

Results

Unsurprisingly, without any email samples, GPT-4o performed poorly at replicating users’ actual email responses. Interestingly, while providing email samples to the LLM improved its ability to construct user-like responses, having more samples did not necessarily result in better performance. An increase in BERTScore of 1 signals a significant improvement in similarity between an AI-generated email and a human-written email. When given one email sample, the BERTScore increased by around 1 compared to the no-sample condition for short, medium, and long emails. When given five or ten email samples, the BERTScore increased by similar amounts. While we only had five participants, our results indicate that an LLM does not need a large number of email samples. This means future EmailAR systems could include a short initial questionnaire asking users to respond to a few dummy emails. This way, an LLM can mimic the user’s writing style without the privacy risks of accessing real emails.
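One way the questionnaire idea could feed the LLM is sketched below in Python: a handful of (email, reply) pairs are placed ahead of the real request as few-shot context, followed by the incoming email and the user's spoken summary. The role/content message layout follows the common chat-completion format. The function name and the prompt wording are hypothetical and are not the prompts used in our prototype.

```python
def build_messages(incoming_email, spoken_summary, samples=()):
    """Assemble chat-style messages for drafting a reply.

    `samples` is a sequence of (email, reply) pairs, for example the user's
    answers to a few dummy emails, used only to convey writing style.
    """
    messages = [{
        "role": "system",
        # Hypothetical instruction text, written for illustration only.
        "content": (
            "You draft email replies in the user's own writing style. "
            "Expand the user's short spoken summary into a complete reply."
        ),
    }]
    # Few-shot context: each sample email is followed by the user's own reply.
    for email, reply in samples:
        messages.append({"role": "user", "content": f"Email:\n{email}"})
        messages.append({"role": "assistant", "content": reply})
    # The actual request: the incoming email plus the spoken summary.
    messages.append({
        "role": "user",
        "content": f"Email:\n{incoming_email}\n\nSummary of my reply:\n{spoken_summary}",
    })
    return messages


# Hypothetical usage with one dummy sample:
# build_messages("Can we meet Friday?", "yes, 2pm works",
#                samples=[("Lunch tomorrow?", "Sounds great, see you at noon!")])
```

Passing an empty `samples` sequence corresponds to the no-sample condition, and adding pairs one at a time reproduces the incremental setup described in the Methodology.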

Discussion

Positive Disability Principles

We further evaluated our project idea based on the positive disability principles:

- Is it ableist?

Our system is mostly not ableist, as it can support users with and without disabilities. It offers a new way to compose email responses that supports those with musculoskeletal disorders and helps everyone else avoid temporary disorders like carpal tunnel. However, wearable AR technology can be ableist as it is expensive and not widely available. We hope this technology will continue to become cheaper so that more people will have access. We address this point further in a later section.

- What parts of the work are accessible and what are not (for example, are both design tools, and their outputs accessible?)

The software system, including email viewing, writing, and editing, is accessible, as it minimizes the need to look down at screens and type on keyboards. People with musculoskeletal disorders can compose emails via speech input. However, the AR glasses hardware may not be accessible to some people, such as amputees, who cannot easily put on and manipulate glasses-like devices (e.g., see Ryan Hudson Peralta’s review of the latest AR technologies listed in the Related Work section).

- Are people with disabilities engaged in guiding this work? At what stages?

People with disabilities are involved in the planning stages. Our prototype is motivated by prior first-person accounts and interview studies, as highlighted in paragraph three of the project proposal. Additionally, Prof. Kat Steele was involved in designing our system. In this course, the primary goal is to understand whether an LLM can mimic a user’s email writing style. To minimize privacy issues of sending emails to an LLM, we may not involve people with disabilities for this course. However, once a prototype is available, we will bring in people with musculoskeletal disorders to try our system and provide feedback. Again, this may be outside the scope of this course.

- Is it being used to give control and improve agency for people with disabilities?

Our tool is designed to support people with musculoskeletal disorders to complete email tasks without having to look down at screen-based technologies like smartphones and laptops. Additionally, users can compose emails via speech instead of typing on a keyboard. Broadly, we hope that our technology will support everyone to be productive and contribute equally without barriers like musculoskeletal discomfort.

- Is it addressing the whole community (intersectionality, multiple disabled people, multiply disabled people)?

This is a key consideration of our project. Our prototype will support users with musculoskeletal disorders. Additionally, it can benefit those wanting to prevent temporary motor impairments such as carpal tunnel. It may also be helpful to BLV people, as they also often rely on speech-to-text solutions like Dictation for typing tasks. Although the system is initially designed to support people with upper body musculoskeletal disorders, it can certainly be useful to everyone.

Disability Model Analysis

Due to the time constraints of the quarter system at the University of Washington, EmailAR has several limitations. We critique EmailAR using three disability models: Language Justice, Leadership of Those Most Impacted from the 10 Principles of Disability Justice, and Disability Dongle.

Language Justice means making sure everyone has equal access to information, resources, and the ability to participate, regardless of the language they use. Components of EmailAR, including the email display UI panel and speech recognition, only work with English. Additionally, prior research suggests that LLMs trained primarily on English data, including ChatGPT, can struggle in multilingual settings [14]. As a result, EmailAR does not work as well in languages other than English, whereas it should support any language the user is comfortable with.

Leadership of Those Most Impacted, one of the 10 Principles of Disability Justice, means prioritizing and centering the voices, experiences, and leadership of people who are most affected by disability in decision-making and advocacy. For EmailAR, this means involving people with upper-body musculoskeletal disorders, both temporary and permanent, in the design process. While this was the original goal, we did not have enough time to conduct a user study. We instead evaluated whether an LLM can mimic users’ writing styles. EmailAR, as of now, goes against the Leadership of Those Most Impacted principle. As future work, we will further improve the EmailAR prototype, then conduct a user study with our stakeholders.

Throughout the course, I questioned whether EmailAR, and wearable AR headsets more broadly, could be a disability dongle. A disability dongle is a well-intentioned but poorly designed assistive technology created without sufficient understanding of the needs and realities faced by disabled people. Wearable AR headsets such as the HoloLens 2 are bulky and expensive, meaning assistive technologies built using such hardware cannot yet be deployed. Additionally, the disabled community has expressed concerns regarding reaffirmed dependency on others and the social stigma of wearing specialized technology [8]. However, we believe this limitation in hardware will likely be resolved with time. Even today, companies like XReal and Brilliant Labs are creating lightweight AR glasses that resemble typical glasses and sunglasses. As future work, we plan to use a more natural-looking, lightweight pair of AR glasses and conduct a field study or an autoethnographic study evaluating the usability of EmailAR in the real world.

Future Work

In summary, our future work is to:

- Implement EmailAR for a lighter pair of AR glasses.

- Improve the email display component of EmailAR.

- Add support for multiple languages.

- Conduct a more ecologically valid user study.

  - Multi-day field study with participants, OR

  - Multi-month autoethnographic study

Summary of Learnings & Conclusion

In this article, we present EmailAR, an email composition solution for wearable AR that leverages an LLM to facilitate speech-based email writing for people with upper-limb musculoskeletal disorders. If done correctly, the process of email composition can be boiled down to looking ahead and dictating a verbal summary of the email content. Additionally, in our preliminary evaluation, we concluded that an LLM can write user-like emails with a small number of email samples. However, it is critical to design this technology carefully by following first-person accounts and different disability models. We are excited to continue this project and potentially submit a paper to a human-computer interaction (HCI) conference.

References

1. Ratan, Z. A., Parrish, A. M., Zaman, S. B., Alotaibi, M. S., & Hosseinzadeh, H. (2021). Smartphone addiction and associated health outcomes in adult populations: A systematic review. International Journal of Environmental Research and Public Health, 18(22), 12257.

2. Namwongsa, S., Puntumetakul, R., Neubert, M. S., & Boucaut, R. (2019). Effect of neck flexion angles on neck muscle activity among smartphone users with and without neck pain. Ergonomics, 62(12), 1524-1533.

3. Naftali, M., & Findlater, L. (2014, October). Accessibility in context: Understanding the truly mobile experience of smartphone users with motor impairments. In Proceedings of the 16th International ACM SIGACCESS Conference on Computers & Accessibility (pp. 209-216).

4. Li, F. M., Liu, M. X., Zhang, Y., & Carrington, P. (2022, October). Freedom to choose: Understanding input modality preferences of people with upper-body motor impairments for activities of daily living. In Proceedings of the 24th International ACM SIGACCESS Conference on Computers and Accessibility (pp. 1-16).

5. World Health Organization. Musculoskeletal health. https://www.who.int/news-room/fact-sheets/detail/musculoskeletal-conditions

6. Centers for Disease Control and Prevention. Work-Related Musculoskeletal Disorders & Ergonomics. https://www.cdc.gov/workplacehealthpromotion/health-strategies/musculoskeletal-disorders/index.html

7. Malu, M., & Findlater, L. (2015, April). Personalized, wearable control of a head-mounted display for users with upper body motor impairments. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 221-230).

8. McNaney, R., Vines, J., Roggen, D., Balaam, M., Zhang, P., Poliakov, I., & Olivier, P. (2014, April). Exploring the acceptability of Google Glass as an everyday assistive device for people with Parkinson’s. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 2551-2554).

9. Ryan Hudson Peralta. Apple Vision Pro Accessibility Features: Navigating Without Hands. Equal Accessibility. https://www.youtube.com/watch?v=dncb-FlUhlM

10. Valencia, S., Cave, R., Kallarackal, K., Seaver, K., Terry, M., & Kane, S. K. (2023). “The less I type, the better”: How AI language models can enhance or impede communication for AAC users. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (pp. 1-14).

11. Goodman, S. M., Buehler, E., Clary, P., Coenen, A., Donsbach, A., Horne, T. N., … & Morris, M. R. (2022, October). LaMPost: Design and evaluation of an AI-assisted email writing prototype for adults with dyslexia. In Proceedings of the 24th International ACM SIGACCESS Conference on Computers and Accessibility (pp. 1-18).

12. Bertrand, T., Moccozet, L., & Morin, J. H. (2018, July). Augmented human-workplace interaction: Revisiting email. In 2018 22nd International Conference Information Visualisation (IV) (pp. 194-197). IEEE.

13. Zhang, T., Kishore, V., Wu, F., Weinberger, K. Q., & Artzi, Y. (2019). BERTScore: Evaluating text generation with BERT. arXiv preprint arXiv:1904.09675.

14. Zhang, X., Li, S., Hauer, B., Shi, N., & Kondrak, G. (2023). Don’t trust GPT when your question is not in English. arXiv preprint arXiv:2305.16339.