Engaging with Children’s Artwork in Mixed-Ability Families

Introduction

Creating art is a fundamental part of childhood, and sharing that art is cherished by both children and parents as a way to connect and bond. For children, creating art is a way to express their emotions, imagination, and experiences. For parents, viewing and discussing their children’s artwork is a way to understand their child’s world, encourage their creativity, and foster emotional connections.

However, for parents who are blind or have low vision (BLV), engaging with their sighted children’s artwork poses unique challenges. Traditional methods of interacting with visual art rely heavily on sight, which can make it difficult for BLV parents to fully appreciate and discuss their children’s creations. This gap in engagement can impact the emotional connection and understanding between parents and their children, potentially affecting the child’s confidence and the parent-child relationship.

Our project aims to address this challenge by developing an iOS app that uses AI-generated descriptions to help BLV parents engage with their children’s artwork. The app uses GPT APIs to create detailed, vivid descriptions from pictures of children’s artwork, which parents can then explore using VoiceOver, high-contrast, and magnification settings. This tool not only facilitates self-exploration of the artwork but also enables BLV parents to have meaningful conversations with their children about their creations, helping to bridge the gap in engagement described above.

The app’s design draws on formative research with BLV parents and their children so that it meets the specific needs of mixed visual ability families. A planned human-in-the-loop system will allow children and parents to edit the AI-generated descriptions, ensuring accuracy and personal relevance. This collaborative approach is intended both to improve the quality of the descriptions and to empower parents and children to contribute to the understanding and appreciation of the artwork. By ensuring that BLV parents can actively engage with and discuss their children’s artistic expressions, we aim to enhance the emotional bonds within mixed-ability families and support the developmental growth of children through positive reinforcement and shared experiences.

In addition to addressing the immediate needs of BLV parents, our app also contributes to the broader goals of accessibility and inclusion. By demonstrating how technology can be used to create inclusive experiences, we hope to inspire further innovations that support diverse needs and promote equity in family interactions and beyond. With this project, we envision a future where all parents, regardless of their visual abilities, can fully participate in their children’s creative journeys, fostering a more inclusive and understanding world for everyone.

Figure 1: Images of different children’s artworks

Positive Disability Principles

1. Our project is not ableist and aims to make children’s artwork more accessible to BLV parents. The formative work guiding this project ensures that our technology solution is not a “disability dongle”.

2. BLV family members and their children have guided this work, primarily through the findings from formative studies as previously mentioned. In the future, we hope to co-design and evaluate any technology outputs of this work with mixed visual ability families.

3. Our project aims to improve the agency of people with disabilities by providing BLV parents a supplemental understanding of their children’s artwork which they can use to further engagements with their children.

4. While we are not addressing multiply disabled people (such as deafblind individuals) through this work, as that would require more formative understanding, we have consulted with multiple BLV individuals and their children. Additionally, the formative work has looked at a wide range of relative-child relationships: parent-child, grandparent-grandchild, great-grandparent-great-grandchild, and aunt/uncle-niece/nephew. This helps ensure that our solutions work not only for BLV parents and their children but also for other BLV-sighted family relationships.

5. All technology produced through this work is accessible to BLV individuals. We tested our app with VoiceOver and other accessibility settings such as magnification and high contrast. We also avoided cognitively overloading designs and used plain language wherever possible in our application. During our design phase, we used standard digital design tools such as PowerPoint and Figma, which have been audited and tested for accessibility.

Related Work

Several projects and studies have informed our approach to making children’s artwork accessible to BLV parents.

User-Centered Design in Accessibility

User-centered design principles are crucial in accessibility projects. Previous studies emphasize the importance of involving end-users in the design process to create solutions that truly meet their needs. Hence, the formative research that informs this project was conducted with BLV parents and their children, helping ensure that the app is both usable and valuable in mixed-ability settings.

AI-Generated Descriptions

Previous work has explored the use of AI to generate descriptions for visual content. Projects like Microsoft’s Seeing AI and Google’s Lookout provide insights into the capabilities and limitations of AI in generating useful descriptions for visually impaired users.

Technology in Mixed-Ability Family Interactions

Prior research in mixed-ability family settings includes work by S. Park, C.T. Cassidy, and S.M. Branham (“‘It’s All About the Pictures:’ Understanding How Parents/Guardians With Visual Impairments Co-Read With Their Child(ren),” Proceedings of the 25th International ACM SIGACCESS Conference on Computers and Accessibility, 2023) and by K.M. Storer and S.M. Branham (“‘That’s the Way Sighted People Do It’: What Blind Parents Can Teach Technology Designers About Co-Reading with Children,” Proceedings of the 2019 on Designing Interactive Systems Conference, 2019). Both explore the design of technology for co-reading interactions between BLV parents and their children, approaching the BLV parent’s perspective through observational and interview studies, as we do in our work. However, prior research has not investigated technology that facilitates a mixed visual-ability family’s interaction around children’s artwork.

Formative Work [In Submission]

A member of our team led formative work, currently in submission (Anonymous Authors, “Engaging with Children’s Artwork in Mixed Visual Ability Families,” in Proceedings of the ACM SIGACCESS, 2024), that investigated four questions: what motivates BLV relatives to engage with their children’s artwork; what practices BLV relatives and their children currently use to discuss and adapt the child’s artwork; how BLV adults and sighted children react to AI interpretations of the child’s artwork; and how families collectively respond to technology design probes. These research questions were explored through two studies: the first with 14 BLV individuals, and the second with 5 groups of BLV relatives and sighted children. The studies consisted of semi-structured interviews, sessions using two different AI tools to describe children’s artwork (provided by the BLV family members), and two in-person design probes to evaluate preferred technology representation mediums for nonvisual understanding of children’s artwork. Through these studies, researchers found that BLV family members are motivated by a desire to emotionally connect and bond with their child, as well as a need to understand their child’s developmental growth and skills. Many BLV individuals found some benefit in AI descriptions, saying that some information was better than none and that the descriptions enabled them to have a conversation with their children or ask them questions. However, some parents did not like it when the AI used simplistic or reductive language to explain their child’s art. These findings, specifically those about the role and language of AI, have heavily informed the present work.

Methodology and Evaluation

Project Design

We designed an iOS app that uses ChatGPT to generate detailed descriptions from pictures of children’s artwork. The app includes essential accessibility features such as VoiceOver compatibility, high-contrast visual settings, and an intuitive navigation system to ensure ease of use for BLV users. The design process was iterative, involving multiple rounds of feedback from BLV parents and their children to ensure the app met their specific needs.

Implementation

Our major contributions in the final project include:

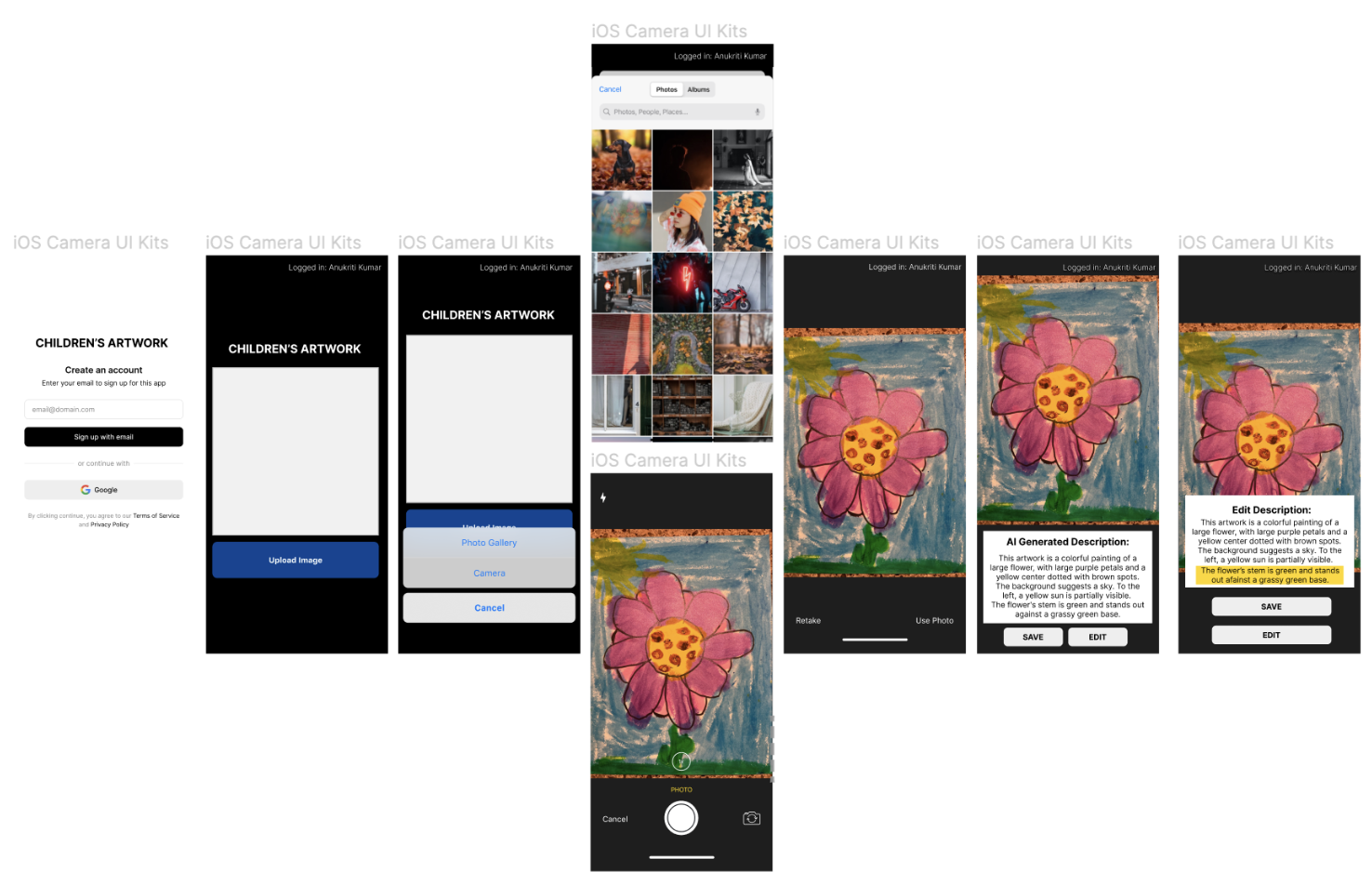

- Finalizing Designs: We completed the designs for our app, including detailed interaction flows for users. This involved creating wireframes and prototypes using accessible digital design tools like Figma, which were audited for accessibility (Shown in Figure 2).

- Prompt Engineering: We performed extensive prompt engineering to ensure comprehensive and accurate artwork descriptions. We validated the relevance of the prompt by comparing outputs from a custom GPT model (ArtInsight) and GPT-4o.

Final Prompt:

“This assistant helps blind parents understand their childrens visual artwork. It provides detailed, respectful descriptions of the artwork, focusing on descriptive aspects such as orientation, scenery, number of artifacts or figures, main colors, and themes. The assistant avoids reductive or overly simplifying language that minimizes the childs effort and does not assume interpretations if uncertain. For example, it says, ‘The person has a frown, and there are tears falling from their eyes’ instead of ‘The person appears to be sad.’ When given feedback from the parent or child about the artwork, the assistant honors and integrates this perspective into its descriptions and future responses. The assistant maintains a respectful, supportive, and engaging tone, encouraging open dialogue about the artwork. This assistant uses a casual tone but will switch to a more formal tone if requested by the parent or child. The assistant avoids making assumptions about names or identities based on any text in the artwork.”

- Core functionality: We built the core functionality of the app to process images and generate descriptions using ChatGPT. This included integrating with the iPhone camera and third-party libraries to upload images and process them.

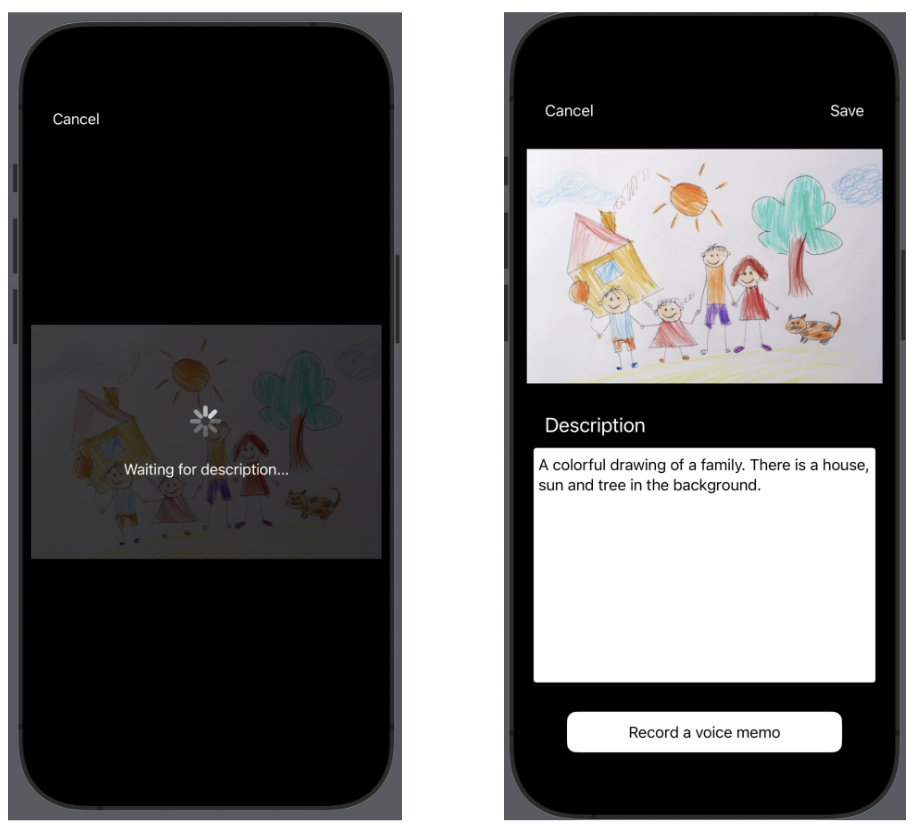

- Voice Memo Integration: We incorporated a UI feature that allows children to record voice memos describing their artwork, which can be saved and reviewed by BLV parents (The UI of our app is shown in Figure 3).

- Human-in-the-loop system: Although out of scope for the current phase, we plan to implement a system that allows users to edit AI-generated descriptions, ensuring they are accurate and personally relevant.
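As an illustration of how the final prompt and a photographed artwork could be combined into a single request, here is a minimal sketch. It assumes a chat-completions-style payload with an inline base64 image; the report does not state which endpoint the app calls, and the function name `build_description_request`, the user message text, and the JPEG assumption are hypothetical. The sketch only builds the payload and sends nothing over the network.

```python
import base64


def build_description_request(image_bytes: bytes, system_prompt: str,
                              model: str = "gpt-4o") -> dict:
    """Build a chat-style request asking for a description of one artwork photo.

    Hypothetical helper: the payload layout is an assumption about how the
    prompt and image might be sent, not the app's confirmed implementation.
    """
    # Encode the photo so it can travel inside the JSON body as a data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            # The system message carries the full "Final Prompt" text.
            {"role": "system", "content": system_prompt},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Please describe this artwork."},
                    {
                        "type": "image_url",
                        # Assumes the camera capture is JPEG-encoded.
                        "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                    },
                ],
            },
        ],
    }
```

Keeping the prompt in the system message means it applies to every turn, so later feedback from the parent or child can be appended as further user messages without repeating the instructions.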

Salient Features of the iOS app

- Integrates with iOS accessibility settings (success criterion)

- Additional, custom VoiceOver and Dynamic Type support (success criterion). Dynamic Type is an iOS/macOS feature that automatically scales text according to user preference (including large, accessible fonts).

- Integrates with iPhone camera via third-party library

- Core functionality: uploads the image, asks the custom GPT for a description, and polls for the response
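The "polls for the response" step in the list above can be sketched as a simple loop with a timeout. This is a minimal illustration under stated assumptions, not the app's actual code: `poll_for_description` and its parameters are hypothetical, and `fetch` stands in for whatever call checks whether the description is ready (returning `None` while it is still pending).

```python
import time
from typing import Callable, Optional


def poll_for_description(fetch: Callable[[], Optional[str]],
                         interval_s: float = 1.0,
                         timeout_s: float = 60.0,
                         sleep: Callable[[float], None] = time.sleep) -> str:
    """Call `fetch` repeatedly until it returns a description or time runs out."""
    deadline = time.monotonic() + timeout_s
    while True:
        result = fetch()
        if result is not None:
            return result
        # Give up with a clear error rather than waiting forever.
        if time.monotonic() >= deadline:
            raise TimeoutError("No description was returned before the timeout")
        sleep(interval_s)
```

Raising a `TimeoutError` lets the calling screen announce a spoken, retryable error instead of leaving a VoiceOver user waiting on a silent spinner. The `sleep` parameter is injectable so the loop can be tested without real delays.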

Metrics and Validation

These are some metrics for success that we considered:

- VoiceOver Compatibility: We conducted manual tests to ensure that all interactive elements are correctly labeled and accessible via VoiceOver.

- Contrast Ratio: We verified that the app meets the minimum contrast ratio requirements (4.5:1 for normal text and 3:1 for large text) as per the Web Content Accessibility Guidelines (WCAG).

- Error Handling: We tested the app for robustness by inputting various types of images, including very complex, very simple, and irrelevant images, and ensured that the app handles errors gracefully without crashing.

- AI Descriptions Match Formative Work Guidelines: We validated that our custom GPT described all elements of the artwork in appropriate language and was not reductive, simplistic, or presumptive.
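The contrast-ratio check in the list above can be reproduced from the WCAG 2.x definitions of relative luminance and contrast ratio. The sketch below is one way to compute it for 8-bit sRGB colors; the function names are ours, and the report does not say which tool was actually used for verification.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance: 0.0 for black, 1.0 for white."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """Contrast ratio (L_lighter + 0.05) / (L_darker + 0.05), from 1 to 21."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def meets_wcag_aa(foreground: RGB, background: RGB, large_text: bool = False) -> bool:
    """Apply the 4.5:1 (normal text) or 3:1 (large text) minimum."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

For example, black text on a white background yields the maximum ratio of 21:1, while the gray `(119, 119, 119)` on white falls just short of 4.5:1 and would pass only as large text.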

Success Criteria for AI Descriptions

We evaluated the AI descriptions of 10 different artworks created by children using GPT-4o and our custom GPT (ArtInsight). We measured the number of elements identified, usage of reductive language, simplistic descriptions, and presumptive statements.

Performance Comparison:

- ArtInsight performed better than GPT-4o on 6/10 drawings.

- GPT-4o performed better than ArtInsight on 1/10 drawings.

- Both (ArtInsight and GPT-4o) performed equally on 3/10 drawings.
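The report does not state how the four measures were combined into a per-drawing verdict. The sketch below shows one possible rule, purely as an assumption: the description that identifies more elements wins, and ties are broken by fewer flagged language issues. The class, field, and function names are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class DescriptionScore:
    """Manual ratings for one AI description of one drawing."""
    elements_identified: int
    reductive_phrases: int
    simplistic_phrases: int
    presumptive_statements: int

    @property
    def language_issues(self) -> int:
        return (self.reductive_phrases + self.simplistic_phrases
                + self.presumptive_statements)


def better_description(art_insight: DescriptionScore, gpt_4o: DescriptionScore) -> str:
    """Assumed rule: more elements wins; ties go to fewer language issues."""
    a = (art_insight.elements_identified, -art_insight.language_issues)
    b = (gpt_4o.elements_identified, -gpt_4o.language_issues)
    if a > b:
        return "ArtInsight"
    if b > a:
        return "GPT-4o"
    return "Equal"


def tally(pairs: Iterable[Tuple[DescriptionScore, DescriptionScore]]) -> Counter:
    """Count verdicts across drawings; each pair is (ArtInsight, GPT-4o)."""
    return Counter(better_description(a, g) for a, g in pairs)
```

Applied to ten rated drawings, `tally` would return counts in the same form as the comparison above (wins for each model plus ties). A different weighting of the four measures could change individual verdicts.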

These metrics and validation steps ensured that our app met the necessary accessibility standards and provided meaningful, accurate descriptions of children’s artwork.

These are some other metrics for success (Out of Scope):

- Usability: We plan to evaluate our app through user testing sessions with BLV parents.

- Accuracy: We plan to compare AI-generated descriptions with a set of reference descriptions created by sighted individuals.

- Engagement: We plan to measure the frequency and quality of interactions between parents and children facilitated by the app.

Figure 2: Different UI screens indicating different states of the Children’s artwork app

Figure 3: Screenshots of the app interface showing screens to capture an artwork and show the AI-generated description

Results

The project has made significant strides in developing a tool that addresses a critical need for BLV parents, enabling them to engage more deeply with their children’s artwork. The initial technical achievements and validation of core accessibility features provide a solid foundation for further refinement and user testing.

Technical Achievements

- Accessible Design: The app’s design adheres to accessibility principles, ensuring that BLV parents can navigate and use the app effectively. Features like VoiceOver compatibility and high-contrast settings have been tested and validated.

- Accurate Descriptions: The custom GPT model (ArtInsight) has demonstrated the ability to generate detailed and respectful descriptions of children’s artwork, aligning closely with the guidelines established from our formative research.

- Robust Error Handling: The app has proven to handle a variety of image inputs without crashing, maintaining stability and reliability.

Disability Model Analysis

Leadership of Those Most Impacted

Our project is guided by findings from formative research consisting of interviews with BLV family members and their sighted children. As such, our project places the perspective of BLV relatives at the center of our work.

Recognizing Wholeness

While there is a large body of valuable research dedicated to making productivity tools more accessible to people with visual impairments, it is important to recognize that all of our lives encompass so much more than work. With that in mind, this project focuses on aspects of life outside of work and productivity.

Interdependence

The formative work for this project observed interactions between BLV relatives and their sighted children, recognizing that artwork engagement in families is an interdependent practice in which the relative and child support each other.

Learnings and Future Work

Learnings

Through this project, we learned the importance of involving end-users in the design process. Feedback from BLV parents and their children was invaluable in shaping the app’s features and ensuring its relevance. We learned how to build an accessible iOS app while integrating GPT APIs. We defined various success metrics for validating our work and recognized the potential of AI in generating meaningful descriptions. However, we also noted the necessity of human oversight to ensure accuracy and personal connection.

Future Work

Future work will focus on expanding the app’s capabilities to include more interactive features, such as voice memos from children describing their artwork. We also plan to explore integrations with other accessibility tools and conduct long-term studies to assess the app’s impact on parent-child relationships. Additionally, we aim to refine the prompts to improve the accuracy and emotional depth of the descriptions.

We will focus primarily on implementing the human-in-the-loop system, conducting extensive user testing, and continuously improving the app based on user feedback. This iterative process will ensure that the app remains relevant, effective, and truly beneficial for BLV parents and their children. Specific next steps include:

- Refining the instructions given to the AI for higher accuracy and emotional depth in descriptions.

- Incorporating the child’s voice descriptions into the AI-generated descriptions for a more personalized experience.

- Exploring the app’s role as an interactive agent that suggests questions or prompts users to collect more data.

References

Arnavi Chheda-Kothary, Melanie Kneitmix, and Anukriti Kumar