SoundDrift: A LiDAR-Based Spatial Audio Experience

Creating Physically-Accurate Sound Environments in VR

Sai Sunku and Harshitha Rebala

University of Washington

Abstract

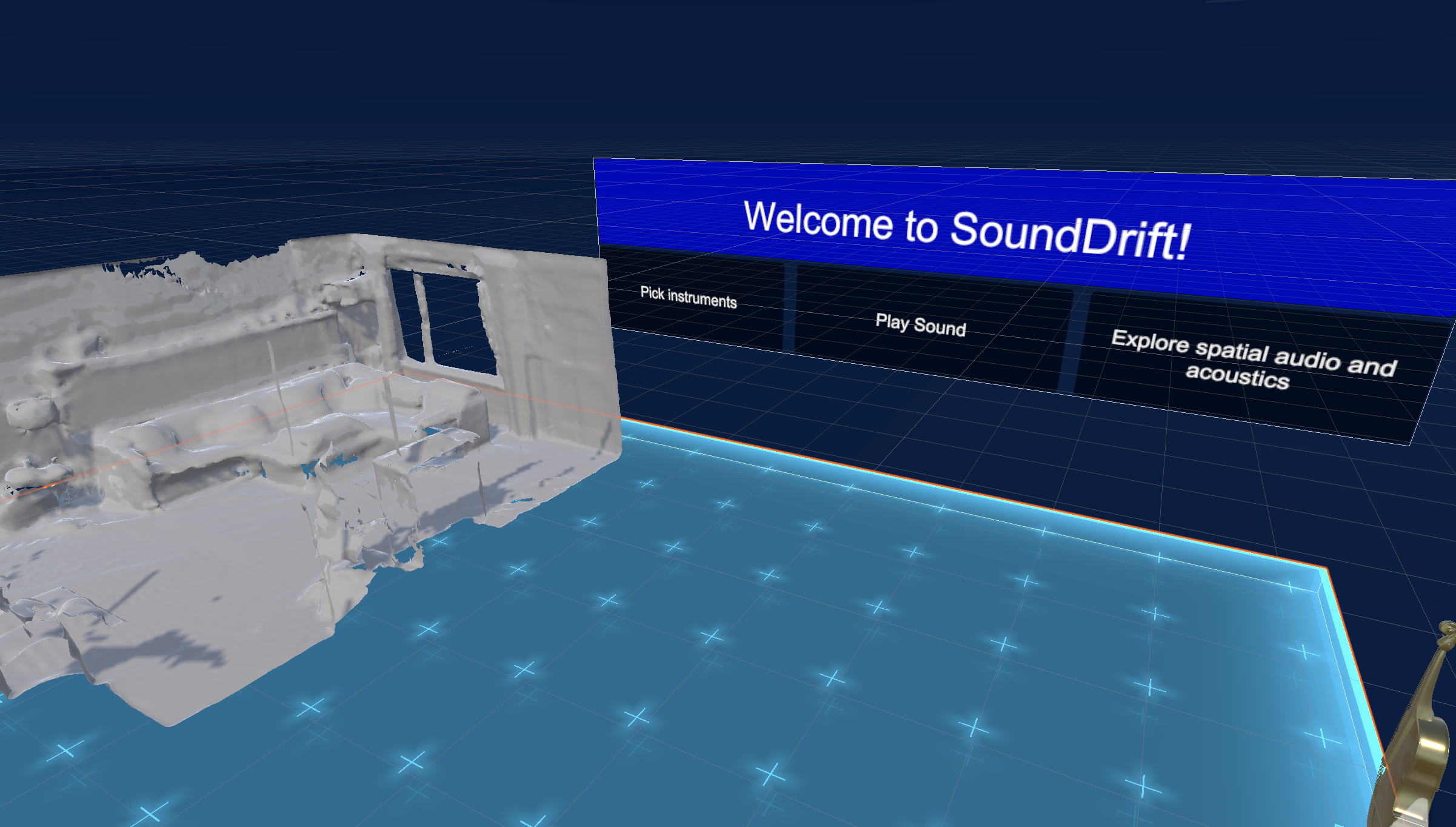

SoundDrift creates immersive spatial audio environments in VR based on real-world acoustic properties captured through LiDAR scanning. Using Apple's LiDAR sensor and Meta Quest VR headsets, our system captures the physical geometry of a space, analyzes its material properties, and simulates how sound waves interact with its surfaces to create physically accurate audio environments. Unlike spatial audio methods that rely on generic, predefined room profiles, our approach generates environment-specific audio responses that model real-world acoustics, including reflections, reverberation, and material-based absorption. Users can place virtual instruments within these reconstructed environments to hear how they would sound in the physical space, a valuable feature for musicians, venue planners, and acousticians evaluating spaces before physical setup. SoundDrift combines LiDAR-based spatial reconstruction with acoustic ray tracing to create an interactive platform for realistic sound experimentation in digital replicas of physical environments.

Our system consists of two major components: an iOS LiDAR scanner app that captures and processes environmental data, and a VR application running on the Meta Quest headset. The iOS app captures detailed spatial information about the environment, including geometry and material properties, while the Quest headset provides the immersive spatial audio experience. Users can place virtual sound sources within the space and hear sound propagate as it would in that physical environment, with each material absorbing and reflecting sound differently.

Introduction

In virtual reality, while visual fidelity often takes precedence, audio plays a crucial role in creating truly immersive experiences. Accurate spatial audio significantly impacts our sense of presence and spatial awareness in virtual environments. LiDAR technology presents a unique opportunity to advance spatial audio fidelity by precisely capturing the geometric and material properties of real spaces that directly influence acoustic behavior.

Our project uses LiDAR scanning to create physically accurate spatial audio environments in VR. LiDAR is particularly well suited to this application because it captures high-precision depth data from which both the geometry of a space and its surface types can be derived, including the overall dimensions and subtle surface variations. These details are critical for accurate acoustic modeling, as even small geometric features can significantly affect how sound travels and reflects within an environment. Building detailed 3D reconstructions from real-world LiDAR scans lets us provide a level of audio realism that traditional spatial audio methods, which rely on predefined acoustic profiles or manual environmental modeling, cannot achieve.

Building upon established spatial audio rendering techniques, our project uses these highly detailed environmental scans to inform acoustic properties. Unlike approaches that rely on approximate models or manual acoustic design, our method directly translates the physical properties of scanned environments into their acoustic equivalents. The cross-device implementation, utilizing Apple's LiDAR sensors for data acquisition and the Meta Quest for VR rendering, allows users to capture any space and experience its unique acoustic signature in VR.

Our hypothesis was that LiDAR-informed acoustic modeling could create spatial audio so realistic that users could identify environments based solely on acoustic properties. Our initial blind listening tests support this hypothesis in part: users correctly identified spatial characteristics such as source distance and direction from audio alone, and reported that they could "feel" the size and materials of a space before seeing it. These findings suggest that LiDAR-based acoustic modeling opens new possibilities for more immersive experiences, such as real-time adaptive audio environments and physics-based acoustic simulations.

Contributions

- We developed a pipeline that transforms raw iPhone LiDAR point cloud data into acoustically accurate 3D environments. It applies outlier removal and downsampling before converting the data into polygonal mesh models that preserve detail in complex geometries while remaining computationally efficient for VR rendering.

- Despite never having worked with Unity before, we designed and implemented an intuitive, user-friendly Unity-based VR application for the Meta Quest platform. It allows users to place various virtual instruments within reconstructed spaces, play distinct music samples specific to each instrument, and experience how these sounds interact with the unique acoustic properties of the environment.

- We created a network communication system between our iPhone and the Meta Quest headset that enables the live transfer of environmental data from LiDAR scans to the VR application, allowing for the visualization and acoustic simulation of captured spaces.

Method

System Overview

Our project consists of two main components: an iOS application that captures and processes LiDAR data, and a VR application running on a Meta Quest headset. These components work together to create a physically accurate spatial audio experience that reflects the acoustic properties of real environments. The overall workflow involves capturing the physical space, analyzing its acoustic properties, and simulating sound within that space in VR.

We initially tried to process real-time LiDAR point cloud data directly within Unity, but this caused significant lag. We therefore switched to this two-component approach, in which the iPhone handles capture and processing and the headset handles rendering.

Mapping Environment

The core of our approach is capturing an accurate 3D representation of physical environments, including both geometric and material properties. This environmental data provides the necessary information to model how sound interacts with the space:

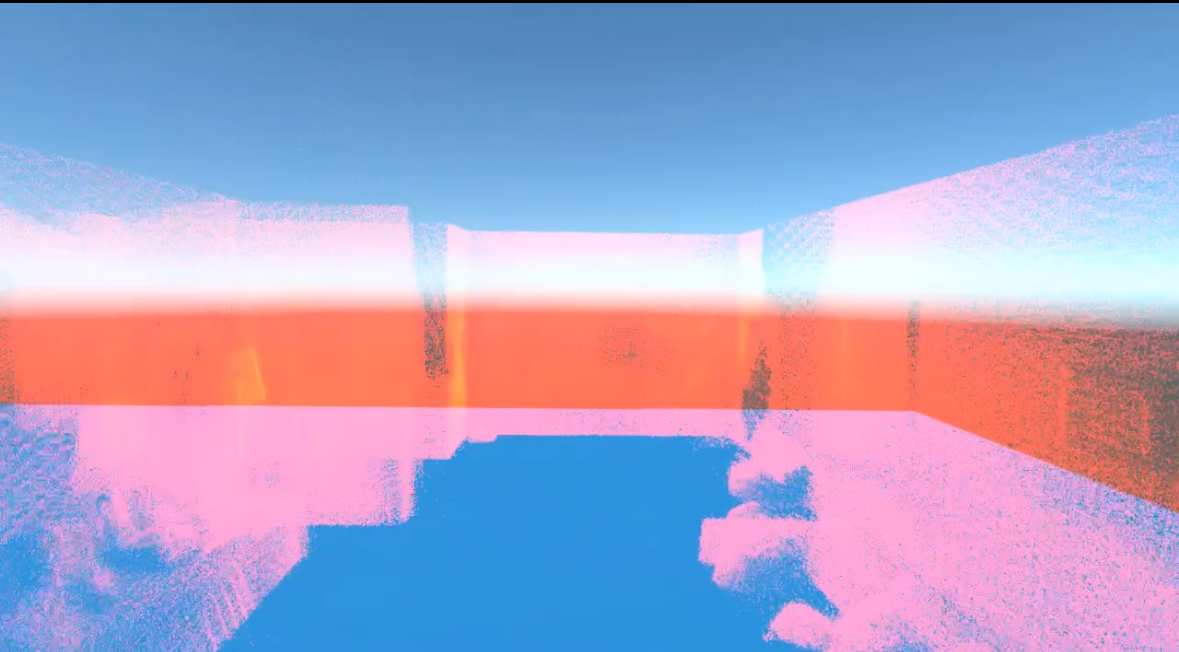

- We capture the spatial structure of the environment using the iPhone's LiDAR sensor, which generates a dense point cloud representation.

- To make this raw data useful for acoustic modeling, we process it with outlier removal, filtering, and downsampling.

- We use ARKit's scene reconstruction capabilities to generate a detailed mesh of the physical environment with classified surfaces (walls, floor, ceiling, objects). We extend ARKit's classification with acoustic material profiles that define how different surfaces interact with sound waves.

- We post-process the mesh so that it works better for sound simulation, including surface normal calculation, smoothing, and geometric simplification.
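ARKit can attach one of a small set of labels (wall, floor, ceiling, table, seat, window, door, or none) to each reconstructed mesh face. The sketch below shows one way such labels could be extended into acoustic material profiles and reduced to a quantity a simulation can use: the equivalent absorption area A = sum(S_i * alpha_i), where S_i is a face's area and alpha_i its absorption coefficient. It assumes a single broadband coefficient per class; the profile table, its coefficients, and the function names are hypothetical illustrations, not our measured values or our actual implementation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AcousticMaterial:
    name: str
    absorption: float  # fraction of incident sound energy absorbed (0..1), one broadband value


# Hypothetical profile table keyed by ARKit mesh classification names.
# The coefficients are rough illustrative values, not measured data.
MATERIAL_PROFILES = {
    "wall": AcousticMaterial("painted drywall", 0.05),
    "floor": AcousticMaterial("carpet", 0.30),
    "ceiling": AcousticMaterial("acoustic tile", 0.60),
    "table": AcousticMaterial("wood", 0.10),
    "seat": AcousticMaterial("upholstery", 0.50),
    "window": AcousticMaterial("glass", 0.04),
    "door": AcousticMaterial("wood", 0.10),
    "none": AcousticMaterial("unknown", 0.10),
}


def triangle_area(a, b, c):
    """Half the length of the cross product of two edge vectors."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def absorption_area(vertices, faces, face_classes):
    """Equivalent absorption area in square metres: sum of face area times absorption.

    Labels missing from the table fall back to the "none" profile.
    """
    total = 0.0
    for (i, j, k), label in zip(faces, face_classes):
        material = MATERIAL_PROFILES.get(label, MATERIAL_PROFILES["none"])
        total += triangle_area(vertices[i], vertices[j], vertices[k]) * material.absorption
    return total
```

Keeping a fallback profile matters in practice, because many scanned faces end up unclassified.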

Cross-Device Transfer

Our project also implements a network communication pipeline between the iOS LiDAR device and the Quest headset. Over a local network connection, the iOS app transmits the processed environmental data, including mesh geometry and acoustic material properties, allowing the VR application to reconstruct the physical environment along with its acoustic characteristics.

Acoustic Simulation

The Quest application integrates our acoustic simulation with spatial audio capabilities. We leverage Meta's XR Audio SDK to position sound sources in 3D space, applying our computed acoustic properties to modify how sounds propagate through the environment.

Implementation Details

Hardware Components

Our implementation uses the following hardware:

- iPhone 14 Pro with built-in LiDAR sensor

- Meta Quest 3 VR headset

- Wireless router for local network communication

- Development computer (MacBook Pro with M1 chip)

LiDAR Processing Pipeline

Our pipeline builds on Apple's ARKit scene reconstruction features, adding our own custom processing focused on creating realistic sound environments. We take LiDAR data and transform it into VR environments where sound behaves naturally, through several key stages:

ARKit Scene Reconstruction and Enhancement

- Mesh Capture: We use ARKit to automatically generate a 3D mesh of the room. This gives us the basic structure of walls, floors, and objects without having to write complex scanning code ourselves.

- Cleaning Up the Data: Real-world scans are messy, so we apply several cleanup techniques:

  - Outlier Removal: We filter out points that are clearly errors (like floating bits that don't belong to any surface).

  - Voxel Grid Filtering: We divide the space into small 3D cubes (voxels) and simplify the points within each cube, which reduces data size while keeping the important details.

  - Adaptive Downsampling: We keep more detail in complex areas (like furniture edges) and simplify flat areas (like walls), balancing quality and performance.
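The first two cleanup steps can be sketched in a few lines. This is an illustration under assumptions, not our production code: for outlier removal we show statistical outlier removal (a point is discarded when its mean distance to its k nearest neighbours is unusually large, similar in spirit to Open3D's `remove_statistical_outlier`) as one concrete choice, and for voxel grid filtering we replace each voxel's points with their centroid. Parameter values are placeholders, and adaptive downsampling is omitted.

```python
import math
from collections import defaultdict


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Drop points whose mean distance to their k nearest neighbours is more than
    std_ratio standard deviations above the average of that quantity over the cloud.

    Uses a brute-force O(n^2) neighbour search: fine for a sketch, too slow for full scans.
    """
    n = len(points)
    if n <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    mu = sum(mean_dists) / n
    sigma = math.sqrt(sum((d - mu) ** 2 for d in mean_dists) / n)
    threshold = mu + std_ratio * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


def voxel_downsample(points, voxel_size):
    """Replace all points that fall into the same cubic voxel by their centroid."""
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in buckets.values()]
```

A real room scan contains far too many points for the brute-force neighbour search; a spatial index such as a k-d tree would replace it in practice.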

However, the raw mesh from ARKit still had significant issues: it was spiky and noisy, with numerous artifacts that would cause unrealistic acoustic reflections.

Mesh Post-Processing

To address these issues, we implemented several post-processing steps to make the mesh work better for sound simulation:

- Spike Artifact Removal: We analyze face normals to identify and correct mesh anomalies that could cause unrealistic sound reflections. Our algorithm detects vertices where surrounding face normals deviate significantly from the average normal, adjusting these outliers to create a more acoustically accurate representation.

- Laplacian Smoothing: We implement mesh smoothing to reduce jagged surfaces that would create unrealistic acoustic scattering.

- Adaptive Decimation: We implemented a mesh simplification algorithm that reduces polygon count while preserving acoustically significant features. Our approach identifies edges that can be collapsed with minimal acoustic impact, creating a more computationally efficient representation without sacrificing acoustic accuracy.
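The spike check and the smoothing step can be sketched as follows. The 60-degree threshold, the smoothing factor `lam`, and the choice to correct a flagged vertex by pulling it toward the centroid of its neighbours are assumptions for illustration, and all function names are hypothetical. Adaptive decimation (edge collapse) is not shown.

```python
import math
from collections import defaultdict


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(u, v):
    return (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])


def _normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return tuple(x / n for x in v) if n > 0 else (0.0, 0.0, 0.0)


def face_normals(vertices, faces):
    """Unit normal of each triangle, oriented by its winding order."""
    return [_normalize(_cross(_sub(vertices[j], vertices[i]), _sub(vertices[k], vertices[i])))
            for i, j, k in faces]


def find_spike_vertices(vertices, faces, max_angle_deg=60.0):
    """Flag vertices where some incident face normal deviates from the average
    incident normal by more than max_angle_deg."""
    normals = face_normals(vertices, faces)
    incident = defaultdict(list)
    for fi, face in enumerate(faces):
        for v in face:
            incident[v].append(normals[fi])
    cos_limit = math.cos(math.radians(max_angle_deg))
    spikes = set()
    for v, ns in incident.items():
        avg = _normalize(tuple(sum(axis) for axis in zip(*ns)))
        if any(sum(a * b for a, b in zip(n, avg)) < cos_limit for n in ns):
            spikes.add(v)
    return spikes


def laplacian_smooth(vertices, faces, iterations=1, lam=0.5, only=None):
    """Move each vertex (or only those in `only`) a fraction lam toward the centroid
    of its edge-connected neighbours, repeated `iterations` times."""
    nbrs = [set() for _ in vertices]
    for face in faces:
        for a in face:
            nbrs[a].update(b for b in face if b != a)
    verts = [tuple(v) for v in vertices]
    for _ in range(iterations):
        new = list(verts)
        for i, ns in enumerate(nbrs):
            if not ns or (only is not None and i not in only):
                continue
            centroid = [sum(verts[j][c] for j in ns) / len(ns) for c in range(3)]
            new[i] = tuple(verts[i][c] + lam * (centroid[c] - verts[i][c]) for c in range(3))
        verts = new
    return verts
```

Restricting smoothing to flagged vertices (`only=spikes`) removes spikes without rounding off legitimate corners elsewhere; running it over the whole mesh gives plain Laplacian smoothing, which also shrinks the mesh slightly with each iteration.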

Data Communication

- Mesh Importer: Downloads and processes mesh data from the iOS application, converting it to Unity-compatible formats (.obj for mesh and .json for classification data).

- Web Server Integration: Uses GCDWebServer to create a local HTTP server that provides mesh and acoustic data to the Quest application.
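To make the exchange format concrete, here is a minimal sketch of the two payloads, written in Python rather than the Swift and C# used in the apps. It writes triangles as Wavefront OBJ text (which indexes vertices from 1) and per-face labels as JSON, then reads the OBJ back as an importer would. The JSON field names (`faceCount`, `classifications`) are hypothetical, not our actual schema.

```python
import json


def mesh_to_obj(vertices, faces):
    """Serialize a triangle mesh as Wavefront OBJ text (face indices are 1-based in OBJ)."""
    lines = ["# exported room mesh"]
    lines += [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += [f"f {i + 1} {j + 1} {k + 1}" for i, j, k in faces]
    return "\n".join(lines) + "\n"


def classification_to_json(face_classes):
    """Per-face surface labels, listed in the same order as the OBJ faces."""
    return json.dumps({"faceCount": len(face_classes), "classifications": face_classes})


def parse_obj(text):
    """Minimal reader for 'v' lines and triangular 'f' lines; returns 0-based faces."""
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(p) for p in parts[1:4]))
        elif parts[0] == "f":
            # Entries may look like '3/1/2'; keep only the vertex index before any slash.
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:4]))
    return vertices, faces
```

Keeping the labels in a separate file that shares the OBJ face order lets a standard OBJ importer load the geometry unchanged.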

VR Application

The VR application was developed using Unity with the OpenXR plugin, the Meta XR All-in-One SDK, the Meta XR Audio SDK, and tools from the Unity Asset Store. Key components include:

Acoustic Simulation

We utilized Meta XR Audio SDK to implement spatial audio capabilities:

- Reflections & Reverb: Simulates how sound bounces and decays in virtual spaces.

- Material-based Acoustics: Adjusts sound behavior based on virtual surface properties.

- Acoustic Model: After experimenting with both, we chose the Raytraced Acoustics model over the Shoebox Room Acoustics model, because ray tracing can model sound propagation through complex, arbitrary geometry such as our scanned meshes, whereas a shoebox model approximates the room as a rectangular box.
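The raytraced model is what we actually use, but a classical back-of-the-envelope calculation shows why material-based acoustics matters. Sabine's formula estimates reverberation time as T60 ≈ 0.161 V / A, where V is the room volume in cubic metres and A is the total absorption area (each surface's area times its absorption coefficient, summed). This sketch is not what the Meta XR Audio SDK computes; the room dimensions and absorption coefficients are illustrative assumptions, and the function names are hypothetical.

```python
def sabine_rt60(volume_m3, surfaces):
    """Sabine estimate of reverberation time in seconds.

    surfaces: iterable of (area_m2, absorption_coefficient) pairs.
    Most reliable for fairly diffuse rooms with low average absorption.
    """
    absorption = sum(area * alpha for area, alpha in surfaces)
    if absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / absorption


def shoebox_surfaces(length, width, height, floor_alpha, ceiling_alpha, wall_alpha):
    """(area, absorption) pairs for the six faces of a rectangular room."""
    return [
        (length * width, floor_alpha),
        (length * width, ceiling_alpha),
        (2 * (length + width) * height, wall_alpha),  # four walls combined
    ]


# Hypothetical 5 x 4 x 3 m room: hard surfaces everywhere, then a carpeted floor.
hard = sabine_rt60(60.0, shoebox_surfaces(5, 4, 3, 0.05, 0.05, 0.05))
carpet = sabine_rt60(60.0, shoebox_surfaces(5, 4, 3, 0.30, 0.05, 0.05))
```

With these numbers the hard room rings for roughly 2.1 s, while carpeting only the floor cuts that to about 1.0 s, which is the kind of difference per-surface material profiles are meant to capture.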

User Interface

- Virtual Sound Sources: Users can place sound sources within the reconstructed environment, choosing from various virtual instruments designed to highlight different acoustic properties.

- Interactive Listening: As users move through the space, the acoustic simulation updates in real-time, allowing them to experience how sound characteristics change with position.
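Two standard room-acoustics relations help explain what changes as a listener moves. In free field, the direct sound from a point source drops by 20·log10(d/d0) dB, about 6 dB per doubling of distance. In a reverberant room the reverberant level is roughly constant, so beyond the critical distance (approximately 0.057·sqrt(V/T60) metres for an omnidirectional source) reverberant energy dominates. These are textbook approximations sketched for illustration, not how the SDK renders sound; the function names are hypothetical.

```python
import math


def direct_level_change_db(distance_m, reference_m=1.0):
    """Level change of the direct sound relative to the reference distance
    (inverse-square law for a point source in free field)."""
    return -20.0 * math.log10(distance_m / reference_m)


def critical_distance_m(volume_m3, rt60_s):
    """Approximate distance at which direct and reverberant energy are equal
    (omnidirectional source, diffuse reverberant field)."""
    return 0.057 * math.sqrt(volume_m3 / rt60_s)
```

For a 60 m³ room with a 1 s reverberation time, the critical distance is only about 0.44 m, so over most of such a room the balance of direct to reverberant sound, rather than loudness alone, carries much of the distance information.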

Evaluation of Results

We evaluated our LiDAR-based spatial audio system through both technical assessments and user studies to measure its effectiveness and identify limitations.

Technical Assessment

We evaluated our system's technical performance across several metrics:

- Mesh Quality: The post-processing pipeline significantly reduced mesh artifacts, eliminating erratic reflections caused by mesh spikes and improving the acoustic simulation quality.

- Processing Performance: On an iPhone 14 Pro, our mesh processing pipeline completed in a few seconds for a typical room scan, which works for capturing static environments but is still too slow for true real-time updates.

- Data Transfer: Transferring the mesh from the iOS device to the Quest headset introduced a noticeable delay between scanning a space and experiencing its acoustic environment.

User Study

We also conducted a user study to evaluate how accurately participants perceived our spatial audio. We placed participants in a black room with no visual cues, only audio, and asked them several spatial audio perception questions:

- Sound Source Distance Perception: Participants were placed either near or far from a virtual sound source and asked to determine their relative distance.

- Sound Source Position: Participants were asked to point toward the source of a sound played from different directions.
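Pointing responses like these can be scored by the angle between the direction a participant pointed and the true source direction, with front/back confusions counted separately. The sketch below is one hypothetical scoring scheme, not the analysis we ran; it assumes both directions are 3D vectors in the listener's head frame with +z as forward (Unity's convention).

```python
import math


def angular_error_deg(pointed, actual):
    """Angle in degrees between the pointed direction and the true source direction."""
    dot = sum(a * b for a, b in zip(pointed, actual))
    norm = math.sqrt(sum(a * a for a in pointed)) * math.sqrt(sum(b * b for b in actual))
    if norm == 0:
        raise ValueError("direction vectors must be non-zero")
    # Clamp to guard against rounding pushing the cosine slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def is_front_back_confusion(pointed, actual, forward=(0.0, 0.0, 1.0)):
    """True when the response lies in the opposite front/back hemisphere from the source."""
    def along_forward(v):
        return sum(a * b for a, b in zip(v, forward))
    return along_forward(pointed) * along_forward(actual) < 0
```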

Key findings include:

- Most participants could accurately distinguish between near and far positions based solely on acoustic cues. We observed that distance perception was more accurate for near sources, while participants showed more variation in their perception of far sources.

- Most participants could accurately determine the general direction of sound sources, with better accuracy for sounds originating from the front than those from behind.

We did not get to ask whether participants could identify the size of the room they were in, because we are still implementing more accurate adaptive acoustics based on material and room size.

Benefits and Limitations

Key Benefits

Our LiDAR-based spatial audio system offers several significant advantages:

- Environment-Specific Acoustics: Unlike generic spatial audio solutions, our system creates acoustic environments that accurately reflect the specific physical space, enhancing immersion and presence.

- Automatic Setup: By leveraging LiDAR scanning, our system eliminates the need for manual acoustic modeling or calibration. Because LiDAR sensors are now readily available on mobile devices, this makes physically accurate spatial audio more accessible.

- Material-Aware Processing: The system's ability to identify different materials and their acoustic properties creates more nuanced and realistic sound environments that respond differently to various surfaces.

Limitations

Despite these advantages, our current implementation has several limitations:

- Material Classification Accuracy: While ARKit provides basic surface classification, it cannot distinguish between acoustically different materials within the same category, limiting the accuracy of material-specific acoustic simulations.

- Geometric Detail: Small but acoustically significant features were sometimes oversimplified by our mesh processing pipeline, affecting the accuracy of high-frequency reflections.

- Real-time Updates: The current implementation does not support true real-time updates to the acoustic environment as objects move, limiting the system's ability to represent changing scenes.

- Hardware Requirements: The need for both an iOS device with LiDAR and a Quest headset limits accessibility.

- Network Dependency: The reliance on local network communication between devices introduced occasional latency and connection issues during testing.

- Processing Trade-offs: More aggressive mesh simplification improved performance but reduced acoustic accuracy, highlighting the need for better adaptive approaches.

Applications

Despite these limitations, we see many potential applications for this system:

- Acoustic Education: Allowing students to experience how different spaces and materials affect sound propagation.

- Architectural Previsualization: Enabling architects and clients to hear how spaces will sound before they're built.

- Virtual Production: Creating more realistic audio environments for VR content creation.

- Music Experiences: Allowing musicians to place virtual instruments in specific locations and hear how they would sound in that space.

- Acoustic Heritage Preservation: Capturing and reproducing the acoustic properties of historically significant spaces.

Future Work

Based on our findings and the limitations of our current implementation, we identify several promising directions for future work:

- Hybrid Acoustic Modeling: Combining our geometric approach with wave-based acoustics to better handle diffraction and other wave phenomena, particularly for low frequencies.

- Enhanced Material Classification: Implementing more sophisticated material recognition using computer vision and machine learning to better identify acoustic properties.

- Dynamic Environment Handling: Developing techniques to efficiently update the acoustic model as objects move within the environment.

- Audio-Visual Correlation: Exploring techniques to better align visual and acoustic features for enhanced perceptual realism.

- Real-time Mesh Reconstruction Refinement: Improving the LiDAR scanning pipeline to support continuous refinement of the 3D reconstruction as users move through environments, potentially using machine learning to predict and fill in occluded regions from partial scans.

- User Customization: Developing more tools that allow users to refine material properties and acoustic behaviors.

- Multiple Sensors: Integrating data from multiple LiDAR sensors simultaneously to create more complete and accurate environmental models, especially for complex or large-scale spaces where a single vantage point is insufficient.

Conclusion

SoundDrift demonstrates that integrating physical environment scanning with acoustic simulation can significantly enhance audio immersion in virtual reality. By combining Apple's LiDAR technology with Meta Quest's VR capabilities, we have created a system that generates physically accurate spatial audio environments reflecting the specific acoustic properties of real spaces. As VR continues to evolve, we expect environment-aware audio processing to become increasingly important for creating convincing virtual experiences, particularly in applications where accurate acoustic reproduction is crucial, such as architectural visualization, acoustic education, and immersive entertainment.

All in all, it was a really fun project (despite the steep learning curve we had with Unity), as we got to play around with so many new concepts (LiDAR, sound, and more). Although our project is currently a fairly basic application with standard functionality, we are nevertheless quite proud of what we managed to create, particularly getting the LiDAR scan to reconstruct properly!

Acknowledgments

We would like to thank Professor Douglas Lanman for his guidance and support throughout this project, and for teaching such an amazing class this quarter; we really loved the chance to build something ourselves at the end! Our thanks also go to the participants in our user study for their valuable feedback, and to the CSE department for providing access to the necessary hardware.

References

- Apple Inc. (2024). "Scene Reconstruction." https://developer.apple.com/documentation/arkit/arworldtrackingconfiguration/scenereconstruction

- Meta. (2024). "Meta XR Audio SDK Documentation." https://developers.meta.com/horizon/documentation/unity/meta-xr-audio-sdk-unity/

- Thakur, A. and Rajalakshmi, P. (2024). "Real-time 3D Map Generation and Analysis Using Multi-channel LiDAR Sensor." IEEE 9th International Conference for Convergence in Technology (I2CT). https://ieeexplore.ieee.org/document/10544111

- Tachella, J., Altmann, Y., Mellado, N., McCarthy, A., Tobin, R., Buller, G. S., Tourneret, J.-Y., & McLaughlin, S. (2019). "Real-time 3D reconstruction from single-photon lidar data using plug-and-play point cloud denoisers." Nature Communications, 10(1). https://www.nature.com/articles/s41467-019-12943-7

- Savioja, L. and Svensson, U.P. (2015). "Overview of geometrical room acoustic modeling techniques." The Journal of the Acoustical Society of America, 138(2). https://asa.scitation.org/doi/10.1121/1.4926438

- Schissler, C. and Manocha, D. (2017). "Interactive sound propagation and rendering for large multi-source scenes." ACM Transactions on Graphics (TOG), 36(1). https://dl.acm.org/doi/10.1145/2983918

- Valem Tutorials. (2024). YouTube Channel. https://www.youtube.com/@ValemTutorials

- Various Unity discussion threads, used for debugging help and for understanding how to use ARKit with Unity.