Eye Tracking for VR Gaming

Alex Zhang

University of Washington

zhangbor@cs.washington.edu

Abstract

The goal of this project is to find out how the gaming experience of shooting with the eyes differs from shooting with hand controllers. To do so, I created a Unity game in which players shoot randomly spawned robots either by gazing at them, tracked through a webcam, or by clicking the mouse. Data collected from 5 users show that shooting with eyes is faster, but most users prefer the experience of shooting with hands. Directions for future work include implementing the VR aspect (using an eye-tracking headset and hand controllers), choosing enemy spawning locations so as to reduce randomness, and collecting data from a wider range of users in terms of age and profession.

Introduction

In most shooting games, players use their hands to press keys, click the mouse, or operate a hand controller to shoot enemies. The process of perceiving enemies with the eyes, sending signals to the brain, and instructing the hands takes time. With virtual reality and eye tracking, players only need to perceive the enemy, eliminating the last two steps. As a potential drawback, however, simplifying the reaction process might undermine the realistic feeling of shooting.

I built upon a sample FPS game provided by Unity. My changes include simplifying the game scene, spawning robots at random locations, and recording the user’s average shooting time. In addition, using the webcam-based eye tracker implemented by Stepan Filonov, I added an option to shoot with the eyes.

With this project, I hope to find out how eye-shooting differs from hand-shooting, and which one people prefer, by inviting players to complete a survey after playing the game. My hypothesis is that eye-shooting is, in theory, faster than hand-shooting, but that players might be more attracted to hand-shooting because it feels more realistic. The collected data and users’ reports support this hypothesis.

Contributions

Primary contributions include:

- I have become proficient with Unity and have created new scenes, new scripts, new game objects and prefabs.

- I have brought data from a webcam-based eye tracker application into Unity over UDP and used it to enable an eye-shooting game experience.

- I have analyzed users’ performance and concluded that shooting with eyes in the game is slightly faster than shooting with mouse clicks.

Method

Starting Point

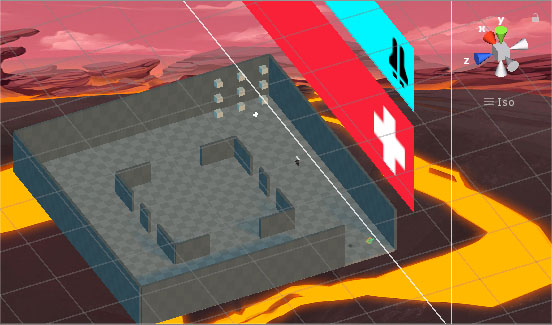

The first step was to choose a platform for my game, and I decided to build it in Unity. Having no previous experience with Unity, I downloaded the software and watched tutorial videos on YouTube to get familiar with Unity’s interface, its game structure, and its different kinds of assets, including scenes, prefabs, and scripts. On Unity’s website, I found a sample FPS game, Unity’s FPS Microgame Template. It has simple scenes, shooting robots as enemies, and a first-person view of the player’s weapon. Players shoot enemy bots by clicking the mouse. The game’s setup matched the basic structure I had in mind, which made it a good template to build on.

Unity Game

Physical Layout

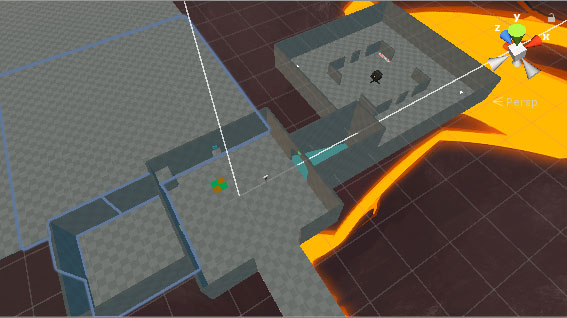

After downloading Unity’s FPS Microgame Template, I played the game several times to get familiar with its controls and objectives. I decided to restructure the physical scene of the game first and then improve the scripts. Originally, the game had three rooms, each surrounded by walls and connected to the others by a small door. With this structure, if enemy bots were not in the same room as the player, they could only enter through the door. Since I planned to spawn enemy bots at random locations, this would reduce the vividness of the game. Thus, I removed two rooms, kept only the center one, and moved the player and the main camera into it.

Soft Features

1. Spawning enemies at random locations

Next, I needed to add features to the game. Originally, there was only one enemy bot and one enemy turret. To analyze players’ performance, I needed a larger set of samples, so I added a C# script that spawns a new enemy bot every 10 seconds at a random position within 10 units of the player. I used Unity’s GameObject.transform.position to get the player’s position in world space, used Random.Range(-10, 10) to generate a random offset, and added the offset to the player’s position.
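The spawning rule above can be illustrated with a short sketch. The author's script was C# inside Unity. This is a minimal Python rendering of the same logic under stated assumptions. It assumes the random offset is applied only on the ground plane (x and z), and it uses a hypothetical Spawner class that accumulates frame time the way a Unity Update() loop would.

```python
import random
from dataclasses import dataclass

SPAWN_INTERVAL = 10.0  # seconds between spawns (from the text)
SPAWN_OFFSET = 10.0    # max offset from the player along each axis (from the text)


@dataclass
class Vec3:
    x: float
    y: float
    z: float


def random_spawn_position(player: Vec3, rng: random.Random) -> Vec3:
    # Assumption: the offset is applied on the ground plane (x and z) only,
    # so y stays at the player's height and bots do not spawn in the air.
    return Vec3(
        player.x + rng.uniform(-SPAWN_OFFSET, SPAWN_OFFSET),
        player.y,
        player.z + rng.uniform(-SPAWN_OFFSET, SPAWN_OFFSET),
    )


class Spawner:
    """Hypothetical helper: accumulates frame time, spawns one bot per interval."""

    def __init__(self, rng: random.Random):
        self.rng = rng
        self.elapsed = 0.0
        self.spawned = []  # positions of bots spawned so far

    def update(self, dt: float, player: Vec3) -> None:
        # Called once per frame with the frame duration, like Unity's Update().
        self.elapsed += dt
        while self.elapsed >= SPAWN_INTERVAL:
            self.elapsed -= SPAWN_INTERVAL
            self.spawned.append(random_spawn_position(player, self.rng))
```

Subtracting the interval, rather than resetting the timer to zero, keeps the 10-second cadence steady even when frame times do not divide the interval evenly.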

2. Modifying the winning objective

The second step was to change when the game ends. The old objective was to destroy all enemies. Since new enemy bots now keep spawning, the objective needed to change to destroying a certain number of enemy bots. I found the script that defined the objective and changed the condition in an if statement from checking the number of enemies left to checking the number of enemies destroyed.
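A minimal sketch of the changed win condition is shown below, written in Python for illustration rather than as the template's actual C# objective script. The class name and the kill target are hypothetical, because the text says only "a certain number".

```python
class KillObjective:
    """Hypothetical objective: win after a fixed number of kills."""

    def __init__(self, kills_to_win: int):
        self.kills_to_win = kills_to_win
        self.kills = 0

    def on_enemy_destroyed(self) -> None:
        self.kills += 1

    def is_complete(self) -> bool:
        # The old test was "enemies remaining == 0", which can never hold
        # once bots keep spawning; counting kills always terminates.
        return self.kills >= self.kills_to_win
```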

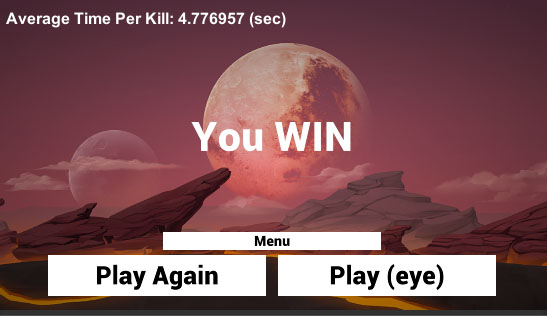

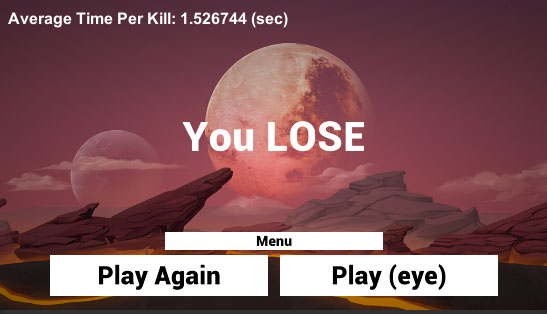

3. Collecting data to analyze user’s performance

The third step was finding a way to measure the user’s in-game performance so that I could compare shooting with eyes and shooting with mouse clicks. I chose to record how fast the player destroys each enemy bot and to report the average time at the end of the game. The start of each kill is the moment the enemy bot encounters the player, and the end is the moment the enemy bot game object is destroyed. I stored the value of Time.time at the start mark in a static variable. Each time the player destroyed an enemy, I added the difference between the start and end marks to a running sum. At the end of the game, I divided the sum by the total number of kills to get the average and displayed the value on the GUI of the end scene.
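The timing scheme above amounts to a running sum divided by a kill count. The sketch below is in Python, and `now` stands in for Unity's Time.time. It departs from the text in one labeled way: start times are keyed by a hypothetical bot_id instead of being kept in a single static variable, so that two bots alive at once do not overwrite each other's start mark.

```python
class KillTimer:
    """Average time from a bot's first encounter to its destruction."""

    def __init__(self):
        self.start_times = {}  # hypothetical bot_id -> encounter time
        self.total = 0.0
        self.kills = 0

    def on_encounter(self, bot_id, now: float) -> None:
        # Keep only the first encounter so re-detection does not reset the clock.
        self.start_times.setdefault(bot_id, now)

    def on_destroyed(self, bot_id, now: float) -> None:
        start = self.start_times.pop(bot_id, None)
        if start is None:
            return  # destroyed before it ever encountered the player; not timed
        self.total += now - start
        self.kills += 1

    def average(self) -> float:
        return self.total / self.kills if self.kills else 0.0
```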

Adding Eye tracking to the game

I found an open-source eye-tracking application online, written by Stepan Filonov, which estimates eye positions by processing webcam images with OpenCV and Python. I extracted the estimated eye positions from the application and sent them to Unity. I converted the numbers to strings and then to bytes, sent them from the application with Python’s sockets, and received them in Unity with .NET’s UdpClient class. After converting the bytes back to numbers, I had the positions of the eyes in my Unity game.

To allow shooting with eyes, I made the weapon fire when the positions of the eyes were close to an enemy bot’s position. I used Unity’s GameObject.transform.position to get the enemy bot’s position in world space and Camera.main.WorldToScreenPoint to convert that position to screen pixels. Since the eye coordinates were relative to the webcam’s window, I converted them to screen pixels by dividing them by the webcam’s window size (640 x 480) and multiplying by my screen size (1366 x 768). When the difference between the screen coordinates of either eye and the enemy bot was less than 10 pixels, the weapon would fire.

To separate eye-shooting from hand-shooting, I created another “Play (Eye)” button on the start scene. When the user clicked this button, a static bool was set to true and the firing criterion changed from mouse clicking to eye tracking.
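The number-to-string-to-bytes hand-off described above can be sketched on the sender side in Python. This is a minimal sketch, not the author's code. The comma-separated message format, the helper names, and port 5065 are hypothetical. On the Unity side, the decoding step would be written in C# around a UdpClient, and decode_eyes only shows the equivalent logic.

```python
import socket

HOST, PORT = "127.0.0.1", 5065  # hypothetical loopback address and port


def encode_eyes(left, right) -> bytes:
    """Numbers -> comma-separated string -> UTF-8 bytes (hypothetical format)."""
    return ",".join(str(v) for v in (*left, *right)).encode("utf-8")


def decode_eyes(data: bytes):
    """Bytes -> string -> numbers: the inverse step performed on the Unity side."""
    lx, ly, rx, ry = (float(v) for v in data.decode("utf-8").split(","))
    return (lx, ly), (rx, ry)


def send_eyes(sock: socket.socket, left, right, addr=(HOST, PORT)) -> None:
    # One datagram per processed webcam frame.
    sock.sendto(encode_eyes(left, right), addr)
```

The tracker loop could create a socket once with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) and call send_eyes for every frame. UDP suits this stream because a lost or late gaze sample is simply superseded by the next one.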

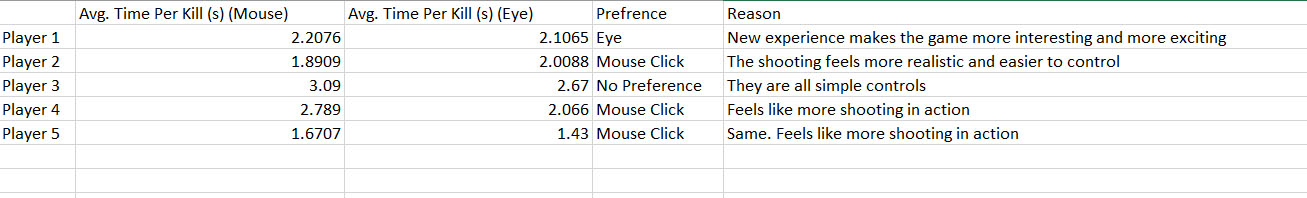
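The coordinate conversion and firing rule from the eye-tracking paragraph can be sketched as follows. This is a Python illustration of logic that lived in a Unity C# script. It assumes the webcam frame is 640 pixels wide and 480 tall. It also interprets "difference less than 10" as a Euclidean distance in screen pixels, which is an assumption. The bot's screen position is taken as already computed, as WorldToScreenPoint would return it.

```python
import math

CAM_W, CAM_H = 640, 480          # assumed webcam frame size (width, height)
SCREEN_W, SCREEN_H = 1366, 768   # screen size (width, height), from the text
FIRE_THRESHOLD = 10.0            # pixels, from the text


def cam_to_screen(x: float, y: float):
    """Normalize by the webcam frame size, then scale to screen pixels."""
    return x / CAM_W * SCREEN_W, y / CAM_H * SCREEN_H


def should_fire(eyes, bot_screen, eye_mode: bool, mouse_clicked: bool) -> bool:
    """eyes: (x, y) webcam coordinates per eye; bot_screen: bot position in pixels."""
    if not eye_mode:  # regular "Play" button: hand-shooting with the mouse
        return mouse_clicked
    bx, by = bot_screen
    for ex, ey in eyes:  # either eye close enough triggers a shot
        sx, sy = cam_to_screen(ex, ey)
        if math.hypot(sx - bx, sy - by) < FIRE_THRESHOLD:
            return True
    return False
```

Unity's screen space has its origin at the bottom-left corner, whereas webcam image coordinates usually start at the top-left. A working implementation may therefore need to flip the y axis (SCREEN_H - sy). The sketch follows the text and does not flip it.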

Analyzing Data and User Reports

I invited five people to form my test group. I first let them try the game several times. Once they were familiar with it, I asked them to play in both eye-shooting and hand-shooting modes and recorded their in-game performance. After playing, I asked which mode they preferred. The data and results are presented in the following section.

Evaluation of Results

In general, the collected data and user reports support my hypothesis. In 4 out of the 5 cases, eye-shooting was slightly faster, and 3 users reported that they would prefer shooting with some kind of hand controller because it feels more realistic.

Benefits, Limitations, And Future Work

In this project, I used a simple shooting game as my method of study. The benefit is that users can get familiar with the game quickly, which keeps unfamiliarity with the game from affecting the data and user reports. Running the study with a more complex game, for example one with enemy bots of different sizes, might give different results and is worth doing in the future.

In the game, the enemy bots spawn at random locations. This makes the game more vivid, but the randomness might also affect the data. For example, destroying an enemy that spawns in front of the player does not take the same amount of time as destroying one that spawns behind the player. Future studies can try different criteria for spawning locations to reduce the impact of randomness on the results.
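As one illustration of a different spawning criterion, bots could be placed at a fixed distance inside a narrow sector in front of the player. That way no bot starts behind the player. This is a hypothetical Python sketch, not something the project implemented. The distance of 8 units and the 30-degree half-angle are arbitrary assumptions. The angle convention follows Unity's, where a yaw of 0 faces +z.

```python
import math
import random


def spawn_in_front(player_xz, facing_deg: float, rng: random.Random,
                   distance: float = 8.0, half_angle_deg: float = 30.0):
    """Hypothetical criterion: fixed distance, within +/- half_angle of the view."""
    # Yaw measured from +z toward +x, as in Unity, so forward = (sin, cos).
    angle = math.radians(facing_deg + rng.uniform(-half_angle_deg, half_angle_deg))
    px, pz = player_xz
    return px + distance * math.sin(angle), pz + distance * math.cos(angle)
```

Fixing the distance and limiting the angle removes most of the variation in how far the player must turn or travel, so differences in kill time should reflect the shooting method more than the spawn point.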

Using a webcam as the input for the eye tracker is convenient, but the tracking results are not always consistent or satisfactory. The original plan was to use the eye tracker in the HTC Vive Pro headset provided by the CSE 490V course staff. Unfortunately, my laptop was not compatible with the headset, and the situation did not allow hardware changes. Running the study with a better camera and eye-tracking algorithm might strengthen my conclusion. Shooting with eyes in VR is also more vivid than with a webcam, which could change users’ preferences between the two methods.

Due to the current health situation, I was able to collect data from only five people. A larger sample size might lead to different conclusions.

Conclusion

The results of this project suggest that shooting with eyes is generally faster, while most users prefer the experience of shooting with hands because of its sense of reality. Based on that, future developers of FPS games who want to add eye-shooting as a feature would need to make sure that the sense of shooting and handling a weapon stays vivid. The novelty of eye-shooting did attract one player in the study. Combining that novelty with a more vivid shooting experience could help eye-shooting become more popular.

Acknowledgments

Thanks to Douglas Lanman for the idea of collecting data and conducting user studies.

Thanks to Kirit Narain for the idea of using a webcam-based eye tracker.

Thanks to the CSE 490V course staff for the hardware support.

References

Stepan Filonov. Tracking your eyes with Python. https://medium.com/@stepanfilonov/tracking-your-eyes-with-python-3952e66194a6. (2019).

Stepan Filonov. Eye tracking using OpenCV, Python. https://github.com/stepacool/Eye-Tracker. (2019).

Unity. FPS Microgame. https://learn.unity.com/project/fps-template.