Pausable TTS: Final Hand-In

Authors: Jacklyn Cui, Shangzhen Yang

Introduction

Many people rely on Text-to-Speech (TTS) tools to read written content aloud, particularly blind, visually impaired, or neurodivergent individuals. These tools play an important role in providing access to books, websites, emails, and other digital content. However, despite their importance, most TTS applications lack essential features that would make them more inclusive and user-friendly. For example, most TTS tools can only read text from start to finish, and adjusting the reading speed is often the only control they offer. If users lose focus or miss part of the content, they often have to manually restart or reselect sections of text to hear them again. This limitation is especially challenging for neurodivergent users, who may find it difficult to keep up with the fast speech rates typically used in TTS tools. Additionally, the lack of pause or replay functions makes it frustrating and time-consuming to navigate or review critical pieces of information, particularly on mobile devices, where keyboard shortcuts are unavailable. These usability issues were the primary motivation for our project: to create a TTS app that gives users more control. Our app addresses these challenges with features such as pause, replay, and adjustable playback speed.

The inspiration for this project came from first-person accounts and feedback from the blind and visually impaired (BVI) and neurodivergent communities. Many existing TTS tools assume that users can follow fast-paced speech effortlessly and that there will be no interruptions or distractions. Real life is not that simple. For example, in one video, a blind person who uses a screen reader every day demonstrated how quickly text is read aloud and explained how they often lose track of details if their attention is diverted for even a moment. They also pointed out that replaying text is cumbersome and disruptive, especially when multitasking. Our app directly addresses these issues by prioritizing user control and flexibility. In doing so, we follow the principles of disability justice, aiming for a solution that is not only functional but also inclusive and respectful of diverse needs.

In summary, our app reimagines TTS technology to provide greater accessibility and control for users who are blind, visually impaired, neurodivergent, or anyone who prefers to listen to text rather than read it. By addressing the gaps in existing tools and incorporating features like pause, replay, and adjustable speed, we aim to create a solution that empowers users to engage with text in ways that work best for them. Our ultimate goal is not only to make technology more accessible but also to promote independence, inclusion, and dignity for all users.

Positive Disability Principles Analysis

- The project is not ableist: we considered the needs of different disabled communities, and we want to make sure that everyone can use our app. That is, we did not make assumptions about users' abilities during the design process, and our implementation and debugging also included the necessary checks for accessibility issues. For example, we checked for and added ALT text for all elements in the app to accommodate the accessibility needs of the BVI community. We also considered the needs of the neurodivergent community (especially those who identify as having ADHD): we introduced the “pause,” “replay,” and “skip” features to accommodate the need for extra time to process information. Furthermore, we inspected the UI color contrast and layout to ensure that color choices would not prevent anyone from using the app’s features.

- The app’s original design (especially the ideas and low-fidelity wireframes) is accessible, as we considered different disabilities throughout the design. However, we would not say that the implementation and testing, including the final prototype, are as accessible as we expected, although they are accessible to some degree. For example, although our app successfully implements the Text-to-Speech feature, including multi-language support and the ability to pause and replay speech, we have not fully tested it on different platforms: we designed the app to be cross-platform, but due to lack of time, we did not fully test it on platforms such as Web, Android, and Windows. We also found that testing for accessibility issues was hard. Even though we considered different disabilities in the design process, the design was still hard to implement, because we had to take care of many “tiny” accessibility issues during implementation, and we do not think we have captured all of them.

- Many first-person account videos describe how screen readers (a form of text-to-speech app) help members of the BVI community interact with technology. For example, in a YouTube video from Unsightly Opinions, Tamara talked about her own experience as a blind person who uses technology. In the video, she noted that the demo speed is slow for expert screen reader users, and she then demonstrated the normal speed of the screen reader she uses, which is fast for people who are not blind. Another video, by Ablr, showed the typical speed of a screen reader used by a blind individual, and James, another blind individual, shared on YouTube how he uses a computer. There are also videos describing the challenges that people with ADHD might encounter while reading and learning. A YouTube video from the channel ADHD Mastery (Part One) shared personal tips on reading with ADHD, including how reading bulky texts in chunks is beneficial for learning (which inspired us to highlight the sentence currently being synthesized into speech). The second part of that video, along with another video, “How ADHD Affects Their Job,” shared how technology can help reduce distractions for individuals with ADHD. All of these videos are first-person accounts: none of them are advertisements, and in each, disabled individuals talk about their own experiences and accessibility needs. Specifically, the videos from the ADHD community

- The project would give more control to disabled people, including members of the BVI and neurodivergent communities.

- BVI Community: Members of the BVI community usually use a screen reader as assistive technology (AT) to read content on web pages. Currently, the most common way for them to pause or replay a paragraph is to reselect the text on a computer. Our proposed approach would 1) give them another way to replay text on a computer and 2) let them replay a sentence on a mobile phone without needing a computer at all. Through these options, they would gain more control.

- Neurodivergent Community: Members of the neurodivergent community, especially those who identify as having ADHD, might need extra time to process information on a web page. By offering pause, replay, and skip features, we give them more time and more control when using text-to-speech apps.

- Our app also considers the disabled community as a whole rather than one group at a time: our design focused on both the BVI and the neurodivergent communities. Current text-to-speech apps rarely offer the option to “pause” or “replay” speech, which makes them especially hard to follow for blind neurodivergent individuals. In our design, we considered the needs of both communities, and we implemented support for multiple languages so that the app is accessible to people who share this intersectional identity, regardless of their preferred language.

Methodology and Results

Technical Approach

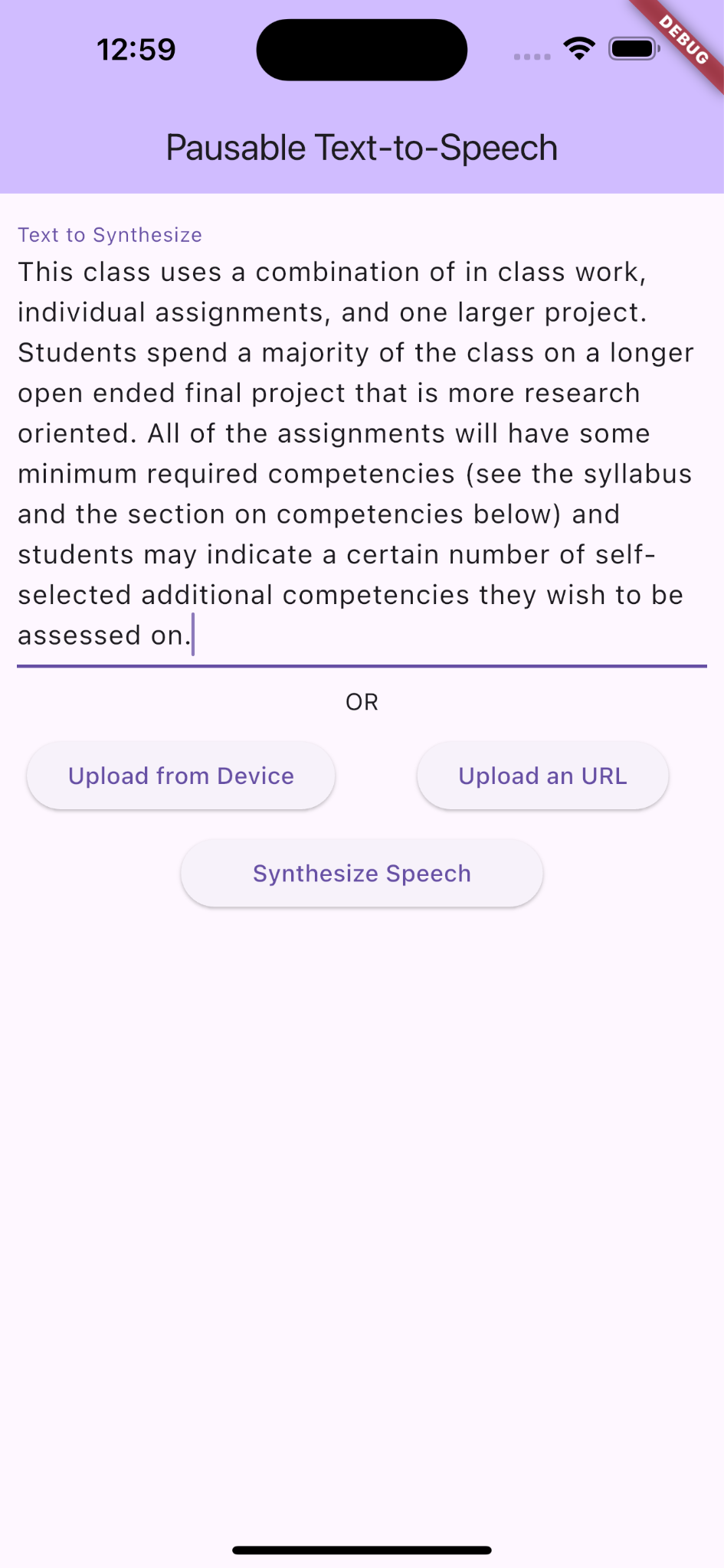

Our app was developed using the Flutter framework to ensure cross-platform compatibility, enabling seamless functionality across desktop and mobile devices. The core TTS functionality was implemented using the flutter_tts library, which facilitates real-time text-to-speech conversion. To enhance usability, we designed features that allow users to:

- Input text directly.

- Upload text files locally.

- Access text via a provided URL.
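The three input paths could be unified behind one loader that always hands plain text to the TTS engine. The Python sketch below is illustrative only (the app itself is written in Flutter); `load_text`, `html_to_text`, and the decision to keep only the visible text of a fetched page while skipping `<script>` and `<style>` content are our assumptions, not a description of the app's actual code.

```python
from html.parser import HTMLParser
from pathlib import Path
from urllib.request import urlopen


class TextExtractor(HTMLParser):
    """Collects visible text from an HTML page, skipping <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def load_text(kind: str, value: str) -> str:
    """Hypothetical loader: 'direct' text, a local 'file' path, or a 'url'."""
    if kind == "direct":
        return value
    if kind == "file":
        return Path(value).read_text(encoding="utf-8")
    if kind == "url":
        with urlopen(value, timeout=10) as resp:
            charset = resp.headers.get_content_charset() or "utf-8"
            return html_to_text(resp.read().decode(charset, errors="replace"))
    raise ValueError(f"unknown input kind: {kind!r}")
```

Keeping all three paths behind one function means the playback and highlighting logic only ever sees plain text, regardless of where it came from.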

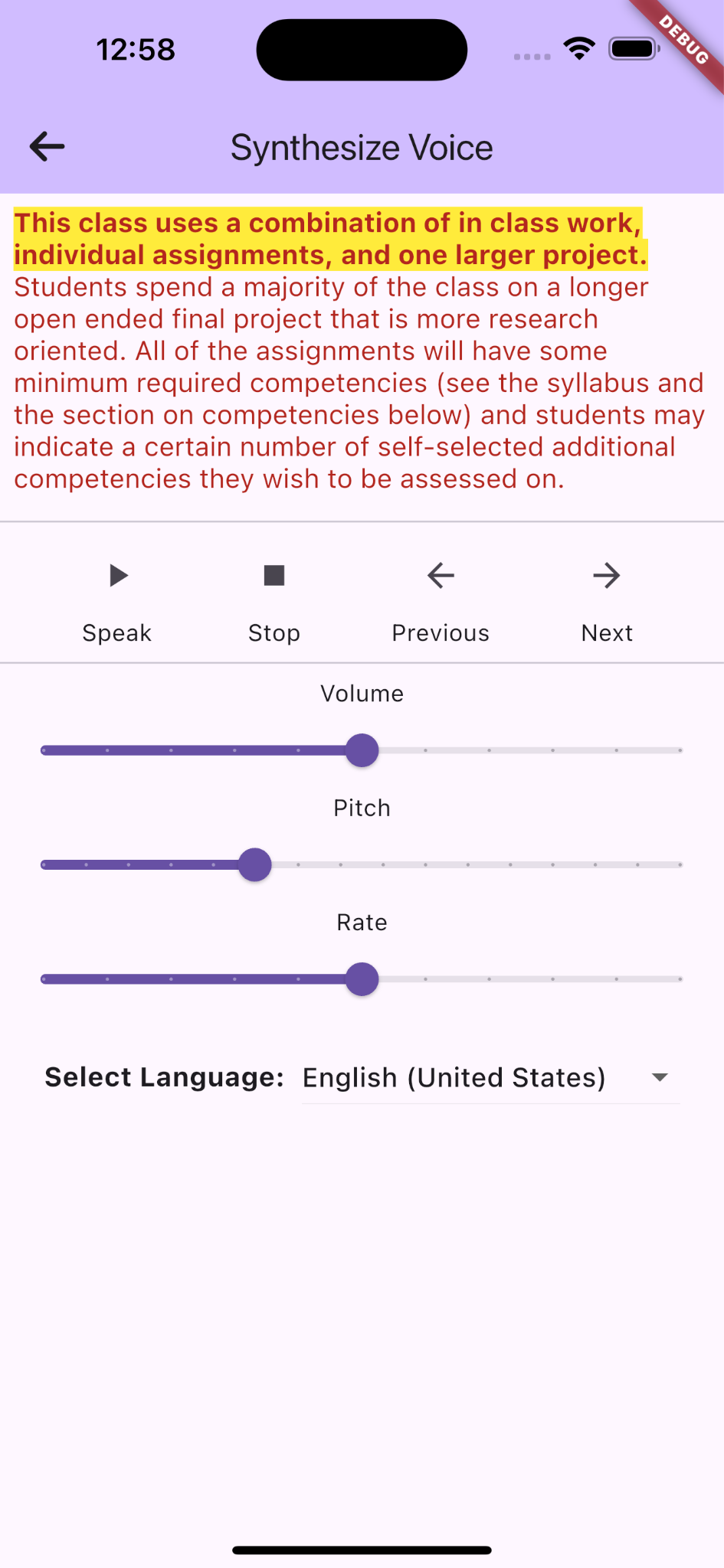

Playback Controls

The app offers intuitive playback options, including:

- Play/Pause: Users can start or pause the TTS engine at any time.

- Replay and Skip: Users can replay the previous sentence, or skip ahead to the next sentence, with a single tap.

- Adjustable Speed: Users can control the playback speed to suit their preferences.

These features address limitations of traditional TTS applications, particularly for individuals who struggle to follow long sentences or fast speech rates.
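The playback controls can be modeled as a cursor over a list of sentences. The Python sketch below is a hypothetical illustration rather than the app's implementation: `speak` stands in for the real TTS engine call, `on_sentence_done` assumes the engine reports when each sentence finishes, and the rate bounds (0.25 to 2.0) are placeholder values.

```python
from typing import Callable, List

MIN_RATE, MAX_RATE = 0.25, 2.0  # placeholder bounds for the speed control


class SentencePlayer:
    """Hypothetical sentence-level controller for play/pause, replay, skip, and speed."""

    def __init__(self, sentences: List[str], speak: Callable[[str, float], None]) -> None:
        self.sentences = sentences
        self.speak = speak  # stand-in for the TTS engine: speak(sentence, rate)
        self.index = 0      # sentence currently being (or about to be) spoken
        self.rate = 1.0
        self.playing = False

    def _speak_current(self) -> None:
        # Only speak while playing and while the cursor points at a real sentence.
        # A real app would first stop the engine so the new sentence replaces the old one.
        if self.playing and 0 <= self.index < len(self.sentences):
            self.speak(self.sentences[self.index], self.rate)

    def play(self) -> None:
        self.playing = True
        self._speak_current()

    def pause(self) -> None:
        # In this sketch, resuming restarts the current sentence from its beginning.
        self.playing = False

    def on_sentence_done(self) -> None:
        """Engine callback: advance to the next sentence while playing."""
        if not self.playing:
            return
        self.index += 1
        if self.index >= len(self.sentences):
            self.playing = False  # reached the end of the text
        else:
            self._speak_current()

    def back(self) -> None:
        """Replay the previous sentence (restarts the first one if already there)."""
        self.index = max(self.index - 1, 0)
        self._speak_current()

    def skip(self) -> None:
        """Jump to the next sentence; does nothing on the last one."""
        if self.index < len(self.sentences) - 1:
            self.index += 1
            self._speak_current()

    def set_rate(self, rate: float) -> None:
        # Clamp to the supported range; applies from the next sentence spoken.
        self.rate = min(max(rate, MIN_RATE), MAX_RATE)
```

Because speech is issued one sentence at a time, replay and skip only need to move an index, and pausing simply stops the cursor from advancing. The trade-off of this design, as sketched, is that resuming restarts the current sentence instead of continuing mid-sentence.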

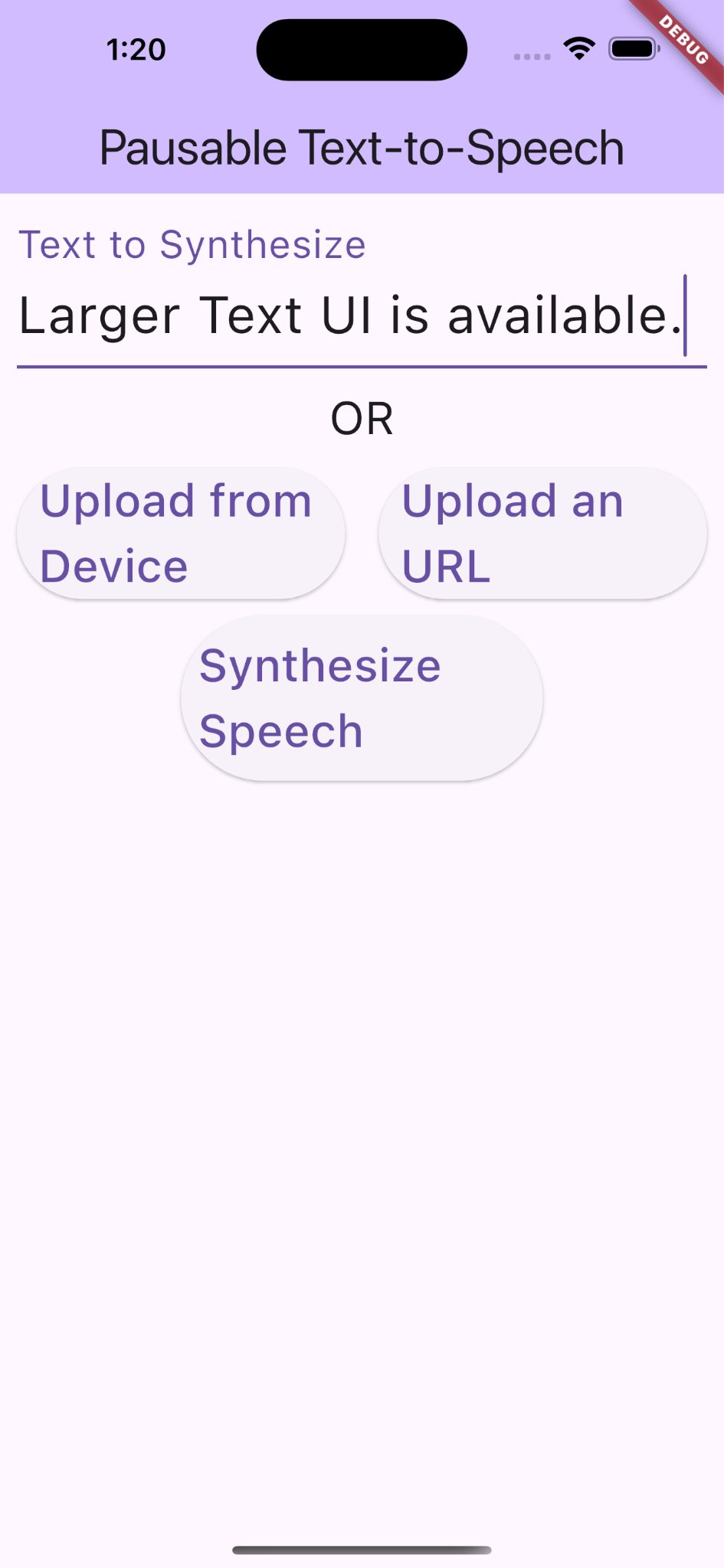

User Interface

The user interface was designed with accessibility in mind. Key elements include:

- Highlighting the currently spoken sentence for visual tracking.

- Supporting larger text sizes to accommodate users with low vision.

- Providing ALT text for all buttons and interactive elements to ensure compatibility with screen readers.
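Highlighting the current sentence requires knowing where each sentence starts and ends in the source text. A minimal Python sketch follows, assuming a naive rule that a sentence ends at `.`, `!`, or `?` (so abbreviations such as “Dr.” would be split incorrectly), and assuming the engine can report the character offset of the word being spoken. `sentence_spans`, `sentence_at`, and `split_for_highlight` are hypothetical helpers.

```python
import re
from bisect import bisect_right
from typing import List, Tuple

# Naive rule (an assumption): a sentence starts at a non-space character and
# runs up to a run of ., !, or ?, or to the end of the text.
_SENTENCE = re.compile(r"\S[^.!?]*(?:[.!?]+|$)")


def sentence_spans(text: str) -> List[Tuple[int, int]]:
    """Return (start, end) character offsets for each sentence."""
    spans = []
    for m in _SENTENCE.finditer(text):
        end = m.start() + len(m.group().rstrip())  # drop trailing whitespace
        spans.append((m.start(), end))
    return spans


def sentence_at(spans: List[Tuple[int, int]], offset: int) -> int:
    """Index of the sentence containing `offset`; gaps map to the preceding sentence."""
    if not spans:
        return -1
    starts = [start for start, _ in spans]
    return max(bisect_right(starts, offset) - 1, 0)


def split_for_highlight(text: str, spans: List[Tuple[int, int]], i: int) -> Tuple[str, str, str]:
    """Split text into (before, current sentence, after) for rich-text rendering."""
    start, end = spans[i]
    return text[:start], text[start:end], text[end:]
```

The three-part split mirrors how a rich-text widget could render the current sentence in bold on a high-contrast background between the plain text before and after it.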

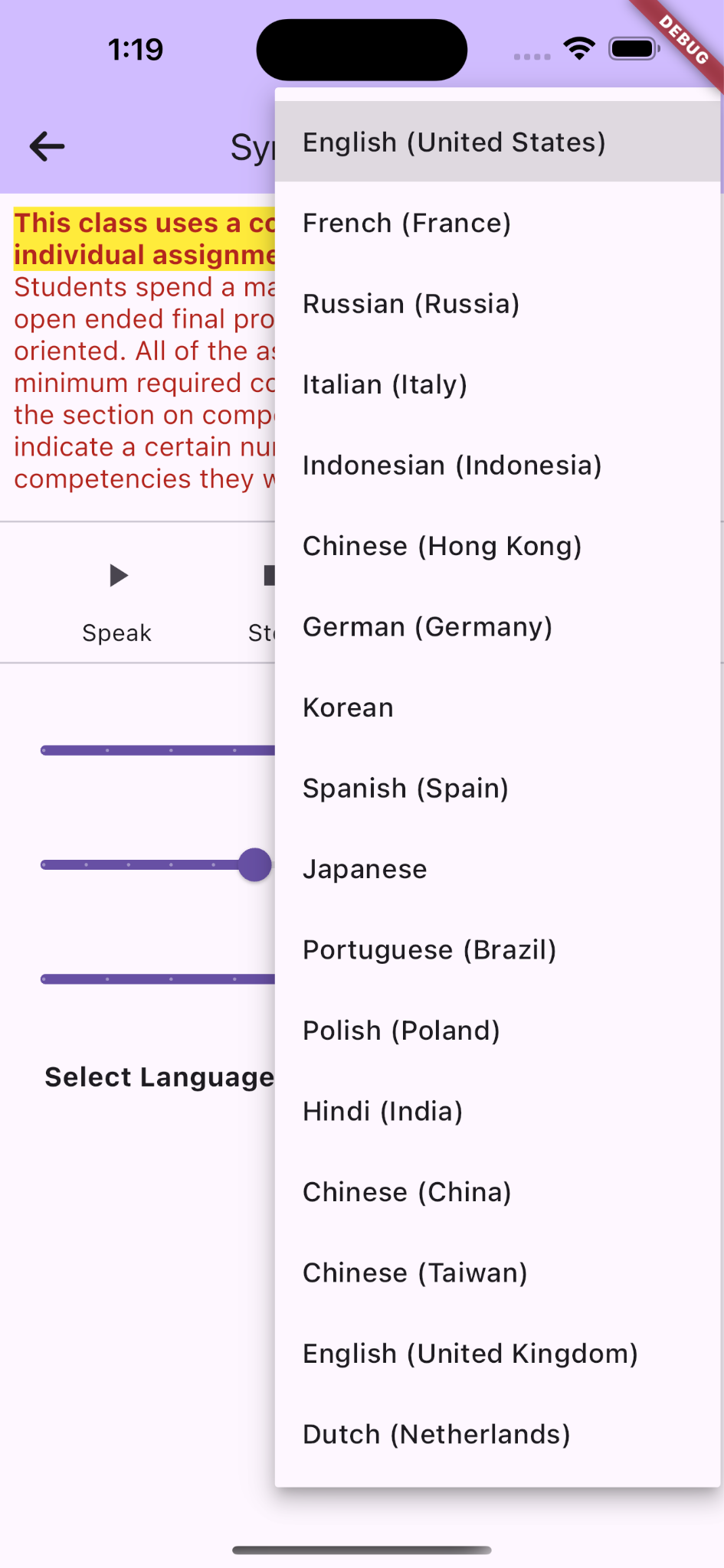

Language Support

To serve a diverse audience, our app supports speech synthesis in multiple languages. Users can select their preferred language from a dropdown menu, which allows the app to support more kinds of content and reach a broader user base.
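Because the set of installed voices differs between devices, the language chosen from the dropdown may not be available verbatim. One way to handle this, sketched below in Python under the assumption that the engine reports locale tags such as `en-US` (possibly written with underscores), is to try an exact match, then any variant of the same base language, and finally a default. `pick_language` and the `en-US` default are hypothetical.

```python
from typing import Iterable


def _normalize(tag: str) -> str:
    # Treat "en_US", "en-us", and "en-US" as the same tag (defensive assumption).
    return tag.replace("_", "-").lower()


def pick_language(preferred: str, available: Iterable[str], default: str = "en-US") -> str:
    """Choose the best available locale for the user's preferred language."""
    options = list(available)
    wanted = _normalize(preferred)
    # 1) Exact match, ignoring case and separator style.
    for tag in options:
        if _normalize(tag) == wanted:
            return tag
    # 2) Same base language, e.g. "zh-TW" falls back to "zh-CN".
    primary = wanted.split("-")[0]
    for tag in options:
        if _normalize(tag).split("-")[0] == primary:
            return tag
    # 3) Nothing suitable is installed.
    return default
```

Returning the engine's own spelling of the tag (rather than the normalized one) keeps the value safe to pass back to the engine unchanged.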

Accessibility Testing

We ensured our app met accessibility standards by testing it across platforms using Lighthouse for web and Google Accessibility Scanner for Android, along with manual testing. Lighthouse evaluated key aspects like screen reader compatibility, color contrast, and labeling for the web interface, while Google Accessibility Scanner identified potential issues on Android, such as touch target sizes and missing content descriptions. Manual testing complemented these automated tools by validating the user experience firsthand, ensuring the app is intuitive and accessible across both web and mobile platforms. These efforts helped us create an inclusive tool for users, particularly those who are blind, visually impaired, or neurodivergent.
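Color contrast is one of the checks these tools report, and it can also be computed directly when choosing highlight colors. The sketch below implements the WCAG 2.x contrast-ratio formula in Python: compute the relative luminance of each sRGB color, then take (L1 + 0.05) / (L2 + 0.05) with L1 the lighter color. The AA thresholds are 4.5:1 for normal text and 3:1 for large text. The helper names are ours, and the example colors are illustrative, not the app's palette.

```python
def _channel_to_linear(c8: int) -> float:
    c = c8 / 255
    # Piecewise sRGB linearization as written in the WCAG 2.0/2.1 definition.
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError(f"expected #RRGGBB, got {hex_color!r}")
    r, g, b = (_channel_to_linear(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: str, bg: str) -> float:
    # Order the luminances so the lighter color is always in the numerator.
    l1, l2 = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def meets_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For instance, mid-gray `#777777` text on white comes out at about 4.48:1 and fails AA for normal text, while the slightly darker `#767676` reaches about 4.54:1 and passes.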

Results

By the project’s completion, we successfully developed a functional prototype demonstrating all planned features. The app was tested for cross-platform compatibility and performed consistently across iOS, Android, macOS, and Windows. Preliminary user feedback highlighted the effectiveness of the replay and pause features, as well as the intuitive design of the playback controls.

Future Enhancements

The next steps for the project include:

- Expanding file format support (e.g., PDFs, rich text).

- Conducting a pilot study to gather comprehensive user feedback.

- Implementing automated checks for accessibility in uploaded texts.

Disability Justice Analysis

- Cross-Movement Solidarity: Everyone is unique, so when we address accessibility needs, it is not enough to consider one specific need; we should consider as many potential accessibility needs as we can. Although our implementation mainly targeted the needs of the BVI and neurodivergent communities, we also considered potential needs of other disabled communities, such as people with color blindness. We made sure the app does not convey information through color alone: the current line of text is shown in bold on a high-contrast background, so users can identify it without relying on color. In other words, beyond supporting screen readers (including ALT text), we also made smaller accommodations for other accessibility needs. When we design accommodations for a specific community, we should aim to accommodate the accessibility needs of all disabled communities.

- Intersectionality: Intersectionality recognizes that each person holds multiple identities, and when we accommodate accessibility needs, we may need to address all of them. Our goal is to make the app accessible to individuals who identify as both neurodivergent and BVI. Currently, popular text-to-speech apps are either accessible only to the BVI community (by adding ALT text and making the app work with screen readers) or accessible only to the neurodivergent community (by highlighting the word or sentence currently being spoken in color). Our app, however, considers the needs of individuals who are members of both 1) the neurodivergent community and 2) the BVI community. We also implemented support for different languages to address the needs of individuals from different language backgrounds. For example, someone who identifies as BVI and neurodivergent and only understands Chinese can also use our app. Therefore, our app contributes to the principle of intersectionality.

- Anti-Capitalist Politics: The principle of anti-capitalist politics calls for making systems accessible even when doing so is expensive or time-consuming (i.e., supposedly “not worth it”), and for resisting capitalist pressures so that people are free to choose what data they share instead of being forced to share their usage data. We aim to make the software open-source; by doing so, we give everyone an equal chance to access the software instead of commercializing or restricting access to it. Releasing the software as open source challenges the profit-driven models of proprietary software and capitalism. From the developer’s perspective, using and supporting open-source libraries, including maintaining open-source code, reduces dependence on capitalist corporations and their technologies and even offers a way to “fight” them. From the user’s perspective, because open-source code can be inspected, there is less room for “backdoors” or hidden data collection (or even “monitoring” of user activity) than in closed-source commercial software. The source code for our project is published in the UW CSE GitLab repository Pausable TTS.

Learning and Future Work

- Through implementing the final project, we learned that it is very hard to implement software accessibly, even when the original ideas and designs are accessible. There are many different aspects of accessibility to consider, and it is hard to cover all of them during implementation and testing: fixing one accessibility issue can cause another one to arise.

- Due to lack of time, we only tested our app on iOS and macOS. Because we intend the app to be cross-platform, testing on Windows, Android, and the Web is a clear next step. In the future, we would like to integrate unit tests and automated pipelines for accessibility testing, such as automatic checks for ALT text and for behavior on different platforms. Automated checks cannot replace manual testing, especially for user-experience issues, but they can reduce the workload of manual testing.

- We would also like to add more functionality to the app, such as support for file types other than .txt, including rich text formats such as Markdown. We may also run a pilot study to make sure that people with disabilities really benefit from the app we built.
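The automated checks mentioned above could start as simple rules run over a description of the UI. The Python sketch below audits a hypothetical tree of UI nodes for interactive elements with no label and for touch targets smaller than 48 by 48 (the minimum size Android's accessibility guidance recommends, in dp). The `Node` structure and `audit` function are illustrative assumptions; a real pipeline would read this information from the framework's accessibility tree instead.

```python
from dataclasses import dataclass, field
from typing import List

MIN_TARGET = 48.0  # Android's recommended minimum touch target size, in dp


@dataclass
class Node:
    """Hypothetical, simplified description of one UI element."""
    role: str
    label: str = ""
    width: float = 0.0
    height: float = 0.0
    interactive: bool = False
    children: List["Node"] = field(default_factory=list)


def audit(node: Node, path: str = "root") -> List[str]:
    """Recursively collect problems: missing labels and undersized touch targets."""
    problems = []
    if node.interactive:
        if not node.label.strip():
            problems.append(f"{path}: {node.role} has no label")
        if node.width < MIN_TARGET or node.height < MIN_TARGET:
            problems.append(
                f"{path}: {node.role} is {node.width:g}x{node.height:g}, below {MIN_TARGET:g}"
            )
    for i, child in enumerate(node.children):
        problems.extend(audit(child, f"{path}/{i}"))
    return problems
```

Rules like these catch regressions cheaply on every build, which frees manual testing to focus on the user-experience issues that automation cannot judge.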