Accessible Manga Reader

Introduction

Manga has become increasingly popular among readers who enjoy both storytelling and art. However, people with visual impairments often find manga inaccessible: most manga are drawn as pages made up of panels, with no captions or alt text provided. I aim to build an accessible manga reader that lets everyone read manga using a screen reader and keyboard. By mimicking the panel-by-panel experience of reading a real manga, the reader lets people enjoy manga not only as a story but also as art.

Positive Disability Principles

Intersectionality

This project recognizes that ableism often overlaps with other forms of oppression, like racism or sexism. By focusing on making manga accessible for visually impaired readers, it addresses a broader audience, including marginalized and multiply disabled communities.

Leadership of Those Most Impacted

This project centers on real needs and feedback from people with visual impairments. Their input shaped the design to address their needs directly.

Accessible Design and Outputs

The manga reader itself is accessible, allowing users to navigate through panels with a screen reader. However, some tools used in the project, such as OCR, cannot yet reliably handle all manga text and visuals, which limits full accessibility.

Agency and Control

This project gives visually impaired users more independence by allowing them to navigate and enjoy manga without relying on others. It empowers users to control how they experience art and storytelling.

Methodology and Results

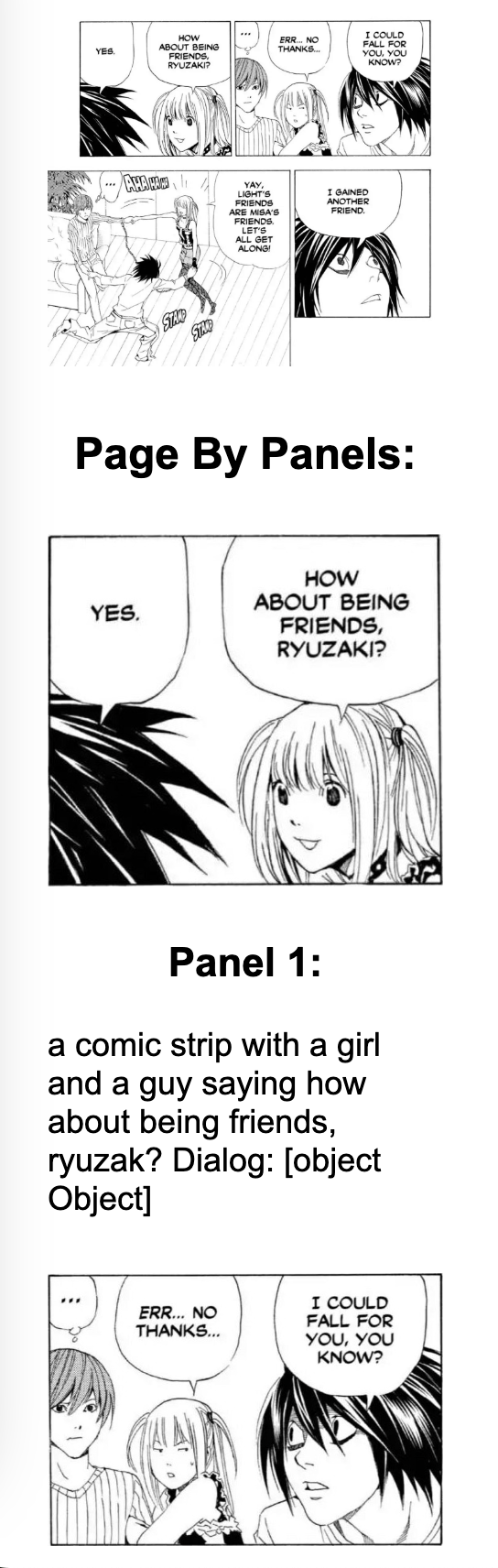

The Accessible Manga Reader provides panel-by-panel navigation for visually impaired users, enabling them to experience manga as intended. The project uses an existing manga panel segmentation algorithm implemented using OpenCV. This algorithm separates a manga page into panels by converting the image to grayscale, applying binary thresholding, and detecting contours. Irrelevant contours are filtered out, leaving only meaningful panels. These panels are then ordered from top to bottom and left to right, aligning with natural reading patterns. If no distinct panels are detected, the entire page is treated as a single panel.
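Contour detection itself is done by OpenCV (in OpenCV.js, for example, `cv.findContours` followed by `cv.boundingRect` on each contour). Assuming those bounding boxes are already available, the filtering, ordering, and whole-page fallback steps described above can be sketched in TypeScript as follows. The area thresholds, the row-grouping tolerance, and the `orderPanels` name are illustrative assumptions, not the parameters of the existing algorithm. The optional right-to-left mode is also an addition: traditional Japanese manga is read right to left, so ordering panels that way may match printed manga more closely.

```typescript
// Axis-aligned bounding box of one detected contour, in page pixels.
interface PanelBox {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Illustrative parameters (assumptions, not the project's tuned values).
interface PanelOptions {
  minAreaRatio: number; // drop boxes smaller than this fraction of the page
  maxAreaRatio: number; // drop boxes at least this large (usually the page frame)
  rowTolerance: number; // boxes whose top edges differ by at most this many px share a row
  rightToLeft: boolean; // traditional Japanese manga is read right to left
}

const defaultPanelOptions: PanelOptions = {
  minAreaRatio: 0.02,
  maxAreaRatio: 0.95,
  rowTolerance: 40,
  rightToLeft: false,
};

function orderPanels(
  boxes: PanelBox[],
  pageWidth: number,
  pageHeight: number,
  opts: PanelOptions = defaultPanelOptions,
): PanelBox[] {
  const pageArea = pageWidth * pageHeight;

  // Filter irrelevant contours: specks of noise and the full-page frame.
  const panels = boxes.filter((b) => {
    const ratio = (b.width * b.height) / pageArea;
    return ratio >= opts.minAreaRatio && ratio < opts.maxAreaRatio;
  });

  // Fallback: no distinct panels, so the whole page becomes a single panel.
  if (panels.length === 0) {
    return [{ x: 0, y: 0, width: pageWidth, height: pageHeight }];
  }

  // Group boxes into rows by their top edge, scanning from top to bottom.
  const byTop = panels.slice().sort((a, b) => a.y - b.y);
  const rows: PanelBox[][] = [];
  for (const box of byTop) {
    const current = rows[rows.length - 1];
    if (current !== undefined && Math.abs(box.y - current[0].y) <= opts.rowTolerance) {
      current.push(box);
    } else {
      rows.push([box]);
    }
  }

  // Order each row horizontally in the chosen reading direction.
  const dir = opts.rightToLeft ? -1 : 1;
  const ordered: PanelBox[] = [];
  for (const row of rows) {
    row.sort((a, b) => dir * (a.x - b.x));
    ordered.push(...row);
  }
  return ordered;
}
```

Grouping boxes into rows before sorting horizontally avoids the misordering that a plain sort by (y, x) produces when panels in the same row start a few pixels apart vertically.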

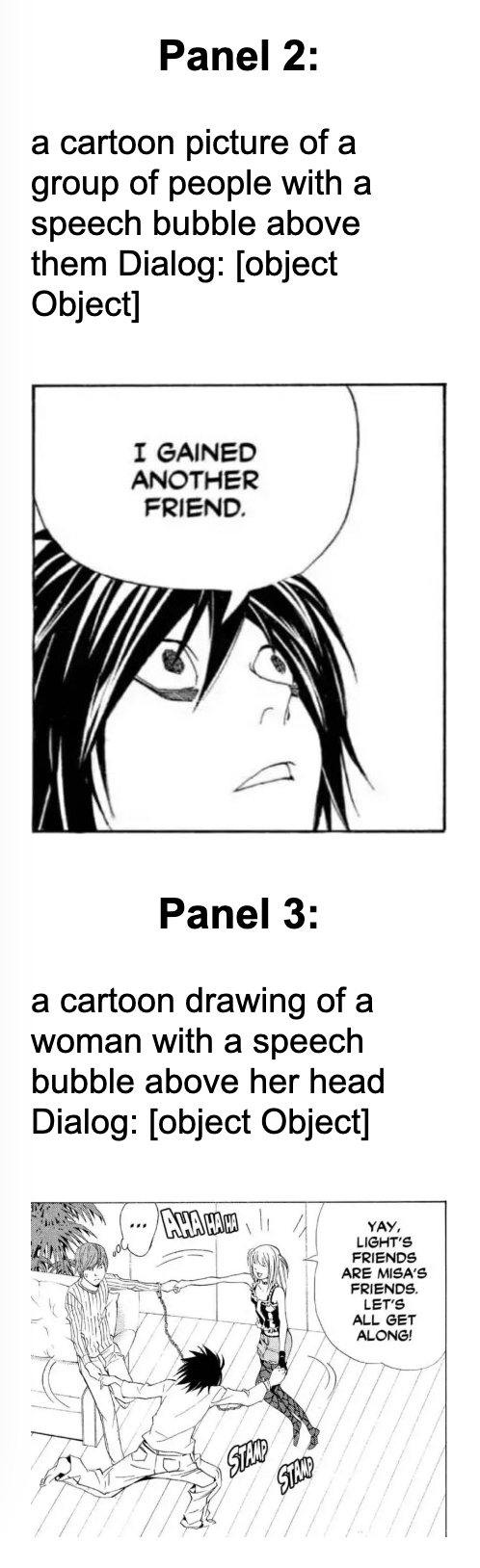

Each panel is described using a Large Language Model (LLM) to capture character actions, emotions, and scene details. However, most free image-captioning models are not trained on manga data, so they perform relatively poorly on the abstract, stylized art. To improve accuracy, we will probably need to train our own model on manga images or use a stronger paid model such as ChatGPT.

OCR (Optical Character Recognition) is applied to extract text from speech bubbles. Preprocessing techniques, including scaling and thresholding, improve text detection accuracy. Tesseract.js is used for OCR, enabling text recognition directly in the browser. Current challenges include grouping text correctly within speech bubbles and further improving detection accuracy.
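The exact preprocessing parameters are not given here. The sketch below shows one common way to implement scaling and thresholding on raw RGBA pixels, the layout a browser canvas's `ImageData` provides (`width`, `height`, and a `Uint8ClampedArray` of RGBA bytes). The scale factor of 2, the fixed threshold of 128, nearest-neighbour scaling, and the function names are assumptions chosen for illustration. Passing the result to Tesseract.js (for example after drawing it back onto a canvas) is not shown.

```typescript
// Minimal stand-in for a browser ImageData: RGBA bytes in row-major order.
interface RgbaImage {
  width: number;
  height: number;
  data: Uint8ClampedArray; // length = width * height * 4
}

// Single-channel image produced by the preprocessing steps.
interface GrayImage {
  width: number;
  height: number;
  pixels: Uint8ClampedArray; // one luminance byte per pixel
}

// Grayscale conversion using the common ITU-R BT.601 luma weights.
function toGrayscale(img: RgbaImage): GrayImage {
  const pixels = new Uint8ClampedArray(img.width * img.height);
  for (let i = 0; i < pixels.length; i++) {
    const r = img.data[4 * i];
    const g = img.data[4 * i + 1];
    const b = img.data[4 * i + 2];
    pixels[i] = Math.round(0.299 * r + 0.587 * g + 0.114 * b);
  }
  return { width: img.width, height: img.height, pixels };
}

// Nearest-neighbour upscaling by an integer factor, so that small
// speech-bubble lettering covers more pixels before OCR.
function upscale(img: GrayImage, factor: number): GrayImage {
  const width = img.width * factor;
  const height = img.height * factor;
  const pixels = new Uint8ClampedArray(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const srcX = Math.floor(x / factor);
      const srcY = Math.floor(y / factor);
      pixels[y * width + x] = img.pixels[srcY * img.width + srcX];
    }
  }
  return { width, height, pixels };
}

// Global binary threshold: pixels darker than `threshold` become black (0),
// everything else becomes white (255).
function binarize(img: GrayImage, threshold = 128): GrayImage {
  const pixels = img.pixels.map((v) => (v < threshold ? 0 : 255));
  return { width: img.width, height: img.height, pixels };
}

// Full pipeline: grayscale, then upscale, then threshold.
function preprocessForOcr(img: RgbaImage, factor = 2, threshold = 128): GrayImage {
  return binarize(upscale(toGrayscale(img), factor), threshold);
}
```

A single global threshold is the simplest choice; adaptive thresholding (for example OpenCV's `cv.adaptiveThreshold`) may cope better with screentones and uneven scans.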

Each panel's description combines the LLM-generated scene details with the OCR-extracted text, recreating the manga experience in an accessible format. This final goal can be reached in two ways. First, if a strong multimodal model such as the ChatGPT API becomes freely available to us, we could skip the OCR step entirely and let the model extract the dialog itself, merging it into the description it generates. Second, if we instead use other free image-to-text models or train our own, OCR must run separately; the OCR output and the image description can then be passed together to a text-to-text LLM that produces a full description covering the scene, the characters, and the dialog.
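The second route can be sketched as a small function that assembles the input for the text-to-text LLM from a panel's image description and its OCR lines. The `PanelInputs` shape, the prompt wording, and the noise filter are hypothetical, and the actual LLM call is left out because the text-to-text model has not been chosen yet.

```typescript
// Inputs gathered for one panel before the final text-to-text LLM call
// (hypothetical shape, for illustration only).
interface PanelInputs {
  pageNumber: number;
  panelIndex: number; // 0-based position in reading order
  sceneDescription: string; // output of the image-to-text model
  ocrLines: string[]; // raw OCR output, one entry per detected line
}

// Light clean-up of OCR output: collapse runs of whitespace and drop empty or
// single-character lines, which we assume are OCR noise.
function cleanOcrLines(lines: string[]): string[] {
  return lines
    .map((line) => line.replace(/\s+/g, " ").trim())
    .filter((line) => line.length > 1);
}

// Build the prompt asking a text-to-text LLM to merge scene and dialog.
function buildPanelPrompt(p: PanelInputs): string {
  const dialog = cleanOcrLines(p.ocrLines);
  const dialogBlock =
    dialog.length > 0
      ? dialog.map((line) => `- "${line}"`).join("\n")
      : "- (no text detected)";
  return [
    "You are describing one manga panel for a reader who uses a screen reader.",
    "Combine the scene description and the dialog into one short description",
    "covering the scene, the characters, and what they say.",
    "",
    `Page ${p.pageNumber}, panel ${p.panelIndex + 1}.`,
    `Scene description: ${p.sceneDescription.trim()}`,
    "Dialog text (from OCR, may contain errors):",
    dialogBlock,
  ].join("\n");
}
```

Telling the model that the dialog comes from OCR and may contain errors gives it room to repair obvious misreadings instead of repeating them verbatim.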

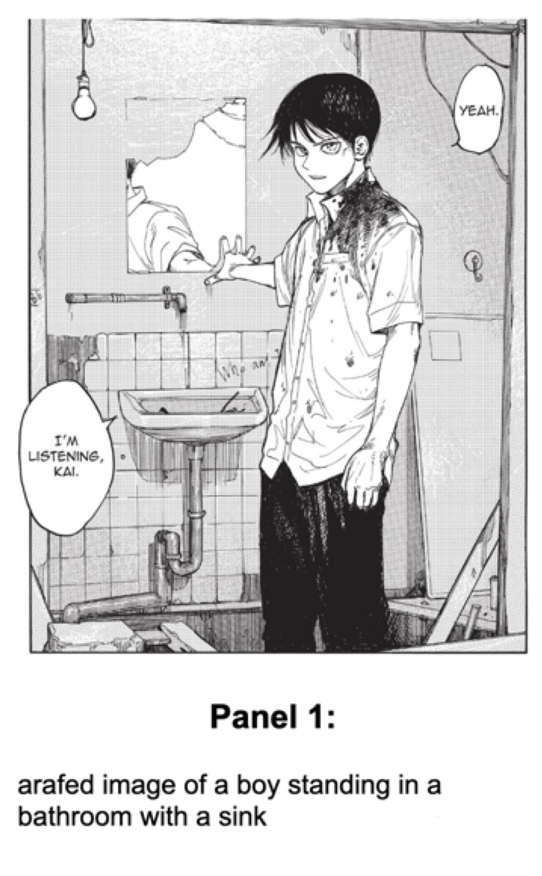

Panel Navigation Examples

Current results show successful panel segmentation and navigation. Improvements to OCR accuracy and text grouping are ongoing.
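How the reader wires up navigation is not described in detail. As a minimal sketch, assuming the arrow keys move between panels and each panel's text is written into an ARIA live region so that screen readers announce it, the navigation state could look like the following. The key bindings, the announcement wording, the `PanelNavigator` class, and the `announcer` element id are all hypothetical.

```typescript
interface ReaderPanel {
  description: string; // combined scene and dialog text for this panel
}

class PanelNavigator {
  private readonly panels: ReaderPanel[];
  private index = 0;

  constructor(panels: ReaderPanel[]) {
    if (panels.length === 0) {
      throw new Error("A page must contain at least one panel.");
    }
    this.panels = panels;
  }

  // Text announcing the current panel and its position on the page.
  announce(): string {
    const total = this.panels.length;
    return `Panel ${this.index + 1} of ${total}. ${this.panels[this.index].description}`;
  }

  // Apply one key press. Returns the text to announce, or null when the key
  // is not a navigation key (so the page can let the event through).
  handleKey(key: string): string | null {
    const last = this.panels.length - 1;
    switch (key) {
      case "ArrowRight":
        if (this.index === last) return "End of page.";
        this.index += 1;
        break;
      case "ArrowLeft":
        if (this.index === 0) return "Start of page.";
        this.index -= 1;
        break;
      case "Home":
        this.index = 0;
        break;
      case "End":
        this.index = last;
        break;
      default:
        return null;
    }
    return this.announce();
  }
}

// Browser wiring (illustrative; the "announcer" id is hypothetical):
// const live = document.getElementById("announcer"); // <div aria-live="polite">
// const nav = new PanelNavigator(panels);
// document.addEventListener("keydown", (event) => {
//   const message = nav.handleKey(event.key);
//   if (message !== null && live !== null) {
//     live.textContent = message;
//     event.preventDefault();
//   }
// });
```

Returning the announcement text instead of writing to the DOM directly keeps the navigation logic testable without a browser. For right-to-left manga, swapping the two arrow bindings may feel more natural; that is a choice worth testing with users.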

Disability Justice Analysis

Intersectionality

This project addresses intersectionality by making manga accessible to visually impaired readers, including those who also belong to other marginalized groups. By creating a tool that can help people with overlapping identities, it supports a more inclusive approach to accessibility.

Recognizing Wholeness

This project respects the right of visually impaired readers to experience manga fully, as both art and storytelling. It aims to let them enjoy manga in the same way as other readers, reflecting the belief that everyone deserves equal access to cultural experiences.

Leadership of Those Most Impacted

This principle highlights the importance of centering the voices of people most affected by a problem. The project involved feedback from visually impaired users during the design process. Their insights shaped the navigation system and descriptions, ensuring the tool addresses their specific needs effectively.

Collective Access

This project combines screen reader compatibility with detailed panel descriptions to create a flexible solution for visually impaired readers. However, OCR accuracy still needs improvement and currently limits full access to the text in speech bubbles.

Learnings and Future Work

Future plans include refining the LLM descriptions and integrating the dialog text with them to produce a full description of each manga page. With more time, we could add further computer vision algorithms to make the tool more powerful, for example recognizing and remembering the main characters and including their names when describing the scenes they appear in. I learned a lot building this tool (as a GitHub Pages website): for example, that it is possible to build a prototype of an assistive tool for people with disabilities from scratch, and that many existing tools can be used along the way. The project is feasible, and it will become more powerful as better free image-to-text models are developed.