By: Jason Kim, Nicholas Chung, Nathan Pao, Shaan Chattrath

The Problem

Home safety is a significant issue. People are often harmed by falls, cuts, or bumping into objects, yet most people do not take shared responsibility for keeping an environment safe, and safety issues usually go unaddressed until an accident happens. This puts both the homeowner and any visitors at risk. The problem is important to address because accidents like tripping over cables or stepping on toys are a major cause of hospital visits. People who are visually impaired face an even greater risk, since they may not notice or remember dangerous objects. Solving this issue is essential to making homes and other spaces safer for everyone and to preventing these types of accidents.

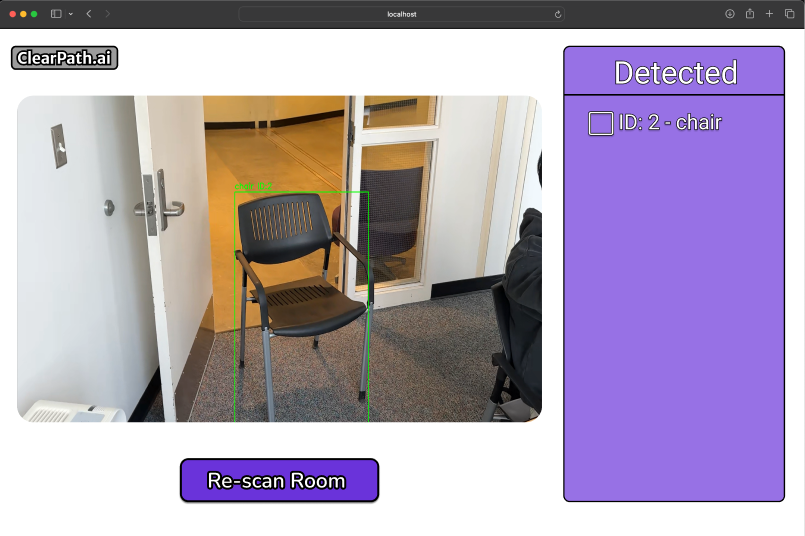

ClearPath.ai is an application that detects dangerous objects. A user scans the room with their phone camera, and once the scan is done, the app creates a list of the objects it found. The app then determines which of these objects could be harmful and adds them to a TODO list, which the user can work through to fix each issue. Our application is designed to help users improve their surroundings and create a safer, more inclusive environment for everyone.

Positive Disability Principles

We kept our application as accessible as possible by designing an intuitive, simple user interface, and we took additional steps so that people who rely on audio descriptions can still use the app effectively to keep their spaces safe. To verify accessibility, we ran accessibility tools and manually tested the application with assistive technologies such as screen readers and screen magnifiers. We believe that pairing visual object detection with auditory and haptic feedback makes our app more accessible. By providing timely warnings and information about potential hazards, the app empowers all users to make informed decisions and navigate their environment more independently, with the goal of improving agency and control for people with disabilities. The app also addresses broader community needs, potentially accommodating people with cognitive disabilities through clear, simple instructions that avoid complex language.

In future versions, we plan to add a feedback system where users can leave comments or ideas on how to make the application more inclusive. To become more involved with the disability community, we also plan to have future versions tested by individuals with disabilities to get more direct feedback. We hope to create a user interface that is fully navigable for people with visual impairments, so that accessibility features such as screen readers, voice controls, and haptic devices work with our application. We also plan to support a range of devices, possibly through a cross-platform app development framework, so that there are no barriers to using our technology. Finally, we aim to implement customization options to be even more inclusive of people with various disabilities.

Methodology and Results

ClearPath.ai is a user-friendly application that detects dangerous objects or hazards in the user's environment. When using ClearPath.ai, the user is prompted to take a quick scan of their surroundings with their phone camera. After the scan is complete, the app's algorithm takes the detected objects and filters out non-hazardous ones. For example, a laptop on a desk would be considered safe, whereas a cable running across the floor would be considered hazardous. After filtering, the application displays the detected hazards to the user as a TODO list. Users can then take their time to address each item and check it off once it is resolved.
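A minimal sketch of this filter-then-checklist flow might look like the following. It assumes the detector returns plain label strings and uses a hypothetical `HAZARDOUS_LABELS` set; ClearPath.ai's actual classification logic and Flutter code are not reproduced here.

```python
from dataclasses import dataclass, field

# Hypothetical hazard labels, for illustration only.
HAZARDOUS_LABELS = {"cable", "toy", "knife", "scissors"}


@dataclass
class TodoItem:
    label: str
    done: bool = False


@dataclass
class HazardChecklist:
    items: list[TodoItem] = field(default_factory=list)

    @classmethod
    def from_detections(cls, labels: list[str]) -> "HazardChecklist":
        """Keep hazardous labels only, one item per label, in the order first seen."""
        seen: set[str] = set()
        items = []
        for label in labels:
            if label in HAZARDOUS_LABELS and label not in seen:
                seen.add(label)
                items.append(TodoItem(label))
        return cls(items)

    def mark_done(self, label: str) -> None:
        """Check off the item for a hazard the user has addressed."""
        for item in self.items:
            if item.label == label:
                item.done = True

    def remaining(self) -> list[str]:
        """Labels of hazards that have not been checked off yet."""
        return [item.label for item in self.items if not item.done]


checklist = HazardChecklist.from_detections(["laptop", "cable", "toy", "cable"])
print(checklist.remaining())  # ['cable', 'toy']
checklist.mark_done("cable")
print(checklist.remaining())  # ['toy']
```

Here repeated detections of the same label collapse into a single TODO item, which keeps the list short; a real implementation might instead track each detected instance separately.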

We implemented ClearPath.ai using an object detection model together with a Large Language Model (LLM). For object detection, we used Ultralytics YOLO11, which was the latest pre-trained object detection model from Ultralytics at the time of writing. We chose YOLO11 for its accuracy, speed, and ease of integration with Flutter, which we used to build our mobile application. The model processes visual input from the phone's camera, detecting and classifying the objects it sees. During testing, we covered a variety of conditions, from well-lit indoor settings to dimly lit or cluttered environments, to check that the application remained functional in these scenarios.

We combined the object detection model with the LLM to achieve a higher level of contextual understanding, allowing ClearPath.ai to go beyond simple object identification and provide risk assessments and insights. Once the user finishes a scan, our algorithm assigns a risk value to each detected object using a dictionary. Meta's Llama 3 then describes the results in natural language and suggests preventive measures, while accessibility features such as text-to-speech and haptic feedback remain available throughout.
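The dictionary-based risk step could be sketched as below. The `RISK_VALUES` table, its 0 to 3 scale, and the prompt wording are hypothetical, and the call that sends the prompt to Llama 3 is omitted because it depends on how the model is hosted.

```python
# Hypothetical risk values (3 = most severe); labels not listed count as 0 (safe).
RISK_VALUES = {"knife": 3, "scissors": 3, "cable": 2, "toy": 2}


def score_detections(labels: list[str]) -> list[tuple[str, int]]:
    """Look up each distinct label's risk and keep only hazards, highest risk first."""
    scored = {label: RISK_VALUES.get(label, 0) for label in labels}
    hazards = [(label, risk) for label, risk in scored.items() if risk > 0]
    # Sort by descending risk, then alphabetically so ties have a stable order.
    return sorted(hazards, key=lambda pair: (-pair[1], pair[0]))


def build_prompt(hazards: list[tuple[str, int]]) -> str:
    """Turn scored hazards into a plain-text prompt for an LLM such as Llama 3."""
    lines = [f"- {label} (risk {risk}/3)" for label, risk in hazards]
    return (
        "These hazards were detected in a room:\n"
        + "\n".join(lines)
        + "\nDescribe each hazard in plain language and suggest how to fix it."
    )


hazards = score_detections(["laptop", "cable", "knife", "toy"])
print(hazards)  # [('knife', 3), ('cable', 2), ('toy', 2)]
print(build_prompt(hazards))
```

The laptop is dropped because it has no entry in the dictionary, and sorting by risk means the most severe hazards appear first in both the prompt and the resulting TODO list.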

After implementing the backbone of our application, we began improving the UI. First, we built a web version of the app covering all of the basic functionality. The web version was kept deliberately simple so that it could meet WCAG 2.1 success criteria such as 1.4.6 Contrast (Enhanced). We further refined the design by testing with screen readers to verify details such as a logical tab order, and by testing with screen magnifiers to confirm that these assistive technologies (ATs) do not interfere with functionality.
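Success Criterion 1.4.6 requires a contrast ratio of at least 7:1 for normal text and 4.5:1 for large-scale text, where the ratio is computed from the relative luminance of the foreground and background colors. A minimal sketch of that calculation, following the formulas given in WCAG 2.1, is shown below; the function names are illustrative.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.1 definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Relative luminance: 0.0 for black, 1.0 for white."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color's luminance."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def meets_enhanced_contrast(fg, bg, large_text: bool = False) -> bool:
    """WCAG 2.1 SC 1.4.6: at least 7:1, or 4.5:1 for large-scale text."""
    return contrast_ratio(fg, bg) >= (4.5 if large_text else 7.0)


print(round(contrast_ratio((0, 0, 0), (255, 255, 255)), 1))  # 21.0
```

Black on white gives the maximum ratio of 21:1, while a mid-grey such as `(119, 119, 119)` on white comes out just under 4.5:1 and would fail 1.4.6 even for large text.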

To make sure our mobile application adhered to accessibility standards, we ran a series of tests and made changes to improve touch targets, semantic labeling, and visual contrast. For touch targets, we ensured that every tappable node in the application was larger than 44 x 44 logical pixels on both Android and iOS. For UI elements such as buttons, we added appropriate semantic labels so that users who rely on assistive technologies can interact with and use our application. We also tested manually with screen readers and screen magnifiers, which helped us identify barriers for users of these assistive technologies and led to a more accessible application.
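In Flutter, checks like these are usually written as widget tests, for example `await expectLater(tester, meetsGuideline(androidTapTargetGuideline))`, where `androidTapTargetGuideline` requires 48 x 48 and `iOSTapTargetGuideline` requires 44 x 44, and `labeledTapTargetGuideline` checks that tappable nodes have labels. The Python sketch below only illustrates the comparison such an audit performs; the `TappableNode` type and the node data are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Minimum tap-target sizes in logical pixels, mirroring Flutter's
# androidTapTargetGuideline (48 x 48) and iOSTapTargetGuideline (44 x 44).
MIN_TAP_TARGET = {"android": 48.0, "ios": 44.0}


@dataclass
class TappableNode:
    name: str                    # hypothetical identifier used in reports
    width: float                 # logical pixels
    height: float                # logical pixels
    label: Optional[str] = None  # semantic label read aloud by screen readers


def audit(nodes: list[TappableNode], platform: str) -> list[str]:
    """Report nodes that are smaller than the platform minimum or lack a semantic label."""
    minimum = MIN_TAP_TARGET[platform]
    problems = []
    for node in nodes:
        if node.width < minimum or node.height < minimum:
            problems.append(
                f"{node.name}: {node.width:g}x{node.height:g} is below {minimum:g}x{minimum:g}"
            )
        if not node.label:
            problems.append(f"{node.name}: missing semantic label")
    return problems


nodes = [
    TappableNode("scan_button", 64, 64, "Start scan"),
    TappableNode("close_icon", 40, 40),
]
for problem in audit(nodes, "ios"):
    print(problem)
```

Keeping the thresholds in a per-platform table makes the stricter Android minimum explicit, so a node that passes on iOS (for example, 46 x 46) is still flagged on Android.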

Disability Justice Analysis

Cross-Disability Solidarity

The principle of cross-disability solidarity honors the insights and participation of all of our community members, knowing that isolation undermines collective liberation. ClearPath.ai incorporates this principle by prioritizing accessibility and inclusivity throughout its design, an approach that fosters greater participation from groups that are often marginalized in discussions about safety and technology. Cross-disability solidarity emphasizes building alliances and unity among people with different disabilities, recognizing that while individual experiences differ, the fight for justice, equality, and accessibility is shared. Our app primarily supports people with low vision, but it also aims to address the needs of people with intersecting disabilities. By addressing hazards in shared spaces, ClearPath.ai promotes collective well-being, ensuring that safety measures are not just for sighted individuals but for all members of a household or community, including those with physical, sensory, or cognitive impairments. This collaborative design reinforces the principle of breaking down isolation between people with varying disabilities, encouraging shared responsibility and mutual respect. Through this approach, we aim to assist a wide range of users from various backgrounds, acknowledging the diversity of needs within our community and working toward greater inclusion.

Interdependence

The principle of interdependence recognizes that we are all interconnected and reliant on each other. Although our app is a technological solution, it supports and empowers everyone within a household. In line with this principle, people with disabilities should be able to choose the types of support they need and want “without always reaching for state solutions”. For example, by improving the safety of people with low vision and empowering them to move more freely and safely, ClearPath.ai promotes their liberation. The app can also be seen as a form of mutual aid: it gives its users greater autonomy while reducing their reliance on constant assistance from others. The app thus exemplifies how technology can help us “meet each other’s needs”, supporting liberation across communities of all abilities.

Collective Access

The principle of collective access emphasizes flexibility and creativity in meeting diverse needs. It recognizes that everyone functions differently and that, although access needs can be met individually or collectively, the responsibility for meeting them is shared. It calls for a shared commitment to making our world accessible for everyone, not just those with disabilities. Our project embodies this principle by aiming to create an application that prioritizes safety for everyone. Although the app specifically benefits people with visual impairments, it contributes to public safety by raising awareness of hazardous objects in the environment through object detection and hazard prioritization. By making the app cross-platform, we hope to increase access for everyone interested in using it. The app is designed with the understanding that everyone functions differently, and it aims to create a safer environment for people with vision impairments. In building it, we take on our share of the responsibility for fostering safe environments, and we hope to increase collective access to safer spaces by detecting hazards for everyone.

Areas We May Fail to Address

The app could fall short of fully addressing the needs of people with certain disabilities if their specific safety challenges are not explicitly part of its functionality. For example, people with other disabilities may encounter hazards that require detection methods or tailored guidance that the current version of the app does not provide. To better embody the principle of cross-disability solidarity, ClearPath.ai could add customizable hazard identification, support more diverse modes of communication, and seek more input from the disability community. By deepening its commitment to inclusivity, the app could further dismantle barriers and contribute to collective liberation from ableism and isolation.

Learnings and Future Work

Developing ClearPath.ai taught us the importance of understanding the challenges involved in creating an accessible and effective mobile hazard detection application. From the beginning, accessibility was not an add-on feature but a foundational aspect of the design, which required us to think critically about user needs and the best ways to implement each feature. By implementing features such as voice guidance, haptic feedback, and accessible reporting formats, we hope to make our app truly inclusive. A key lesson we learned was to stay open to exploring different solutions. Feedback and iteration were crucial in refining our initial vision of the app, helping us move from a broad idea of hazard detection to a more focused, practical solution that addresses real-world challenges. This iterative process also shaped the algorithm that decides whether an object is classified as dangerous: we experimented with multiple approaches to classifying objects and assigning risk levels, ultimately developing a method that balances accuracy with usability.

One of the more challenging aspects was determining which frameworks, packages, and libraries to use. We explored and evaluated various options, balancing factors like performance, ease of integration, and compatibility with accessibility standards. Some of our decisions required trade-offs, and we often found ourselves revisiting earlier choices as our understanding of the problem space deepened. Looking ahead, we feel that there’s still ample opportunity to build on what we’ve achieved. We can further refine the hazard detection algorithm to improve both speed and accuracy, expand the range of supported environments, and explore integrations with more advanced AI models. Future development might also focus on creating a seamless ecosystem of mobile and web applications, ensuring users can access ClearPath.ai’s features on any platform. These next steps would be guided by more user feedback and iterative improvement.