Final Project Handin: Alt Mate

Plain Language Introduction

Many websites have images without alt text. Screen readers cannot describe these images to screen reader users. Even if websites do include alt text, it is often written in complicated language. This makes it hard for screen reader users to understand what is on the page.

There are tools to generate alt text for images, but they are mostly made for web developers. These tools let developers upload images and generate alt text for them. However:

- Developers must manually add the alt text to the website. If developers skip this step, their website is still inaccessible to screen reader users.
- These tools take in one image at a time. This is slow if developers want to create alt text for many images.
- Alt text should match the context of the image. This means that an image may need different alt text depending on how it is used on different pages. Most tools do not use context. Tools that do use context often require developers to add descriptions manually.

Alt Mate solves these problems for developers and screen reader users by scanning web pages for images without alt text. Then, Alt Mate generates plain language alt text using the image and its surrounding context. This context is the raw HTML code around the image. For developers, Alt Mate’s Chrome Extension also displays all images and their alt text. This helps them review all images and alt text in one place. Alt Mate also automatically updates the webpage’s code locally with the generated alt text. This allows screen readers to read out descriptions of the images.

Alt Mate makes websites more accessible even if developers didn’t originally focus on accessibility. For developers who want to improve accessibility, Alt Mate makes it faster to add alt text. This encourages more developers to make their websites accessible.

Positive Disability Principles

Ableist?

Alt Mate challenges ableist design by addressing an accessibility gap: the lack of sufficient alt text on images on webpages. For screen reader users, missing alt text can exclude them from understanding web content. We do not consider this project ableist because we designed it with inclusive principles. We used several assistive technologies (ATs) throughout development and drew on prior research to justify our technical decisions. By considering past research, we aimed to avoid ableist design in our final project.

Accessible in part or as a whole?

We consider this project accessible as a whole because Alt Mate provides a way to understand image content that would otherwise be inaccessible.

Disability led?

This project is not disability led, as no one on our team uses screen readers or has a disability that might lead us to use them.

Being used to give control and improve agency for people with disabilities

Yes, this is being used to give control and improve agency for people with disabilities. Alt Mate generates alt text descriptions for images that would otherwise have none. In doing so, Alt Mate gives more of a site’s images alt text, so that people who use screen readers have more of the page available to interpret.

Addressing the whole community

Yes, this addresses the whole community of people who are blind or have low vision, as well as screen reader users, who may come from many different backgrounds and communities.

Methodology and Results

For our project Alt Mate, we designed and implemented a browser extension that automatically generates alt text for images with missing alt text. Our goal was to create a tool that makes web content more accessible for screen reader users. We also hoped the extension would encourage web developers to prioritize alt text on their sites, since it detects all images on a site that are missing alt text.

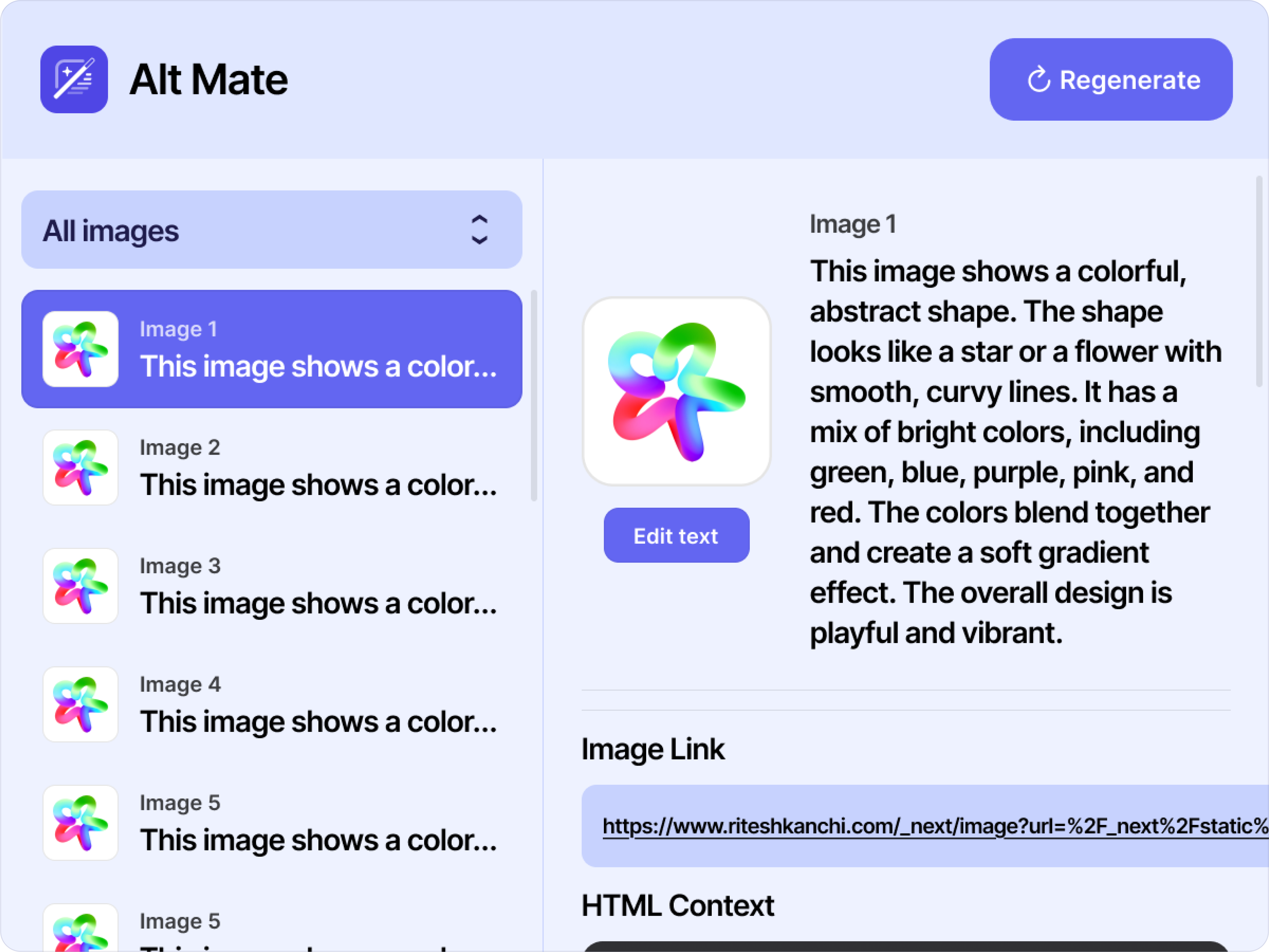

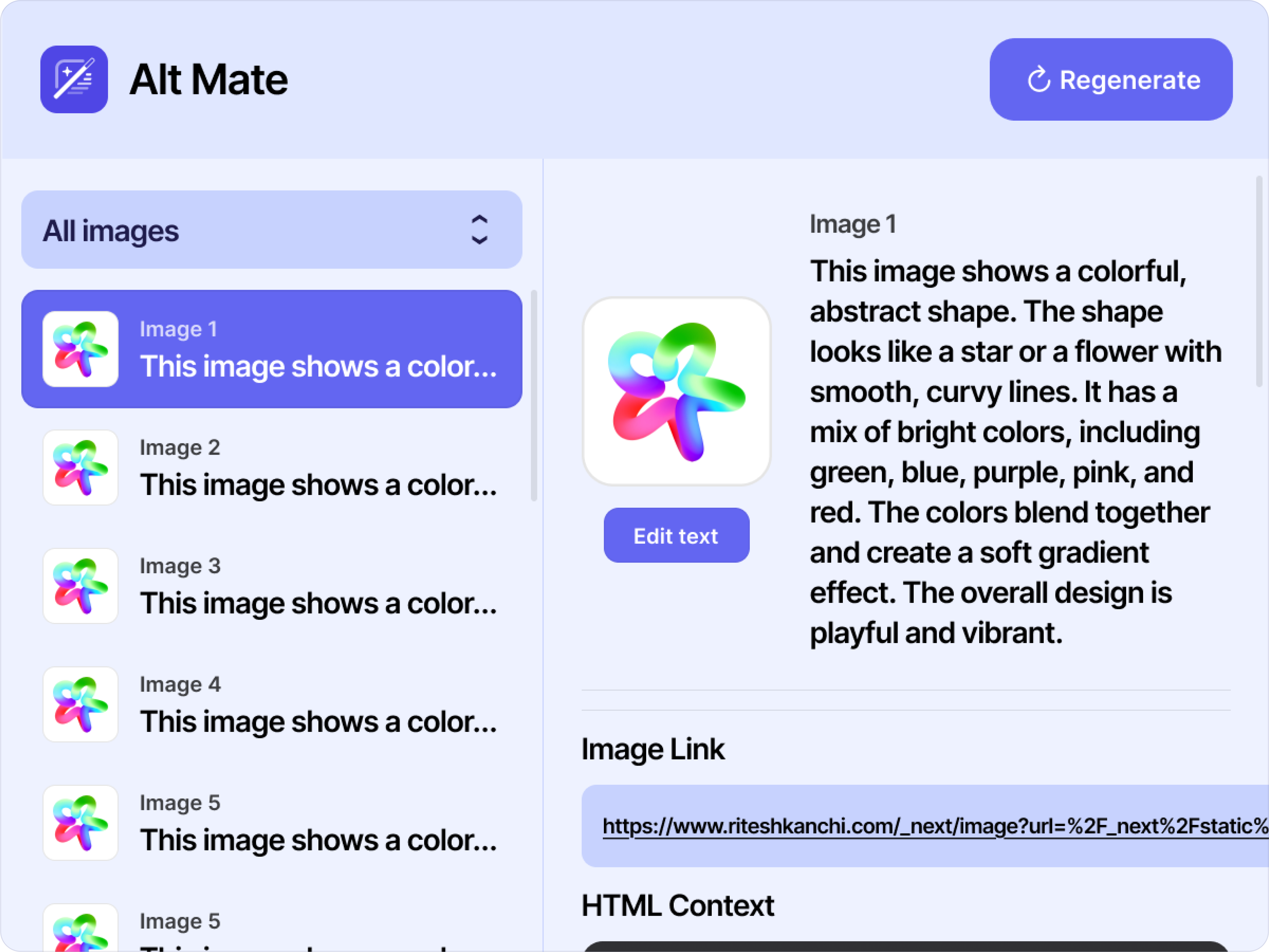

The first step of Alt Mate is opening the Chrome Extension. When opened, it scrapes every image on the page, retrieving the following from each image element: the URL of the image, the alt attribute, and 300 characters of HTML source code above and below the image. We collect the surrounding HTML source code because an image’s context matters: its meaning depends on how it’s used and on what’s around it. For example, an 8x8 Facebook logo next to other social media icons in the footer serves a different purpose from a 100x30 Facebook logo in the navbar. After collecting this data, we check each image’s alt attribute to see if it’s empty or doesn’t exist. If so, we begin the alt text generation process.
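The scraping and flagging step above can be sketched as a pure function over a page’s HTML string. This is a minimal sketch, assuming a simplified regex-based tag scan so that it runs outside a browser; `ScrapedImage`, `scrapeImages`, and `needsAltText` are hypothetical names, and an actual extension would more likely read the `<img>` elements from the page’s DOM.

```typescript
// Hypothetical shape of the data collected for each image.
interface ScrapedImage {
  index: number;       // unique index, used later to find the image again
  src: string;         // image URL
  alt: string | null;  // null when the alt attribute is absent
  context: string;     // raw HTML surrounding the image
}

// Number of HTML characters kept above and below each image.
const CONTEXT_CHARS = 300;

// Reads one attribute from an <img> tag (simplified: quoted values only, not a real HTML parser).
function getAttr(tag: string, name: string): string | null {
  const match = new RegExp(`\\s${name}\\s*=\\s*("([^"]*)"|'([^']*)')`, "i").exec(tag);
  if (match === null) return null;
  return match[2] !== undefined ? match[2] : match[3];
}

// Collects every <img> tag with its URL, alt attribute, and surrounding HTML.
function scrapeImages(html: string): ScrapedImage[] {
  const images: ScrapedImage[] = [];
  const imgTag = /<img\b[^>]*>/gi;
  let m: RegExpExecArray | null;
  while ((m = imgTag.exec(html)) !== null) {
    const start = m.index;
    const end = start + m[0].length;
    images.push({
      index: images.length,
      src: getAttr(m[0], "src") ?? "",
      alt: getAttr(m[0], "alt"),
      // Clamp the window so images near the start or end of the page still work.
      context: html.slice(Math.max(0, start - CONTEXT_CHARS), Math.min(html.length, end + CONTEXT_CHARS)),
    });
  }
  return images;
}

// An image is flagged when its alt attribute is missing or empty.
function needsAltText(image: ScrapedImage): boolean {
  return image.alt === null || image.alt.trim() === "";
}
```

Because the context is a fixed character window rather than a DOM subtree, it may cut through tags; that keeps the sketch simple at the cost of occasionally sending partial markup.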

The alt text generation process runs once per image, for each image we flagged as not having alt text. First, we contact the OpenAI API and create a thread, which is similar to a conversation. We then send a message to that thread containing the image source URL and the context we collected. After the message is queued, we run the thread and wait for the run to complete; here, we’re waiting on a response from the OpenAI assistant we instructed. Once the run completes, we retrieve the alt text and repeat the process for the next image.
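The per-image thread, message, run, and wait sequence described above can be sketched as follows. This is a hedged sketch: `AssistantClient` is a hypothetical interface standing in for an OpenAI Assistants API client (its method names are not the SDK’s), and the status strings are modeled on OpenAI’s run statuses. Images are processed one at a time, matching the sequential loop described above.

```typescript
// Hypothetical client interface; in practice each method would wrap an OpenAI API call.
interface AssistantClient {
  createThread(): Promise<string>;                                 // returns a thread id
  addMessage(threadId: string, content: string): Promise<void>;   // queues a user message
  startRun(threadId: string): Promise<string>;                     // runs the assistant, returns a run id
  getRunStatus(threadId: string, runId: string): Promise<string>; // e.g. "queued", "in_progress", "completed"
  getLatestReply(threadId: string): Promise<string>;               // text of the assistant's newest message
}

interface FlaggedImage {
  index: number;
  src: string;
  context: string;
}

const TERMINAL_FAILURES = ["failed", "cancelled", "expired"];

// Generates alt text for one image: create a thread, send a message, run it, then poll until done.
async function generateAltText(
  client: AssistantClient,
  image: FlaggedImage,
  pollDelay: () => Promise<void>, // e.g. a setTimeout-based sleep inside the extension
  maxPolls = 60,
): Promise<string> {
  const threadId = await client.createThread();
  await client.addMessage(threadId, `Image URL: ${image.src}\nSurrounding HTML:\n${image.context}`);
  const runId = await client.startRun(threadId);
  for (let attempt = 0; attempt < maxPolls; attempt++) {
    const runStatus = await client.getRunStatus(threadId, runId);
    if (runStatus === "completed") return client.getLatestReply(threadId);
    if (TERMINAL_FAILURES.indexOf(runStatus) !== -1) {
      throw new Error(`Run for image ${image.index} ended with status "${runStatus}"`);
    }
    await pollDelay();
  }
  throw new Error(`Timed out waiting for the run for image ${image.index}`);
}

// Processes flagged images sequentially and returns alt text keyed by image index.
async function generateAll(
  client: AssistantClient,
  images: FlaggedImage[],
  pollDelay: () => Promise<void>,
): Promise<Map<number, string>> {
  const results = new Map<number, string>();
  for (const image of images) {
    results.set(image.index, await generateAltText(client, image, pollDelay));
  }
  return results;
}
```

Running the images concurrently (for example with `Promise.all`) would likely be faster, but the sequential loop is simpler and less likely to run into API rate limits.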

Once alt text has been generated for all the images, we do two things. First, we inject the generated alt text back into the page for every image. During the generation stage, all images without alt text say “Loading from Alt Mate!”; this placeholder is now replaced with the generated alt text. Every image is associated with a unique index, which tells us which images to update. Second, we populate the Alt Mate popup. The popup no longer shows a “Processing” label and instead shows information about every image: the image itself, its alt text (and whether it was generated by Alt Mate), the image URL, and the surrounding context of the image. This way, individuals can see what was used to generate the image’s alt text.
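The placeholder and popup steps can be sketched as plain data transformations keyed by each image’s unique index. This is a minimal sketch under assumptions: `PageImage`, `PopupRow`, `withPlaceholders`, and `buildPopupRows` are hypothetical names, and in the extension itself the final text would be written to the page’s `<img>` elements (for example by setting each element’s `alt` property) rather than returned as arrays.

```typescript
const PLACEHOLDER = "Loading from Alt Mate!";

// Hypothetical record for an image found on the page.
interface PageImage {
  index: number;       // unique index assigned while scraping
  src: string;
  alt: string | null;  // original alt attribute, null if absent
  context: string;
}

// One popup row: the image, its alt text, where that text came from, and the context used.
interface PopupRow {
  index: number;
  src: string;
  alt: string;
  generatedByAltMate: boolean;
  context: string;
}

// During generation: images without alt text show the placeholder; others keep their alt text.
function withPlaceholders(images: PageImage[]): string[] {
  return images.map((img) => (img.alt !== null && img.alt.trim() !== "" ? img.alt : PLACEHOLDER));
}

// After generation: replace placeholders with generated text and build the popup rows.
function buildPopupRows(images: PageImage[], generated: Map<number, string>): PopupRow[] {
  return images.map((img) => {
    const generatedAlt = generated.get(img.index);
    return {
      index: img.index,
      src: img.src,
      alt: generatedAlt !== undefined ? generatedAlt : (img.alt ?? ""),
      generatedByAltMate: generatedAlt !== undefined,
      context: img.context,
    };
  });
}
```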

For testing, we relied heavily on manual testing across many different web pages to find Alt Mate’s strengths and weaknesses. Some example websites include Five Guys, Emerald City Athletics, and the UW Department of Biology. We saw that Alt Mate was reading through images of calendars and generating markdown equivalents. We also saw Alt Mate processing images at full size even when they were rendered at much smaller sizes, where full-scale processing was unnecessary. In response, we slightly updated the assistant’s instructions as well as our post-processing to reduce these shortcomings.
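The exact post-processing changes are not described here, so the following is only a hypothetical sketch of one way to handle the calendar case: flattening markdown-style output (headings, list markers, emphasis, and table syntax) into plain text and capping its length. `postProcessAltText` and its 250-character default limit are assumptions for illustration, not Alt Mate’s actual code.

```typescript
// Hypothetical post-processing step that turns markdown-like model output into plain text.
function postProcessAltText(raw: string, maxLength = 250): string {
  const text = raw
    .split("\n")
    // Drop markdown table separator rows such as |---|---| and horizontal rules.
    .filter((line) => !/^\s*\|?\s*:?-{3,}/.test(line))
    // Drop leading heading and list markers such as "#", "-", "*", or "1.".
    .map((line) => line.replace(/^\s*(#{1,6}|[-*+]|\d+\.)\s+/, ""))
    .join(" ")
    .replace(/[*`]/g, "")      // drop emphasis and inline code markers
    .replace(/\s*\|\s*/g, " ") // turn table cell borders into spaces
    .replace(/\s+/g, " ")      // collapse runs of whitespace
    .trim();
  if (text.length <= maxLength) return text;
  // Cut at the limit, leaving room for an ellipsis.
  return text.slice(0, maxLength - 1).replace(/\s+$/, "") + "…";
}
```

For example, a calendar rendered as a markdown table with a `# March 2024` heading would come out as a single line such as `March 2024 Mon Tue 1 2 ...`; tightening the assistant’s instructions, as described above, is what keeps such output short in the first place.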

Ultimately, Alt Mate addresses a critical accessibility gap by generating alt text for images with missing descriptions, making web content more accessible for screen reader users while also encouraging developers to prioritize alt text on their sites. Our design focuses on context-based alt text generation so that the generated alt text is relevant to the site the user is on.

Disability Justice Analysis

Commitment to Cross-Disability Solidarity

Commitment to Cross-Disability Solidarity means that individuals with different disabilities can come together as one to fight the ableism and inequities they face collectively. This involves designing with an understanding of the needs of people with multiple disabilities, and ensuring that the accessibility of one group is not overlooked while addressing the needs of another. The original focus of our project was solely on generating alt text. However, limiting ourselves to this goal would exclude blind or low-vision (BLV) individuals who may also have cognitive or learning disabilities, as well as users who are not BLV but rely on screen readers because of learning disabilities. Because of this, our project not only generates alt text but also aims to create descriptions that align with plain language principles, making content more accessible across disabilities.

Collective Access

Collective Access recognizes that we all interact with our environments differently, whether in body or mind. However, no individual should be given access to lesser resources than another simply because of these differences, as everyone deserves the opportunity to engage with the world in ways that are equitable to their needs. On websites that contain images with no alt text, these needs are overlooked for individuals who rely on screen readers. Our project embodies the principle of Collective Access by ensuring that these users, too, can engage fully with online experiences, making digital content more inclusive of varying abilities.

Anti-capitalist Politics

To be anti-capitalist is to refuse the values of a capitalist economy that prioritizes profit over people. By being free to use, our project embodies this principle, challenging the profit-driven motives of a capitalist society focused on financial gain. In such a system, when money becomes the focus, accessibility is often disregarded or overlooked because of the extra time and expertise it requires, resources that some believe are better allocated to areas seen as more profitable. As a result, individuals such as those who use screen readers are left with unequal access to online resources. We push against this model by working to make online content available to all, regardless of ability. This is especially important for information that affects daily life, whether health, education, employment, or other essential areas from which the disability community has historically been marginalized. In doing so, our project helps disrupt the traditional power dynamics that capitalism has reinforced.

Learnings and Future Work

One of the biggest takeaways from our project was recognizing that “good enough” descriptions aren’t always sufficient: users need a way to improve alt text when a description falls short. In the future, we would like to add the ability to regenerate alt text in case users want a different description for an image. This would also help when the generated alt text is incomplete for some reason, giving users control when things go wrong.

In addition, our current design only generates alt text for images that are completely missing it. We hope to expand this to images with insufficient alt text, such as “Image”, using a word count threshold or another heuristic.
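One way to flag insufficient alt text, combining a list of generic placeholder words with a minimum word count, is sketched below. The word list, the file-name pattern, the default `minWords` threshold of 2, and the name `isInsufficientAlt` are all assumptions for illustration.

```typescript
// Hypothetical list of alt values that say nothing about the image.
const GENERIC_ALT = ["image", "img", "photo", "picture", "graphic", "icon", "logo", "untitled"];

// Returns true when alt text is missing, empty, generic, a bare file name, or shorter than minWords.
function isInsufficientAlt(alt: string | null, minWords = 2): boolean {
  if (alt === null) return true;
  const normalized = alt.trim().toLowerCase();
  if (normalized === "") return true;
  if (GENERIC_ALT.indexOf(normalized) !== -1) return true;
  // File names such as "IMG_1234.jpg" or "banner-final.png".
  if (/^[\w-]+\.(jpe?g|png|gif|webp|svg)$/.test(normalized)) return true;
  const wordCount = normalized.split(/\s+/).length;
  return wordCount < minWords;
}
```

A word count alone is a blunt tool: with `minWords = 2`, a legitimate one-word alt such as a brand name would also be flagged for regeneration, so the threshold would need tuning (or lowering to 1 so that only the generic-word and file-name checks apply).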

We also learned how essential context is when generating alt text. If we send only the image, without considering parent and sibling elements, the generated descriptions fall short of capturing the image’s full meaning. This learning drove us to send contextual information along with the image data to OpenAI’s API, so that the generated alt text would be more accurate and useful.

OpenAI API calls are expensive, especially at scale. While testing our design, we found it particularly frustrating that we were regenerating alt text over and over for sites we had already visited. In the future, we would like to optimize this with some form of caching. One way to implement this is to store the URL of each image and its generated alt text in a key-value store, so that future visitors to the same page won’t trigger additional API calls and will instead immediately receive the previously generated alt text. We think this would not only significantly reduce API costs but also speed up the user experience, since users wouldn’t need to wait for the same alt text to be regenerated each time.
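The caching idea above can be sketched as a wrapper that checks a key-value store before calling the API. This is a minimal sketch, assuming an in-memory `Map` stands in for whatever shared key-value store would actually let future visitors reuse results; `AltTextCache` and its `generate` callback are hypothetical names.

```typescript
// Hypothetical cache wrapper: calls `generate` (the expensive API call) only on a cache miss.
class AltTextCache {
  // Stand-in for a shared key-value store, keyed by image URL.
  private store = new Map<string, string>();
  private apiCalls = 0;
  private generate: (imageUrl: string) => Promise<string>;

  constructor(generate: (imageUrl: string) => Promise<string>) {
    this.generate = generate;
  }

  async getAltText(imageUrl: string): Promise<string> {
    const cached = this.store.get(imageUrl);
    if (cached !== undefined) return cached; // hit: no API call and no waiting
    this.apiCalls++;
    const altText = await this.generate(imageUrl);
    this.store.set(imageUrl, altText);
    return altText;
  }

  // Number of times the underlying generator was actually called.
  get callCount(): number {
    return this.apiCalls;
  }
}
```

Because the same image can need different alt text in different places (as the Facebook logo example earlier shows), keying on the image URL alone would reuse one description everywhere; a key that combines the page URL and the image URL may be the safer choice.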

Another area for development is keeping threads open so that users can continue interacting with the OpenAI API about an image’s content. This would introduce the potential for a Q&A format where users could ask questions about images on a webpage. For example, users might ask, “What is the person in this image doing?” This would extend our use case beyond simple alt text generation.
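Keeping a thread open for follow-up questions could look like the sketch below, where each question is added to the image’s existing thread so that the assistant keeps the earlier context. `ThreadClient` is a hypothetical interface rather than the OpenAI SDK, and `ImageConversation` is an illustrative name.

```typescript
// Hypothetical client interface; each method would wrap an OpenAI Assistants API call.
interface ThreadClient {
  addMessage(threadId: string, content: string): Promise<void>;
  runAndWait(threadId: string): Promise<string>; // runs the assistant and returns its reply
}

// One ongoing conversation about a single image, reusing the thread that produced its alt text.
class ImageConversation {
  private client: ThreadClient;
  private threadId: string;
  readonly history: { question: string; answer: string }[] = [];

  constructor(client: ThreadClient, threadId: string) {
    this.client = client;
    this.threadId = threadId;
  }

  // Sends a follow-up question on the same thread and records the answer.
  async ask(question: string): Promise<string> {
    await this.client.addMessage(this.threadId, question);
    const answer = await this.client.runAndWait(this.threadId);
    this.history.push({ question, answer });
    return answer;
  }
}
```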

In the long term, we see Alt Mate as more than just a tool for end users. It could also function as an accessibility audit tool, helping web developers identify images with missing or unhelpful alt text on their sites. By making alt text visibility a core feature and goal of our extension, we hope to drive better accessibility practices across the web for users and developers alike.