3D Drawing in VR

A new choice of social-distancing entertainment

Daniel Lyu and Lily Zhao

University of Washington

Abstract

This project is a VR 3D drawing app. Using the two controllers of a VR headset, the user can draw 3D sketches inside a background environment of their choice. The drawing is truly three-dimensional, and the canvas is effectively the entire 360-degree space inside the environment. The user controls the distance at which strokes are placed, so the drawing is not limited by the space the user can physically move in; the app can be used while standing in one spot the whole time.

In the app, the user can use the controllers to place strokes, change the color, change the stroke width, and change the distance at which strokes are placed.

Introduction

With the coronavirus spreading around the world, there is an urgent need for new forms of entertainment that can be accessed at home and enjoyed under social distancing while still being fun and relaxing. Virtual reality seems like a good starting point for projects with these properties. Since our final project fell at this moment, we decided to build something that contributes in this direction.

After considering several outdoor activities, we settled on one that is commonly seen in parks in China: elders practicing calligraphy on the ground or on walls, writing with huge Chinese writing brushes dipped in water. Inspired by this, we decided to build an app that recreates the activity in VR. Rather than focusing only on calligraphy, we extended the project so that the user can draw anything, in any color, in 3D space. For a better user experience, and because the app is meant as entertainment, we also made the background environment changeable. The user decides where they are while drawing by loading different environment models, so they can draw in the mountains, on the beach, or deep in the ocean without leaving their room.

The core idea of our implementation is to place strokes at a specific distance from the controller, at a point on a virtual laser line extending from the controller. Because that distance is controllable, strokes can be placed anywhere in the environment. The experience is like holding a very long drawing brush whose length is adjustable. Since the “virtual brush” has no weight regardless of its length, this works well.

Because the drawing is 3D, the user can also save the scene after drawing and walk around in the newly decorated environment. With this feature, the app could be extended to uses such as virtual room decoration.

Contributions

- The main contribution is that the user can draw true, full-range 3D figures without walking around.

- The user can draw in different virtual environments and the drawing is integrated into the environment.

- The implemented strokes closely resemble Chinese writing-brush strokes, so the app can also be used to practice Chinese calligraphy.

Method

The first step is to implement a way of drawing continuous strokes, and there are several ways to do so. We first tried drawing a continuous series of circles, which is the easiest approach. However, circles are 2D, so the result is not ideal: even if every circle faces the user, the stroke looks fine only while the user stands still and looks distorted as soon as the user moves. We then tried drawing spheres, which are 3D but have an odd-looking surface when illuminated. We finally decided to implement the brush strokes by generating meshes and rendering the stroke shape from them. This way, the brush strokes have an actual shape and texture.
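One common way to build a stroke mesh is as a strip of quads, one quad per pair of consecutive stroke points. The sketch below illustrates that idea in plain Python rather than the project's Unity C# code, and it is an assumption about the general technique, not the implementation used here. The names `stroke_quads`, `width`, and `normal` are hypothetical.

```python
import math

def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def _scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def stroke_quads(points, width, normal):
    """Return one quad (four corner points) per consecutive pair of points.

    `normal` is a hypothetical reference direction the quad should face,
    for example the direction from the canvas toward the user.
    """
    quads = []
    half = width / 2.0
    for p0, p1 in zip(points, points[1:]):
        direction = _sub(p1, p0)
        # The sideways axis is perpendicular to both the segment and the normal.
        side = _cross(direction, normal)
        length = math.sqrt(sum(c * c for c in side))
        if length == 0.0:
            continue  # zero-length segment, or segment parallel to the normal
        offset = _scale(side, half / length)
        quads.append((_sub(p0, offset), _add(p0, offset),
                      _add(p1, offset), _sub(p1, offset)))
    return quads
```

For example, `stroke_quads([(0, 0, 0), (1, 0, 0)], 0.2, (0, 0, 1))` yields a single quad that is 0.2 units wide and centered on the segment. In a mesh renderer, each quad would typically be split into two triangles.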

With the strokes in place, we next considered how to position them. As described in the introduction, we made the right-hand controller act as a very long brush with a specified length. A continuous stroke drawn at this distance lies on a sphere whose radius is that distance from the controller. We therefore define an imaginary sphere whose radius is the drawing distance, and when the user starts to place strokes, we compute the intersection point of the line extending from the controller with this imaginary sphere. These points are then joined to form one stroke.
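The intersection step can be sketched as follows. This is a minimal illustration in plain Python, not the project's Unity C# code, and it assumes a general sphere center so that the canvas does not have to be centered on the controller. The function name `ray_sphere_hit` and its parameters are hypothetical.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Return the point where a ray leaves a sphere, or None if it misses.

    The larger root is used because the ray is assumed to start inside
    the sphere, so the exit point is the one on the canvas.
    """
    length = math.sqrt(sum(d * d for d in direction))
    if length == 0.0:
        return None  # no direction to follow
    d = tuple(x / length for x in direction)  # unit direction
    oc = tuple(o - c for o, c in zip(origin, center))

    # Solve t^2 + 2*b*t + c_term = 0 for the distance t along the ray.
    b = sum(x * y for x, y in zip(oc, d))
    c_term = sum(x * x for x in oc) - radius * radius
    disc = b * b - c_term
    if disc < 0.0:
        return None  # the line never touches the sphere

    t = -b + math.sqrt(disc)
    if t < 0.0:
        return None  # the sphere lies entirely behind the ray origin
    return tuple(o + t * x for o, x in zip(origin, d))
```

When the sphere is centered on the ray origin, the result reduces to the origin plus the radius times the unit direction, which matches the picture of a brush with a fixed length.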

Several parameters are adjustable while drawing. The first is the drawing distance, which is controlled by the direction buttons on the left-hand controller. The second is the width of the strokes, which is controlled by the direction buttons on the right-hand controller.

For a drawing app, being able to change color is important. For this part, we borrowed a color picker board from the internet, and the left-hand controller is used to adjust its two sliders. The two sliders indicate hue and saturation & luminance, which are combined to form a specific color.
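As an illustration of how hue, saturation, and luminance combine into one color, the sketch below converts the three values to RGB with Python's standard `colorsys` module. It is not the borrowed color picker's code. How the second slider maps to saturation and luminance is not specified here, so the sketch simply takes them as separate inputs; the function name `picker_color` is hypothetical.

```python
import colorsys

def picker_color(hue, saturation, luminance):
    """Convert hue, saturation and luminance (each in 0..1) to an RGB tuple."""
    hue = hue % 1.0  # hue is cyclic, so wrap instead of clamping
    saturation = min(max(saturation, 0.0), 1.0)
    luminance = min(max(luminance, 0.0), 1.0)
    # Note the argument order: colorsys uses H, L, S.
    return colorsys.hls_to_rgb(hue, luminance, saturation)
```

With full saturation and luminance 0.5, a hue of 0 gives pure red. With saturation 0, the result is a gray whose brightness equals the luminance.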

The last step is to integrate our drawing panel into an environment. This step is straightforward: we found some free environment templates on the internet and load them together with the drawing system. The user can also change the template, choosing either one of the templates we provide or their own.

Implementation Details

For development, the hardware we used is the HTC Vive VR system, which includes the headset, two controllers, and two laser-tracking base stations. The software we used is Unity, with C# as the scripting language and SteamVR as the library. These were all completely new to us, so it took considerable time to learn how they work. The computing environment is a personal computer running Windows 10. For the physical environment, we removed the mattress and used Lily’s bedroom as the testing area, lit by a floor lamp.

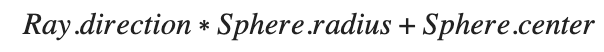

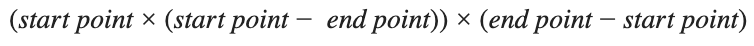

To generate new lines in the world, we need to detect the intersection of the ray shooting out from the controller with the current “transparent sphere canvas”. At first, we weren’t able to detect the intersection because Unity’s built-in sphere has outward-facing normals. We therefore created an inverted sphere in Blender and imported it into Unity as our sphere canvas. To detect the intersection, we used the ray-sphere intersection formula:

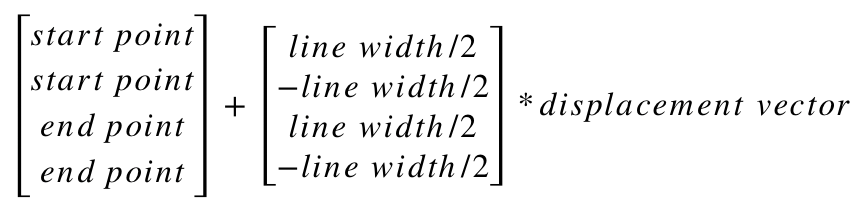
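For reference, the textbook form of the ray-sphere intersection can be written out as below. The notation may differ from the figure: the symbols are labels chosen here, with $\mathbf{o}$ the ray origin, $\mathbf{d}$ the unit ray direction, $\mathbf{c}$ the sphere center, $r$ the radius, and $t$ the distance along the ray.

```latex
\[
\lVert \mathbf{o} + t\,\mathbf{d} - \mathbf{c} \rVert^{2} = r^{2}
\quad\Longrightarrow\quad
t = -\,\mathbf{d}\cdot(\mathbf{o}-\mathbf{c})
\pm \sqrt{\bigl(\mathbf{d}\cdot(\mathbf{o}-\mathbf{c})\bigr)^{2}
- \lVert \mathbf{o}-\mathbf{c} \rVert^{2} + r^{2}}
\]
```

A negative value under the square root means the ray misses the sphere. When the ray starts inside the sphere, the root with the plus sign gives the point on the canvas.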

For the software, we used the Unity package and System.Collections.Generic to generate the line. With the formula

we get the displacement vector. We then use the displacement vector in a matrix calculation to get the four coordinates of the new mesh quad:

Discussion of Benefits and Limitations

The key benefit of our drawing app is that the user can draw true, full-range 3D figures without walking around, a feature absent from most existing VR 3D drawing apps. One limitation is that the app supports only specific hardware, namely the HTC Vive. Other limitations are discussed in the Future Work section.

Future Work

Several aspects of the app could be improved in the future. First, the color picker board in this version is at a fixed position and occupies a region where the user cannot draw conveniently. The user can still draw in that region, but a stroke placed behind the color picker is hard to see. In the future, we might build the color picker as a pop-up window that the user can open or close as they wish. In addition, the stroke type is currently fixed; we didn’t have time to implement stroke shapes or textures other than the current one. In the future, this could become a changeable feature.

Conclusion

By implementing this app, we provide a new choice of indoor entertainment during this special period. The app addresses limitations common to current VR drawing systems and could be extended to other uses.

Acknowledgments

Special thanks to the entire CSE 490 V team at the Allen School, University of Washington, who supported this class and provided this great learning opportunity. We also thank the UW Reality Lab, which provided the hardware we needed.
