Philosophy of AI

Administrative

Autumn 2022

Time: Wednesdays and Fridays from 1:30 to 3:20pm

Location: DEN 113 (or sometimes online)

Instructor: Jared Moore

Email: jlcmoore@cs.washington.edu

Office hours: Wednesdays 4:30 - 5 (show up by five or email me so I’ll stay later) CSE 216

Please do not hesitate to write to me about any accommodations or questions related to readings or course material.

Description

What does it mean to think? How are computers different from people? How are they the same? This is a seminar class about asking deep questions about intelligence and exploring their far-reaching consequences. Through daily readings, discussions, and a course project, students will survey the history of approaches in artificial intelligence as well as related disciplines like neuroscience, philosophy of mind, and psychology. We will cover concepts such as alignment, connectionism, consciousness, causation, generalizability, information, learning, and symbolism.

Objectives

By the end of this course students will:

- be able to articulate various assumptions and ideas behind AI,

- practice historically and culturally contextualizing those positions,

- consider what progress in AI means,

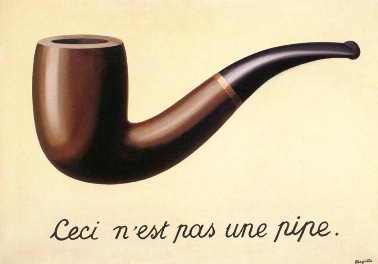

- recognize the gap between theory and actuality of AI,

- challenge their own and others’ assumptions,

- be prepared to interrogate novel claims about intelligence,

- feel more confident being a part of an intellectual community,

- and have fun.

Schedule

(may change up to a week in advance)

Symbolic vs. Connectionist

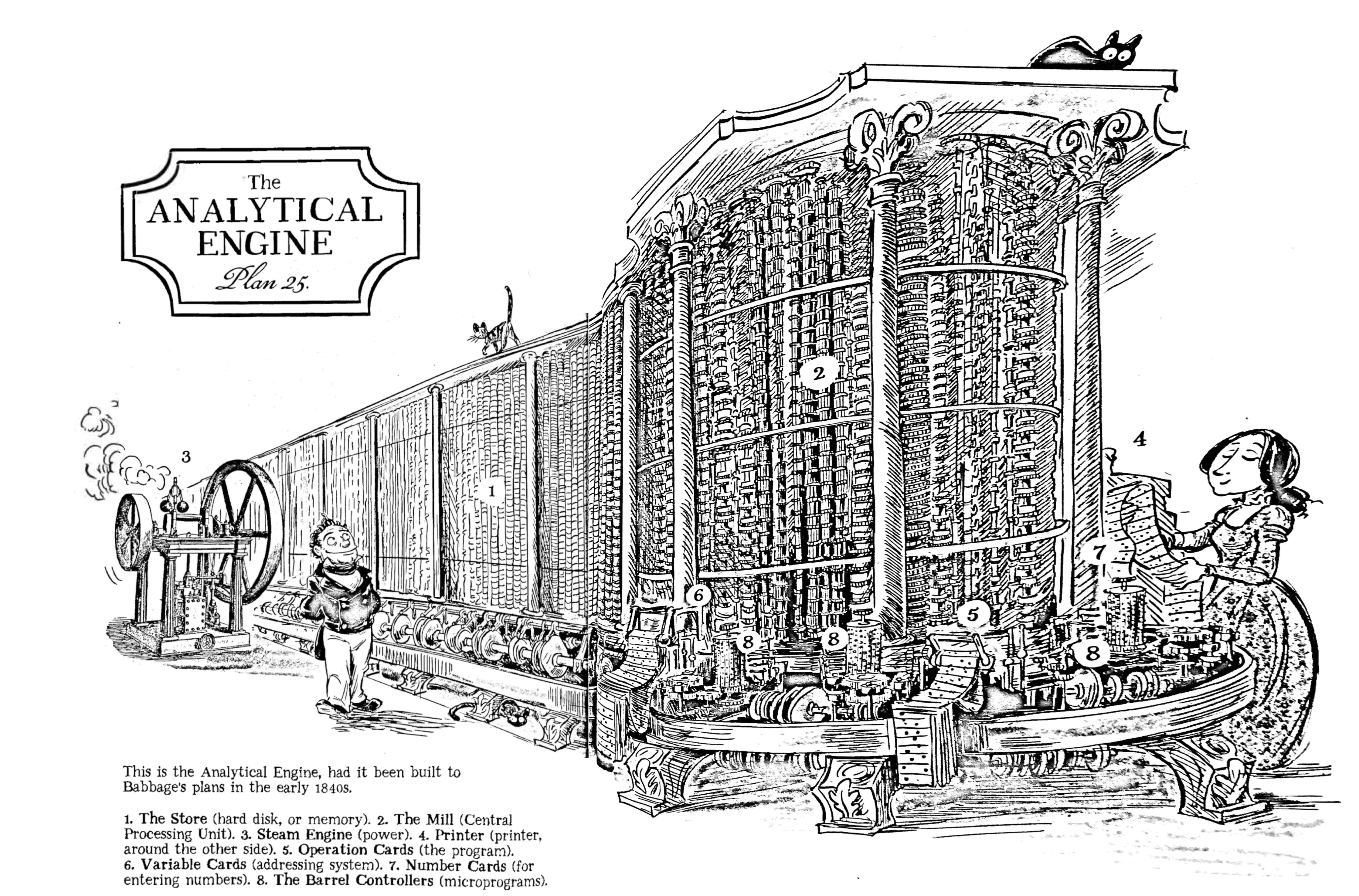

Wed, Sep 28 History

Required:

-

Read chapters 1-2 (45 pages) from "Artificial intelligence: a guide for thinking humans" [pdf] by Melanie Mitchell, 2019.

Mitchell, an expert on genetic programming and former student of Doug Hofstadter, approachably covers a history of symbolic and connectionist AI, describing, to the extent we will need, the technical differences between approaches over the years.

-

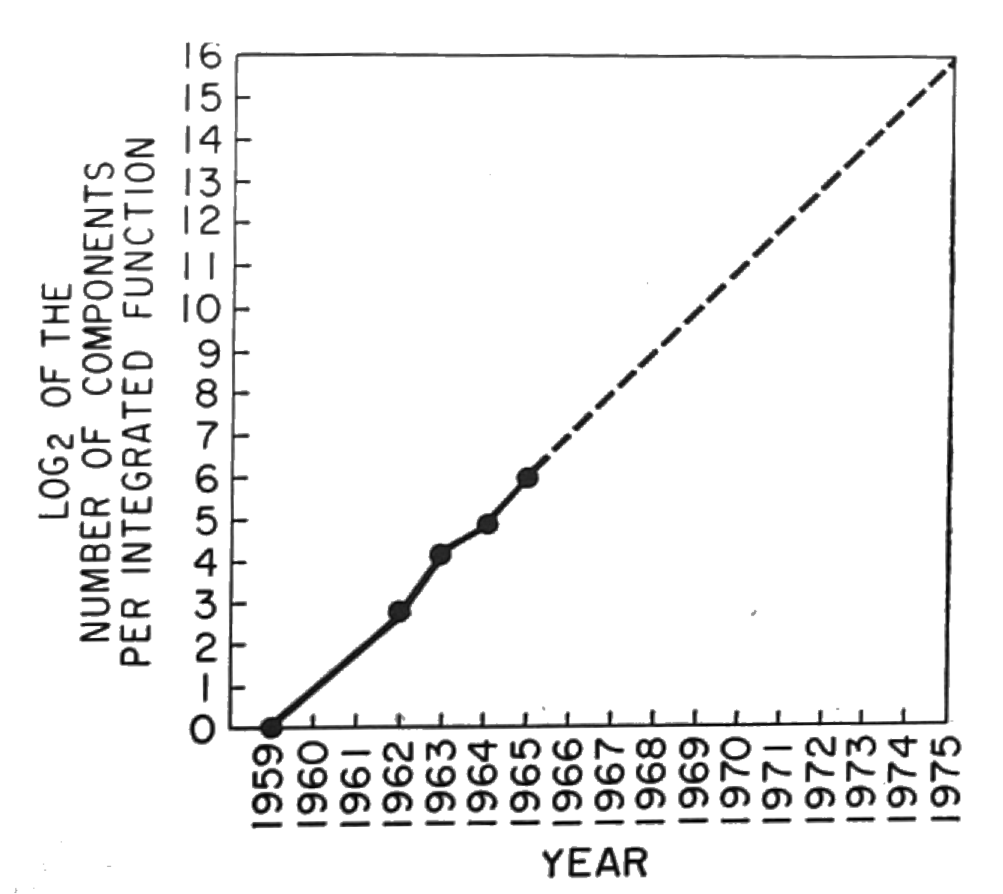

Read "A Golden Decade of Deep Learning: Computing Systems & Applications" [pdf] [url] by Jeffrey A. Dean, 2022 (17 pages).

Dean, head of Google AI, trumpets the successes of deep neural networks (deep learning) with an eye toward their potential. Focus on the sense and the complexity of the field Dean imparts; don’t worry about the technicalities (especially in “Application explosion” and “Future of machine learning”).

Optional:

-

Watch "What Is Artificial Intelligence? Crash Course AI #1" [url] by Eric Prestemon et al., 2019.

This and the videos in its series provide a gentle introduction to some ideas behind AI.

-

Watch "But what is a neural network? | Chapter 1, Deep learning" [url] by Grant Sanderson, 2017.

In this series Sanderson introduces us to the math behind neural networks and modern deep learning.

-

Read "Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World" [url], 2021.

A solid recent history on the personalities behind deep learning.

-

Read the introduction from "Patterns, predictions, and actions: A story about machine learning" [pdf] [url] by Moritz Hardt et al., 2021 (309 pages).

This textbook provides an expansive and measured view of machine learning. It’s strongly recommended for those who like a dose of reality when learning technicalities.

-

Read the introduction from "What computers can't do; a critique of artificial reason" [pdf] [url] by Hubert L. Dreyfus, 1972 (314 pages).

Dreyfus, in his seminal critique, here provides a good early history of the field, specifically the era which is now called Good Old-Fashioned AI (GOFAI).

-

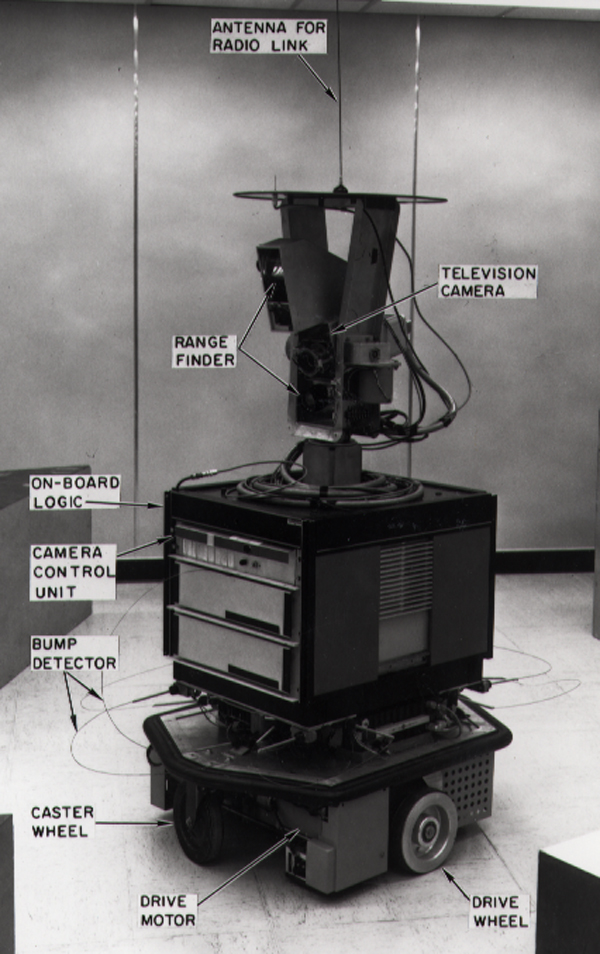

Read "Computer science as empirical inquiry: Symbols and search" [pdf] by Allen Newell et al., 1976 (14 pages).

In their Turing Award lecture, Herbert Simon and his then-student Allen Newell deliver the flavor of the symbolic approach in the sense of the General Problem Solver.

-

Read "Cognitive Wheels: The Frame Problem of AI" [pdf] by Daniel C. Dennett, 1998 (27 pages).

Dennett delivers a cheeky rebuke of the symbolic approach.

Optional Creative Pieces:

-

Check out "Erewhon: or, Over the range." [url] by Samuel Butler, 1872 (320 pages).

Butler extends and clashes with the evolutionary ideas of his contemporary, Darwin, musing, in this fictional colonial account, on machine understanding.

Origins

Fri, Sep 30 History

Required:

-

Read chapter four from "From bacteria to Bach and back: the evolution of minds" [pdf] by D. C. Dennett, 2017 (23 pages).

Dennett, the august philosopher, examines mindless molecules and machines as a means to mine meaning. We read him here to understand the role of AI tools as analogies of thought.

-

Read "Computing Machinery and Intelligence" [pdf] by Alan Turing, 1950 (27 pages).

Alan Turing, by this time already well known for the theoretical work that led to the Church-Turing thesis and to von Neumann’s implementation of computers, here describes the now-famous imitation game, a mainstay of many functionalist arguments.

-

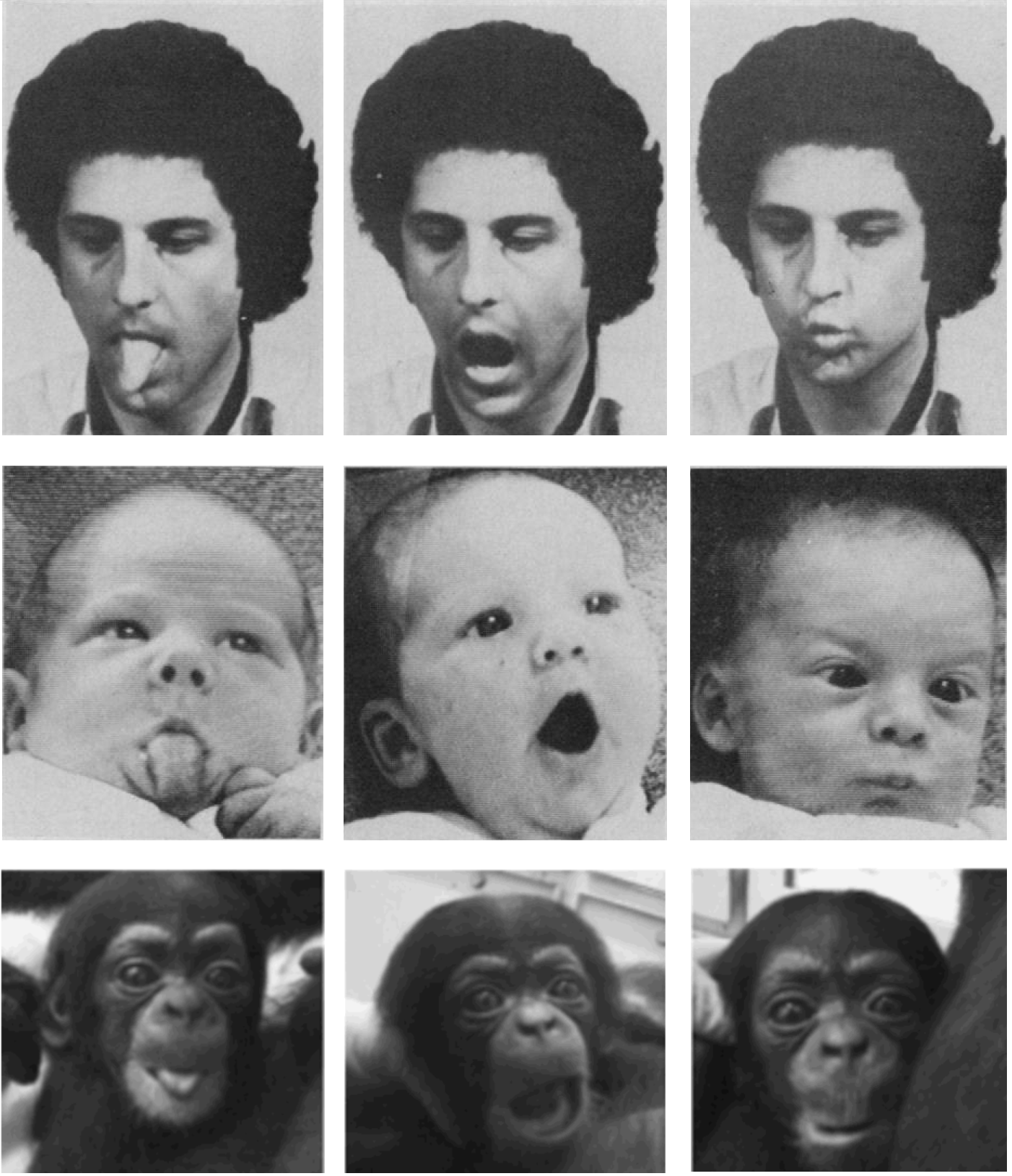

Read the introduction (3 pages) from "A logical calculus of the ideas immanent in nervous activity" [pdf] by Warren S. McCulloch et al., 1988.

The surgeon Warren McCulloch and his protege the logician Walter Pitts here offer an (incorrect) logical description of a neuron’s operation, a fork in the road to the fields of computational neuroscience and artificial intelligence.

Optional:

-

Read "Philosophical foundations" [pdf] [url] by Konstantine Arkoudas et al., 2014 (29 pages).

Introducing much of the material we will cover in this course, Arkoudas and Bringsjord survey the history of AI in light of the philosophical issues that have arisen, many of which we will address throughout class.

-

Read "A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence" [pdf] by John McCarthy et al., 2006 (18 pages).

The proposal that started it all, or at least first popularized the term artificial intelligence. They thought a significant proportion of the work toward human-like understanding could be done in a summer.

-

Read "The Dartmouth College Artificial Intelligence Conference: The Next Fifty Years" [pdf] by James Moor, 2006 (5 pages).

A follow-up on the Dartmouth conference, fifty years on. This time, their projections were a bit less rosy.

-

Read chapters 9-12 from "I Am a Strange Loop" [url] by Douglas R. Hofstadter, 2007.

A topic we will not cover is Gödel’s incompleteness theorems and their relevance to the determinacy of mind. For those interested, Hofstadter is the place to start.

Optional Creative Pieces:

-

Check out "Spinoza" [url] by Berthold Auerbach, 1882.

The Jewish Golem myth holds that an anthropomorphic mud figure may be animated by inscribing on its head certain characters. Auerbach is said to be one of the originators of the myth in its modern, Prague narrative.

-

Check out "The Honorable Historie of Frier Bacon and Frier Bongay" [url] by Robert Greene, 1905.

Turn here for another classic rendition of the conjuring trick as told in the mythos of the Brazen Head, a metallic automaton.

-

Check out "The Funniest Joke in the World" [url], 1969.

Watch this Monty Python sketch for the humor. Stay for the commentary on self-reference.

-

Check out "The Riddle of the Universe and Its Solution" [pdf] by Christopher Cherniak, 1981 (15 pages).

Follow Cherniak for a dry and delightful unfurling of the implications of Gödelian incompleteness.

How to perceive things

Wed, Oct 05 Sensing

Required:

-

Read chapter five from "From bacteria to Bach and back: the evolution of minds" [pdf] by D. C. Dennett, 2017 (26 pages).

Dennett returns to describe how organisms track affordances (the perceived ways to interact) in nature, using simple parts to respond usefully to the world.

-

Read chapter one from "Beyond concepts: unicepts, language, and natural information" [pdf] by Ruth Garrett Millikan, 2017 (13 pages).

Millikan, who follows after her advisor Wilfrid Sellars and works on biosemantics (akin to biosemiotics), describes what the world needs to be like in order for it to be known. Millikan requires a lot of patience. She introduces ideas much later than when she first mentions them and does not shy away from subtlety. Try anyway; she’s worth it.

Optional:

-

Read "Basic objects in natural categories" [pdf] by Eleanor Rosch et al., 1976 (57 pages).

In this classic paper, Rosch, the esteemed psychologist, details a number of experiments which demonstrate how the highly correlated nature of the real world yields certain basic objects as the most useful level of categorization.

Optional Creative Pieces:

-

Check out "Alice's Adventures in Wonderland" [url] by Lewis Carroll, 1865 (192 pages).

Follow Alice down the rabbit hole and away from the logic that is supposed to govern the world as the ways that she (and the narrator) categorize the world run wild. Alice’s imagination may not be so far from each of our own.

Information

Fri, Oct 07 Sensing

Required:

-

Read chapter three from "Beyond concepts: unicepts, language, and natural information" [pdf] by Ruth Garrett Millikan, 2017 (11 pages).

Millikan, whose overall thesis is that there are no such things as concepts but rather organisms each have their own unique ways of “same-tracking” states of affairs, here describes how organism-internal representations can serve to make use of information from the world.

-

Read chapter six from "From bacteria to Bach and back: the evolution of minds" [pdf] by D. C. Dennett, 2017 (32 pages).

Here Dennett expands on information, specifically semantic information: information that matters. This is not the same as abstract Shannon information, and it matters very much for some things in the world.

-

Read the introduction (3 pages) from "Psychology, briefer course" [pdf] by William James, 1988.

William James, the father of American psychology, gives an associationist account of learning (like the rule after Donald Hebb: fire together, wire together), a psychological approach to answering the questions of class today.

Optional:

-

Read chapter twelve from "Incomplete Nature" [pdf] by Terrence Deacon, 2012 (21 pages).

Deacon, a biosemiotician, which means he studies the nature of signs in living systems in the way of Charles Sanders Peirce or Thure von Uexküll, approaches two different ways of looking at information: abstractly (like Shannon) and thermodynamically (like Gibbs). It’s an important distinction to understand.

-

Read chapter eleven from "Beyond concepts: unicepts, language, and natural information" [pdf] by Ruth Garrett Millikan, 2017 (15 pages).

Millikan extends her inquiry to what she calls infosigns: non-accidentally-correlated states of affairs, but, in general, the unique-to-an-individual categories useful to agents in the world.

-

Read "Visual Information Theory" [url] by Chris Olah, 2015 (18 pages).

Olah, a co-founder at Anthropic and a force in AGI safety, offers a very understandable mathematical introduction to information theory.

-

Read "A mathematical theory of communication" [pdf] by C. E. Shannon, 1948 (44 pages).

Here is Shannon’s classic, but less understandable, introduction to information theory. Allegedly, von Neumann told Shannon to name the central tenet of information theory entropy, telling Shannon, “no one knows what entropy really is, so in a debate you will always have the advantage” (as quoted in Leff, Maxwell’s Demon, Entropy, Information, Computing, 1990).

Optional Creative Pieces:

-

Check out "Crystal Nights" [url] by Greg Egan, 2008.

Consider this story by the great Greg Egan, who has long been honing his craft on genetically-inspired AI evolving in its own digital world. After all, “Human-crafted software was brittle and inflexible; his Phites had been forged in the heat of change.”

-

Check out "Flatland" [url] by Edwin Abbott Abbott, 1884 (147 pages).

Abbott’s classic offers a different, and much more satirical, take on mathematical (informational) worlds, one which, in his case, still carried much human meaning.

Predictive perception

Wed, Oct 12 Sensing

Required:

-

Read chapter eleven from "Odd perceptions" [pdf] by R. L. Gregory, 1986 (13 pages).

The delightful Bayesian cognitive psychologist, Richard Gregory, here describes some of the most troubling data for those who contend the world is as perceived: illusions.

-

Read chapter four from "Being you: a new science of consciousness" [pdf] by Anil K. Seth, 2021 (22 pages).

Anil Seth, in his approachable way, details how a commonsense view of psychology fails in light of various psychological findings. Conscious experience, in his view, is better termed as a “controlled hallucination.”

-

Read everything but "Goals and Motivations" and "Subjective Experience" (462-468, 476; 9 pages total) from "The unbearable automaticity of being" [pdf] by John A. Bargh et al., 1999.

In this classic paper, social psychologists Bargh and Chartrand focus on the unconscious influences of what they contend is our largely automatic behavior. Skim the experiments.

-

Read the introduction (3 pages) from "Concerning the Perceptions in General" [pdf] by Hermann von Helmholtz, 2001.

Helmholtz, famous for his 19th century ground-breaking work on vision at a much more mechanistic level, describes the ways in which organisms construct their perception from experience, as opposed to being given it innately.

Optional:

-

Read "The man who mistook his wife for a hat" [pdf] by Oliver Sacks, 1998 (15 pages).

The neurologist Oliver Sacks, called at one point “the poet laureate of contemporary medicine,” describes here one of his best-known case reports: that of his visually agnosic patient, Dr. P. Being able to name the objects you see is just one of the constellation of cognitive tools that most of us humans take for granted.

-

Read "The mind of a mnemonist: a little book about a vast memory" [url] by A. R. Luria, 1987 (160 pages).

The great Soviet psychologist Alexander Luria here delivers an extended case report (in a style that Sacks sought to emulate) of his patient, S., a mnemonist.

Optional Creative Pieces:

-

Check out "The diving bell and the butterfly" [url] by Jean-Dominique Bauby et al., 1998 (131 pages).

In one of the most touching and heart-wrenching memoirs ever written, Bauby blinks out, letter by letter, the beauty and torture of the world when locked inside one’s own body.

-

Check out "Funes, the Memorious" [pdf] [url] by Jorge Luis Borges, 1962 (9 pages).

The Argentinian master, Jorge Luis Borges, paints the painstakingly full memory of a mnemonist, Funes, in a way very similar to Luria’s patient, S.

-

Check out "Marjorie Prime" [url] by Michael Almereyda et al., 2017.

Almereyda’s film, adapted from Jordan Harrison’s play, offers an ambivalent portrayal of artificial intelligence in the incompleteness of its experience, but a portrayal that is nonetheless decidedly natural, as the burgeoning inconsistencies of the human characters quickly make clear.

How to know things

Fri, Oct 14 Knowing

Required:

-

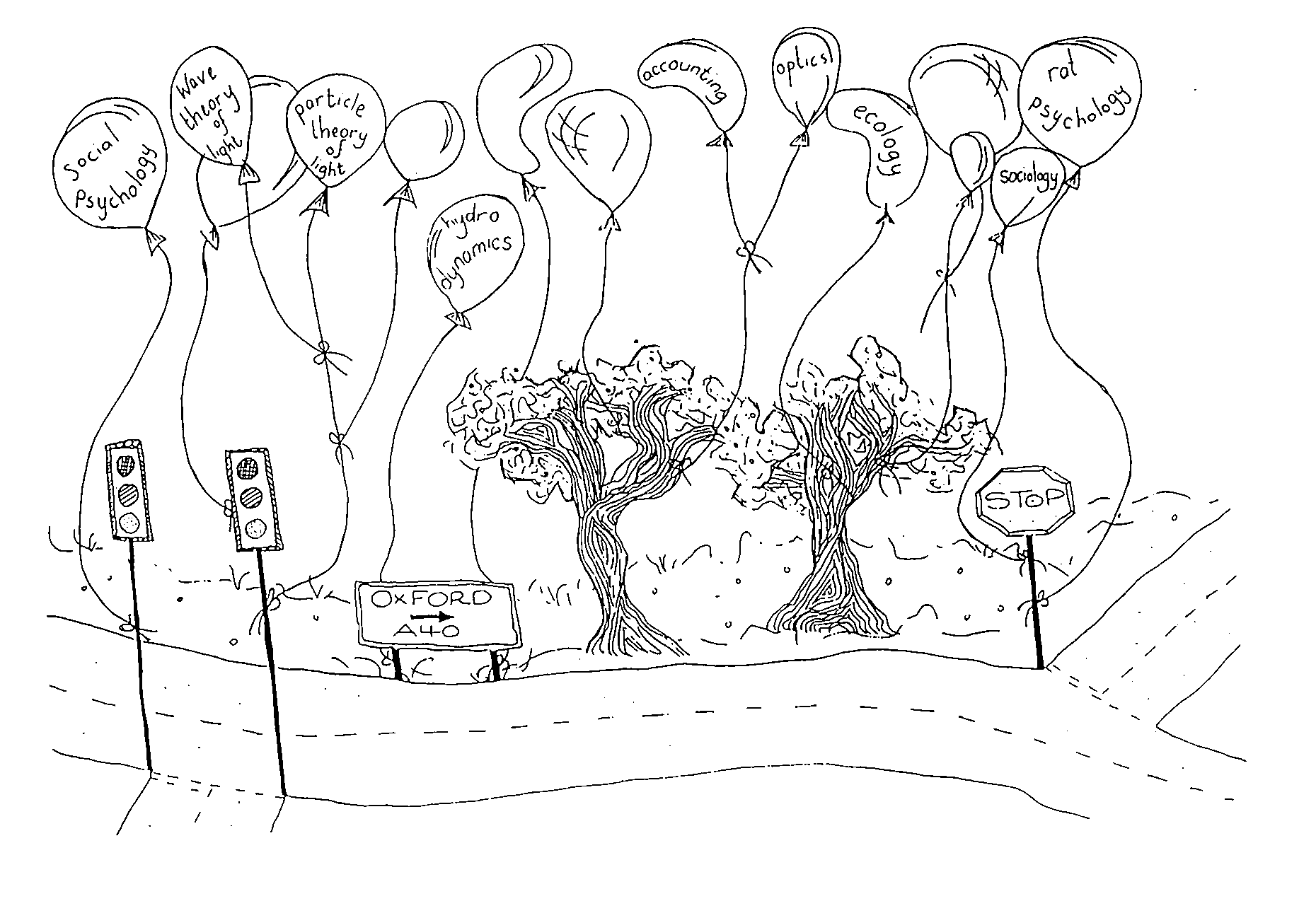

Read "Logic of discovery or psychology of research?" [pdf] by Thomas S. Kuhn, 1977 (27 pages).

Thomas Kuhn, although slightly less well known than his contemporary philosopher of science, Karl Popper, himself known for “falsification,” originated the term “paradigm shift” in his seminal work The Structure of Scientific Revolutions which described how mutually-irreducible scientific fields work in their own paradigm under “normal science” but occasionally join (or split) in revolutions. Kuhn further contends that no science—no way of knowing the world—can be free from such subjectivity.

-

Read the introduction through page 17 (17 pages) from "The Book of Why: the new science of cause and effect" [pdf] by Judea Pearl et al., 2018.

In his popular book, Judea Pearl, the Turing Award winner and father of modern Bayes nets, describes the motivation behind seeking causative, as opposed to mere correlative, information. It’s a task that he contends is necessary for any strong AI.

-

Read the introduction (4 pages) from "Actual causation and the art of modeling" [pdf] [url] by Joseph Y. Halpern et al., 2011.

In their readable and example-filled work, Halpern and Hitchcock convey the sense of causal modeling and its model-dependent subjectivity. Read on to get a sense of the mathematics.

Optional:

-

Read "Ducks, Rabbits, and Normal Science: Recasting the Kuhn's-eye View of Popper's Demarcation of Science" [pdf] [url] by Deborah G. Mayo, 1996 (19 pages).

Mayo reads between the philosophies of Popper and Kuhn, offering clarification.

-

Read "The Art and Science of Cause and Effect" [pdf] by Judea Pearl, 2000 (28 pages).

This is the conclusion to Pearl’s textbook, Causality, and gives a gentle introduction to the historical reasons behind a focus on causation.

-

Read "The structure of scientific revolutions." [url] by Thomas S. Kuhn, 1967 (172 pages).

Kuhn’s classic text.

-

Read "A Causal Theory of Knowing" [pdf] [url] by Alvin I. Goldman, 1967 (15 pages).

This is a classic work which lays out conditions in which analysis of causality graphically makes sense.

Optional Creative Pieces:

-

Check out "Arcadia: A play in two acts" [url] by Tom Stoppard, 1993.

In his pastoral and far-ranging Arcadia, Stoppard offers one of the sharpest portrayals of modern science.

How knowing errs

Wed, Oct 19 Knowing

Required:

-

Read the introduction from "The Dappled World" [pdf] by Nancy Cartwright, 1999 (18 pages).

Cartwright asks us to look at the closure, at the extent of applicability, of our theories, contending that those theories don’t explain as much of our world as our attitudes towards those theories would suggest. In other words: physics is limited—objects in the world are not points in space (point masses) as idealized equations require.

-

Read through page 382 (30 pages) from "The looping effects of human kinds" [pdf] by Ian Hacking, 1995.

Ian Hacking, in a characteristically high-minded manner, rigorously defends the notion that certain kinds (arrangements of things in the world) are slippery—they change as we investigate them. Think of self-fulfilling prophecies: Did you not do the reading because I said you wouldn’t or did I say you wouldn’t because you would not do the reading? Note that this reading has much to say about the unhappy things that people do to each other and to themselves.

Optional:

Optional Creative Pieces:

-

Check out "Candide" [url] by Voltaire, 1759.

Voltaire, the Enlightenment philosopher, wrote this satire in response to the polymath Leibniz’s contention that ours is the “best of all possible worlds.” Read it to get a sense of the history of what scientific optimism leaves out.

Bottom-up

Fri, Oct 21 Levels of reduction

Required:

-

Read chapter one from "The Computational Brain" [pdf] [url] by Patricia S. Churchland et al., 2016 (16 pages).

Neuro-philosopher Pat Churchland and computational neuroscientist Terrence Sejnowski, in their textbook on the subject, introduce us to the computational view of mind: an attempt to reduce thinking to its (neural) parts.

-

Read the introduction (2 pages) from "Vision" [pdf] by David Marr, 1988.

The late neuroscientist David Marr had an outsize role in shaping vision science and neuroscience, and here explains the multiple levels at which we might view the brain and, by extension, any understanding system.

-

Read "Zoom In: An Introduction to Circuits" [url] by Chris Olah et al., 2020 (15 pages).

Olah and colleagues, then all at OpenAI, offer a different perspective on reduction: analyzing the component parts of artificial neural networks in the spirit of computational neuroscience.

Optional:

-

Read "Neuroscience-Inspired Artificial Intelligence" [pdf] [url] by Demis Hassabis et al., 2017 (13 pages).

Hassabis, co-founder of DeepMind, and colleagues describe the recent union of technical approaches in computational neuroscience and artificial intelligence, fields that since the time of McCulloch and Pitts had been in many ways quite separate.

-

Read "Toward an Integration of Deep Learning and Neuroscience" [pdf] [url] by Adam H. Marblestone et al., 2016 (41 pages).

In much more technical detail than Hassabis et al., Marblestone et al. describe the connections of these two fields: e.g. path-finding (hippocampus), working memory (prefrontal cortex), gated relays (thalamus), multi-timescale reductive feedback (cerebellum), and reinforcement learning (basal ganglia).

Optional Creative Pieces:

-

Check out "Book XI; Chapter IV: A Hymn And A Secret" [url] by Fyodor Dostoyevsky, 1880.

In this section of The Brothers Karamazov, Dostoyevsky, who was much concerned about current science, muses on the implications of the neurons discovered by Golgi and his method: “these nerves are there in the brain … there are sort of little tails, the little tails of those nerves, … That’s why I see and then think, because of those tails, not at all because I’ve got a soul.”

-

Check out "Non Serviam" [pdf] by Stanislaw Lem, 1981 (25 pages).

Stanisław Lem, the great Polish science fiction author, strikes home with this story of evolution and digital worlds, questioning, at a quite basic level, the parts that are necessary to sustain life.

Top-down

Wed, Oct 26 Levels of reduction

Required:

-

Read chapter three from "I Am a Strange Loop" [pdf] [url] by Douglas R. Hofstadter, 2007 (14 pages).

Hofstadter, at his typical relaxed pace and with ho-hum examples, conveys one of the most confusing inversions in nature: downward causality, or how it is that we can do such things as deciding in a world governed by particle physics.

-

Read chapter seven from "The hidden spring: a journey to the source of consciousness" [pdf] by Mark Solms, 2021 (30 pages).

Mark Solms, a neuropsychologist and psychoanalyst, offers an approachable introduction to Friston’s free energy principle, a mathematical way of describing top-down causation, what is sometimes called emergence.

-

Read "The mathematics of mind-time" [url] by Karl Friston, 2017 (6 pages).

The neuroscientist Karl Friston, the enigmatic force behind fMRI and the free energy principle, in an uncharacteristically un-mathematical way, describes the relationship between his free energy principle and active inference: his term for how ends-driven reasons appear in mechanically reducible systems.

Optional:

-

Read "Why is Life the Way it Is?" [pdf] [url] by Nick Lane, 2019 (8 pages).

The biochemist Nick Lane accessibly describes the centrality of regulating energy expenditure in understanding the perpetually-away-from-equilibrium nature of life.

-

Read chapter nine from "Incomplete Nature" [pdf] by Terrence Deacon, 2012 (24 pages).

In his chapter on teleodynamics, Deacon turns to describe the ends-driven (telos) organization (dynamic) of life at quite a mechanical level. Living systems are self-propelling, like whirlpools that can seek out the conditions for their own perpetuation.

-

Read (don't worry about the math) "Life as we know it" [pdf] [url] by Karl Friston, 2013 (12 pages).

Friston here mathematically describes how larger-scale organization influences smaller-scale properties—how life works.

Optional Creative Pieces:

-

Check out "The last question" [url] by Isaac Asimov, 1956 (10 pages).

In a nod to the thermodynamic constraints which govern all life—and thought—the great Isaac Asimov plays with the levels of description we wish we could have.

-

Check out "They're All Made Out of Meat" [pdf] [url] by Terry Bisson, 1991 (2 pages).

Bisson, in this delightful short story, contorts our incredulity toward artificial thought and leaves us with the question: why is it that we think at all?

Theories of you

Fri, Oct 28 Consciousness

Required:

-

Read chapters one and two (38 pages) from "Consciousness and the social brain" [pdf] by Michael Graziano, 2013.

Princeton psychologist Michael Graziano gently introduces his functional theory of consciousness, the Attention Schema Theory, which is similar to the Global Neuronal Workspace Theory (GNWT), Higher Order Thought (HOT), and illusionist theories.

-

Read chapter one from "Being you: a new science of consciousness" [pdf] by Anil K. Seth, 2021 (22 pages).

Neuroscientist Anil Seth introduces the troubling distinctions necessary for a theory of consciousness, familiarizing us with the necessary terms such as panpsychism, functionalism, zombies, information processing and more.

-

Read the postscript from "The hidden spring: a journey to the source of consciousness" [pdf] by Mark Solms, 2021 (6 pages).

Mark Solms returns to thread the needle through recent contributions to the science of consciousness with an eye toward affective (feeling-centered) theories.

Optional:

-

Read "Toward a standard model of consciousness: Reconciling the attention schema, global workspace, higher-order thought, and illusionist theories" [pdf] [url] by Michael S. A. Graziano et al., 2020 (17 pages).

In a more scholarly vein, Graziano attempts to unite the similarities between a swath of functionalist theories of consciousness.

-

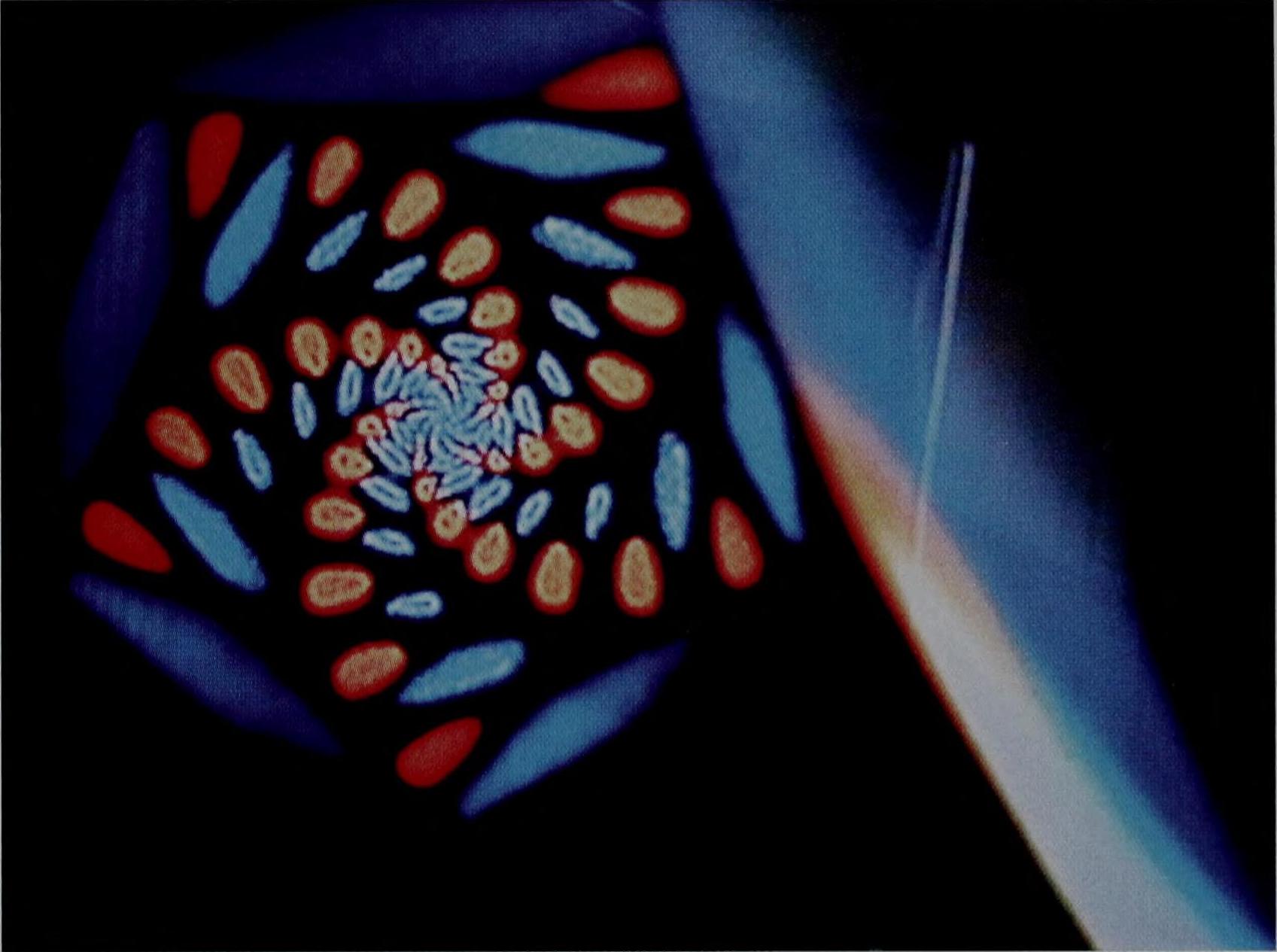

Read "The entropic brain - revisited" [pdf] [url] by Robin L. Carhart-Harris, 2018 (11 pages).

Carhart-Harris, formerly of UCL but now at UCSF, pumps our intuition about consciousness with causal-organization-increasing theories about the effects of serotonergic psychedelics.

-

Read "Consciousness without a cerebral cortex: A challenge for neuroscience and medicine" [pdf] [url] by Bjorn Merker, 2007 (18 pages).

In this fascinating study, Merker challenges our assumptions about which brain areas are necessary to sustain conscious, or at least affective, experience.

-

Read chapter two from "Being you: a new science of consciousness" [pdf] by Anil K. Seth, 2021 (27 pages).

Seth, assuming that consciousness is a material quantity, asks how it is that we can measure it—just like we do for mass or volume.

-

Read chapter three from "Being you: a new science of consciousness" [pdf] by Anil K. Seth, 2021 (15 pages).

Seth goes on to describe one important theory of consciousness: integrated information theory, popularized by Tononi and Koch.

Optional Creative Pieces:

-

Check out "Borges and I" [pdf] by Jorge Luis Borges, 1981 (15 pages).

The masterful Borges subverts the usual literary frame to question who it is that writes his stories and, by extension, who it is that writes the stories of our minds.

-

Check out "Fiction" [pdf] by Robert Nozick, 1981 (4 pages).

In a more American literary bent, Nozick bucks the convention of speaker identity and of identity writ large.

-

Check out "Mind-Body Problem" [url] by Rebecca Goldstein, 1985.

Goldstein, a former student of Nagel, clues us into how the scientific mind-body problem can come to be personal.

Hard questions, hard problems

Wed, Nov 02 Consciousness

Required:

-

Read "Facing up to the Problem of Consciousness" [pdf] by David J Chalmers, 1995 (20 pages).

We consider the enigmatic classic by Chalmers (himself a former student of Hofstadter), one that postulated a difficulty in the study of consciousness that may well have been illusory.

-

Read "What Is It Like to Be a Bat?" [pdf] [url] by Thomas Nagel, 1974 (15 pages).

Thomas Nagel presents a critique of materialism fit for the ages, homing in on the question of how to arrive at subjectivity from a science of objectivity.

-

Read chapter fourteen from "From bacteria to Bach and back: the evolution of minds" [pdf] by D. C. Dennett, 2017 (36 pages).

In contrast to Chalmers and Nagel, Dennett arrives at his reductive, functionalist account of consciousness, one built up from many smaller intellectual tools.

Optional:

-

Read "Facing up to the hard question of consciousness" [pdf] [url] by Daniel C. Dennett, 2018 (7 pages).

Dennett more directly addresses Chalmers’ hard problem, simply dissolving it into an ill-posed question.

-

Read chapter thirteen from "I Am a Strange Loop" [pdf] [url] by Douglas R. Hofstadter, 2007 (15 pages).

Hofstadter arrives at his roundabout description of conscious systems: strange loops.

-

Read "Beyond the Doubting of a Shadow" [pdf] [url] by Roger Penrose, 1996 (40 pages).

The venerated mathematician Roger Penrose defends his argument that consciousness arises through the quantum randomness in neural microtubules, an argument related to earlier considerations of Gödelian incompleteness.

-

Read "Gaps in Penrose's Toilings" [pdf] by Rick Grush et al., 1995 (32 pages).

Pick up Grush and Churchland for a scathing rebuke of those who argue for the role of quantum randomness in consciousness.

Optional Creative Pieces:

-

Check out "The Revelations" [url] by Erik Hoel, 2021.

In Hoel’s The Revelations, or a “portrait of the artist as a young neural network,” witness the struggle between not just normal and revolutionary sciences but also consciousnesses.

Sociality

Fri, Nov 04 Collaboration

Required:

-

Read chapter one from "Conscience: the origins of moral intuition" [pdf] by Patricia Smith Churchland, 2019 (25 pages).

Patricia Churchland cuts straight to mechanism in her evolutionary argument for the adaptive value of socially-attuned brains.

-

Read chapter two from "Becoming human: A theory of ontogeny" [pdf] by Michael Tomasello, 2019 (33 pages).

Michael Tomasello, expert in both cross-species developmental psychology and usage-based linguistics, synthesizes the evolutionary origins of sociality as rooted in capacities he describes as intentionality.

Optional:

-

Read chapter six from "Baboon metaphysics: The evolution of a social mind" [pdf] [url] by Dorothy L. Cheney et al., 2008 (30 pages).

The dynamic primatologists Cheney and Seyfarth, in their study on baboons, detail what primate life is like, and with it what social life is like.

-

Read "Mothers and Others" [pdf] [url] by Sarah Blaffer Hrdy, 2009 (33 pages).

The august primatologist Sarah Hrdy unveils one of the most reasonable evolutionary theories for the emergence of social behavior in humans’ most recent ancestor: shared caring.

-

Read "Learning to summarize from human feedback" [pdf] [url] by Nisan Stiennon et al., 2020 (45 pages).

From the people at OpenAI, find another good example of what a formalization of sociality looks like. Particularly look at the linked blog post.

Optional Creative Pieces:

-

Check out "Her" [url] by Spike Jonze et al., 2014.

Jonze’s film, in portraying an emotionally-intelligent conversational agent, questions the mechanistic nature of our very own feelings.

-

Check out "The Grand Inquisitor" [url] by Fyodor Dostoyevsky, 1880.

Follow this legendary section of Dostoyevsky’s novel, The Brothers Karamazov, along a different vein as basic human nature and moral decision making are challenged.

Morality

Wed, Nov 09 Collaboration

Required:

-

Read chapter two from "Conscience: the origins of moral intuition" [pdf] by Patricia Smith Churchland, 2019 (7 pages).

Churchland returns to give us the tools to understand some of the mechanisms at play in the conduction of attachment.

-

Read chapter nine from "Becoming human: A theory of ontogeny" [pdf] by Michael Tomasello, 2019 (26 pages).

Tomasello provides further cross-species evidence on the nature of norm-based (moral) behavior, rooting his findings in the development of, in his terms, collective intentionality.

-

Read chapter seven from "Conscience: the origins of moral intuition" [pdf] by Patricia Smith Churchland, 2019 (32 pages).

Using that information about how human moral behavior comes about, Churchland theorizes about the potential implementations—is logic enough?

Optional:

-

Read "Computational ethics" [pdf] [url] by Edmond Awad et al., 2022 (17 pages).

This recent review paper details computational moral reasoning with an eye toward Bayesian approaches.

-

Read "Ethical Machines" [pdf] by Irving John Good, 1982 (5 pages).

I. J. Good, an acolyte of Turing, turns his speculative attention to the question of moral behavior.

-

Read "Delphi: Towards Machine Ethics and Norms" [pdf] [url] by Liwei Jiang et al., 2021 (42 pages).

This system, out of the nearby Allen Institute for AI, purports to approach moral reasoning simply using large language models fine-tuned on Reddit text. Also see the demo.

-

Read "The Moral Machine experiment" [pdf] [url] by Edmond Awad et al., 2018 (5 pages).

Growing out of the Bayesian-minded researchers at MIT and elsewhere, this experiment set out to reduce human moral decision making in the context of autonomous driving to a set of trolley-car-like problems. Also see the demo.

-

Read "Just an Artifact: Why Machines Are Perceived as Moral Agents" [pdf] by Joanna J Bryson et al., 2011 (6 pages).

Bryson, a leader in AI ethics, explores our intuitions for how we do and ought to treat AI.

-

Read "The emotional basis of moral judgments" [pdf] [url] by Jesse Prinz, 2006 (14 pages).

Neurophilosopher Jesse Prinz makes a convincing analytical argument for the emotional basis of morals.

Optional Creative Pieces:

-

Check out "Runaround" [url] by Isaac Asimov, 1942.

This is the story in which Asimov first explores his “Three Laws of Robotics” which for so long governed conversations around machine behavior.

-

Check out "Dolly" [url] by Elizabeth Bear, 2016.

Science fiction great Elizabeth Bear gives us an updated view on what rule-based morals might look like in silicon.

Embodiment

Wed, Nov 16 Representation

Required:

-

Read "The extended mind" [pdf] by Andy Clark et al., 1998 (14 pages).

In Clark and Chalmers’ classic paper, we’re asked to consider: where does thinking stop? What physical systems are necessary? Sufficient?

-

Read "Beyond the computer metaphor: Behaviour as interaction" [pdf] by Paul Cisek, 1999 (21 pages).

The neuroscientist Paul Cisek considers whether the “computationalist” view of mind is sufficient and what role internal representations may play. What does it mean for a system to be meaningful?

-

Skim "Intelligence without representation" [pdf] [url] by Rodney A. Brooks, 1991 (20 pages).

Roboticist Rodney Brooks, in his incisive way, gives us an alternative account to the dominant symbolic or connectionist paradigms for the internal structures necessary for intelligence.

Optional:

-

Read sections five and six (5 pages) from "Intelligence without reason" [pdf] by Rodney A. Brooks, 1991.

Read these sections of Brooks’ as the literature review for his above paper—they provide background commentary and relevant alternatives.

-

Read "Every good regulator of a system must be a model of that system" [pdf] [url] by Roger C Conant et al., 1970 (8 pages).

This theorem, by the cyberneticist Ashby, establishes that to the extent that an agent regulates its environment (a system) it must form an internal model of that environment.

-

Read "Toward Robotic Manipulation" [pdf] [url] by Matthew T. Mason, 2018 (27 pages).

Read the roboticist Mason for a review of what truly embodied AI looks like at the moment—it’s still quite hard.

Optional Creative Pieces:

-

Check out "Klara and the Sun" [url] by Kazuo Ishiguro, 2021.

Ishiguro’s latest novel brings us past the ultra-rationalist conception of AI to consider the internal worlds which may emerge from feelings quite different from our own.

Internals

Fri, Nov 18 Representation

Required:

-

Read everything excluding 2.1 through the end of section 2 and excluding the subsection "Parallel computation and the issue of speed" (pg. 54) up until "Concluding comments" (pg. 64) (40 pages total) from "Connectionism and cognitive architecture: A critical analysis" [pdf] by Jerry A. Fodor et al., 1988.

In this still-relevant classic, the philosopher Jerry Fodor and cognitive scientist Zenon Pylyshyn take the symbolically-light connectionist approach to task. How could such a wishy-washy approach mirror the easy heights of human logic?

-

Read chapter six from "Rebooting AI: building artificial intelligence we can trust" [pdf] by Gary Marcus et al., 2019 (33 pages).

The viral psychologist Gary Marcus and the measured Ernie Davis delineate the failures of current AI; the obvious is what you need when the very problem is that AI models can’t spell it out.

Optional:

-

Read "The Promise of Artificial Intelligence: Reckoning and Judgment" [pdf] by Brian Cantwell Smith, 2019 (15 pages).

With a more scholarly bent, the cognitive scientist Cantwell Smith homes in on the history of AI, describing trends in light of the things (ontology) that various methods could know (epistemology).

-

Read chapter eight from "Rebooting AI: building artificial intelligence we can trust" [pdf] by Gary Marcus et al., 2019 (20 pages).

-

Read "Artificial Intelligence and the Common Sense of Animals" [pdf] [url] by Murray Shanahan et al., 2020 (10 pages).

Shanahan and colleagues give a picture of what commonsense might look like outside of a symbolic-AI set-up.

-

Read "Building machines that learn and think like people" [pdf] [url] by Brenden M. Lake et al., 2017 (72 pages).

In a technical paper, Lake and colleagues offer a view into a kind of logically robust architecture (probabilistic programming, or generative Bayes nets) that contends with strict artificial neural networks and might support some of the symbolic arguments.

-

Read "The Bitter Lesson" [url] by Richard Sutton, 2019.

This oft-referenced post by reinforcement learning pioneer Richard Sutton summarizes a view opposed to Bender’s, one that assumes scale, instead of architectural changes, is all that AI needs.

-

Read "Nativism and empiricism in artificial intelligence" [pdf] by Robert Long, 2020 (25 pages).

In a much more rigorous defense than Sutton, Long explores what representation is actually necessary in support of mind.

-

Read "Language in a new key" [pdf] by Paul Ibbotson et al., 2016 (5 pages).

Ibbotson and Tomasello offer an approachable introduction to the field of usage-based linguistics, one that rebuffs much of the Chomskyan baggage of universal, internally-meaningful symbols.

Optional Creative Pieces:

-

Check out "Galatea 2.2" [url] by Richard Powers, 1996 (329 pages).

In the novel which garnered a fan-letter from Dennett because of its accuracy, Powers explores the disembodied representations that an artificial neural net may come to form.

Semantics

Wed, Nov 23 Representation

Required:

-

Read "Minds, brains, and programs" [pdf] [url] by John R. Searle, 1980 (7 pages).

The larger-than-life Searle, whom we’ve met in asides throughout the course, submits his invective against reductive views of mind and introduces the viral Chinese Room thought experiment.

-

Read chapter fifteen from "From bacteria to Bach and back: the evolution of minds" [pdf] by D. C. Dennett, 2017 (35 pages).

Dennett takes to task arguments that vaunt human uniqueness (or original intentionality) and explores what kind of comprehension it is that artificial systems have.

Optional:

-

Read "What Have Language Models Learned?" [url] by Adam Pearce, 2021.

This is a superb way to explore what it actually is that language models are doing.

-

Read until page 203 (before Future of Artificial Intelligence section) from "What computers can't do; a critique of artificial reason" [pdf] [url] by Hubert L. Dreyfus, 1972 (314 pages).

Consider Dreyfus’ classic critique of GOFAI and the degree to which it applies (or simply informs arguments) today.

-

Read "The symbol grounding problem" [pdf] by Stevan Harnad, 1990 (11 pages).

Psychologist and student of Donald Hebb, Harnad here defends an internally-symbolic view of mind similar to that of Searle, one that gives humans an edge on intentionality.

-

Read "Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data" [pdf] [url] by Emily M. Bender et al., 2020 (13 pages).

This paper by Bender and colleagues has recently divided natural language processing scholars, rooting its arguments in Harnad.

-

Read "The Model Is The Message" [url] by Benjamin Bratton et al., 2022.

Aguera y Arcas returns with Bratton to offer a rejoinder on recent debates about understanding, possibly a third view distinct from Bender and Sutton.

Optional Creative Pieces:

-

Check out "The Great Automatic Grammatizator" [url] by Roald Dahl, 1982.

Dahl’s delightful story depicts a world perhaps not so far from our own: one in which writers are replaced by a machine.

-

Check out "The Soul of Martha, a Beast" [pdf] by Terrel Miedaner, 1981 (16 pages).

Playing with the notion of a soul—where it is and where it might go—Miedaner exposes our assumptions about mind.

Alignment

Wed, Nov 30 Future

Announcements:

- Brian Christian visits class.

Required:

-

Read chapter nine from "The Alignment Problem: Machine Learning and Human Values" [pdf] by Brian Christian, 2020 (34 pages).

Christian gives us a sense of the people and the problems around AI alignment. What are we supposed to do about AI given that we are uncertain what it might do?

-

Read "Can machines learn how to behave?" [url] by Blaise Aguera y Arcas, 2022 (20 pages).

Aguera y Arcas, an AI leader at Google, extends the mechanistic findings on the nature of moral behavior to speculate on its multiple realizability.

-

Read "If We Succeed" [pdf] [url] by Stuart Russell, 2022 (15 pages).

Berkeley’s Stuart Russell asks us to consider what happens when AI systems act outside of their specification and trots out the reinforcement-learning off-switch game as one way to regain certainty.

Optional:

-

Read "Specification gaming: the flip side of AI ingenuity" [url] by Victoria Krakovna et al., 2020 (2 pages).

This DeepMind blog post succinctly demonstrates when our expectations for what an AI should do fail to match up with what our systems actually reward.

-

Skim "Speculations Concerning the First Ultraintelligent Machine" [pdf] [url] by Irving John Good, 1966 (57 pages).

Good returns to give us a sense of one of the original formulations of an intelligence explosion.

-

Read "Against longtermism" [url] by Émile P. Torres, 2021 (10 pages).

X-risk scholar Torres takes to task the claims of longtermism, in particular what to do about the expected value of unlikely future people.

-

Read the introduction from "The precipice: existential risk and the future of humanity" [pdf] by Toby Ord, 2020 (14 pages).

Oxford’s Ord argues for longtermism, a kind of utilitarianism—how we might act differently under a scheme that weighs the costs and benefits of our actions.

-

Read chapter one from "The precipice: existential risk and the future of humanity" [pdf] by Toby Ord, 2020 (31 pages).

Optional Creative Pieces:

-

Check out "Frankenstein or The Modern Prometheus" [url] by Mary Shelley, 1818.

Essential reading for anyone, Shelley’s icy tale details the traps of scientific hubris.

-

Check out "The Pupil in Magic" [url] by Johann Wolfgang von Goethe, 1901.

Goethe’s classic, otherwise known as “The Sorcerer’s Apprentice” and popularized in the U.S. by Fantasia, is a mischievous tale about what can go wrong when what we request isn’t exactly what we want.

-

Check out "R.U.R. (Rossum's universal robots)" [url] by Karel Čapek et al., 2004 (84 pages).

Čapek, who in this story birthed the term robot (from the Czech for “forced laborer”), satirizes an industry bent on automation.

-

Check out "The Seventh Sally or How Trurl's Own Perfection Led to No Good" [pdf] by Stanislaw Lem, 1981 (9 pages).

Lem pops up again with a wry tale of improperly specified tools and the values we imbue in the systems we make.

Forecasting

Fri, Dec 02 Future

Announcements:

- Ted Chiang visits class.

Required:

-

Read it all, but particularly section eight onward, from "Artificial Intelligence as a positive and negative factor in global risk" [pdf] [url] by Eliezer Yudkowsky, 2008 (47 pages).

Yudkowsky’s quite readable speculation on future AI makes honest points even though it is based on very long time scales and optimistic assumptions.

-

Read "The Seven Deadly Sins of AI Predictions" [url] by Rodney A. Brooks, 2017 (6 pages).

Brooks returns to chide his AI colleagues on their rosy forecasts on the future of AI.

-

Read "Why Computers Won't Make Themselves Smarter" [url] by Ted Chiang, 2021 (3 pages).

Chiang extrapolates out a number of postulated intelligence explosion scenarios with an eye toward practicality: are these things likely anytime soon?

Optional:

-

Read "Artificial intelligence meets natural stupidity" [pdf] by D. McDermott, 1976 (6 pages).

Even at the beginning of AI’s expert systems era, McDermott assails the profession for their overly expansive use of terms: “wishful mnemonics.” Although it is steeped in the problems of its time, try to consider how the criticisms of the paper still apply today.

-

Read chapter eleven from "Machines like us: toward AI with common sense" [pdf] by Ronald J. Brachman et al., 2022 (18 pages).

Symbolic AI greats Brachman and Levesque, in their book in defense of logical knowledge representation, turn to consider what kind of behavior AI will need to be trustworthy.

-

Read "Forecasting Transformative AI: An Expert Survey" [pdf] [url] by Ross Gruetzemacher et al., 2019 (14 pages).

Read this for a flavor of the forecasts made about AI. Consider how much weight to give the variety of expert responses.

Optional Creative Pieces:

-

Check out "Lifecycle of Software Objects" [pdf] [url] by Ted Chiang, 2019 (111 pages).

From the masterful Chiang comes a story that challenges our ethical cores around human-level AI. Savor it even more when you read that “Experience is algorithmically incompressible”.

-

Check out "Ex machina" [url] by Alex Garland et al., 2015.

Garland’s film gives images to the more incendiary scenarios speculated to come from out of control AI.

What to value

Wed, Dec 07 Humans

Required:

-

Read the introduction (1-22) and conclusion (211-227) (48 pages) from "The Atlas of AI" [pdf] by Kate Crawford, 2021.

In her far-ranging work, Crawford investigates the hidden human and environmental costs behind the boom in AI, leaving us to wonder whether this is what we value.

-

Read chapter eight from "Reclaiming conversation: the power of talk in a digital age" [pdf] by Sherry Turkle, 2015 (21 pages).

Turkle, whose background is both in psychoanalysis and science and technology studies, brings her career working on AI at MIT to bear on the problems that have spread out of the laboratory: what to do when we spend more time with machines than each other.

Optional:

-

Read chapter two from "Fairness and machine learning" [pdf] [url] by Solon Barocas et al., 2019 (253 pages).

In their technical text, Barocas and colleagues remind us that formalisms can only help so much: we must still talk to each other and view our tools with a grain of salt.

-

Read "Problem Formulation and Fairness" [pdf] [url] by Samir Passi et al., 2019 (9 pages).

Consider this case study as an example of the labor that doesn’t get automated.

-

Read chapter two from "The Alignment Problem: Machine Learning and Human Values" [pdf] by Brian Christian, 2020 (30 pages).

Here Christian offers a perspective that unites discussions of alignment and fairness.

Optional Creative Pieces:

-

Check out "Mother of Invention" [url] by Nnedi Okorafor, 2018 (11 pages).

Afro-futurist Okorafor offers us an expanded view on what living with AI might look like.

-

Check out "The Machine Stops" [url] by E. M. Forster, 1909.

Forster, in his early twentieth century story, delivers a remarkably prescient view on what human life might be like if turned entirely digital.

-

Check out "Player piano" [url] by Kurt Vonnegut, 1999.

As I have said elsewhere, Vonnegut here offers a satire not just of the 1950s atomic age but of our informational age as well. “At issue is not that the ‘robots are taking our jobs,’ but that the facts of automation conveniently mask a consolidation of power.”

Project Presentations

Fri, Dec 09 Project

Announcements:

- Course project presentations

Final (TBD)

Mon, Dec 12 Project

Announcements:

- Meet at 2:30-4:20 pm

- TBD more course project presentations during our slated final exam time.

If you want to dive even deeper consider the readings and other courses beyond this class.