| Optional Value Iterations Programming Exercise for CSE 473 (Spring 2018) |

|

CSE 473: Introduction to Artificial Intelligence The University of Washington, Seattle, Spring 2018 |

| Purposes: This activity serves two purposes. (1) It offers some extra practice in coding up the Value Iterations method for offline solving of Markov Decision Processes. (2) It offer students who scored below the median score on the midterm a chance to have their scores adjusted so as to be closer to the median. This activity is offered in response to a student's request to have some extra work that could be used to bring up a low midterm score. In order to make it fair to all students, the adjustment formula is designed below so that it will (a) help those who are most likely to need it, (b) not change the median midterm score, (c) not necessitate additional work on the part of anyone who does not wish to do it, and (d) have no significant effect on the grades of students who scored at or above the median, whether or not they choose to do this exercise. We anticipate that doing this exercise will take between 1 and 3 hours of programming, depending on your programming fluency and whether you have become familiar with values, Bellman updates, and policies. This exercise will be graded on a binary basis (zero credit or full credit) and if full, the adjustment points (ap) will be computed from your original midterm score (oms) as follows: ap = 38 - oms/2. So if you originally had a 54, you could get 11 more points, and if you had an original midterm score of 66, you could get 5 more points, but if you had 76 on the midterm (the median) or more, then you would not receive adjustment points, although you are still welcome to do the exercise. Emilia has agreed to serve as lead TA for this activity. |

| This exercise involves implementing a form of planning, in which we assume that the parameters of an MDP (especially T and R) are known, and the agent will compute the expected utility of each state, so that the information can be uses to extract an optimal policy. |

| If you opt to do this activity, your code is due Monday, May 14 at 11:59 PM. You should turn it in via Canvas. |

| What to Do. This exercise requires Python 3.x (e.g., Python 3.5, 3.6, or 3.7) and will not run with Python 2.7. It also uses the standard GUI library Tkinter (which you get automatically when you install a standard Python distribution from Python.org). Before installing the starter code (described below), set up your computer with Python 3.x. |

| Next, download the starter code. and try running the file TOH_MDP.py. This will start up a graphical user interface, assuming that you are running the standard distribution of Python 3.x which includes the Tkinter user-interface technology. If the starter code does not run, it might be due to using PyCharm or some IDE that requires configuring paths; the staff will not be supporting the use of PyCharm in this activity, but there might be other students in the class who can help out. You should not have any problem running the starter code if you use a more standard environment such as IDLE (comes with Python from Python.org) or command-line execution from Linux. When the starter-code GUI runs, you can see that some menus are provided. Some of the menu choices are handled by the starter code, such as setting some of the parameters of the MDP. However, you will need to implement the code to actually run the Value Iteration. |

| About the files in the starter code: There are multiple files in the starter code folder, but you only need to modify two of them (mentioned above). The others can be ignored for this activity. (But leave them in your folder because some of them are required for the program to run.) |

| Implementing Value Iteration.

There is a starter file called YourUWNetID_VI.py. Rename the file according to this format and your own UWNetID. Also, change the name within TOH_MDP.py where this file is imported. Complete the implementation of the functions there:

|

Self-Check.

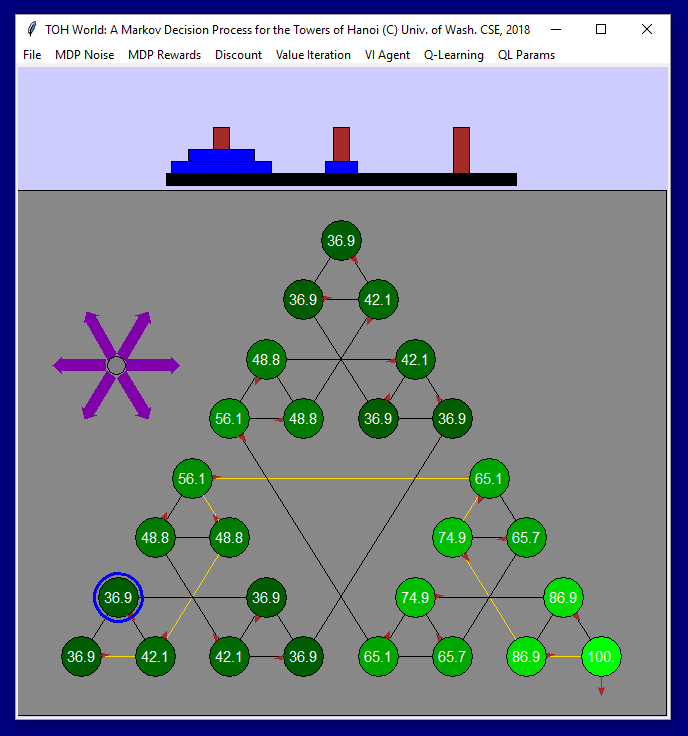

Your values and optimal policy should match those shown in this illustration.

|

| What to Turn In. Turn in your Value Iteration Python file, named with the scheme YourUWNetID_VI.py. Also, turn in your slightly modified version of TOH_MDP.py, without changing its name. (The modification this time is that it imports your Value Iterations file. You can leave the import of YourUWNetID_Q_Learn as it is in the starter code for this first submission.) Finally, also turn in a screen shot of the values you got, similar in style to the illustration above. Your file should be named using a similar naming scheme as for your VI code file... YourUWNetID_screenshot.png (or .gif or .jpg). |