It is difficult for new investors to interpret the vast amount of data about a single stock.

Users are often at a loss about which companies to invest in and how to make wise and profitable decisions.

Goal: Our website will offer users suggestions about which companies to invest in, along with information about how well those companies are performing financially.

Our website will give investors our interpretation of the currently available data.

Artifact: A website that supports stock searches and lists our top-rated stocks.

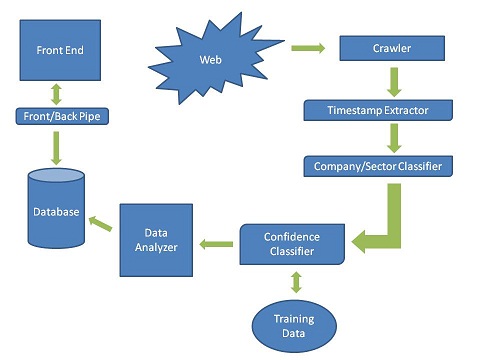

We will use machine learning for document classification. A model will assign a confidence level to each financial article. The more strongly an article suggests that its stock will do well in the near future, the higher its confidence level.
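The proposal does not name a model. One minimal way to turn an article into such a confidence level is a multinomial naive Bayes text classifier whose output is the probability that the article belongs to the "stock will do well" class. The sketch below uses only the Python standard library. Naive Bayes, the tokenizer, the 1/0 label scheme, the `NaiveBayes` and `confidence` names, and the example sentences are all illustrative assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Minimal multinomial naive Bayes with add-one (Laplace) smoothing.

    Hypothetical label scheme: 1 = article suggests the stock will do well,
    0 = it does not. Training data must contain at least one article per label.
    """

    def fit(self, texts: list[str], labels: list[int]) -> "NaiveBayes":
        self.word_counts = {0: Counter(), 1: Counter()}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set(self.word_counts[0]) | set(self.word_counts[1])
        return self

    def _log_score(self, tokens: list[str], label: int) -> float:
        # log P(label) + sum of smoothed log P(word | label) over known words
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)
        denom = sum(self.word_counts[label].values()) + len(self.vocab)
        for tok in tokens:
            if tok in self.vocab:  # words never seen in training are ignored
                score += math.log((self.word_counts[label][tok] + 1) / denom)
        return score

    def confidence(self, text: str) -> float:
        """Return P(label = 1 | text): the confidence that the stock will do well."""
        tokens = tokenize(text)
        diff = self._log_score(tokens, 1) - self._log_score(tokens, 0)
        # Numerically stable logistic function of the log-odds difference
        if diff >= 0:
            return 1.0 / (1.0 + math.exp(-diff))
        z = math.exp(diff)
        return z / (1.0 + z)


# Tiny hand-labeled training set (made-up example sentences)
train_texts = [
    "record earnings beat expectations strong growth",
    "revenue surges on strong demand",
    "shares plunge after earnings miss",
    "company warns of weak sales and layoffs",
]
train_labels = [1, 1, 0, 0]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.confidence("strong earnings growth"))  # above 0.5
print(model.confidence("shares plunge"))  # below 0.5
```

Naive Bayes is used here only because it is small and yields a probability directly. Any classifier that outputs a calibrated score could fill the same role.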

We will start by manually reading a small set of financial articles and using them as our initial training data. To expand the training set, we will use our crawler to fetch older financial articles. We will feed these articles into our machine learning model to predict a confidence level for each one. We will then compare each predicted confidence level with how the corresponding stock actually performed after the article's timestamp. If the prediction and the performance agree, we add the article to our training set. In this way, we can use old articles to grow our training set until it is large enough to support predictions on current articles.
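The agreement check described above could look roughly like the following sketch. It makes several hypothetical choices that the proposal has not settled. Each old article is assumed to already carry the model's confidence, plus the stock's closing price on the publication date and at some fixed later horizon. The threshold is 0.5, and a stock is counted as having "done well" if its price rose over the horizon. The `Article` fields, the ticker symbols, and the `agrees` and `expand_training_set` names are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class Article:
    """A past article with its model confidence and observed prices (hypothetical fields)."""
    text: str
    ticker: str
    confidence: float        # model's P(stock does well), between 0 and 1
    price_at_publish: float  # closing price on the article's publication date
    price_after: float       # closing price a fixed horizon later (assumed, e.g. 5 trading days)


def stock_did_well(article: Article) -> bool:
    # Assumed rule: the stock "did well" if its price rose over the horizon.
    return article.price_after > article.price_at_publish


def agrees(article: Article, threshold: float = 0.5) -> bool:
    """True when the predicted direction matches the stock's actual direction."""
    predicted_good = article.confidence >= threshold
    return predicted_good == stock_did_well(article)


def expand_training_set(train: list[tuple[str, int]], candidates: list[Article],
                        threshold: float = 0.5) -> list[tuple[str, int]]:
    """Append every candidate whose prediction agrees with the observed outcome.

    Mutates `train` in place and returns the (text, label) pairs that were added,
    where label 1 means the stock did well.
    """
    added = []
    for art in candidates:
        if agrees(art, threshold):
            pair = (art.text, int(stock_did_well(art)))
            train.append(pair)
            added.append(pair)
    return added


# Usage with made-up articles, tickers, and prices
train = [("record earnings beat expectations", 1), ("shares plunge after miss", 0)]
candidates = [
    Article("strong demand lifts sales", "ABC", 0.8, 100.0, 104.0),       # predicted up, went up
    Article("outlook cut amid weak demand", "XYZ", 0.3, 50.0, 47.5),      # predicted down, went down
    Article("analysts upbeat on new product", "DEF", 0.7, 20.0, 18.0),    # predicted up, went down
]
added = expand_training_set(train, candidates)
print(len(train))  # 4: the third article disagrees and is skipped
```

The added articles are labeled by the observed price movement, not by the prediction. Because disagreeing articles are discarded, the expanded set may lean toward cases the model already handles well.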

Measuring our success by how well we predict actual stock prices is impractical, because price prediction is a hard problem to which many companies devote enormous resources.

Instead, we will measure success by how accurately we classify news articles.

We can measure this by randomly sampling classified articles, reading them by hand, and checking whether our classification matches our own interpretation. This gives us sample estimates of precision and recall for our classifications.
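Once a random sample has been labeled by hand, sample precision and recall follow directly from the counts of agreements and disagreements. Below is a minimal sketch. It treats our hand labels as ground truth and assumes binary labels (1 = stock expected to do well). The function names `precision_recall` and `sample_for_review` and the example labels are hypothetical.

```python
import random


def precision_recall(predicted: list[int], hand_labels: list[int],
                     positive: int = 1) -> tuple[float, float]:
    """Precision and recall of `predicted` against hand labels for one class."""
    pairs = list(zip(predicted, hand_labels))
    tp = sum(1 for p, h in pairs if p == positive and h == positive)  # true positives
    fp = sum(1 for p, h in pairs if p == positive and h != positive)  # false positives
    fn = sum(1 for p, h in pairs if p != positive and h == positive)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def sample_for_review(classified: list, k: int, seed: int = 0) -> list:
    """Draw k classified articles uniformly at random (without replacement) for manual checking."""
    rng = random.Random(seed)
    return rng.sample(classified, k)


# Made-up example: model output vs. our hand labels for 8 sampled articles
predicted   = [1, 1, 0, 1, 0, 0, 1, 0]
hand_labels = [1, 0, 0, 1, 1, 1, 1, 0]
p, r = precision_recall(predicted, hand_labels)
print(p, r)  # 0.75 0.6
```

Precision here is the fraction of articles we marked "will do well" that we also judged that way by hand. Recall is the fraction of hand-judged "will do well" articles the model caught.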