Lecture: Address Translation & Paging

Physical Memory

- byte addressable (can refer to each byte in memory), limited size

- ~200 cycles of access latency (for reference, common instructions complete within 7 cycles)

- a process's code and data need to be in memory to execute

Physical Memory Management

- another resource allocation problem: limited physical memory, multiple processes

- so how do we allocate memory?

- simple case: one process at a time

- give the entire physical memory to the process

- no translation needed, process's address = physical address

- pros vs cons?

- actual case: multiple processes

- attempt 1:

- if we know how much memory each process needs, we can just put each process's memory into a disjoint section of physical memory

- do we need address translation now? how do we support fork?

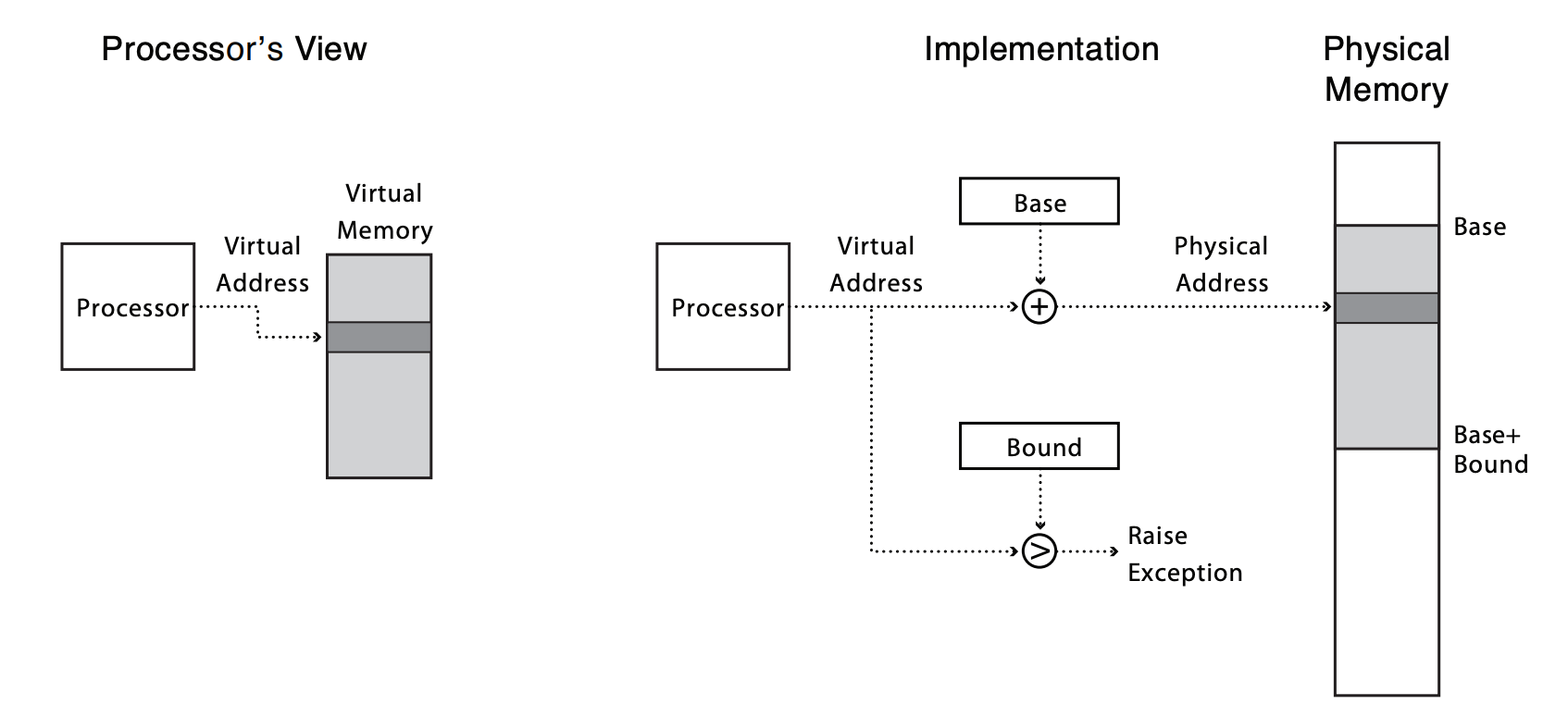

- virtual memory: every process has its own view of memory

- virtual address vs physical address

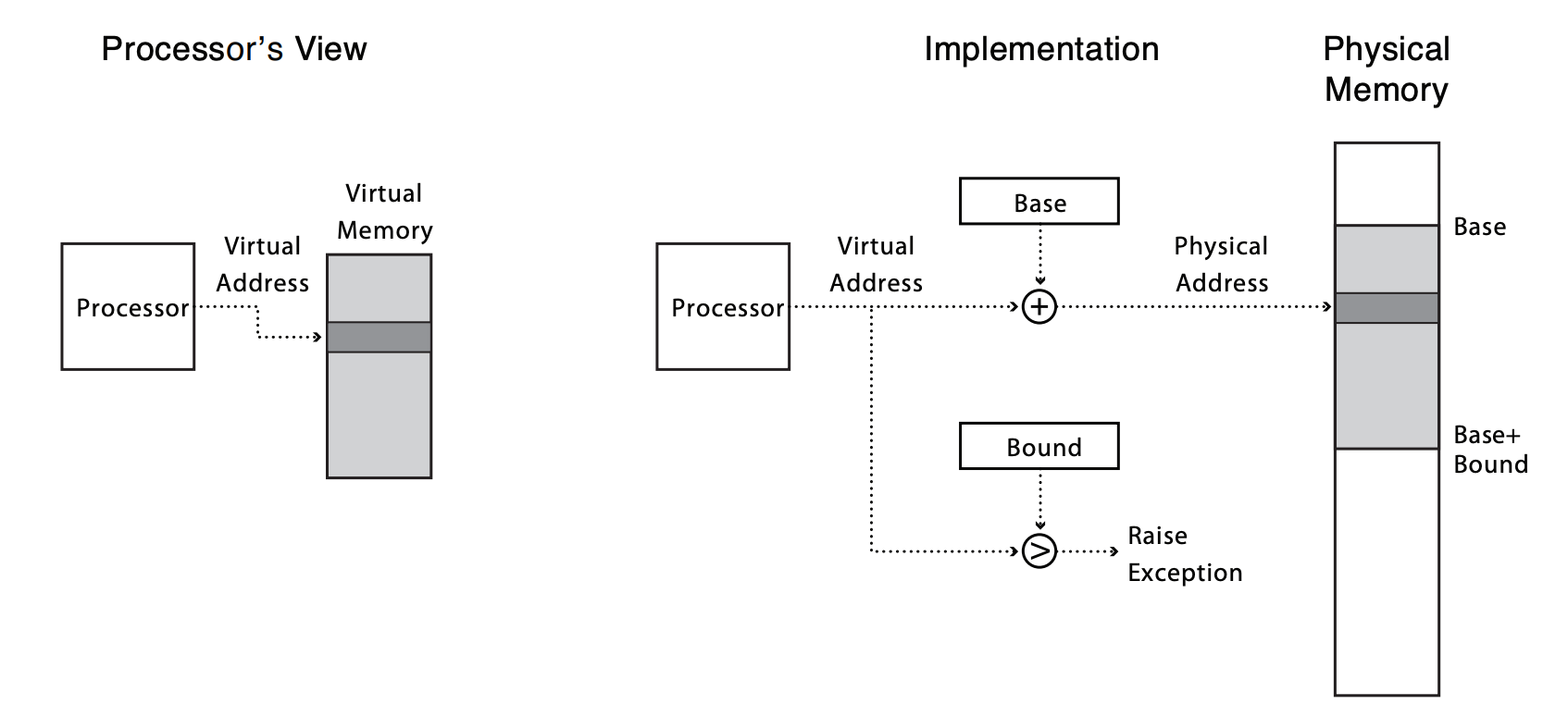

- hw support: base and bound registers

- pros vs cons? what are the limitations?
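To make the base and bound idea concrete, here is a minimal sketch of the check the hardware would perform on each access. The struct and function names are hypothetical, and real hardware keeps these values in registers rather than in a struct.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-process relocation state (assumed names). */
struct base_bound {
    uint64_t base;   /* physical address where the process's memory starts */
    uint64_t bound;  /* size of the process's memory in bytes */
};

/* Translate a virtual address. Returns false if the access is out of
 * bounds, which models the exception the hardware would raise. */
bool bb_translate(const struct base_bound *bb, uint64_t vaddr, uint64_t *paddr)
{
    assert(bb != NULL && paddr != NULL);
    if (vaddr >= bb->bound)
        return false;
    *paddr = bb->base + vaddr;   /* relocation is a single addition */
    return true;
}
```

Note that the whole process must still sit in one contiguous chunk of physical memory, which is one of the limitations to think about.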

- attempt 2:

- does a program need all of its memory at once?

- how can we make more efficient use of physical memory?

- paging: divide process memory into fixed chunks, only keep ones needed in physical memory

Paging

- divide a process's memory into fixed sized pages (typically 4KB)

- only keep the pages we currently need in memory (what might those be?)

- dynamically load other pages into memory as needed

- access to a page not in memory causes a page fault

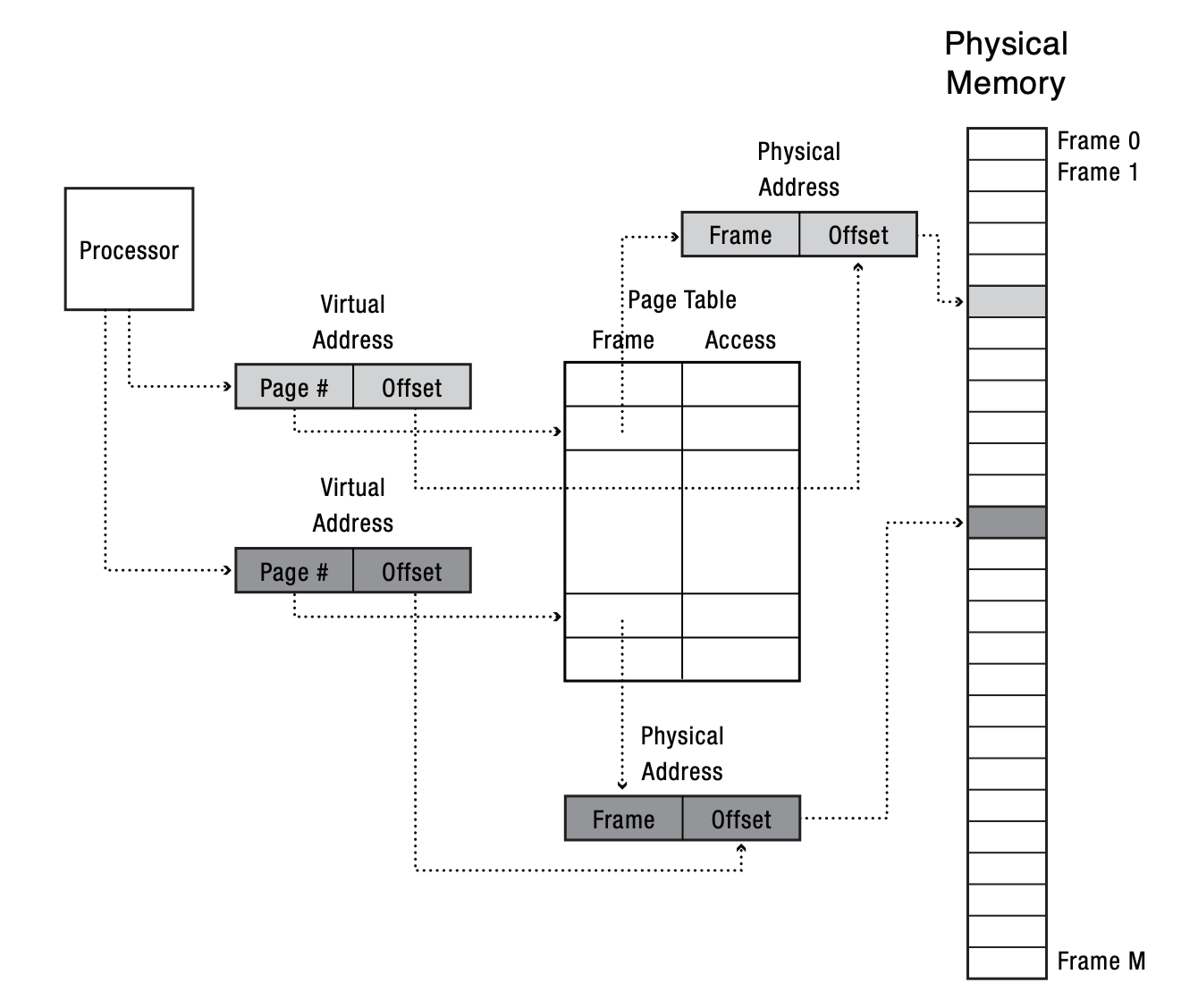
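With fixed-size pages, every address splits into a page number and an offset within that page. A minimal sketch, assuming the typical 4KB page size mentioned above (the function names are illustrative only):

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SIZE  4096u   /* 4KB pages */
#define PAGE_SHIFT 12      /* log2(PAGE_SIZE) */

/* Which page the address falls in. */
uint64_t page_number(uint64_t vaddr) { return vaddr >> PAGE_SHIFT; }

/* Offset of the address within its page. */
uint64_t page_offset(uint64_t vaddr) { return vaddr & (PAGE_SIZE - 1); }

/* Number of pages needed to hold `bytes` bytes (rounds up). */
uint64_t pages_needed(uint64_t bytes)
{
    assert(bytes <= UINT64_MAX - (PAGE_SIZE - 1));   /* avoid overflow */
    return (bytes + PAGE_SIZE - 1) >> PAGE_SHIFT;
}
```

For example, address 0x12345 lies in page 0x12 at offset 0x345, and a 4097-byte region needs 2 pages.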

Address Translation With Paging

- how would we implement this purely in software?

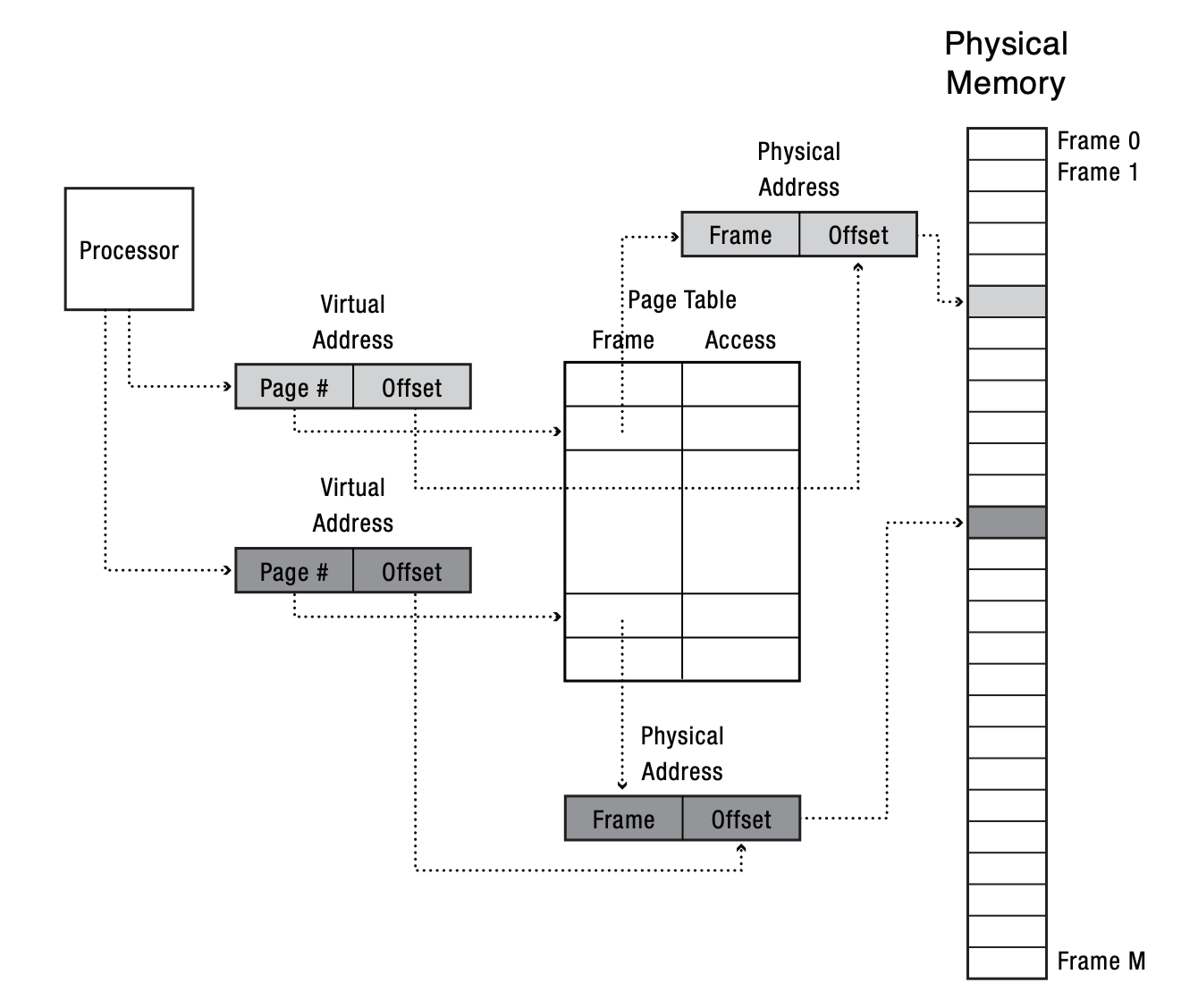

- divide up physical memory into page sized chunks

- each chunk of physical memory is called a frame, page frame, or physical page

- track which page is mapped to which frame (physical memory)

- is this information per process or per entire system?

- what data structure can we use to store this info?

- what's the cost for accessing the data structure?

- where do we store the data structure?

- how many of these translation mappings would we need to store?

- on every memory access, transfer control to the kernel and ask it to perform address translation

- how is it actually done?

- page table

- data structure for storing page to frame mappings

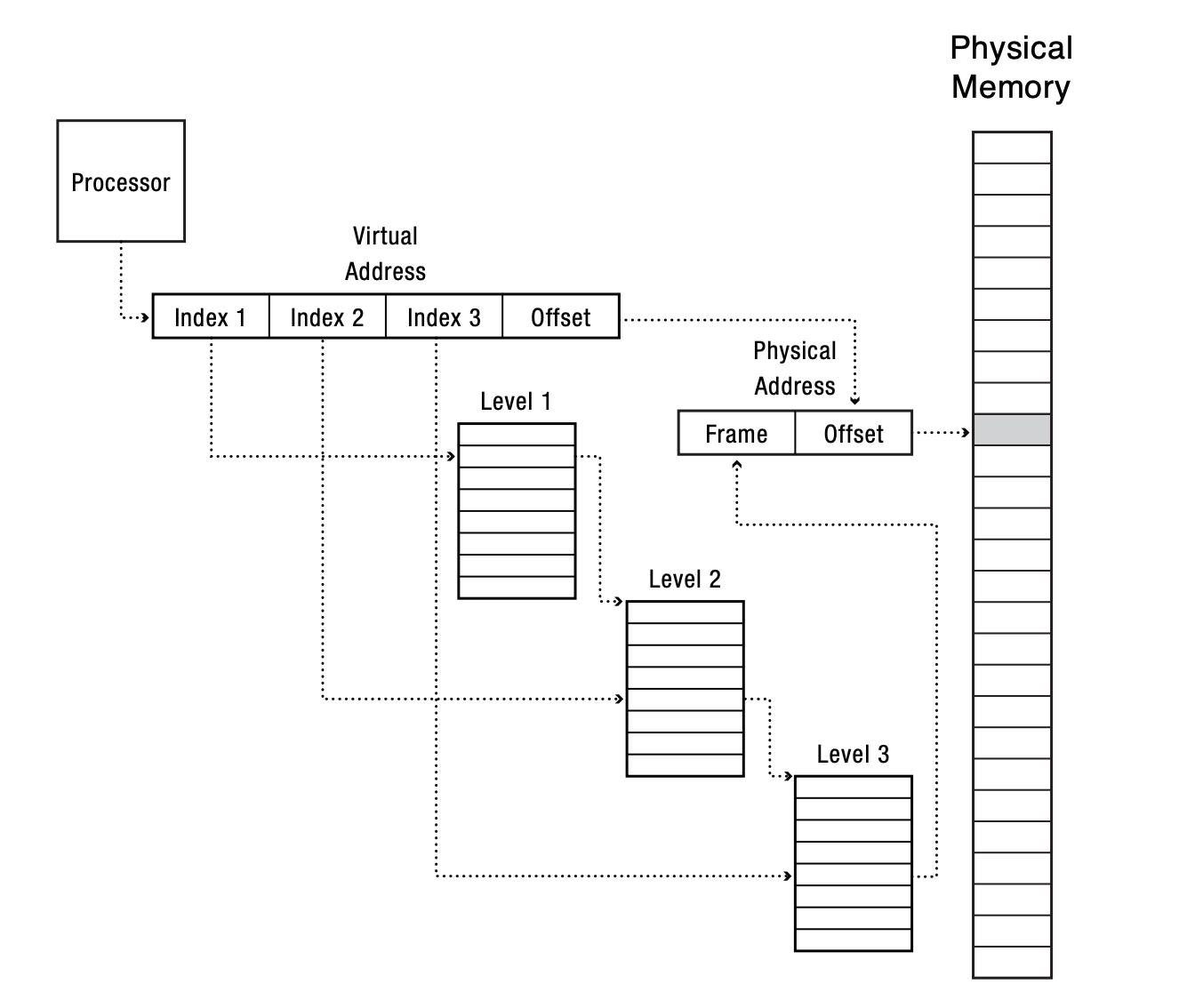

- single level

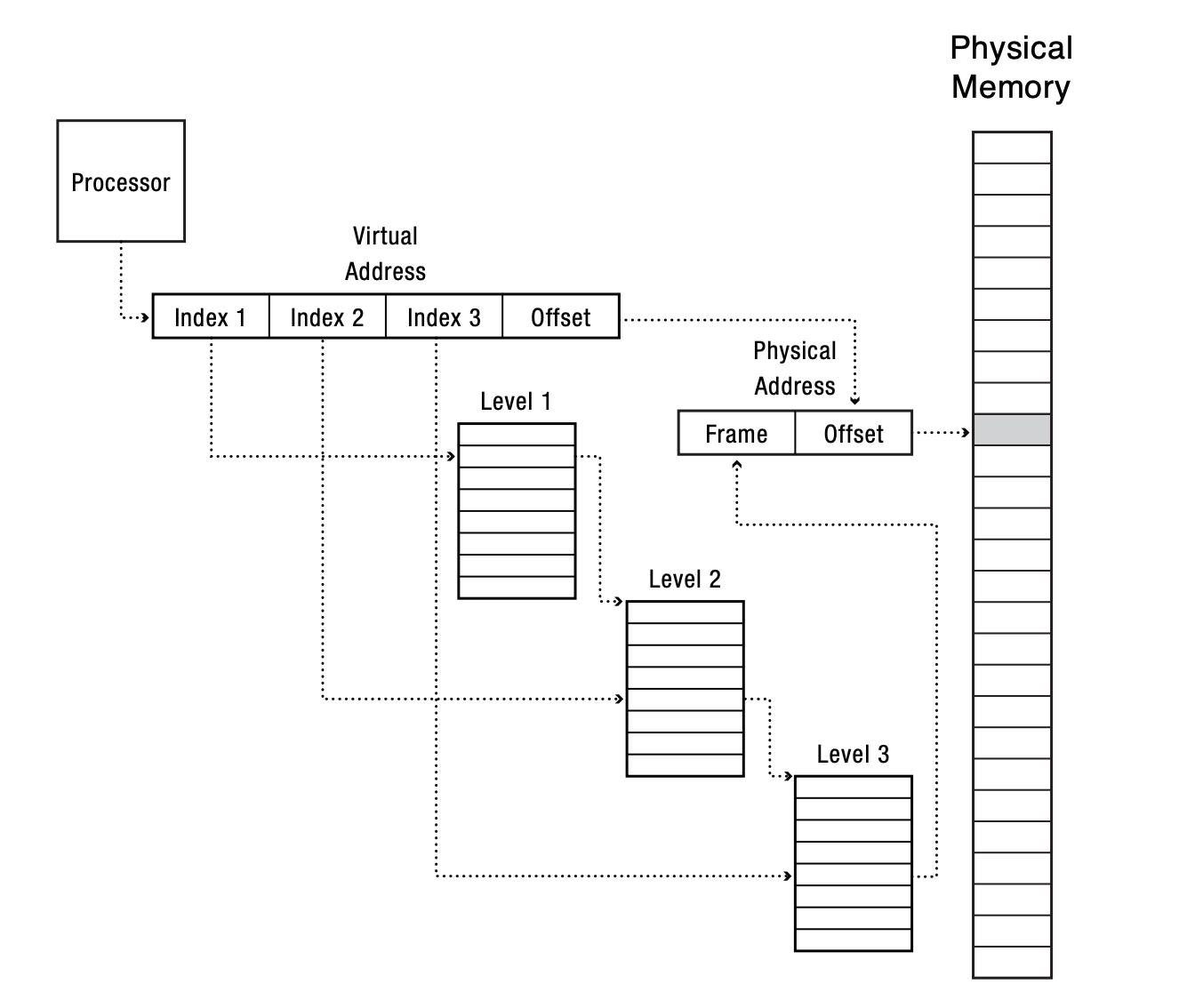
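A single level page table can be sketched as an array indexed by virtual page number. This is a toy model under stated assumptions: a 16-page address space and a made-up entry layout, not any real hardware format.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define NUM_VPAGES 16   /* toy address space: 16 pages = 64KB (assumed) */

struct toy_pte {        /* hypothetical entry layout */
    bool     present;   /* is the page currently in physical memory? */
    uint32_t frame;     /* physical frame number */
};

/* Returns true and fills *paddr on success; false models a page fault. */
bool pt_lookup(const struct toy_pte *table, uint32_t vaddr, uint32_t *paddr)
{
    assert(table != NULL && paddr != NULL);
    uint32_t vpn    = vaddr >> PAGE_SHIFT;
    uint32_t offset = vaddr & ((1u << PAGE_SHIFT) - 1);
    if (vpn >= NUM_VPAGES || !table[vpn].present)
        return false;
    /* Swap the page number for the frame number; the offset is unchanged. */
    *paddr = (table[vpn].frame << PAGE_SHIFT) | offset;
    return true;
}
```

The lookup is one array access, but the table needs an entry for every virtual page whether or not it is mapped.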

- multilevel

- indirection can help with space saving (when does it not?)

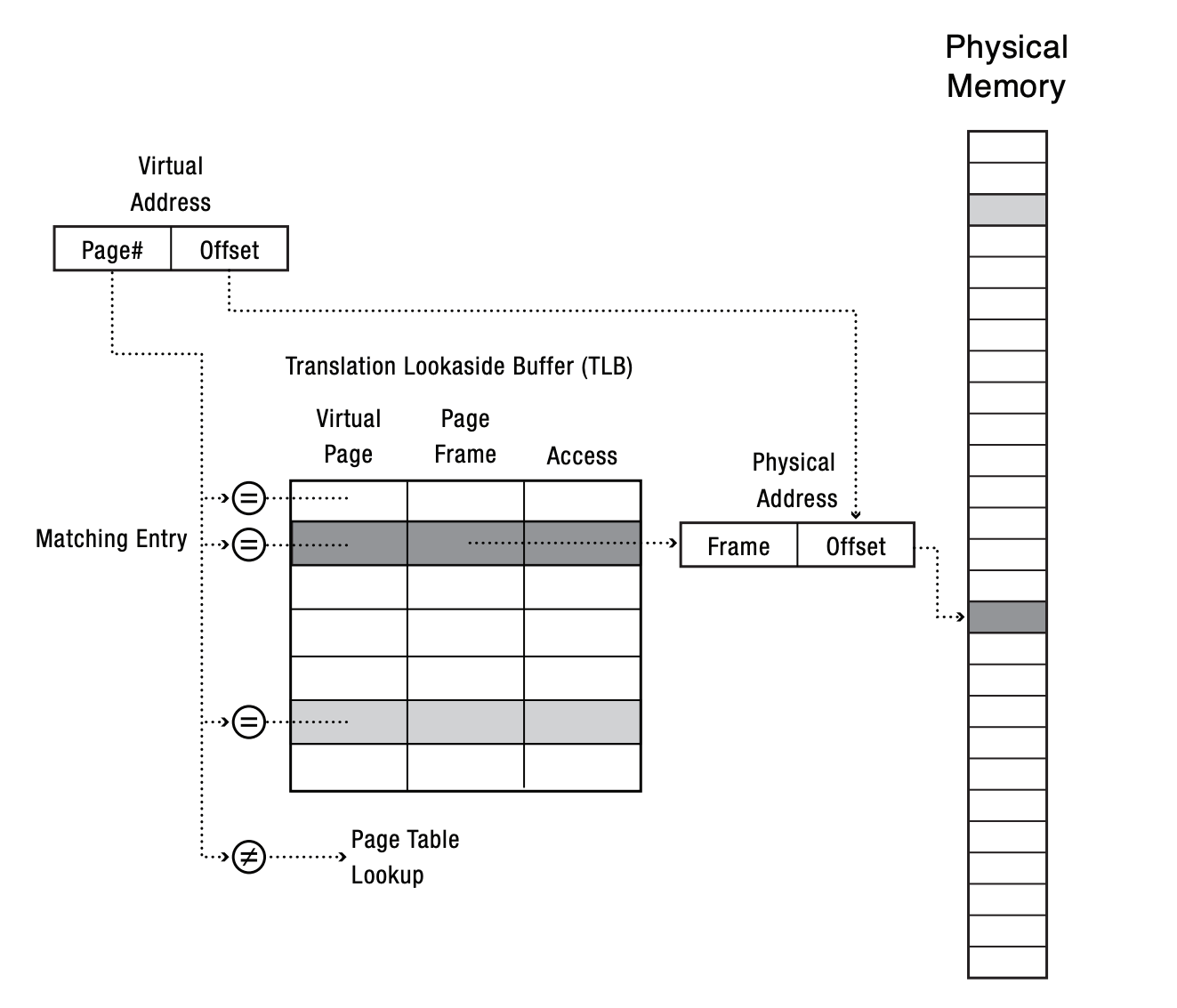
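The space trade-off can be checked with some arithmetic. The sketch below assumes 8-byte entries, 512-entry (4KB) tables, and 4 levels, which are the x86-64 parameters covered later in these notes. It also assumes the mapped pages are contiguous and start at virtual page 0, which is the best case for the multilevel layout.

```c
#include <assert.h>
#include <stdint.h>

#define ENTRY_SIZE        8u     /* bytes per entry (assumed) */
#define ENTRIES_PER_TABLE 512u   /* 4KB table / 8-byte entries */
#define LEVELS            4u

/* Bytes for a flat table covering `vpages` virtual pages, mapped or not. */
uint64_t flat_table_bytes(uint64_t vpages) { return vpages * ENTRY_SIZE; }

/* Bytes for a multilevel table mapping `mapped` contiguous pages starting
 * at virtual page 0. Only tables holding a valid entry are allocated. */
uint64_t multilevel_table_bytes(uint64_t mapped)
{
    assert(mapped > 0);
    uint64_t tables = 0, covered = mapped;
    for (unsigned level = 0; level < LEVELS; level++) {
        /* tables at this level = ceil(entries needed / 512) */
        covered = (covered + ENTRIES_PER_TABLE - 1) / ENTRIES_PER_TABLE;
        tables += covered;
    }
    return tables * ENTRIES_PER_TABLE * ENTRY_SIZE;
}
```

With a 48-bit address space (2^36 pages), the flat table costs 512GB no matter what. Mapping a single page with the multilevel layout costs 4 tables (16KB), but mapping all 2^36 pages costs slightly more than the flat table, because the upper levels are pure overhead.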

- how often do we need to perform address translation?

- how can we speed it up?

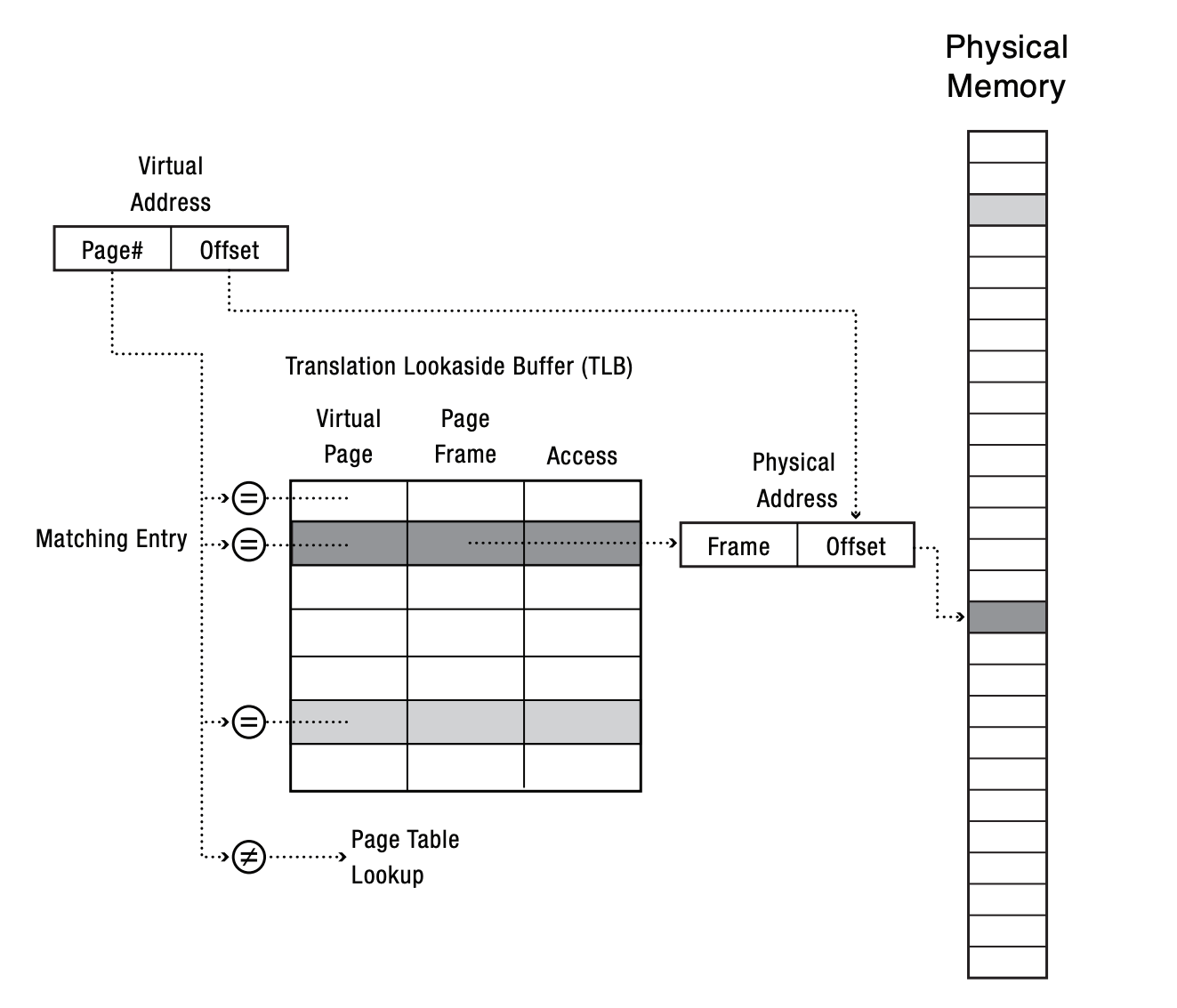

- cache the translation lookup! Translation Lookaside Buffer (TLB)

- upon a memory access, the hardware checks if the translation for the page is cached in the TLB

- if not, walk the page table to find the corresponding frame, and add that to the TLB

- have hardware perform the translation look up (page table walk)

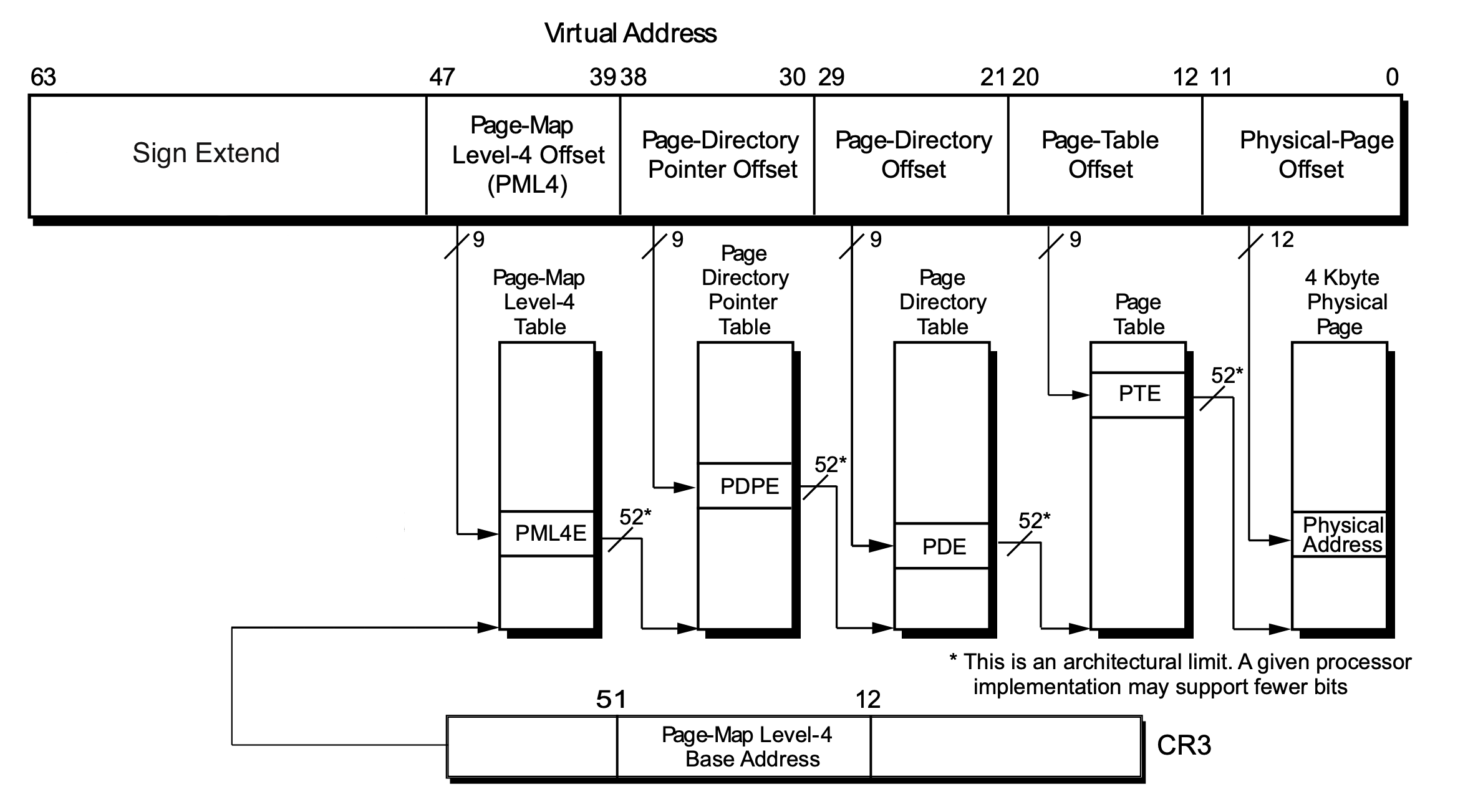

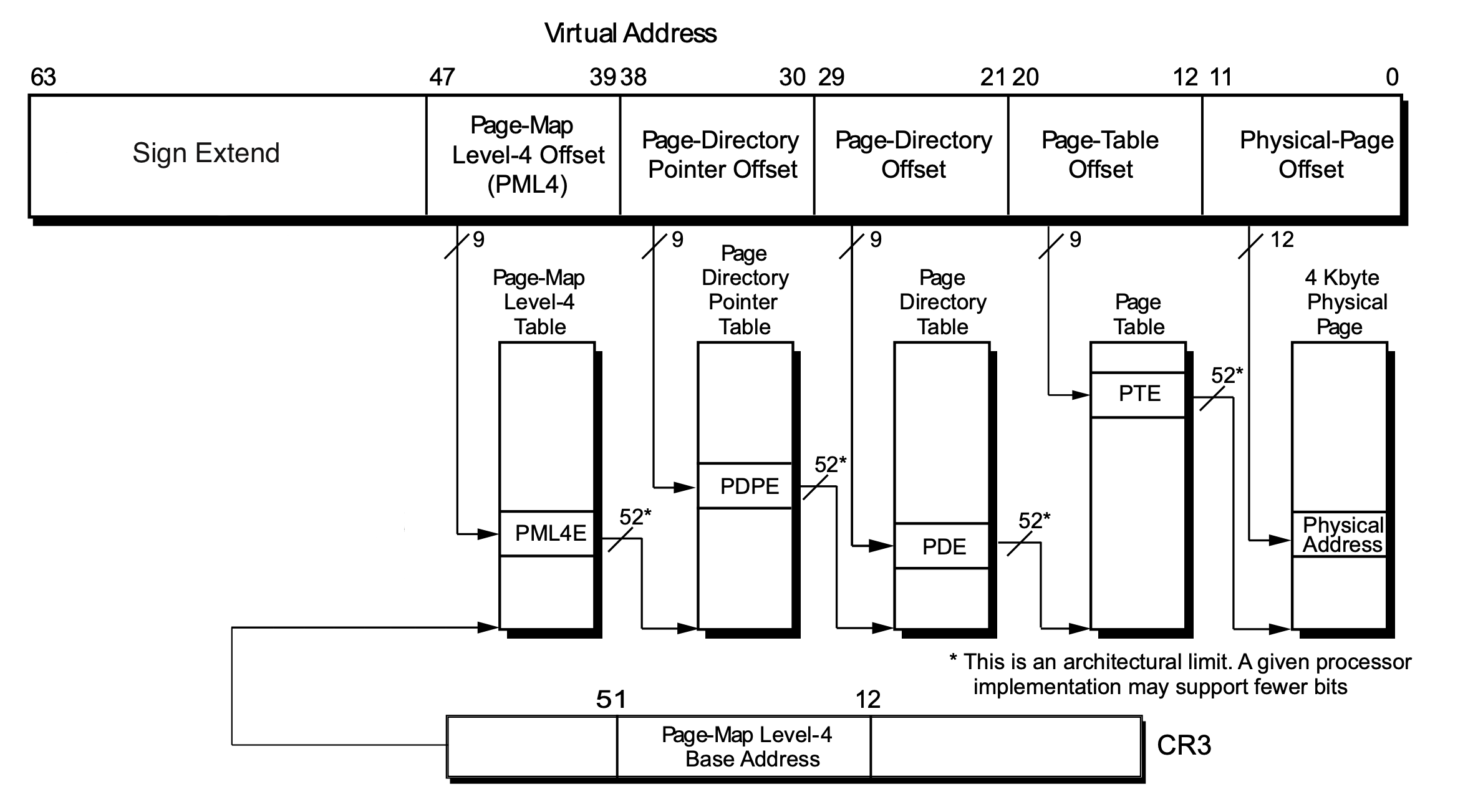
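The TLB hit and miss paths can be modeled in software. This is only a sketch: the TLB here is direct mapped with 16 entries, and `walk_page_table` is a hypothetical stand-in that maps page n to frame n + 100. Real TLBs are hardware structures with different sizes and associativity.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT  12
#define TLB_ENTRIES 16   /* assumed size */

struct tlb_entry { bool valid; uint64_t vpn; uint64_t frame; };
struct tlb { struct tlb_entry e[TLB_ENTRIES]; unsigned hits, misses; };

/* Hypothetical stand-in for the page table walk. */
static uint64_t walk_page_table(uint64_t vpn) { return vpn + 100; }

uint64_t tlb_translate(struct tlb *t, uint64_t vaddr)
{
    assert(t != NULL);
    uint64_t vpn = vaddr >> PAGE_SHIFT;
    struct tlb_entry *slot = &t->e[vpn % TLB_ENTRIES];   /* direct mapped */
    if (slot->valid && slot->vpn == vpn) {
        t->hits++;                        /* translation was cached */
    } else {
        t->misses++;                      /* walk, then cache the result */
        slot->valid = true;
        slot->vpn   = vpn;
        slot->frame = walk_page_table(vpn);
    }
    return (slot->frame << PAGE_SHIFT) | (vaddr & ((1ull << PAGE_SHIFT) - 1));
}
```

Two accesses to the same page cost one walk; the second is a hit. An access to a different page that lands in the same slot evicts the old entry.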

x86-64 Address Translation

the architecture specification defines the format of the page table

x86-64 page table format

- 4 level page table

- PML4: Page Map Level 4, top level page table, each entry stores the address of a PDPT

- PDPT: Page Directory Pointer Table, 2nd level page table, each entry stores the address of a PDT

- PDT: Page Directory Table, 3rd level page table, each entry stores the address of a PT

- PT: Page Table, last level page table, each entry stores the address of the mapped frame

- each table is 4KB in size and each table entry is 8 bytes

- 4096 (table size) / 8 (entry size) = 512 (entries)

- each table is indexed with 9 bits of the virtual address

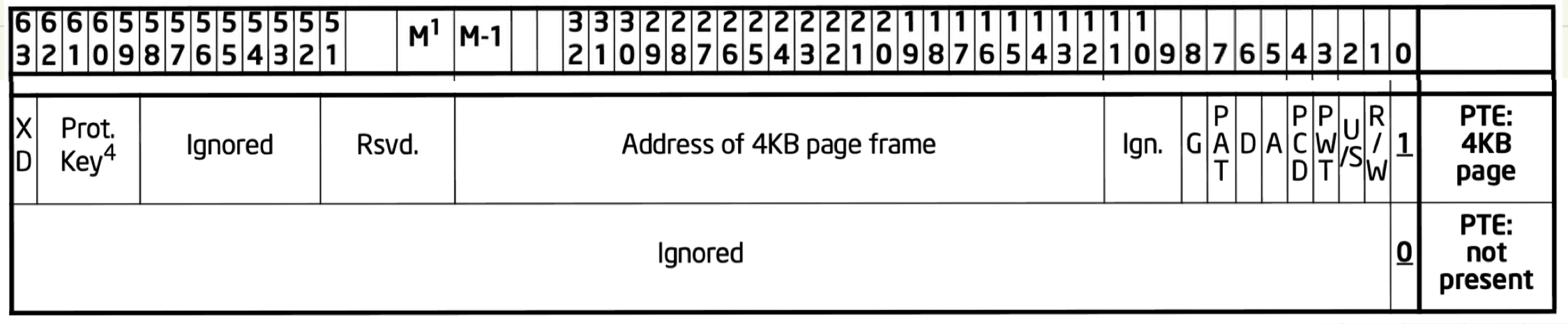

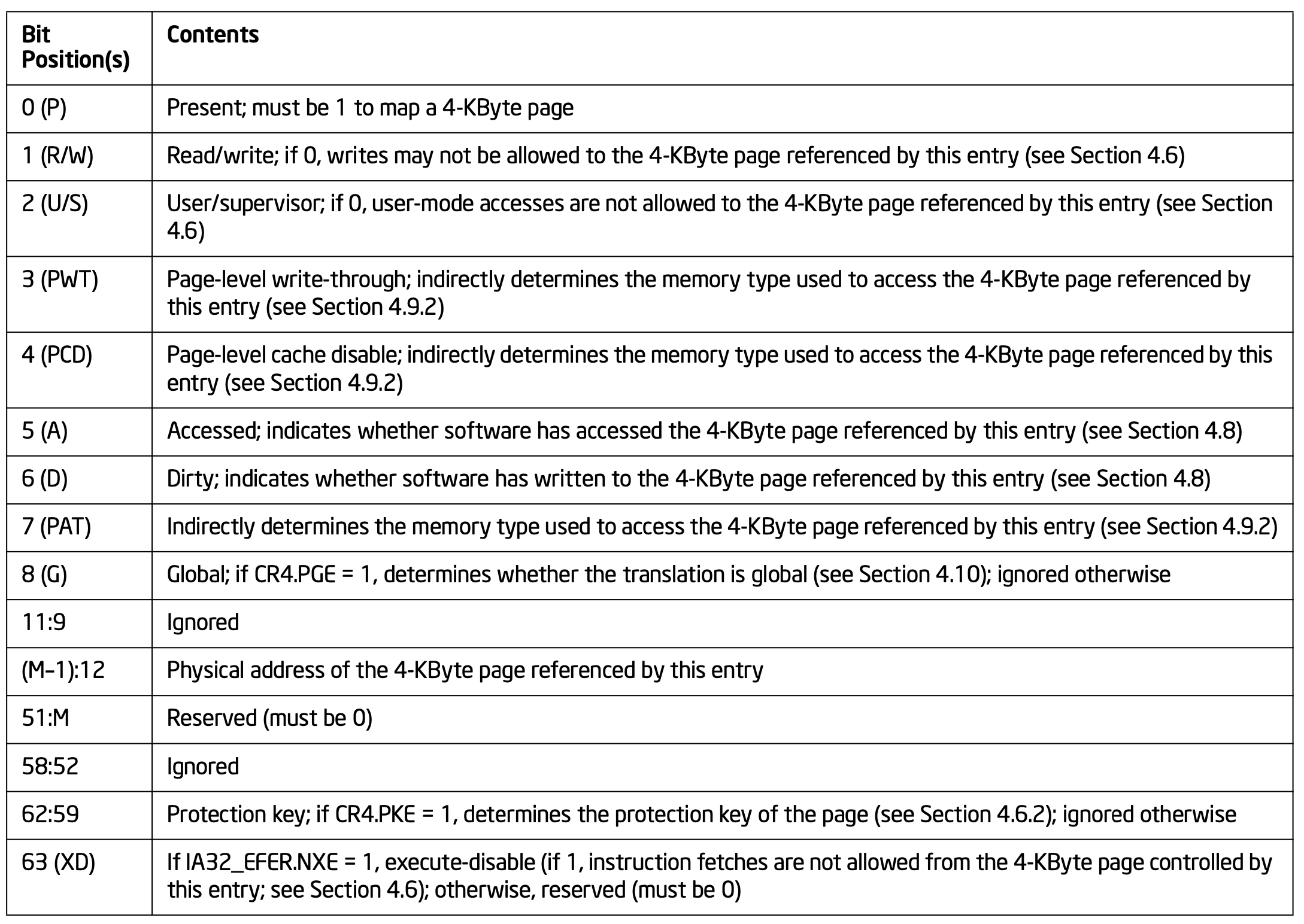

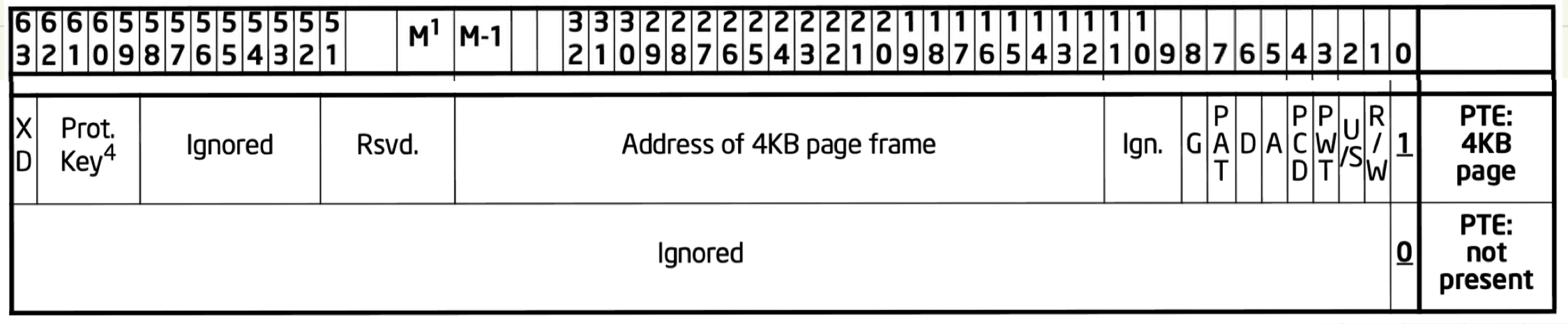

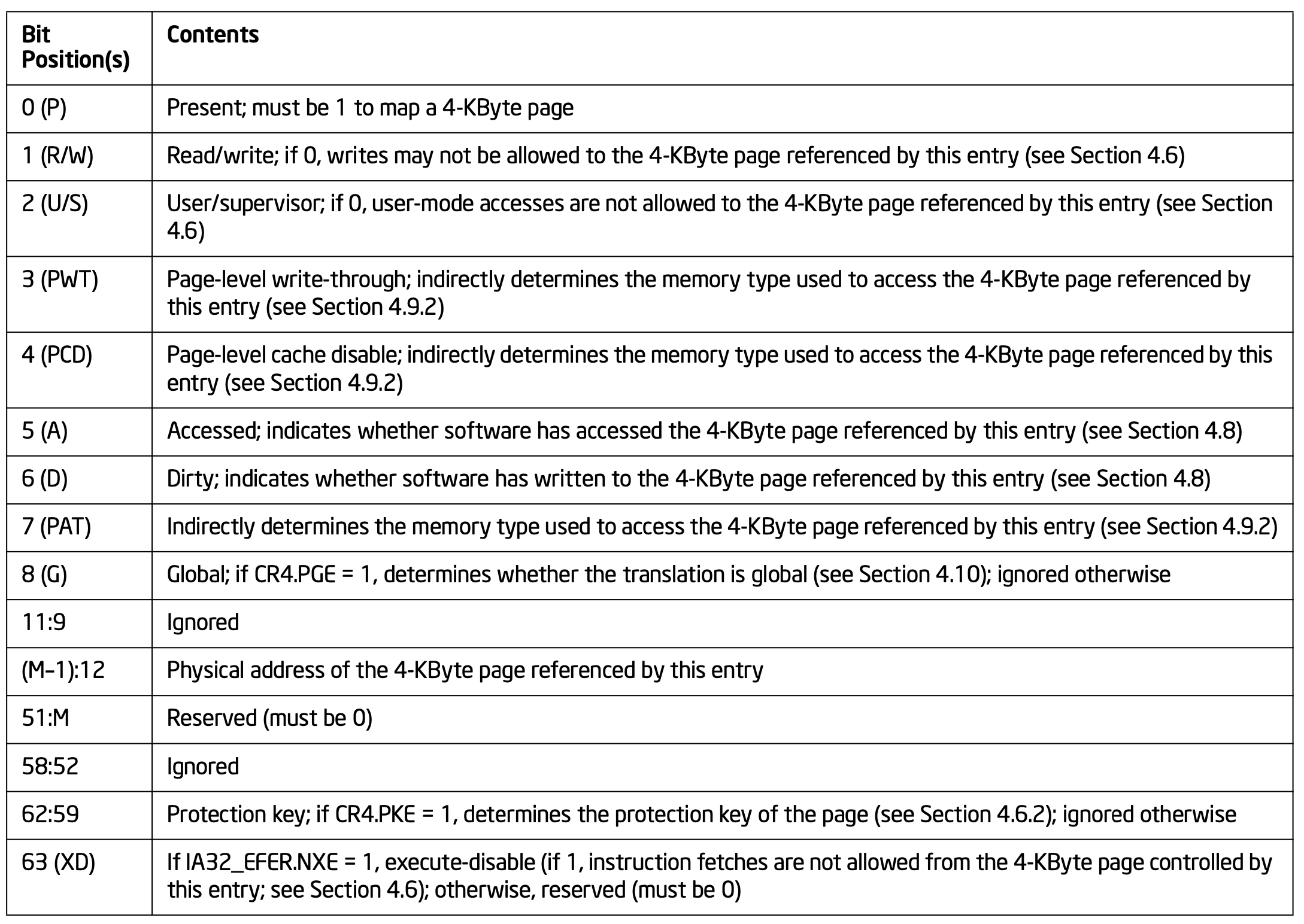
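Four 9-bit indices plus a 12-bit page offset account for 4 * 9 + 12 = 48 bits of the virtual address. A minimal sketch of pulling those fields out of an address (the function names and the level numbering are assumptions made for illustration):

```c
#include <assert.h>
#include <stdint.h>

/* Index into the table at `level`: 3 = PML4, 2 = PDPT, 1 = PDT, 0 = PT. */
unsigned x86_table_index(uint64_t vaddr, unsigned level)
{
    assert(level <= 3);
    return (unsigned)((vaddr >> (12 + 9 * level)) & 0x1ff);   /* 9 bits */
}

/* Offset within the 4KB page: the low 12 bits. */
unsigned x86_page_offset(uint64_t vaddr)
{
    return (unsigned)(vaddr & 0xfff);
}
```

So bits 39-47 index the PML4, bits 30-38 the PDPT, bits 21-29 the PDT, bits 12-20 the PT, and bits 0-11 are the offset into the frame.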

- what does the 8 byte page table entry look like?

- page table entry:

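- Bits 0-11 contain information about the page (bit 0: present, 1: writable, 2: user accessible)

- Bits 12-51 contain the physical page number of the frame (bits above the CPU's physical address width are reserved and must be 0)

- Bits 52-63 are either ignored by the hardware or hold other permission info about the page (bit 63: no-execute, so the page is executable only when this bit is clear)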

- why do we care about the format if hardware does the walk and permission checking?

- the kernel is responsible for setting up the page tables and filling out the entries

- the kernel can use these bits to make paging policy decisions

- e.g. bit 5 (accessed) indicates whether the page has been accessed, and bit 6 (dirty) indicates whether the page has been written to
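Since the kernel fills out the entries, it needs helpers to build and inspect them. A minimal sketch: the macro and function names are hypothetical (not necessarily what xk uses), and the address mask assumes the architectural layout in which the frame address may extend up to bit 51. Bit 63 is treated as no-execute, as in the architecture manuals.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PTE_P   (1ull << 0)    /* present */
#define PTE_W   (1ull << 1)    /* writable */
#define PTE_U   (1ull << 2)    /* user accessible */
#define PTE_A   (1ull << 5)    /* accessed, set by hardware */
#define PTE_D   (1ull << 6)    /* dirty (written to), set by hardware */
#define PTE_NX  (1ull << 63)   /* no-execute */
#define PTE_ADDR_MASK 0x000ffffffffff000ull   /* bits 12-51 */

/* Build an entry from a page-aligned frame address and flag bits. */
uint64_t pte_make(uint64_t frame_paddr, uint64_t flags)
{
    assert((frame_paddr & ~PTE_ADDR_MASK) == 0);   /* aligned and in range */
    return frame_paddr | flags;
}

/* Physical address of the frame the entry points to. */
uint64_t pte_frame_addr(uint64_t pte) { return pte & PTE_ADDR_MASK; }

/* Test whether a flag bit is set in the entry. */
bool pte_has(uint64_t pte, uint64_t flag) { return (pte & flag) != 0; }
```

A present, writable, user-accessible mapping of the frame at 0x1234000 is the entry 0x1234007.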

Page Faults

- an exception raised by the hardware when something goes wrong during the page table walk

- could be missing the translation mapping or violating the access permission

- how does the kernel handle a page fault?

- identify and handle valid page faults

- stack or heap growth

- memory mapped files

- known permission mismatch

- memory pressure (access to swapped pages)

- terminate threads with invalid page faults

- nullptr, random address in unallocated virtual memory

- actual permission mismatch
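The valid vs invalid split above can be sketched as a decision function over the kernel's own bookkeeping. Everything here is hypothetical: the struct fields, the enum, and the simplified rules are assumptions for illustration, not xk's actual structures.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum fault_action { FAULT_MAP_PAGE, FAULT_COPY_PAGE, FAULT_SWAP_IN, FAULT_KILL };

/* Hypothetical per-page bookkeeping kept outside the page table. */
struct page_info {
    bool in_region;   /* address lies in a region the process may use,
                         including room for stack or heap growth */
    bool resident;    /* a frame currently backs the page */
    bool swapped;     /* contents were written out under memory pressure */
    bool cow;         /* page is shared copy-on-write */
};

/* Decide how to handle a fault. pi == NULL means no bookkeeping exists
 * for the faulting address. */
enum fault_action classify_fault(const struct page_info *pi, bool is_write)
{
    if (pi == NULL || !pi->in_region)
        return FAULT_KILL;          /* nullptr or unallocated address */
    if (!pi->resident)              /* valid page, just not in memory */
        return pi->swapped ? FAULT_SWAP_IN : FAULT_MAP_PAGE;
    if (is_write && pi->cow)
        return FAULT_COPY_PAGE;     /* known permission mismatch */
    return FAULT_KILL;              /* actual permission mismatch */
}
```

The hardware only reports that the walk failed; telling these cases apart requires information the page table does not hold.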

- the kernel needs bookkeeping structures to track information (unrelated to address translation) about each page

- machine independent bookkeeping structures vs machine dependent page table

- machine independent structures in xk: vspace, vregion, vpage_info

- track the size of each region (stack, heap, code), whether a page is backed by a file, and whether a page is copy-on-write (cow)

- machine dependent structures in xk: the x86-64 page table (x86_64vm.c)

- used for actual translation information

- you can update just vspace and generate a new machine dependent page table with vspaceinvalidate

- last thing: how does the TLB interact with page fault handling?

- if we change the permission of a page while handling a page fault (e.g. cow), is the cached result in the TLB still valid?

- if we add a new mapping while handling a page fault (e.g. stack growth), do we need to do anything to the TLB?