agents/SkeletonAgent.py & randomAgent.py: These are two default agents that play the game by choosing random or first possible move and you can see how it interacts withgenmoves.py, so you can make your own agent do that.backgammon_dsbg.py: edit this file to implement your DSBG agent.backgammon_ssbg.py: edit this file to implement your SSBG agent.client.py: This is the main file that runs a game between two agents. Details on how to run a game are given below in section F.ui/: This directory is for the GUI. You SHOULD NOT change anything in any of the files in this folder.game_engine/:This directory contains code that provides the logistic about the board and generates the move. You SHOULD NOT change anything in any of the files but defintely take a look at explanations below.boardState.py: This file provides a representation for the states in both versions of the game. Your agents will make moves by receiving from the game master a reference to the current state, plus the result of rolling the dice (in the case of SSBG) or just two fixed pseudo-dice values (in the case of DSBG), and then returning the description of a legal move. There is a sample agent file that you can examine to see what the data format is for the legal move.genmoves.py: Provides functionality to generate one or more (or all) legal moves from a given state. To see an example of using this functionality, look atSkeletonAgent.pytestStates.py: This file contains some example states in Simplified Backgammon -- basically either DSBG or SSBG, that you might find useful when analyzing to see how your agent does in some special situations.

| Assignment 3: Adversarial Search |

|

CSE 415: Introduction to Artificial Intelligence The University of Washington, Seattle, Autumn 2022 |

|

The reading for this assignment is

Search

in The Elements of Artificial Intelligence with Python.

|

|

Due Wednesday, October 26

at 11:59 PM. This is a partnership assignment and students

may work in partnerships of 2 or work individually. Those in partnerships

are eligible for the optional early-bird and collaboration-retrospective extra credit (5 points).

To qualify, turn in a working version of the first agent (backgammon_dsbg.py must pass all Autograder tests)

and the partnership information file (partnership.txt) by Friday, October 21 at 11:59 PM,

and later include the collaboration retrospective in your final report. Please read section J on

what to submit to Gradescope.

Before turining in your final submission, please make sure that you are able to run a full game with both of your agents.

After the assignment deadline, we will run all of your DSBG agents in a round-robin tournament. The class winner will be awarded

5 extra points on this assignment.

|

| A. Introduction This assignment is to write a pair of game-playing agents that can play two simplified versions of the game Backgammon. This game normally involves rolling dice. In the first version of the game, there is no dice-rolling. Instead, it's as if one die had only 1s on all six of its faces and the other die had only 6s on all six if its faces. This adaptation removes the stochastic element of Backgammon, and it allows Minimax and Alpha-Beta pruning to be the appropriate techniques of choice for an agent. We'll call this variation of the game "Deterministic Simplified Backgammon" (DSBG). In the second version of the game, the dice rolling works normally, so expectiminimax search is the way to go, and neither Minimax nor Alpha-Beta is appropriate. We'll call this variation of the game "Stochastic Simplified Backgammon" (SSBG). In both versions, several of the rules of normal Backgammon are removed or simplified so that the new versions are easier to play. |

| B. Reading The reading for this option consists of four parts: (a) some optional background on the game and its recent resurgence in popularity, (b) Search in The Elements of Artificial Intelligence with Python, which includes material on minimax search and alpha-beta pruning, (c) the lecture slides on Expectimax search linked from our course calendar, and, if needed, (f) the article on expectiminimax search at Wikipedia. |

| C. Game Rules

To get started with this option, read the standard

rules of Backgammon.

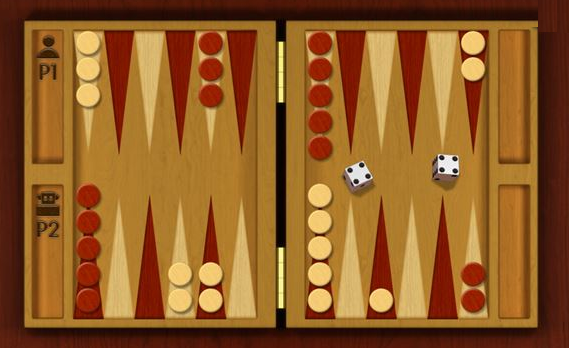

The boards for Backgammon come in many different color arrangements, as do the "checkers" and dice. Above are photos a couple of different ones. The one on the right is courtesy of former lead TA Amir Mola's parents. The version of the board implemented in our A3 starter-code initial state and game master is like the board on the left, but flipped upside down, so white is moving clockwise and red is moving counter-clockwise. Here are the simplifications we are assuming in both of our simplified versions of the game (deterministic and stochastic):

|

D. Working with the Starter Code

|

E. What to Implement

You should create two agents. One will be able to play DSBG (Minimax + Alpha-Beta Pruning). The other will be able

to play SSBG (Expectimax). These two agents can be very similar, except that the algorithms they

use for choosing a best move should be different.

As mentioned above, starter files are included for these agents:

backgammon_dsbg.py and backgammon_ssbg.py

Your second agent should play Stochastic Simplified Backgammon (SSBG).

Instead of Minimax search and Alpha-Beta pruning, it should use Expectiminimax search.

Here are the major differences/similarities between DSBG and SSBG:

move(state, die1, die2) method will implement Expectimax. Note that unlike DSBG

we do not know the roll of the die and therefore, it is determined immediately before gameMaster.py calls on this method.

Please note that in your search, you need to assume the uniform distribution (equal probabilities) for the 36

possible dice-throw outcomes.

"The Autotrader failed to execute correctly. Contact your course staff for help in debugging this issue.

Make sure to include a link to this page so that they can help you most effectively."

that means that the autograder failed to run your file and you have an error.

|

| F. How to Run a Game

There are two ways to run a game: with the GUI or with only ASCII graphics.

The GUI makes things look colorful and prettier in general, but if you have

any trouble installing pygame, which the GUI needs, you can fall back

to the ASCII graphics option.

Use client.py. This is the main file that runs a game between

two agents. Before running client.py you need to install a package known

as pygame. For a typical Python installation, you can get pygame by

executing the following command-line command.

pip3 install pygameIf your Python environment is controlled by Conda or Anaconda, or if you are not sure, click on this help file. After you have pygame installed, you can run a full game with the imported agents from your agents/ directory. If you want to run one of your own agents (SSBG or DSBG) you need to change one of the players to be your BackgammonPlayer. If you run SSBG, make sure to change the value of DETERMINISTIC to False. Then to run a game enter python3 client.pyIf you want to run one of your own agents (SSBG or DSBG) you need to change one of the players (in the imports) to be your BackgammonPlayer. If you run SSBG, make sure to change the value of DETERMINISTIC to False.

If you are having problems with installing pygame or cannot get the

GUI to work, you still can finish this assignment. In a command line window, simply run

bash run.shand then open log.txt to see the game details. If you want to run one of your own agents (SSBG or DSBG) you need to change one of the players (in the imports) to be your BackgammonPlayer. If you run SSBG, make sure to change the value of DETERMINISTIC to False. |

| G. Commenting your Code

Each of your Python files should begin with a multiline string that gives, on the first line,

your name(s) and UWNetID(s). It should identify the file (name and purpose),

and explain if it is a modified version

of starter code in CSE 415 or is a new file you created from scratch.

Follow reasonable commenting practice in your code. |

H. Report

You'll be turning in a "report" for this assignment in addition to your code (see below).

The report should contain the following sections:

Partner 1 name

Partner 2 name

"Assignment 3 for CSE 415, Autumn 2022, University of Washington"

Deterministic Simplified Backgammon Agent

Who did what for this agent (not required if working alone).

How the static evaluation function works. Explain each component in details.

Any special considerations for Alpha-Beta pruning, such as

ordering of successors best-first.

Other comments on the implementation.

Stochastic Simplified Backgammon Agent

Who did what for this agent (not required if working alone).

Other comments on the implementation.

Partnership retrospective (required for the partnership bonus).

What issues you faced or didn't face related to the

partnership.

Lessons you learned as a result of working in this

partnership -- Partner 1. (Give Partner 1's name and

2 to 10 lines describing AT LEAST ONE lesson.)

Lessons you learned as a result of working in this

partnership -- Partner 1. (Give Partner 2's name and

2 to 10 lines describing AT LEAST ONE lesson.)

Optional additional comments. (Comparing the versions,

insights on the games, or on the agents, for example).

|

I. What to Turn In

Submit the following files to GradeScope.

backgammon_dsbg.py backgammon_ssbg.py A3-Report.pdfIf you are submitting for the early-bird option, submit one GradeScope submission (for the team) that includes these files: backgammon_dsbg.py partnership.txtThe partnership.txt file should include the name and uwnetid of each partner. |

J. Notes about Autograder

The staff is planning to autograde parts of your code. To do this, it is likely that

your files will be imported as modules by the autograder. Your agent should not

automatically start running when the agent module is imported. please do not change or add

any additional import statement to any of the agent files.

Autograder Turn-in:

Red to Bear Off < White to Bear Off < White Hit From Bar < Red Hit From Bar < White About to Win.

Red to Bear Off < White to Bear Off: because the white to bear off is a better state for the white

checkers and therefore, the static eval needs to return a larger value for it!

White Hit From Bar < Red Hit From Bar: In both of these test cases, the checkers are still bearing

off but one of their checkers gets hit by the opponent. For example, consider how you can arrive at white hit from bar:

There is a single red checker on the bar, but the white checker home base is completely full so the red checker

cannot sit back down. So you start bearing off white checkers and the red checker still cannot sit back down.

In this case, you bear off let's say 3 white checkers, which means that you are at a better stage compared to white to bear off.

However, by luck, the red checker rolls and sits back down and actually hits a single white checker(s). So in other words there are

now white checker(s) on the bar and the bearing has stopped until you can bring back that one white checker to the home base. This case

is better than the white to bear off because you already have some checkers removed but you have also been hit so it is worse than red hit from bar.

Furthermore, in order to check that your Alpha-Beta pruning is

actually working, we check the number of states explored as well as

the number of cutoffs made.

For full credit, your Alpha-Beta should be exploring less than 0.8 *

number of states explored by minimax.

|

| K. Academic Integrity The code you submit for this assignment must be written by you and your partner. Code copied or "borrowed" from the web or from other students is not permitted. The staff makes spot checks for violations, and when found, these are reported to the administration. |

| L. Acknowledgements The first backgammon game image is courtesy of Microsoft. The second backgammon game image is courtesy of the Mola family. The GUI was written by Alex Zhou with input from Amir Mola and Steve Tanimoto. |

| Updates and Corrections

If necessary, updates and corrections will be posted here and/or mentioned in class or in

ED.

|