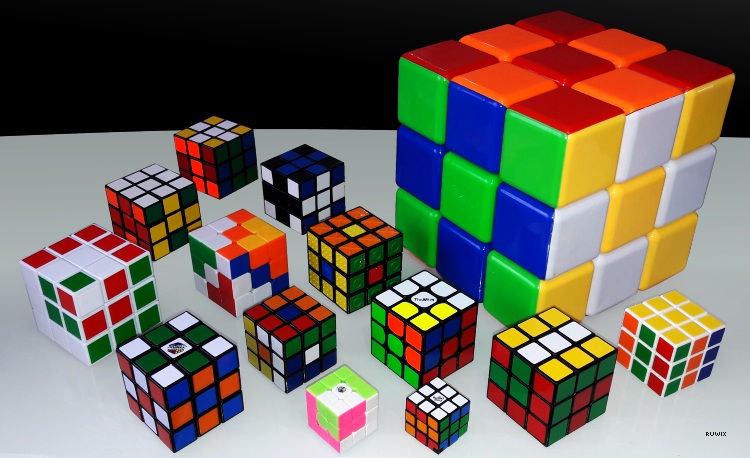

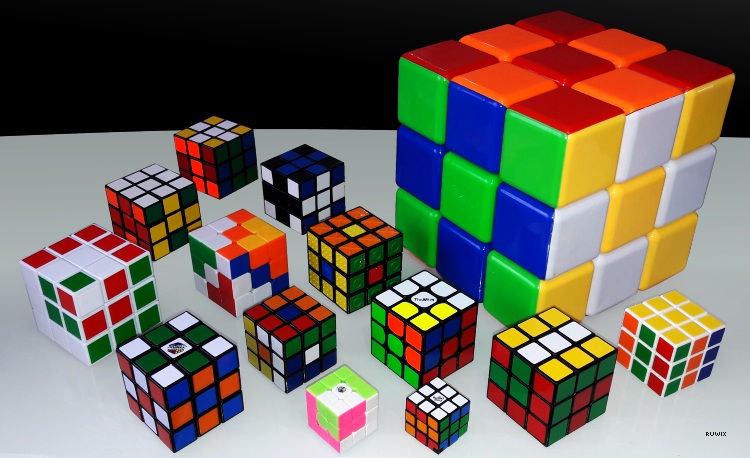

| OPTION 3: Feature-Based Reinforcement Learning for the Rubik Cube.

This option involves applying reinforcement learning to a problem whose state space

is very large.

In this option you will explore the use of Q-Learning in solving

a combinatorially challenging problem: Rubik's Cube.

Image courtesy of ruwix.com.

Because of the very large state space for straight

Rubik's cube, you may consider a variety of simplifications including:

-

Feature-based state representation.

See Poole and Mackworkth,

AI, 2nd ed.

You should definitely take this approach, probably in combination

with some of the following ideas.

-

180-degree moves only. This reduces the branching factor by over half,

leading to a smaller state space.

-

2x2x2 size (similar to the extra credit option in Assignment 3).

Instead of 26 visible cubies (plus an imaginary center cubie),

this version has only 8 cubies, making its state space much smaller.

-

fewer than 6 distinct colors. If there were only one color, all different

rotational configurations of the Rubik's Cube would be identical, and so the

state space would be reduced to a single state. Clearly with some number of

colors c, where 0 < c < 6, we can reduce the size of the state space while

maintaiing some of the challenge of the original puzzle.

-

combinations of heuristics and

Q-Learning, where heuristics may be based on the use of "pattern databases".

By marking some cube faces as "don't care" (i.e., wild-card) colors, we make it

easier to reach a goal state. As in Poker where, for example, a deuce could be

declared "wild" and be part of flush, straight, full house, etc., a wild-card

cubie face could be considered blue, and thus be one of 9 cubie faces contributing

to a monochrome blue face of the whole Rubik's Cube, etc. Another way to think of

a wild-card cubie face is like a blank tile in the game of Scrabble, where the blank

may be interpreted by the player as any normal letter A-Z. With the pattern database

approach, several cubie faces can get a special color, such as black, and we consider

the puzzle solved is all the non-black cubie faces appear on separate Rubik's Cube

faces.

-

constraining allowable starting states.

Note that Rubik's Cube does not have standard starting state, unlike the Towers

of Hanoi. Thus there will be a different solution for each possible state

of the Rubik's Cube puzzle. For an agent to get good at solving in this domain

would mean learning or knowing about the entire state space. If you need to, you

could limit the subset of allowable starting states to a small number, or perhaps

only just 1.

|