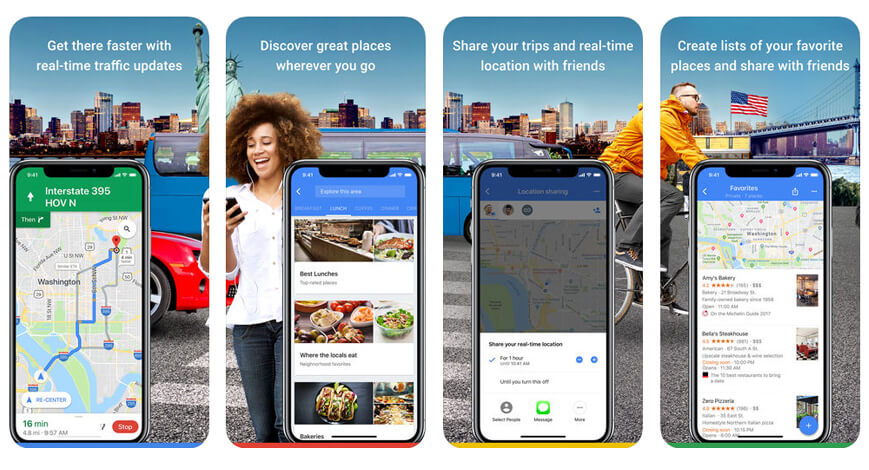

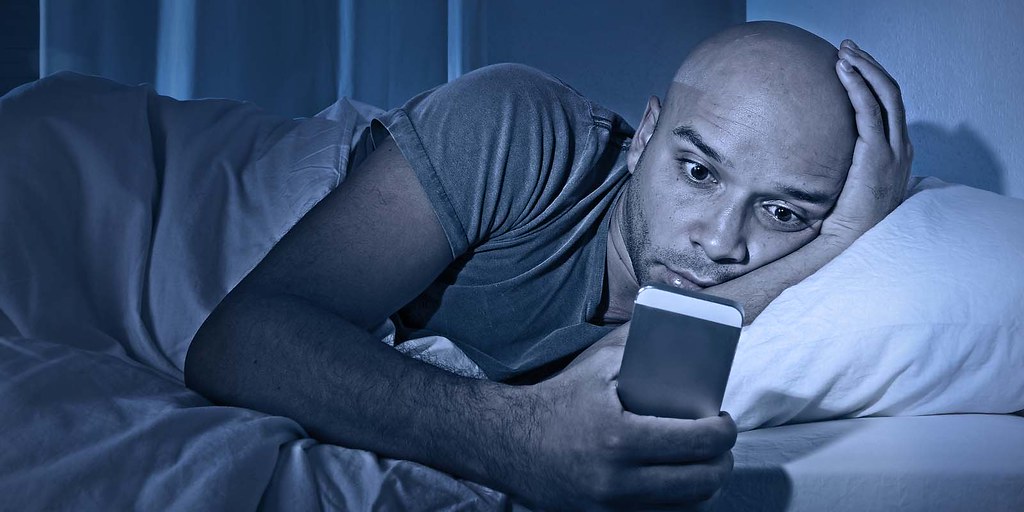

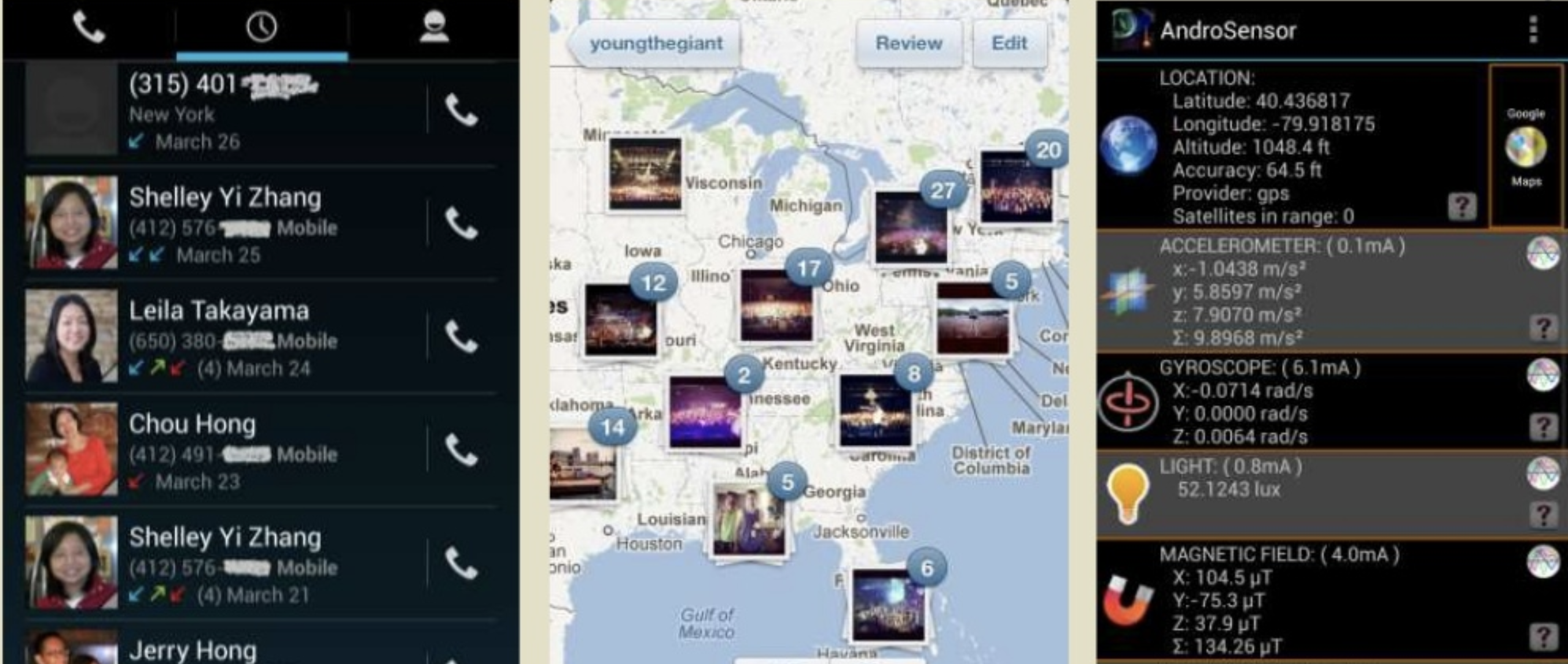

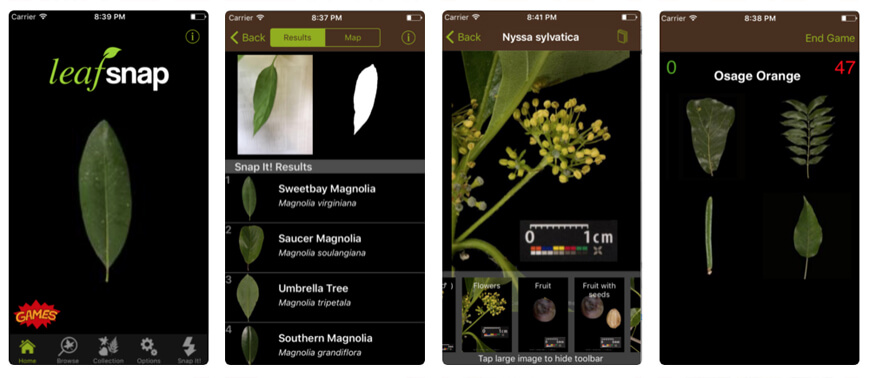

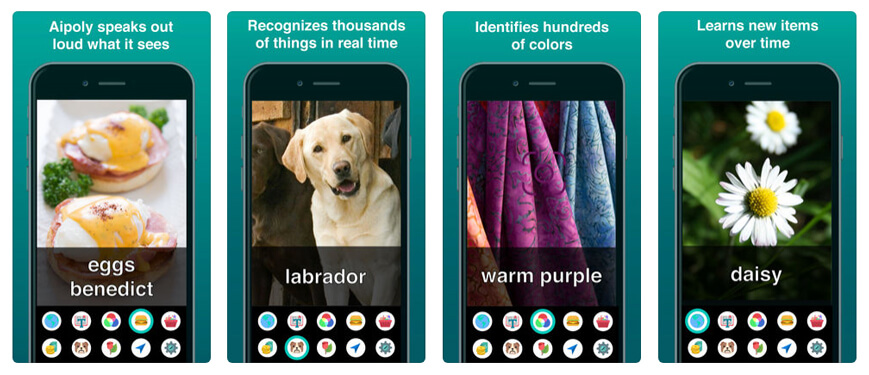

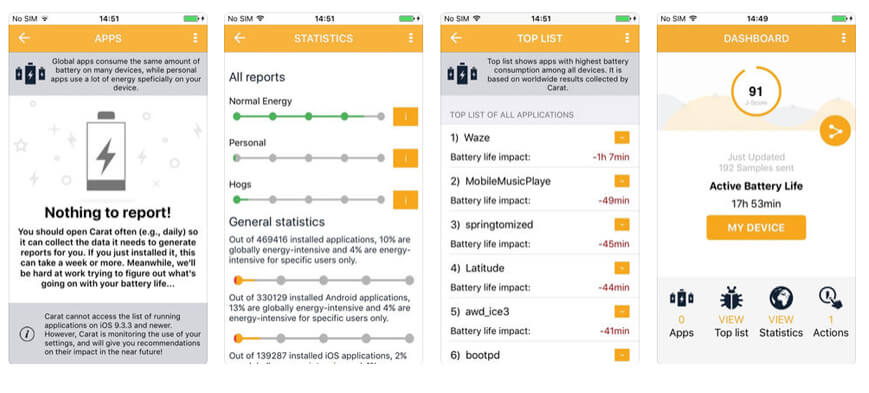

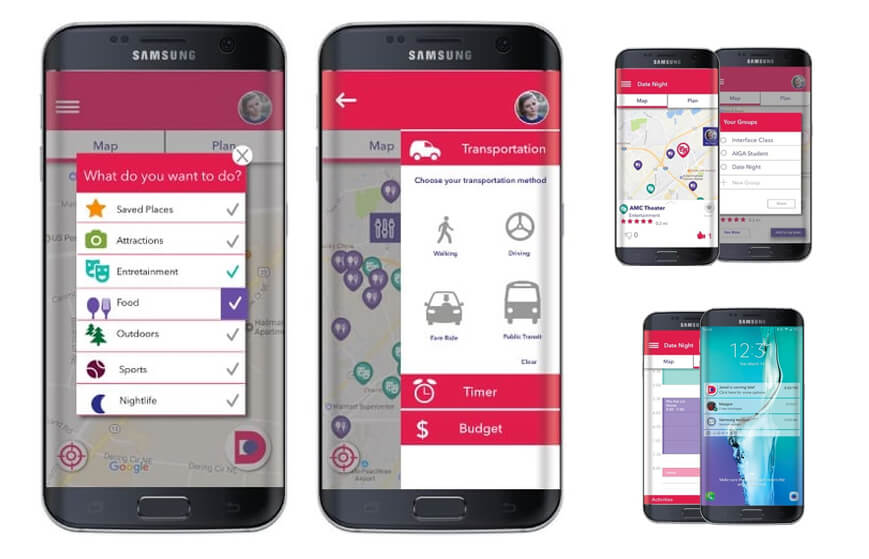

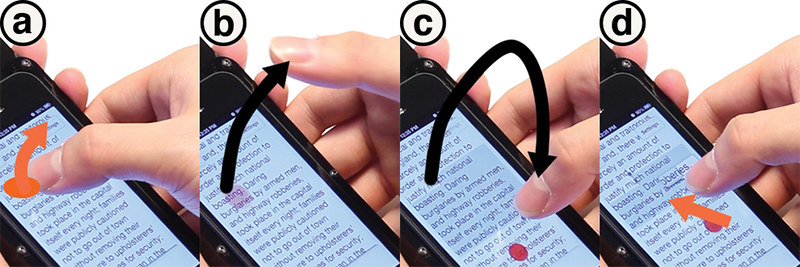

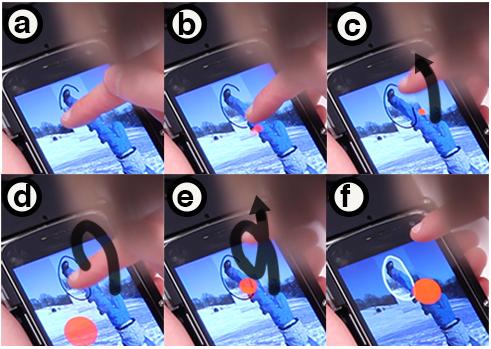

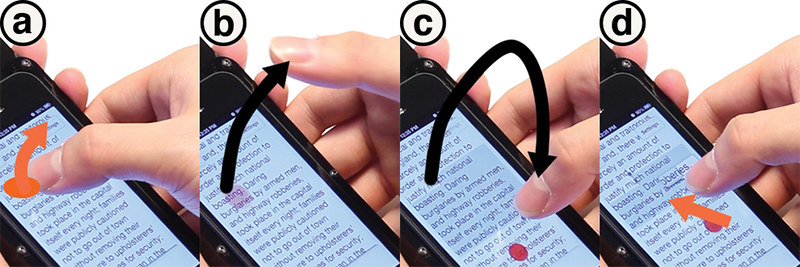

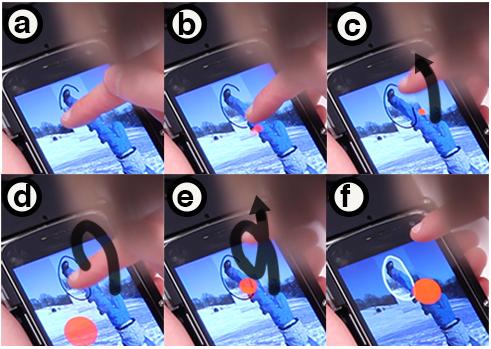

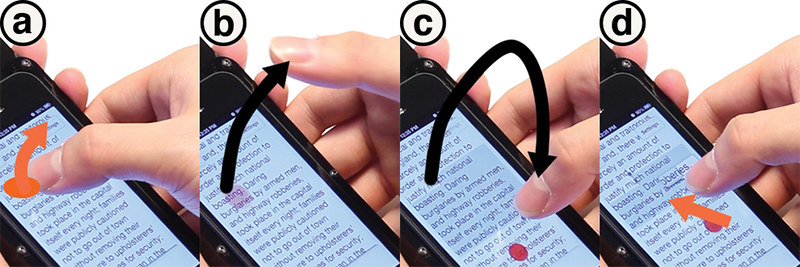

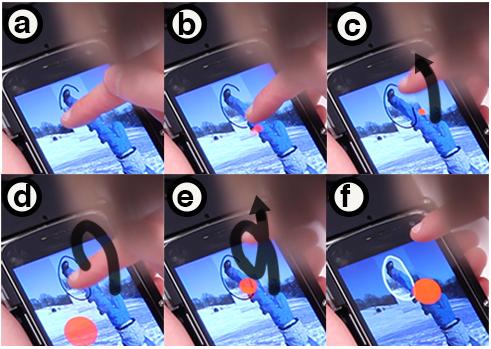

# Google maps - Hall of Fame & Hall of Shame

.left-column[
<br><br>
Do this now: [poll](https://edstem.org/us/courses/32031/lessons/51126/slides/319297)
]

.right-column[  ]

.footnote[Picture from [Machine Learning on your Phone](https://www.appypie.com/top-machine-learning-mobile-apps)]

???

fame/shame neighborhood traffic... shorter commutes...

---
name: inverse
layout: true
class: center, middle, inverse

---
# Implementing Sensing

Lauren Bricker

CSE 340 Winter 23

.footnote[Slides credit: Jason Hong, Carnegie Mellon University [Are my Devices Spying on Me? Living in a World of Ubiquitous Computing](https://www.slideshare.net/jas0nh0ng/are-my-devices-spying-on-me-living-in-a-world-of-ubiquitous-computing)]

---
layout: false

[//]: # (Outline Slide)
# Today's Agenda

**Do this now**: Answer the [poll](https://edstem.org/us/courses/32031/lessons/51126/slides/319297) in Ed

- Administrivia
  - Undo code and video due Fri 24-Feb, 10pm
  - Undo reflection due Sun 26-Feb, 10pm
  - Final Project out Fri 24-Feb

- Sensing and Reacting to the User
  - Discuss useful applications of sensing
  - Investigate Sensing and Location basics
  - Implement sensing in practice

---
# Smartphones

.left-column[
[Poll](https://edstem.org/us/courses/32031/lessons/51126/slides/319297): How many of you

- sleep with your phone?
- check your phone first thing in the morning?
- use your phone in the bathroom?
]

.right-column[  ]

---
# Smartphones

Fun Facts about Millennials

.left-column[
![:fa thumbs-down] 83% sleep with phones
]

.right-column[  ]

---
count: false
# Smartphones

Fun Facts about Millennials

.left-column[
![:fa thumbs-down] 83% sleep with phones

![:fa thumbs-down] 90% check first thing in morning
]

.right-column[  ]

---
count: false
# Smartphones

Fun Facts about Millennials

.left-column[
![:fa thumbs-down] 83% sleep with phones

![:fa thumbs-down] 90% check first thing in morning

![:fa thumbs-down] 1 in 3 use in bathroom
]

.right-column[  ]

---
# Smartphone Data is Intimate

| Who we know           | Sensors               | Where we go   |
|-----------------------|-----------------------|---------------|
| (contacts + call log) | (accel, sound, light) | (gps, photos) |

--
count: false

And yet it is very useful!

---
# Useful Applications of Sensing

.footnote[[LeafSnap](http://leafsnap.com/) uses computer vision to identify trees by their leaves]

---
# Useful Applications of Sensing

.footnote[[Vision AI](https://www.aipoly.com/) uses computer vision to identify images for the Blind and Visually Impaired]

---
# Useful Applications of Sensing

.footnote[[Carat: Collaborative Energy Diagnosis](http://carat.cs.helsinki.fi/) uses machine learning to save battery life]

---
# Useful Applications of Sensing

.footnote[[Imprompdo](http://imprompdo.webflow.io/) uses machine learning to recommend activities to do, both fun and todos]

---
# Example: COVID-19 Contact Tracing

.left-column-half[  ]

.right-column-half[
- Install an app on your phone
- Turn on Bluetooth
- Keep track of every Bluetooth ID you see
- Sends Bluetooth ID (securely).
- Will only notify people if you report you contracted COVID
- That's when others are notified (securely and privately) that they were in the vicinity of someone who contracted COVID
]

---
# Context Aware Apps

.left-column-half[  ]

.right-column-half[
This is a **Context-Aware** app
- The app is using on-board sensors (not user-initiated input) to capture data
- The app "learns" information about the user
]

---
# Context Aware Apps

.left-column-half[  ]

.right-column-half[
Context-aware computing is:

“software that examines and reacts to an individual’s changing context.”
- Schilit, Adams, & Want 1994

“...aware of its user’s state and surroundings, and help it adapt its behavior”
- Satyanarayanan 2002
]

.footnote[From [Intro to Context-Aware Computing](https://www.cs.cmu.edu/~jasonh/courses/ubicomp-sp2007/slides/12-intro-context-aware.pdf)]

---
# Types of Context-Aware apps

.left-column[  ]

.right-column[
**Capture and Access**

Apps that record the events around us, help us synthesize and analyze the data, and then do something with that information.
]

--
count: false

.right-column[
What Capture and Access apps do you use or can you think of?
]

---
# Types of Context-Aware apps

.left-column[  ]

.right-column[
**Capture and Access** research examples:

- .red[Food diarying] and nutritional awareness via receipt analysis [Ubicomp 2002]
- .bold.red[Audio Accessibility] for deaf people by supporting mobile sound transcription [Ubicomp 2006, CHI 2007]
- .red[Citizen Science] volunteer data collection in the field [CSCW 2013, CHI 2015]
- .red[Air quality assessment] and visualization [CHI 2013]
- .red[Coordinating between patients and doctors] via wearable sensing of in-home physical therapy [CHI 2014]
]

---
# Types of Context-aware apps

.left-column[  ]

.right-column[
Capture and Access<BR>
**Adaptive Services**

Computing systems that can change themselves in response to their environment.
]

--
count: false

.right-column[
What Adaptive Services can you think of?
]

---
# Types of Context-aware apps

.left-column[  ]

.right-column[
Capture and Access<BR>
**Adaptive Services** research examples:

- .red[Adaptive Text Prediction] for assistive communication devices [TOCHI 2005]
- .red[Location prediction] based on prior behavior [Ubicomp 2014]
- .bold.red[Pro-active task access] on lock screen based on predicted user interest [MobileHCI 2014]
]

---
# Types of Context-aware apps

.left-column[   ]

.right-column[
Capture and Access<BR>
Adaptive Services<BR>
**Novel Interaction**

New and different ways to interact with a computing device.
]

--
count: false

.right-column[
What Novel Interactions can you think of?
]

---
# Types of Context-aware apps

.left-column[   ]

.right-column[
Capture and Access<BR>
Adaptive Services<BR>
**Novel Interaction** research examples:

- .red[Cord Input] for interacting with mobile devices [CHI 2010]
- .red[Smart Watch Intent to Interact] via twist'n'knock gesture [GI 2016]
- .red[VR Intent to Interact] via sensing body pose, gaze and gesture [CHI 2017]
- .red[Around Body interaction] through gestures with the phone [MobileHCI 2014]
- .red.bold[Around phone interaction] through gestures combining on and above phone surface [UIST 2014]
]

---
# Types of Context-aware apps

.left-column[   ]

.right-column[
![:youtube Interweaving touch and in-air gestures using in-air gestures to segment touch gestures, H5niZW6ZhTk]
]

---
# Types of Context-aware apps

.left-column[  ]

.right-column[
Capture and Access<BR>
Adaptive Services (changing operation or timing)<BR>
Novel Interaction<BR>
**Behavioral Imaging**

Using sensing data and modeling techniques to measure and analyze human behavior.
]

--
count: false

.right-column[
What Behavioral Imaging can you think of?
]

---
# Types of Context-aware apps

.left-column[  ]

.right-column[
Capture and Access<BR>
Adaptive Services (changing operation or timing)<BR>
Novel Interaction<BR>
**Behavioral Imaging**

- .red[Detecting and Generating Safe Driving Behavior] by using inverse reinforcement learning to create human routine models [CHI 2016, 2017]
- .red[Detecting Deviations in Family Routines] such as being late to pick up kids [CHI 2016]
]

---
# Contact tracing

Question (answer on [Ed](https://edstem.org/us/courses/32031/lessons/51126/slides/320185)):

What context-aware classification would you give the contact tracing app?

- Capture and Access
- Adaptive Services
- Novel Interaction
- Behavioral Imaging

--
count: false

Answer: Capture and Access

We are capturing information about who you've been around, and indirectly allowing someone else to access that information.

---
# How do these systems work?

.left-column50[
## Old style of app design

<div class="mermaid">
graph TD
I(Input) --Explicit Interaction--> A(Application)
A --> Act(Action)

classDef normal fill:#e6f3ff,stroke:#333,stroke-width:2px;
class U,C,A,I,S,E,Act,Act2 normal
</div>
]

--
count: false

.right-column50[
## New style of app design

<div class="mermaid">
graph TD
U(User) --Implicit Sensing--> C(Context-Aware Application)
S(System) --Implicit Sensing--> C
E(Environment) --Implicit Sensing--> C
C --> Act2(Action)

classDef normal fill:#e6f3ff,stroke:#333,stroke-width:2px;
class U,C,A,I,S,E,Act,Act2 normal
</div>
]

---
.left-column-half[
## Types of Sensors

| | | |
|--|--|--|
| Clicks | Key presses | Touch |
| Microphone | Camera | IOT devices |
| Accelerometer | Rotation | Screen |
| Applications | Location | Telephony |
| Battery | Magnetometer | Temperature |
| Bluetooth | Network Usage | Traffic |
| Calls | Orientation | WiFi |
| Messaging | Pressure | Processor |
| Gravity | Proximity | Humidity |
| Gyroscope | Light | Multi-touch |
| ... | ... | ... |
]

.right-column-half[
<br><br>

- Which of these are Event Based?
- Which of these are "Sampled"?
]

???

---
.left-column-half[
## Types of Sensors

| | | |
|--|--|--|
| Clicks | Key presses | Touch |
| Microphone | Camera | IOT devices |
| Accelerometer | Rotation | Screen |
| Applications | Location | Telephony |
| Battery | Magnetometer | Temperature |
| Bluetooth | Network Usage | Traffic |
| Calls | Orientation | WiFi |
| Messaging | Pressure | Processor |
| Gravity | Proximity | Humidity |
| Gyroscope | Light | Multi-touch |
| ... | ... | ... |
]

.right-column-half[
## Contact Tracing

Other than Bluetooth, what sensors might be useful for contact tracing? Why?
]

---
# Sensing: Categories of Sensors

* Motion Sensors
  * Measure acceleration forces and rotational forces along three axes
  * Include accelerometers, gravity sensors, gyroscopes, and rotation vector sensors
  * Accelerometers and gyroscopes are generally HW based; gravity, linear acceleration, rotation vector, significant motion, step counter, and step detector sensors may be HW or SW based
* Environmental Sensors
  * Measure relative ambient humidity, illuminance, ambient pressure, and ambient temperature
  * All four sensors are HW based
* Position Sensors
  * Determine the physical position of the device
  * Include orientation sensors, magnetometers, and proximity sensors
  * Geomagnetic field sensor and proximity sensor are HW based

---
# Snapshots vs Fences

Listeners are used to receive sensor or location updates at different intervals
- This is like taking a snapshot of the sensor or location at a point in time.

Fences (or Geofences) are a way to create an area of interest around a specific location. The listener is called any time a condition is true.

---
# 🎟️ Exit Ticket: Contact tracing

Question (answer on [Ed](https://edstem.org/us/courses/32031/lessons/51126/slides/320185)):

Would you use Snapshots or Fences for the contact tracing app?
--
count: false

Answer: Fence

We want to be notified about *every* contact so we can record it

---
# Sensing: Android Sensor Framework

`SensorManager`
- Provides methods for accessing and listing sensors, and for registering and unregistering sensor event listeners.

`Sensor`
- A class to create an instance of a specific sensor. Can also be used to find a sensor's capabilities.

`SensorEvent`
- Contains information about a specific event from a sensor: the raw sensor data, the type of sensor that generated the event, the accuracy of the data, and the timestamp for the event.

`SensorEventListener`
- Used to create callback methods to receive notifications about sensor events.

.footnote[[Sensor Framework](https://developer.android.com/guide/topics/sensors/sensors_overview#sensor-framework)]

---
# Sensor Activity

The following slides are related to the [Sensing and Location Activity](https://gitlab.cs.washington.edu/cse340/exercises/cse340-sensing-and-location)

Clone this repo if you have not already.

---
# Sensor Activity: SensorManager

Getting the `SensorManager`

```java
SensorManager mSensorManager = (SensorManager) getSystemService(Context.SENSOR_SERVICE);
```

Getting the list of all `Sensor`s from the manager

```java
List<Sensor> sensors = mSensorManager.getSensorList(Sensor.TYPE_ALL);
```

Getting a `Sensor` from the `SensorManager`. The `Sensor` type is a [class constant](https://developer.android.com/guide/topics/sensors/sensors_overview#sensors-intro) in `Sensor`

```java
Sensor sensor = mSensorManager.getDefaultSensor(/* type of sensor */);
```

---
# Sensor Activity: Sensor listeners

Create a `SensorEventListener` for use with the callback. For example:

```java
public class SensorActivity extends AbstractMainActivity implements SensorEventListener {
    // Called each time a registered sensor reports a new reading
    @Override
    public void onSensorChanged(SensorEvent event) {
        ...
        switch (event.sensor.getType()) {
            case Sensor.TYPE_AMBIENT_TEMPERATURE:
                ...
            case Sensor.TYPE_LINEAR_ACCELERATION:
                ...
        }
    }

    // Called when the accuracy of a registered sensor changes
    @Override
    public void onAccuracyChanged(Sensor sensor, int accuracy) {
        // if used
    }
}
```

You could use any of the ways to create a callback discussed earlier in this class.

---
# Registering/Unregistering the sensor

Registering is generally done in `onResume`

```java
Sensor sensor = mSensorManager.getDefaultSensor(/* type of sensor */);
// DELAY is a sampling rate, e.g. SensorManager.SENSOR_DELAY_NORMAL
mSensorManager.registerListener(this, sensor, DELAY);
```

Make sure to unregister the listener in `onPause` so you're not working in the background (unless that's the expected behavior)

```java
mSensorManager.unregisterListener(this);
```

---
# End of Deck
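
---
# Sketch: Snapshots vs Fences in plain Java

The snapshot/fence distinction from the exit ticket can be tried out without the Android SDK. The sketch below is only an illustration: `SnapshotVsFence`, `ReadingListener`, the simulated distances, and the 2 m threshold are all hypothetical names and values, not part of any Android API or of the real contact tracing apps.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.DoublePredicate;

/** Plain-Java illustration; every name here is hypothetical, not Android API. */
public class SnapshotVsFence {

    /** Plays the role of a listener callback. */
    interface ReadingListener {
        void onReading(int timeStep, double value);
    }

    /** Snapshot style: deliver every n-th reading, whatever its value. */
    static void snapshots(double[] readings, int everyN, ReadingListener listener) {
        for (int t = 0; t < readings.length; t += everyN) {
            listener.onReading(t, readings[t]);
        }
    }

    /** Fence style: deliver a reading whenever the condition is true for it. */
    static void fence(double[] readings, DoublePredicate condition, ReadingListener listener) {
        for (int t = 0; t < readings.length; t++) {
            if (condition.test(readings[t])) {
                listener.onReading(t, readings[t]);
            }
        }
    }

    /** Collects the time steps at which the snapshot listener was called. */
    static List<Integer> snapshotTimes(double[] readings, int everyN) {
        List<Integer> times = new ArrayList<>();
        snapshots(readings, everyN, (t, value) -> times.add(t));
        return times;
    }

    /** Collects the time steps at which the fence listener was called. */
    static List<Integer> fenceTimes(double[] readings, DoublePredicate condition) {
        List<Integer> times = new ArrayList<>();
        fence(readings, condition, (t, value) -> times.add(t));
        return times;
    }

    public static void main(String[] args) {
        // Simulated distance (meters) to another phone over eight time steps (made-up data).
        double[] distance = {9.0, 7.5, 1.2, 8.0, 6.0, 1.8, 1.5, 9.5};

        // Sampling every 3rd step sees steps 0, 3 and 6, so it misses two close contacts.
        System.out.println("snapshot every 3 steps: " + snapshotTimes(distance, 3));

        // The fence fires at every step where the other phone is closer than 2 m.
        System.out.println("fence (closer than 2 m): " + fenceTimes(distance, d -> d < 2.0));
    }
}
```

Running `main` prints `[0, 3, 6]` for the snapshots and `[2, 5, 6]` for the fence. Only the fence reports all three close contacts, which is why the exit ticket answer is Fence.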