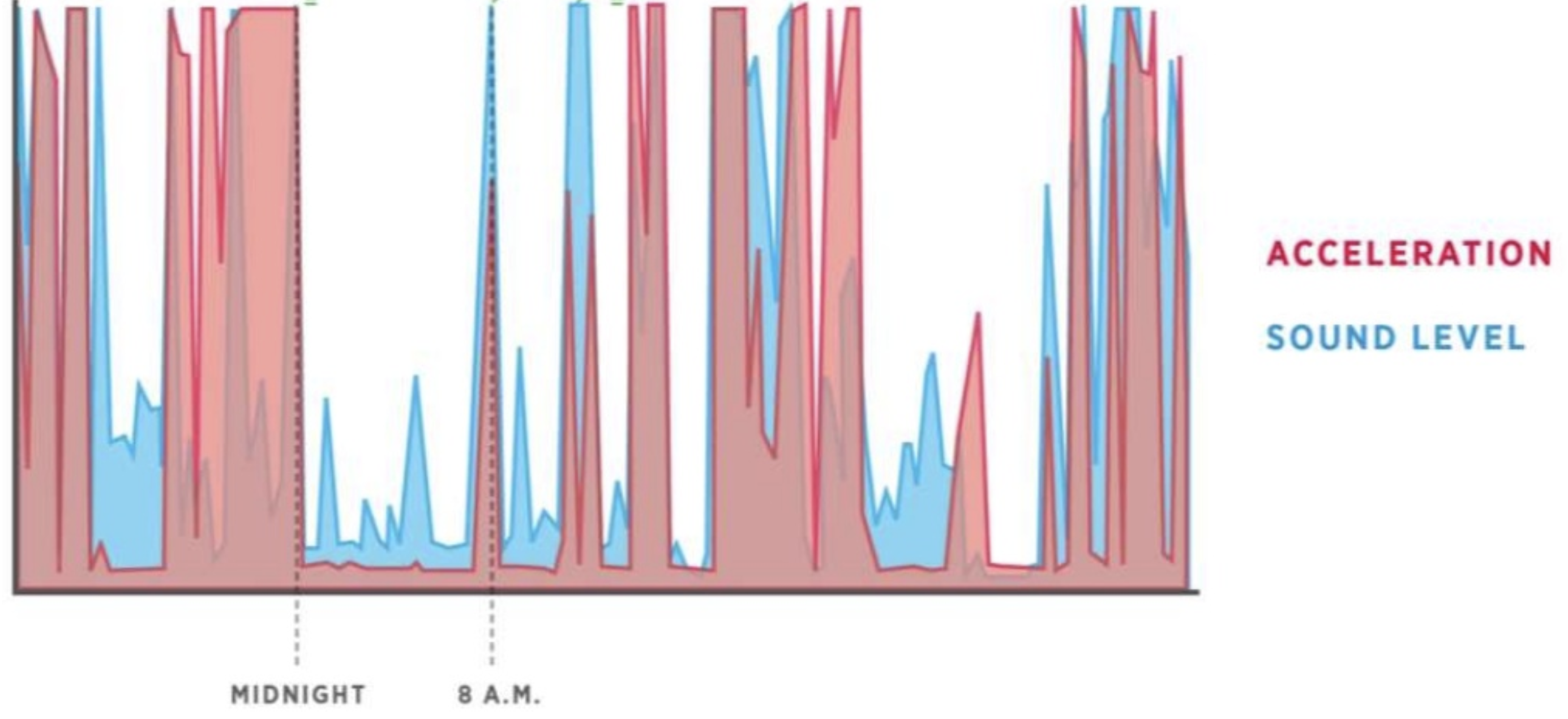

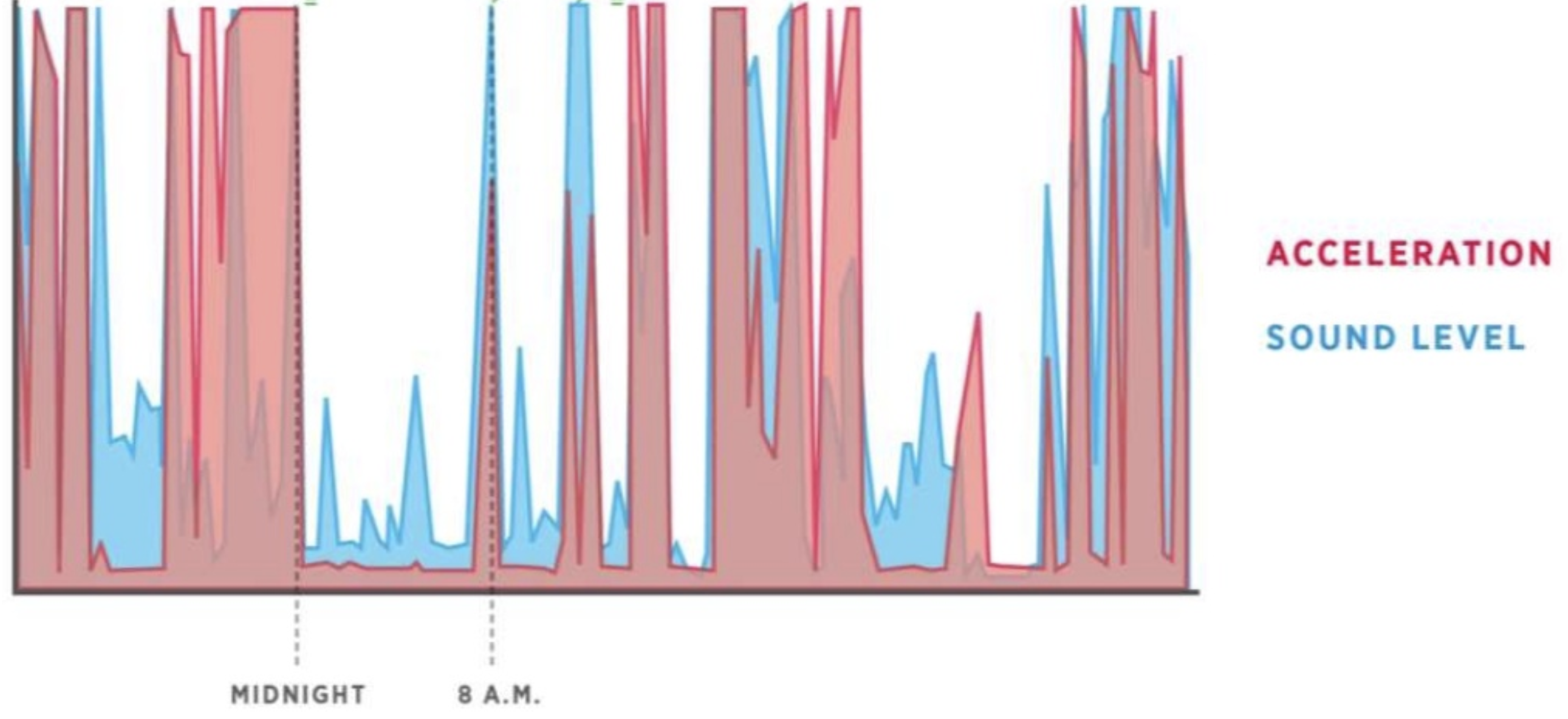

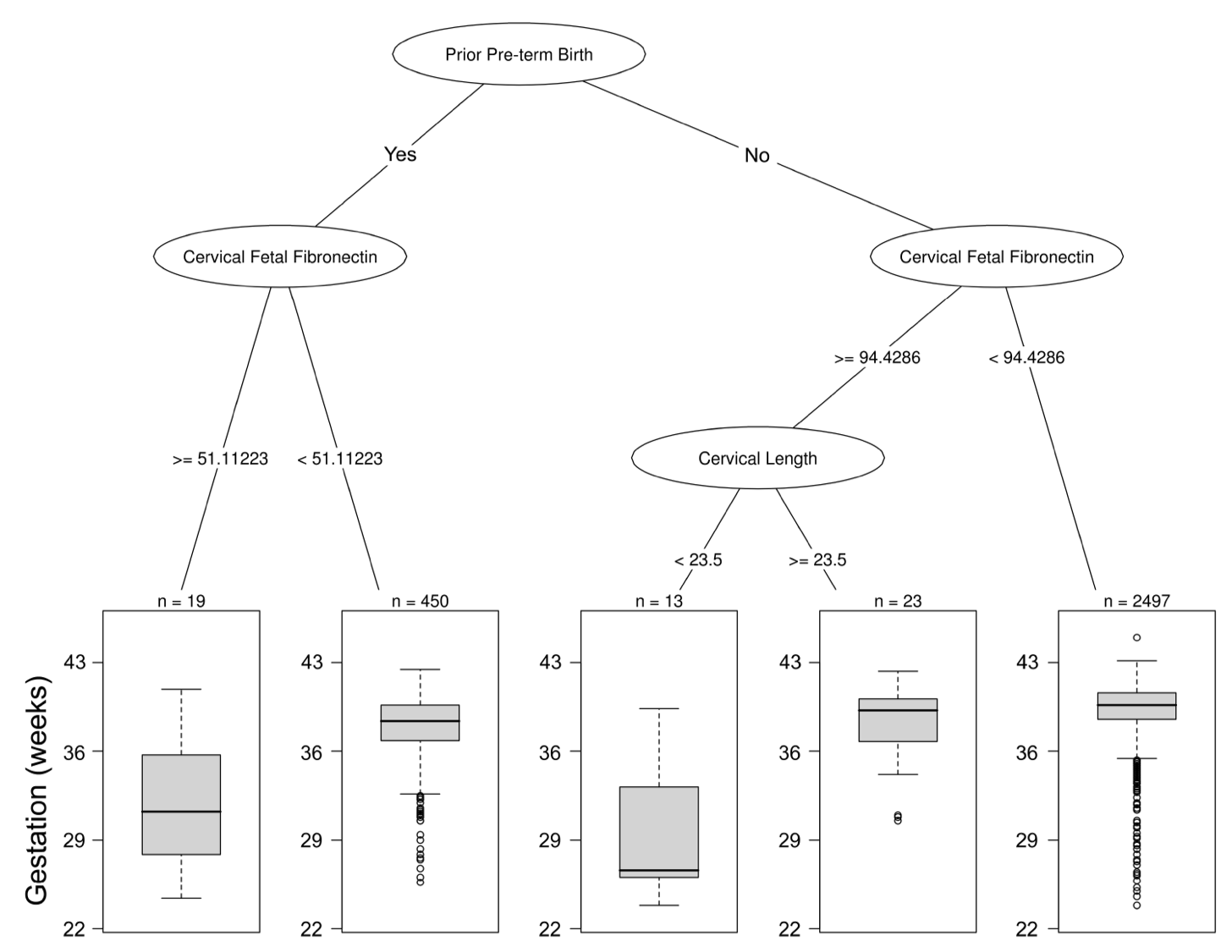

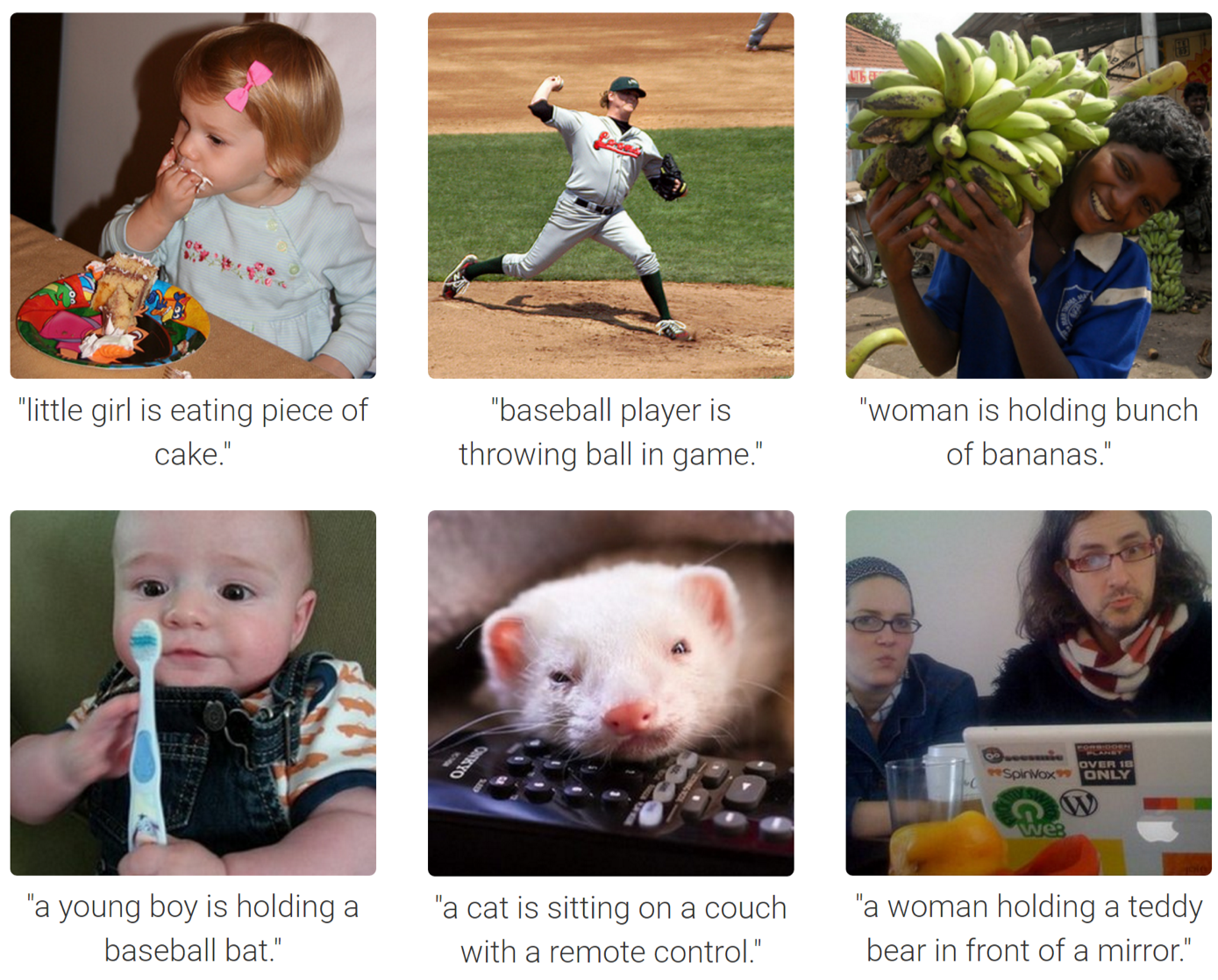

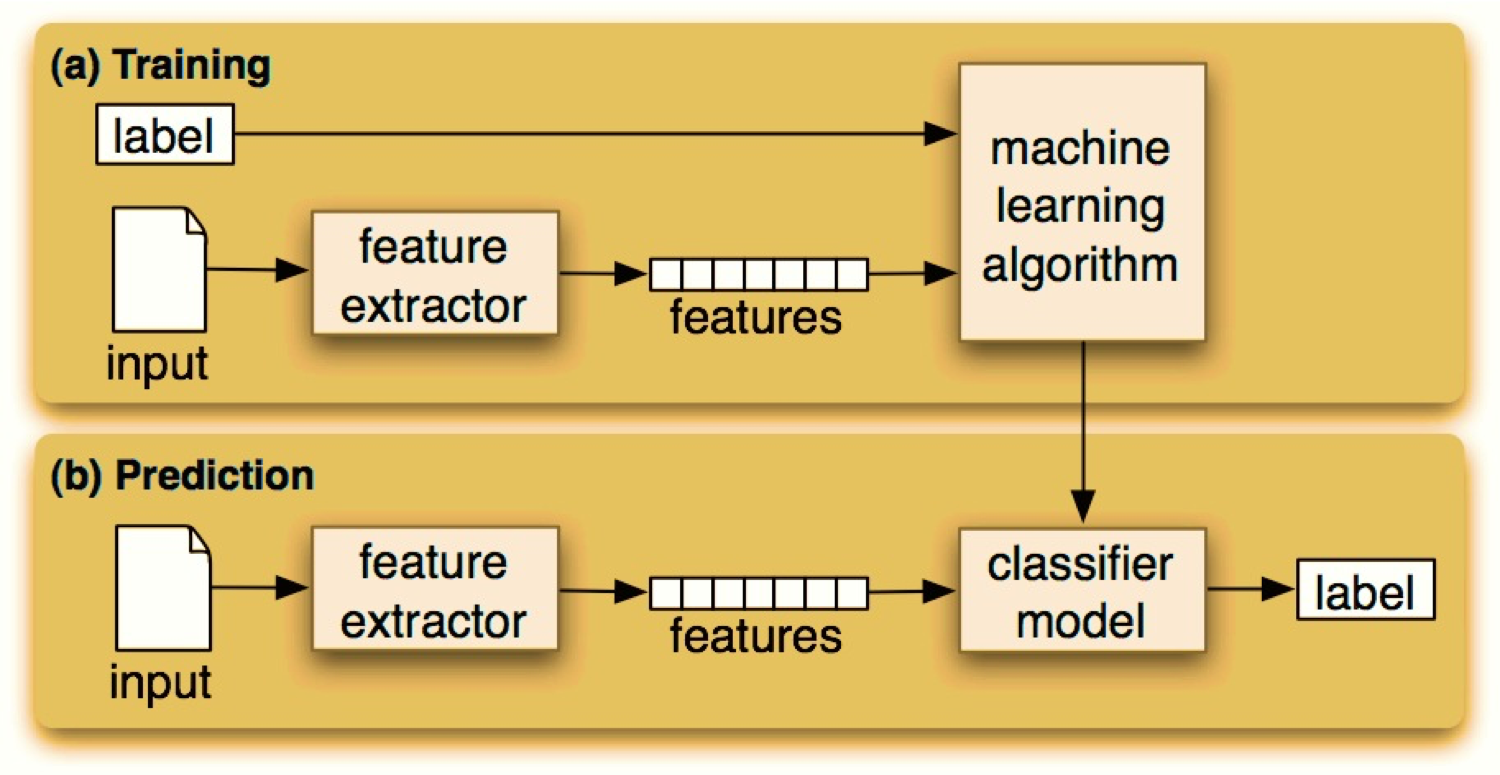

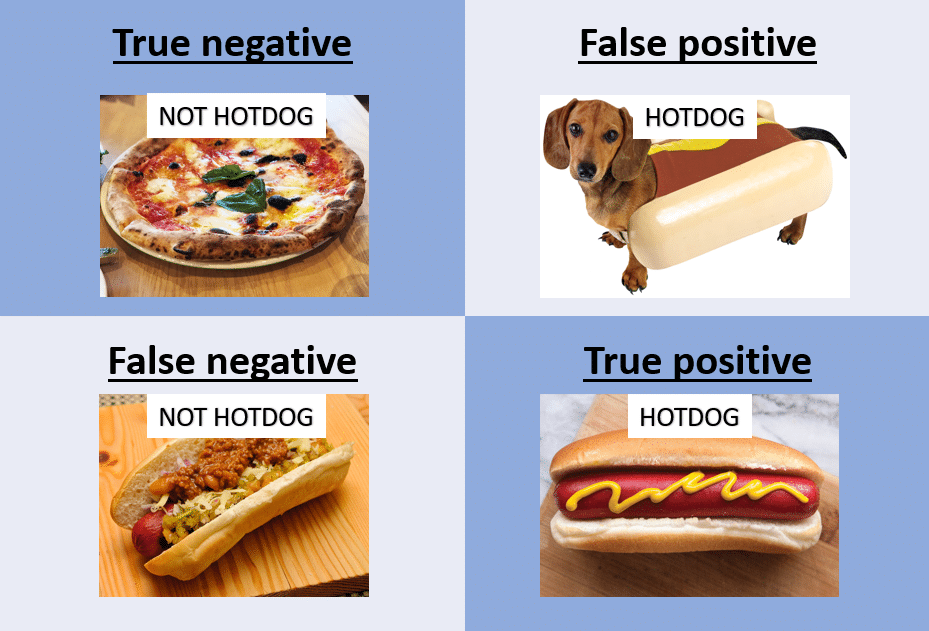

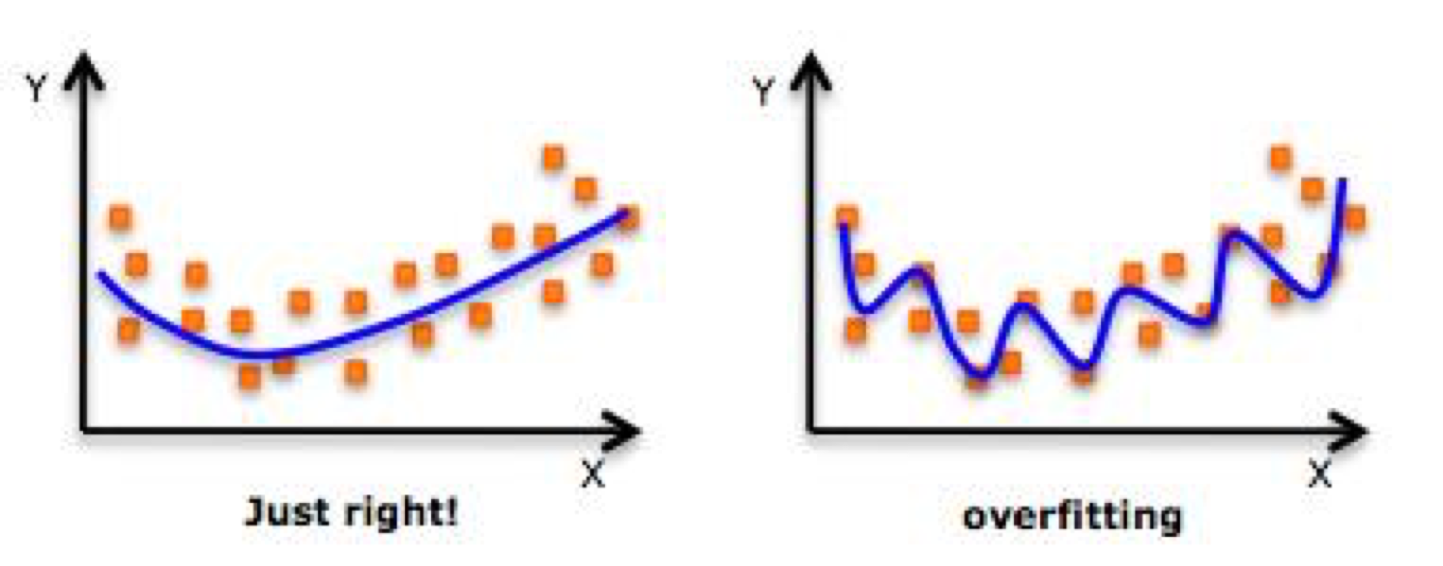

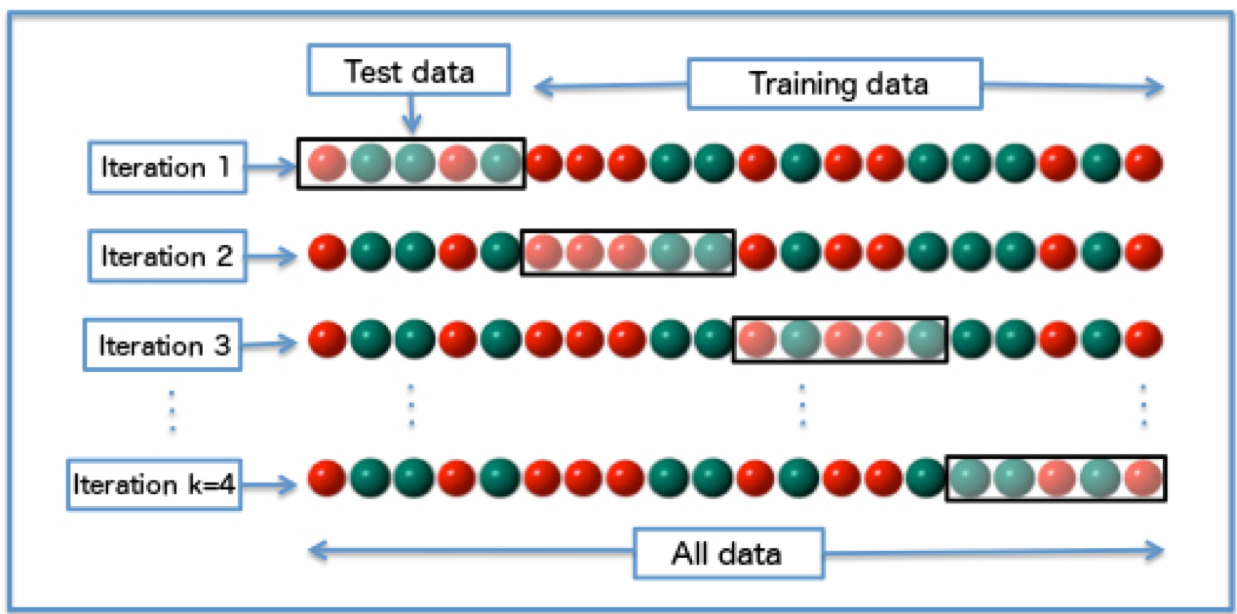

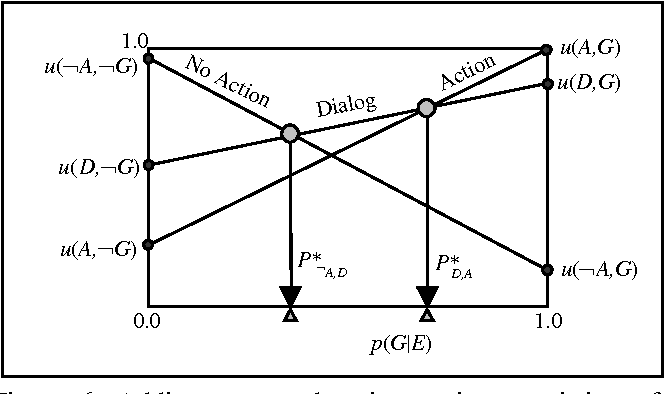

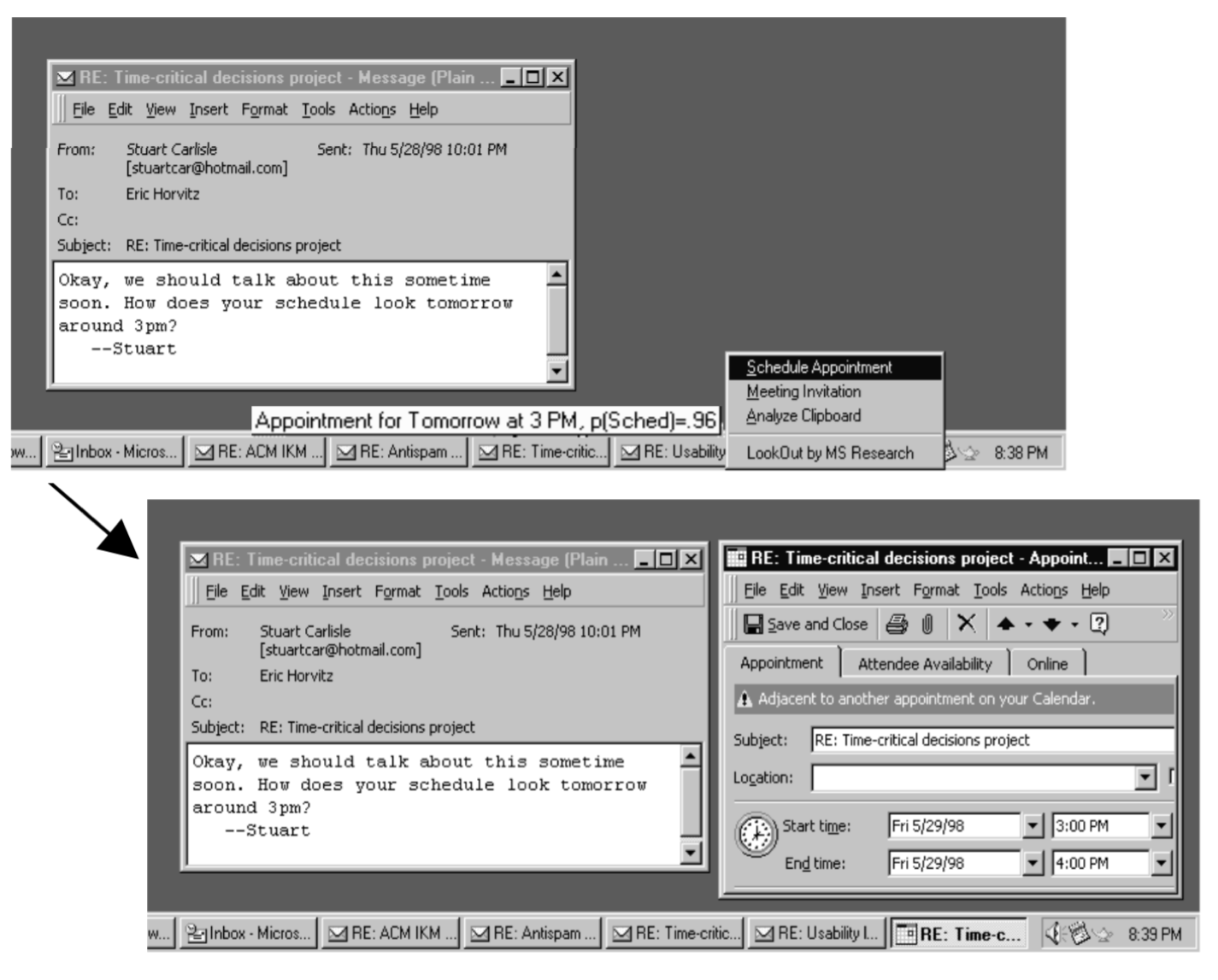

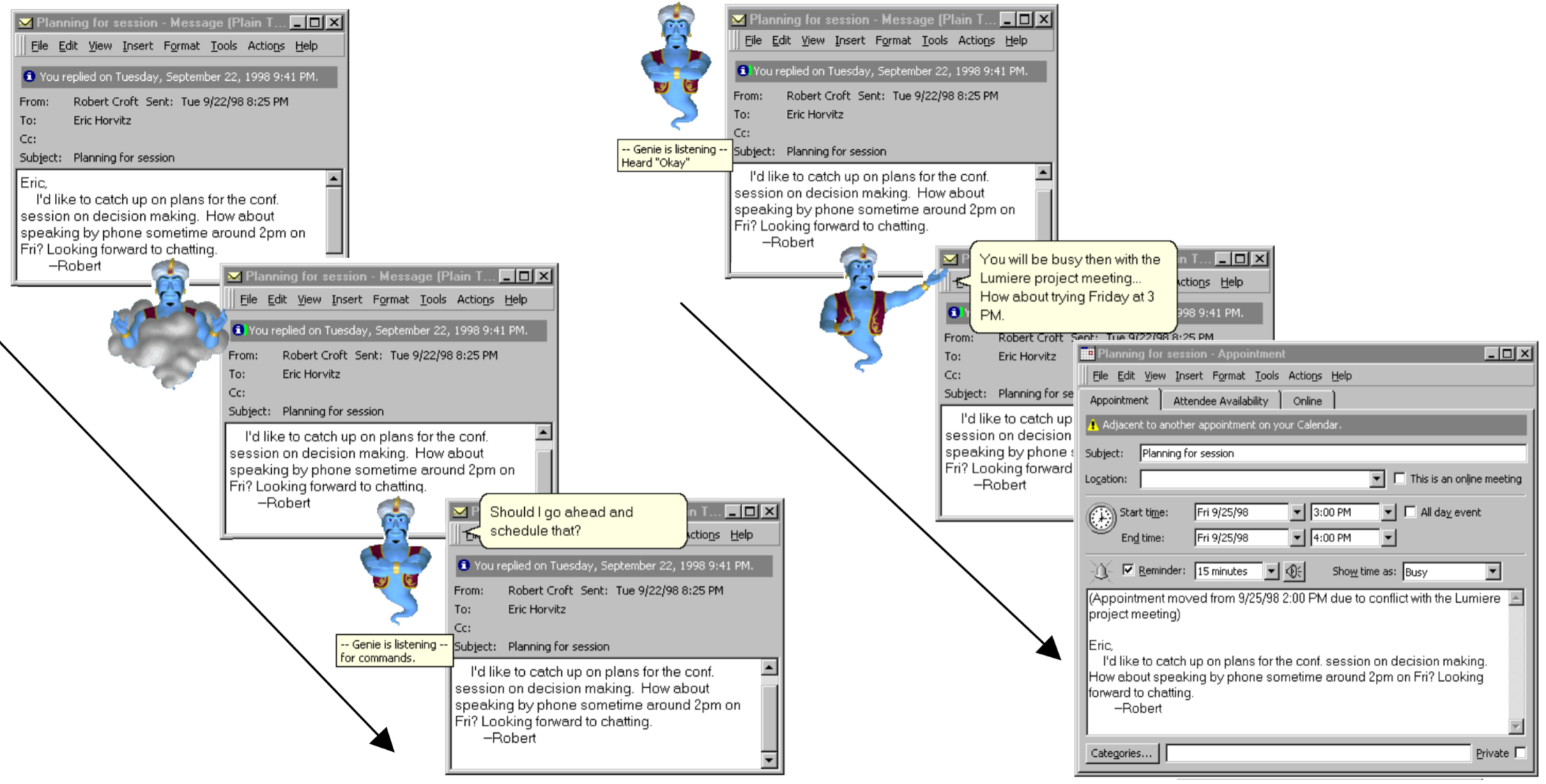

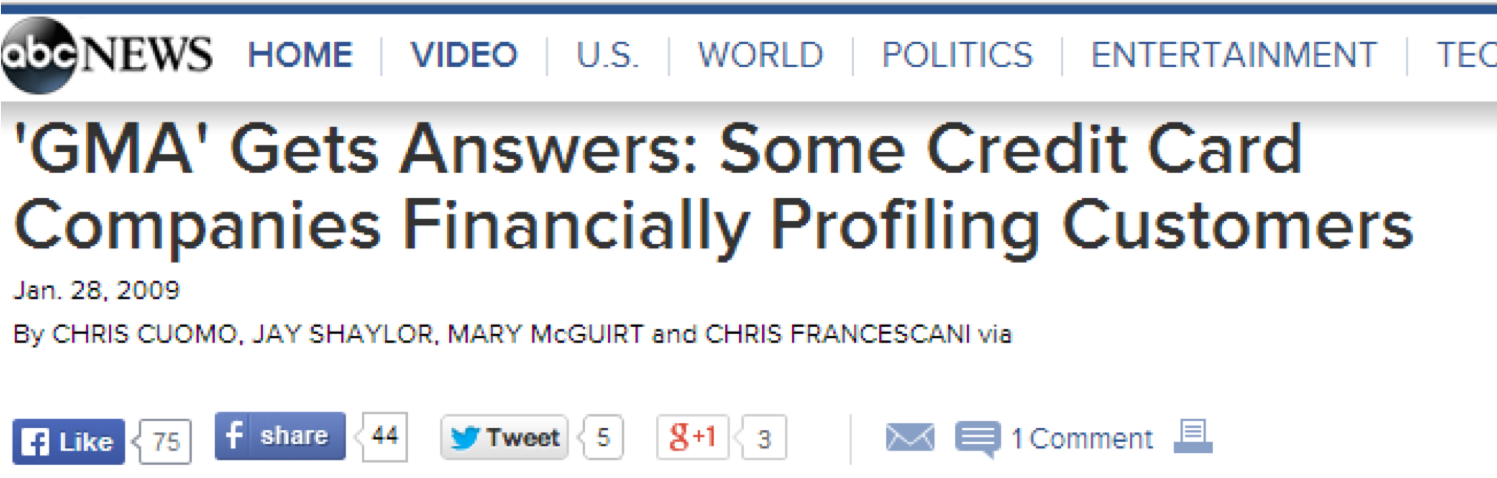

name: inverse layout: true class: center, middle, inverse --- # Sensing and Machine Learning Lauren Bricker<BR><BR> Special thanks to Hunter Schafer<BR> for reviewing the slides CSE 340 Winter 23 --- layout: false [//]: # (Outline Slide) # Today's Agenda - Do this now - Clone the [CSE340 Intent Practice](https://gitlab.cs.washington.edu/cse340/exercises/cse340-intent-practice) repo - Administrivia - Final project info out Fri 24-Feb - Final project design extra point opportunity (Wednesday) - Final project final design due Thursday (after design review in section) - Learning goals - Machine learning with sensing data - Define (briefly) Machine learning (ML) - Determine how ML relates to context-aware apps - Consider the ethical and security implications of context-aware apps - [Intents](/courses/cse340/23wi/slides/wk09/intents.html) --- .left-column-half[ ## Types of Sensors - Review | | | | |--|--|--| | Clicks | Key presses | Touch | | Microphone | Camera | IOT devices | |Accelerometer | Rotation | Screen| |Applications | Location | Telephony| |Battery | Magnetometer | Temperature| |Bluetooth | Network Usage | Traffic| |Calls | Orientation | WiFi| |Messaging | Pressure | Processor| |Gravity | Proximity | Humidity | |Gyroscope | Light | Multi-touch | | ... | ... | ....| ] .right-column-half[ <br><br>  ] --- # Review Sensing: Categories of Sensors * Motion Sensors * Measure acceleration forces and rotational forces along three axes * Includes accelerometers, gravity sensors, gyroscopes, and rotational vector sensor * Accelerometers and gyroscope are generally HW based, Gravity, linear acceleration, rotation vector, significant motion, step counter, and step detector may be HW or SW based * Environmental Sensors * Measures relative ambient humidity, illuminance, ambient pressure, and ambient temperature * All four sensors are HW based * Position Sensors * Determine the physical position of the device. * Includes orientation, magnetometers, and proximity sensors * Geomagnetic field sensor and proximity sensor are HW based --- # Implementing Sensing: Using Data .left-column50[ ![:fa bed, fa-7x] ] .right-column50[ ## In class exercise How might you recognize when a person with a device is sleeping? - What patterns are we trying to recognize? - What sensors are used? ] --- # Implementing Sensing: Using Data .left-column50[  ] .right-column50[ ## In class exercise - What patterns are we trying to recognize? - Length of time sleeping - Sleep quality - What sensors are used? - How to interpret sensors? ] --- # How do we program this? .left-column50[  ] .right-column50[ 1. Write down some rules 2. Implement them ] --- # How do we program this? Old Approach: Create software by hand - Use libraries (like JQuery) and frameworks - Create app content, do layout, code up decision making functionality - Deterministic (code does what you tell it to) New Approach: Collect data and train algorithms - Will still do the above, and will have some functionality based on ML - *Collect lots of examples and train a ML algorithm* - *Think about the data in a statistical way* --- # This is *Machine Learning* Machine Learning is often used to process sensor data - ML is one area of Artificial Intelligence - ML has been getting a lot of press The goal of machine learning is to develop systems that can improve performance with more experience - Can use "example data" as "experience" - Uses these examples to discern patterns - And to make predictions --- # Two main approaches ![:fa eye] *Supervised learning* (we have lots of examples of what should be predicted) -- count: false ![:fa eye-slash] *Unsupervised learning* (e.g. clustering into groups and inferring what they are about) -- count: false ![:fa low-vision] Can combine these (semi-supervised) -- count: false ![:fa history] Can learn over time or train up front --- # How Machine Learning is Typically Used Step 1: Gather lots of data (easy on a phone!) -- count: false Step 2: Figure out useful features - Convert data to information (not knowledge!) - (typically) Collect labels --- count: false # How Machine Learning is Typically Used Step 1: Gather lots of data (easy on a phone!) Step 2: Figure out useful features Step 3: Select and train the ML algorithm to make a prediction - Lots of toolkits for this - Lots of algorithms to choose from - Some models are closed systems (no visibility in algorithm design) - Some models are not easily understood - Some models, like decisions trees, are more transparent and easily understood --- # Example: Decision tree for predicting premature birth  --- # Example: Deep Learning for Image Captioning .left-column50[  ] .right-column50[ Captioning images. - [Note the errors.](http://cs.stanford.edu/people/karpathy/deepimagesent/) Deep learning is now available on your phone (via [Tensorflow](https://www.tensorflow.org/lite)) ] ??? Note differences between these: one label vs many --- # Training process .left-column50[  ] .right-column50[ - Training: 1. Find an input data set. 2. Extract features and manually label them 3. Run them through the ML algorthm to generate the classifier model - Prediction 1. Take the input and run them through the same feature extracton process that happened during labeling. 2. Run them through the classifier model 3. The classifier generates a label based on the data --- # How Machine Learning is Typically Used Step 1: Gather lots of data (easy on a phone!) Step 2: Figure out useful features Step 3: Select and train the ML algorithm Step 4: Evaluate metrics (and iterate) -- count: false - See how well algorithm does using several metrics - Is the model accurate? or - How error prone is it? (How much is too much error?) - Error analysis: what went wrong and why - Iterate: learn from analysis, get new data, create new features --- .left-column[ ## Assessing Accuracy] .right-column[ Prior probabilities - Probability before any observations (ie just guessing) - Ex. ML classifier to guess if an animal is a cat or a ferret based on the ear location - Assume all pointy eared fuzzy creatures are cats (some percentage will be right) - Your trained model should do better than prior classifications Other baseline approaches - Cheap and dumb algorithms - Ex. Classifying cats vs ferrets based on size - Models developers need to work avoid biased results - Impact from gender on academic authorship [cite](https://elifesciences.org/articles/21718) - Bias in algorithms with electronic health records [cite](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6347576/) ] --- .left-column[ ## Assessing Accuracy] .right-column[ Don't just measure accuracy (percent right) * Sometimes we care about *False positives* vs *False negatives* * What examples do you know of false positives or false negatives? ] -- .right-column[  ] .footnote[[Image Source: Guide to accuracy, precision, and recall](https://www.mage.ai/blog/definitive-guide-to-accuracy-precision-recall-for-product-developers)] --- .left-column[ ## Assessing Accuracy] .right-column[ | | | Prediction | | |-------------|--------------|----------------------------|----------------------------| | | | **Positive** | **Negative** | | Actual | **Positive** | .red[True Positive (good)] | False Negative (bad) | | | **Negative** | False Positive (bad) | .red[True Negative (good)] | Accuracy = (TP + TN) / (TP + FP + TN + FN) Intuition: How many things did I get right in all of the total cases. ] --- .left-column[ ## Assessing Accuracy ## Precision ] .right-column[ | | | Prediction | | |-------------|--------------|----------------------------|----------------------| | | | **Positive** | **Negative** | | Actual | **Positive** | .red[True Positive (good)] | False Negative (bad) | | | **Negative** | .red[False Positive (bad)] | True Negative (good) | Precision = TP / (TP+FP) Intuition: Of the items predicted positive, how many are correct? ] --- .left-column[ ## Assessing Accuracy ## Recall ] .right-column[ | | | Prediction | | |--------|--------------|----------------------------|----------------------------| | | | **Positive** | **Negative** | | Actual | **Positive** | .red[True Positive (good)] | .red[False Negative (bad)] | | | **Negative** | False Positive (bad) | True Negative (good) | Recall = TP / (TP+FN) Intuition: Of all things that should have been positive, how many actually labeled correctly? ] --- # Avoiding Overfitting .left-column50[ Overfitting: When your ML model is too specific for data you have - Might not generalize well  ] -- count: false .right-column50[ To avoid overfitting, typically split data into training set and test set - Train model on training set, and test on test set - Often done through validation  ] --- # Validation Break your dataset into 3 groups: * **Training Set**: Data you train on for every model * **Validation Set**: The non-training set you use to compare performance of the different models you try. Often pick the model that has the lowest error. * **Test Set**: Data you test on to get a good estimate of future performance. * Important: you must never train on this or make decisions about the model based on test performance. You can only use it as the step right before you publish to get the best estimate for how you think it will do. That's why we choose the model based on validation set, and now leave our test set as the unbiased estimate for future. Cross validation: a technique to combine the training and validation sets so you don't have to get rid of too much data. --- # How Machine Learning is Typically Used Step 1: Gather lots of data (easy on a phone!) Step 2: Figure out useful features Step 3: Select and train the ML algorithm Step 4: Evaluate metrics (and iterate) Step 5: Deploy --- # What makes this work well? Quality of features determines quality of results - Typically more data is better - Accurate labels are important .red[This is *NOT* as sophisticated as the media makes out] -- count: false .red[*BUT* ML can infer all sorts of things] --- # AI/ML Not As Sophisticated as in Media A lot of people outside of computer science often ascribe human behaviors to AI systems - Especially desires and intentions - Works well for sci-fi, but not for today or near future These systems only do: - What we program them to do - What they are trained to do (based on the (possibly biased) data) --- # Concerns Significant Societal Challenges for Privacy (see our security lecture) -- count: false Wide Range of Privacy Risks | Everyday Risks | Medium Risk | Extreme Risks | |--------------------|---------------------|-------------------| | Friends, Family | Employer/Government | Stalkers, Hackers | | Over-protection | Over-monitoring | Well-being | | Social obligations | Discrimination | Personal safety | | Embarrassment | Reputation | Blackmail | | | Civil Liberties | | - It's not just Big Brother, it's not just corporations - Privacy is about our relationships with every other individual and organization out there --- # Concerns Significant Societal Challenges for Privacy Who should have the initiative? (Initiative matters) -- - Does a person initiate things or the computer? - How much does computer system do on your behalf? - Autonomous vehicle example: Some think Tesla autopilot is fully autonomous, leads to risky actions - Instead of direct manipulation, some smarts/intelligent agent for automation - Questions remain: - What should be automated, what shouldn't? - Should "intelligence" be anthropomorphized? - How can a user learn what system can and can't do? - What are strategies for showing state of system or preventing errors? --- exclude: true .left-column50[ ## Mixed-initiative best practices - Significant value-added automation - Considering uncertainty - Socially appropriate interaction w/ agent - Consider cost, benefit, uncertainty - Use dialog to resolve uncertainty - Support direct invocation and termination - Remember recent interactions ] .right-column50[  ] --- exclude: true .left-column50[ ## Mixed-initiative best practices - Significant value-added automation - Considering uncertainty - Socially appropriate interaction w/ agent - Consider cost, benefit, uncertainty - Use dialog to resolve uncertainty - Support direct invocation and termination - Remember recent interactions ] .right-column50[  ] ??? Can see what agent is suggesting, in terms of scheduling a meeting --- exclude: true .left-column50[ ## Mixed-initiative best practices - Significant value-added automation - Considering uncertainty - Socially appropriate interaction w/ agent - Consider cost, benefit, uncertainty - Use dialog to resolve uncertainty - Support direct invocation and termination - Remember recent interactions ] .right-column50[  ] ??? Uses anthropomorphized aganet Uses speech for input Uses mediation to help resolve conflict --- exclude: true .left-column[ ## Mixed-initiative best practices ] .right-column[ Built-in cost-benefit model in system - If perceived benefit >> cost, then do the action - Otherwise wait Note that this is just one point in design space (1999), and still lots of open questions - Ex. Should “intelligence” be anthropomorphized? - Ex. How to learn what system can and can't do? - Ex. What kinds of tasks should be automated / not? - Ex. What are strategies for showing state of system? - Ex. What are strategies for preventing errors? ] --- # Concerns Significant Societal Challenges for Privacy Who should have the initiative? Bias in Machine Learning --- .quote[Johnson says his jaw dropped when he read one of the reasons American Express gave for lowering his credit limit: ![:fa quote-left] Other customers who have used their card at establishments where you recently shopped have a poor repayment history with American Express. ]  ---  --- # Concerns Significant Societal Challenges for Privacy Who should have the initiative? Bias in Machine Learning Understanding ML -- count: false - How does a system know I am addressing it? - How do I know a system is attending to me? - When I issue a command/action, how does the system know what it relates to? - How do I know that the system correctly understands my command and correctly executes my intended action? .footnote[ Belloti et al., CHI 2002 "Making Sense of Sensing" ] --- # Wrong location-based recommendation .left-column40[  ] .right-column60[ Why did it not tell me about the Museum? How does it determine my location? Providing explanations to these questions can make Intelligent systems Intelligible Other examples: caregiving hours determined by insurance companies, etc ] --- # Types of feedback Feedback is crucial to user's understanding of how a system works and helping guide future actions - What did the system do? - What if I do W, what will the system do? - Why did the system do X? - Why did the system not do Y - How do I get the system to do Z? --- # Summary ML and ethics - ML is powerful (but not perfect), often better than heuristics - Basic approach is collect data, train, test, deploy - Hard to understand what algorithms are doing (transparency) - ML algorithms just try to optimize, but might end up finding a proxy for race, gender, computer, etc - But hard to inspect these algorithms - Still a huge open question - Privacy - How much data should be collected about people? - How to communicate this to people? - What kinds of inferences are ok? --- # End of deck