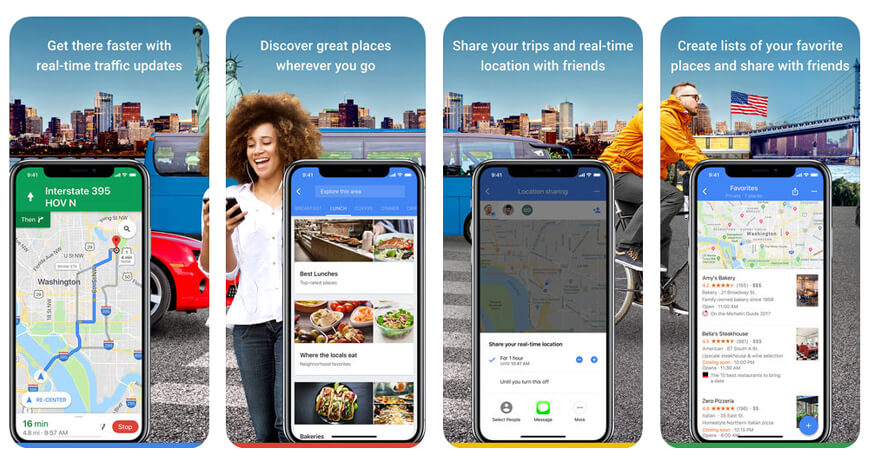

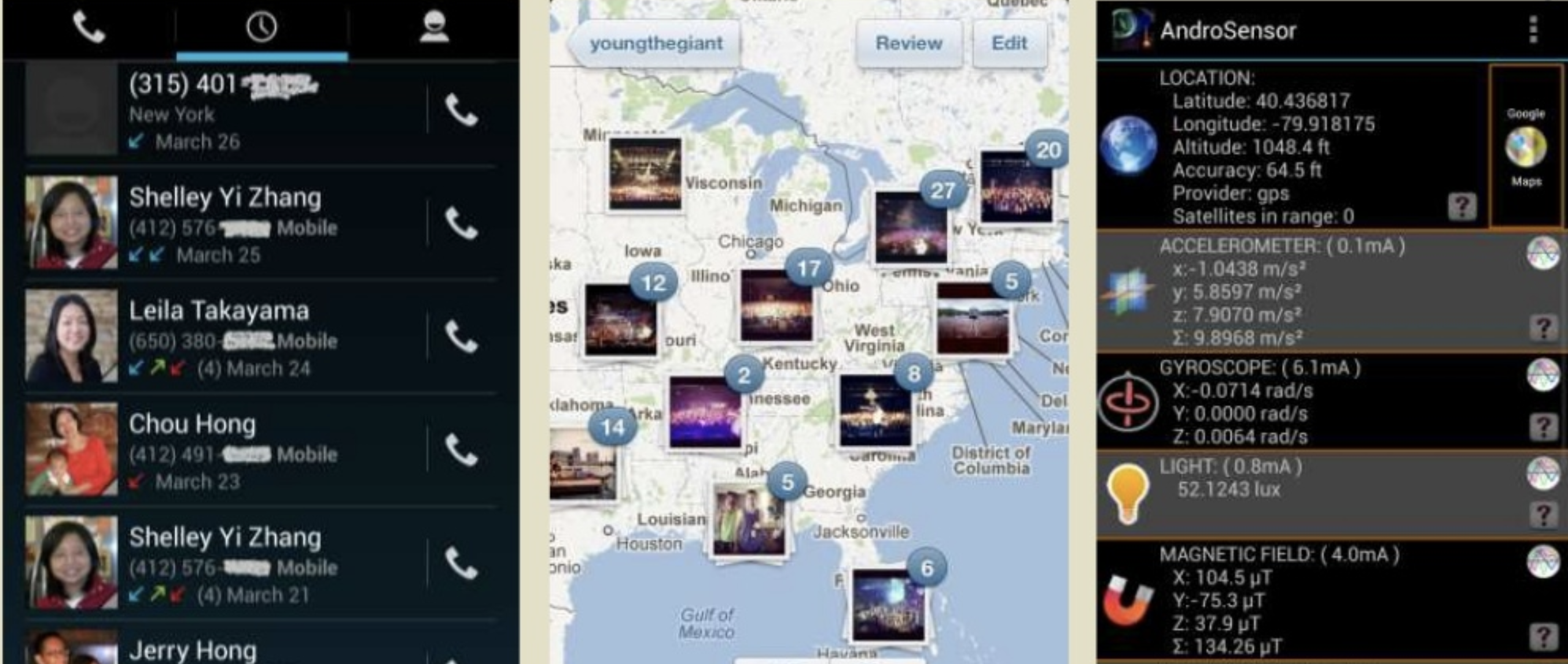

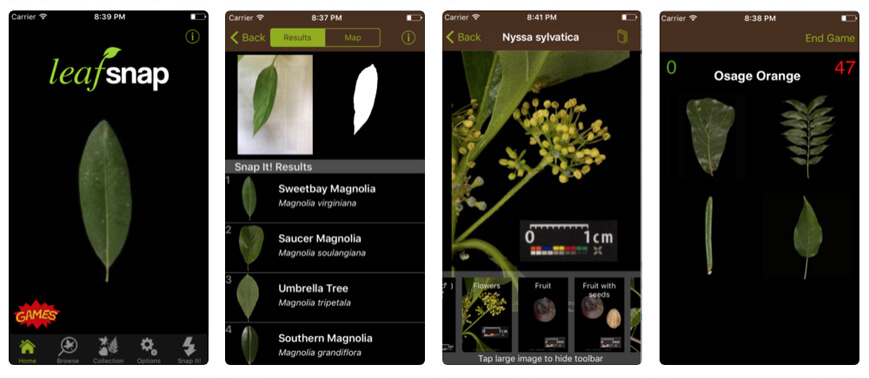

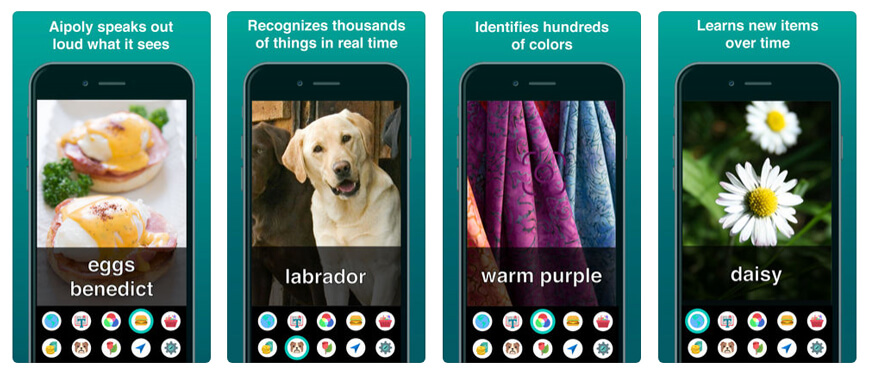

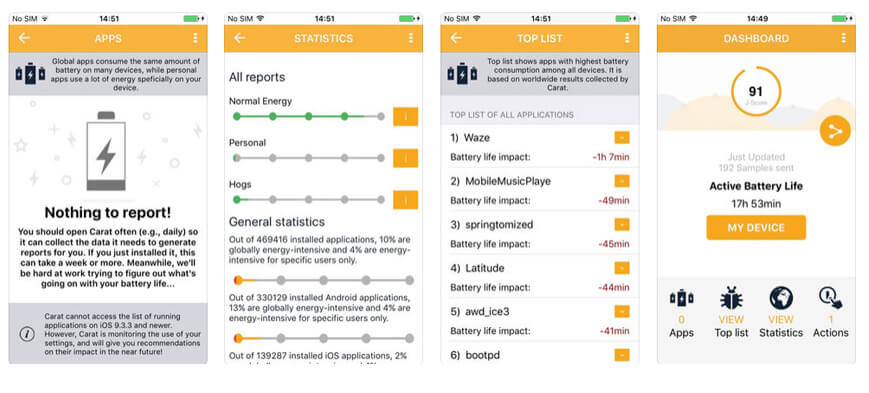

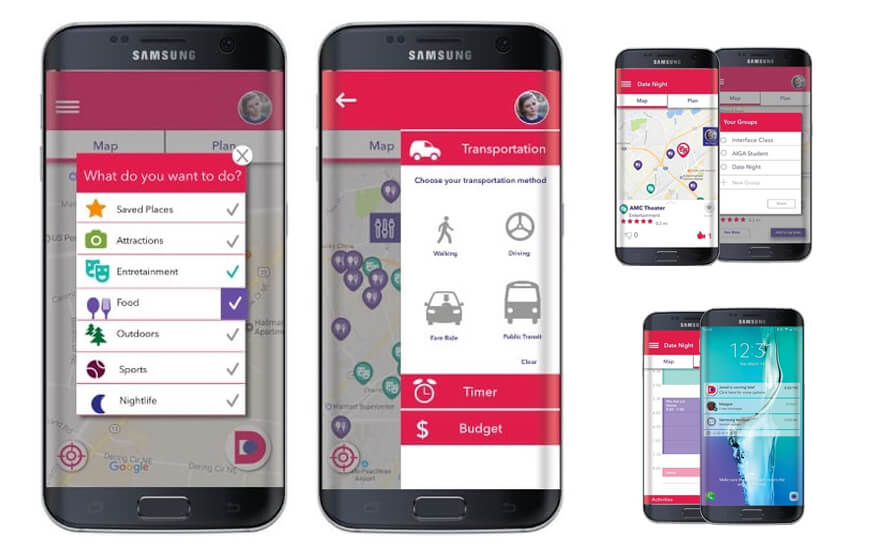

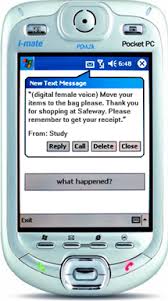

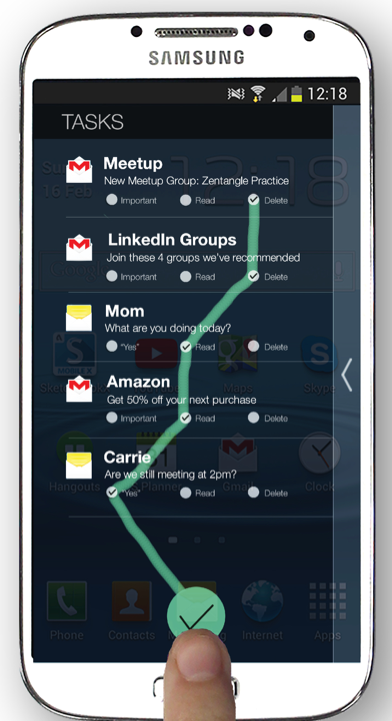

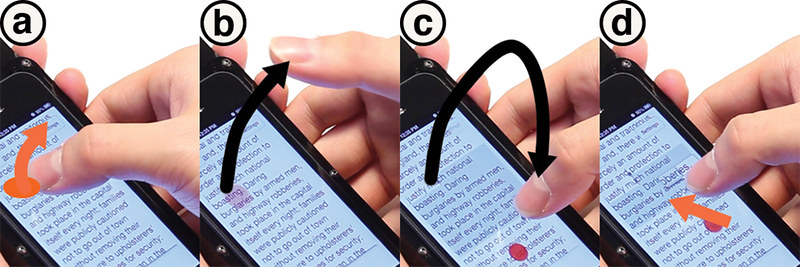

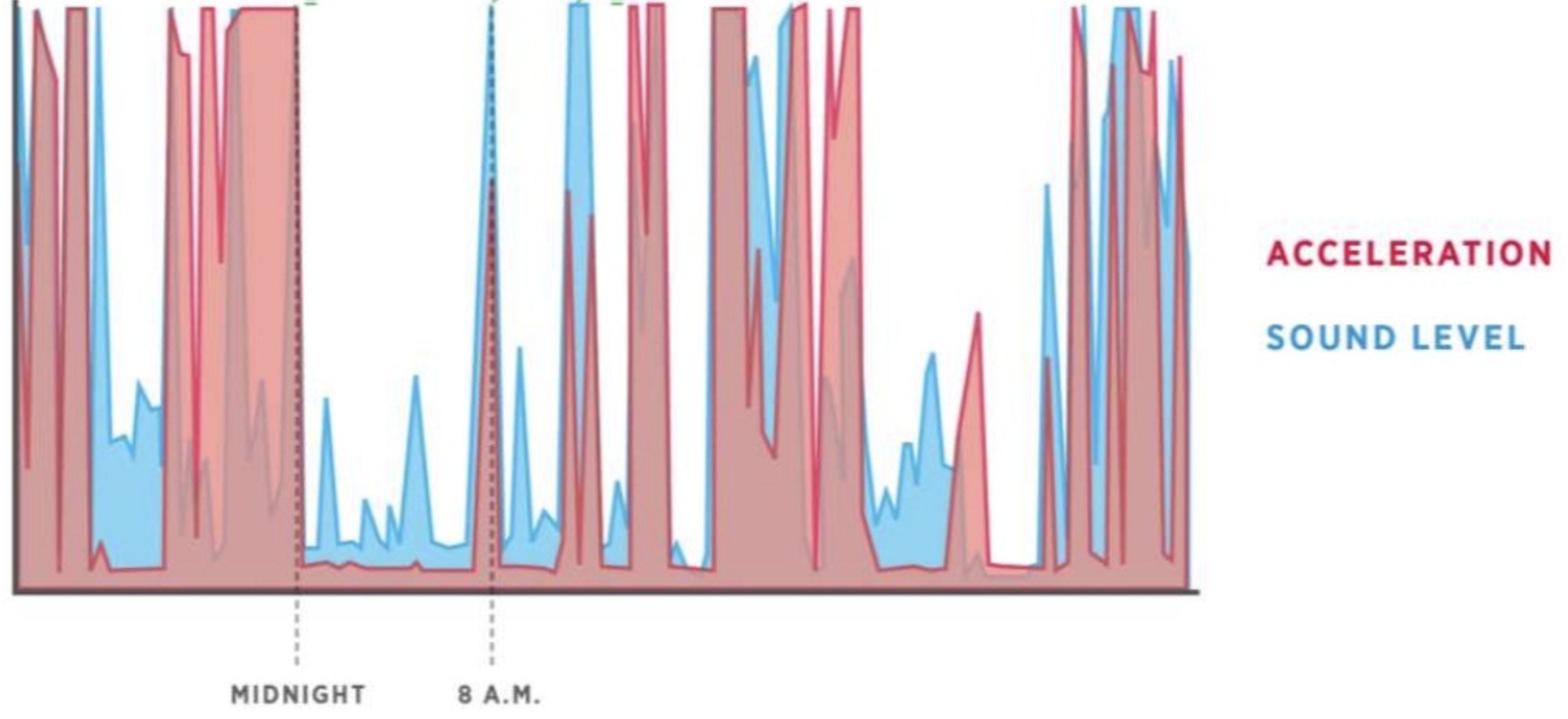

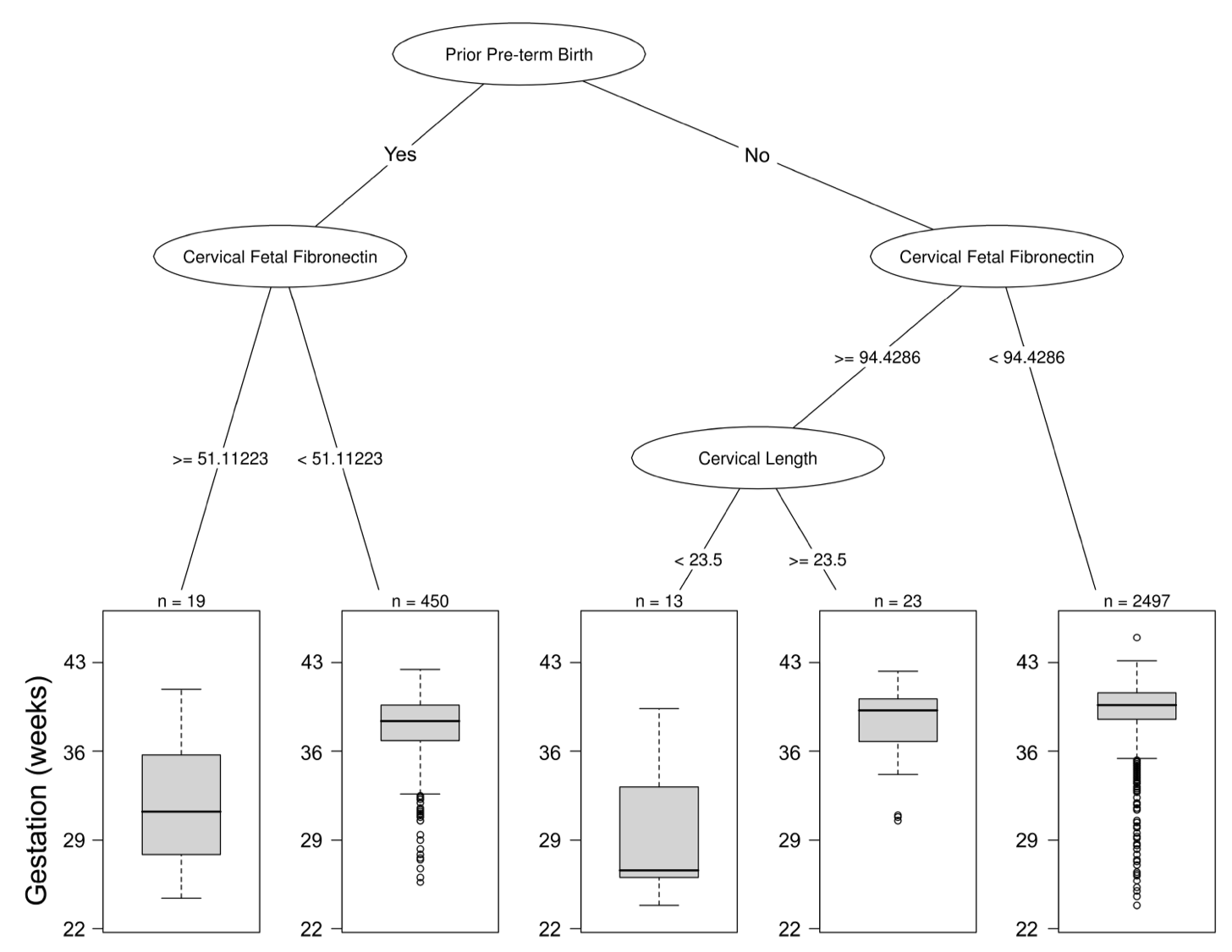

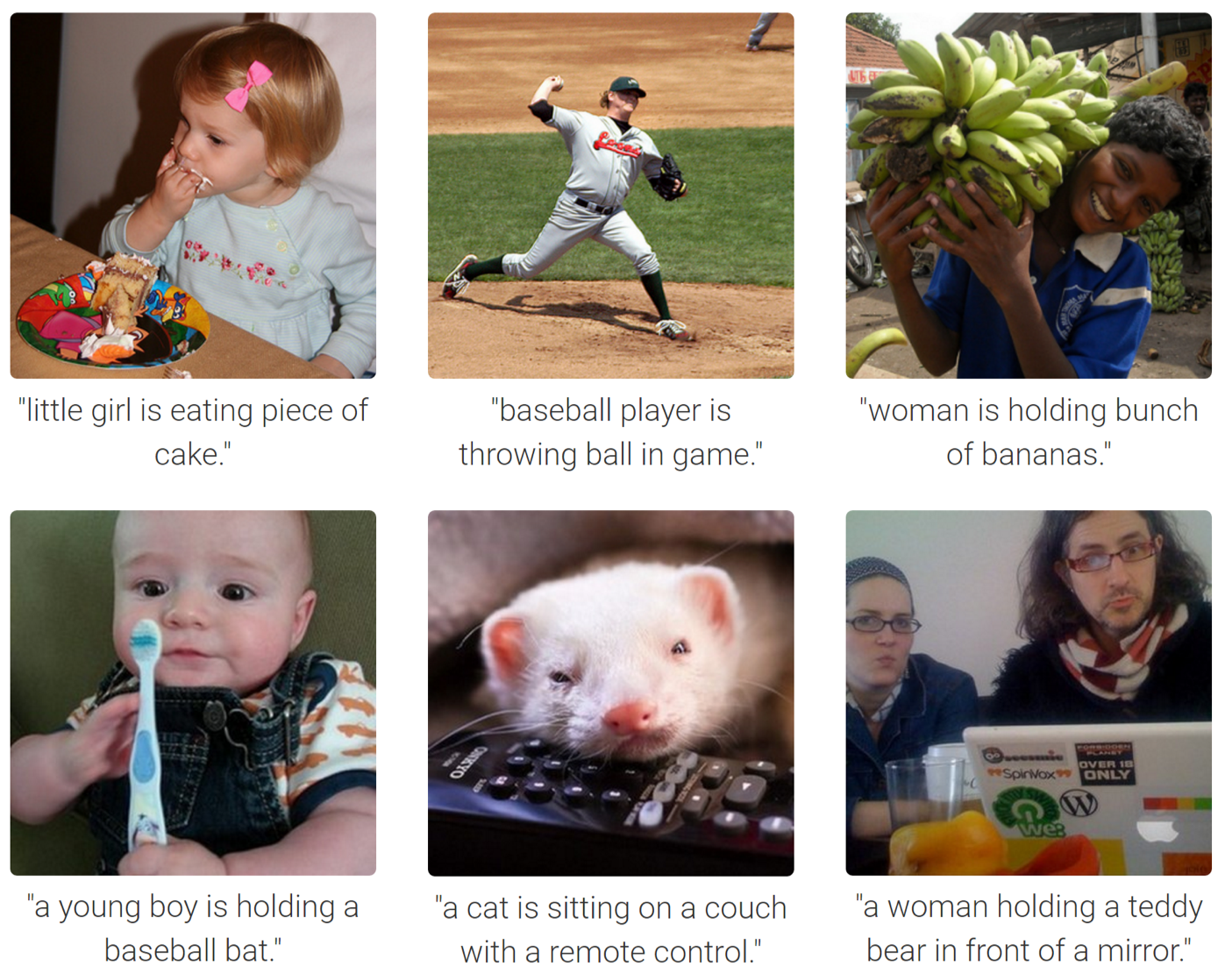

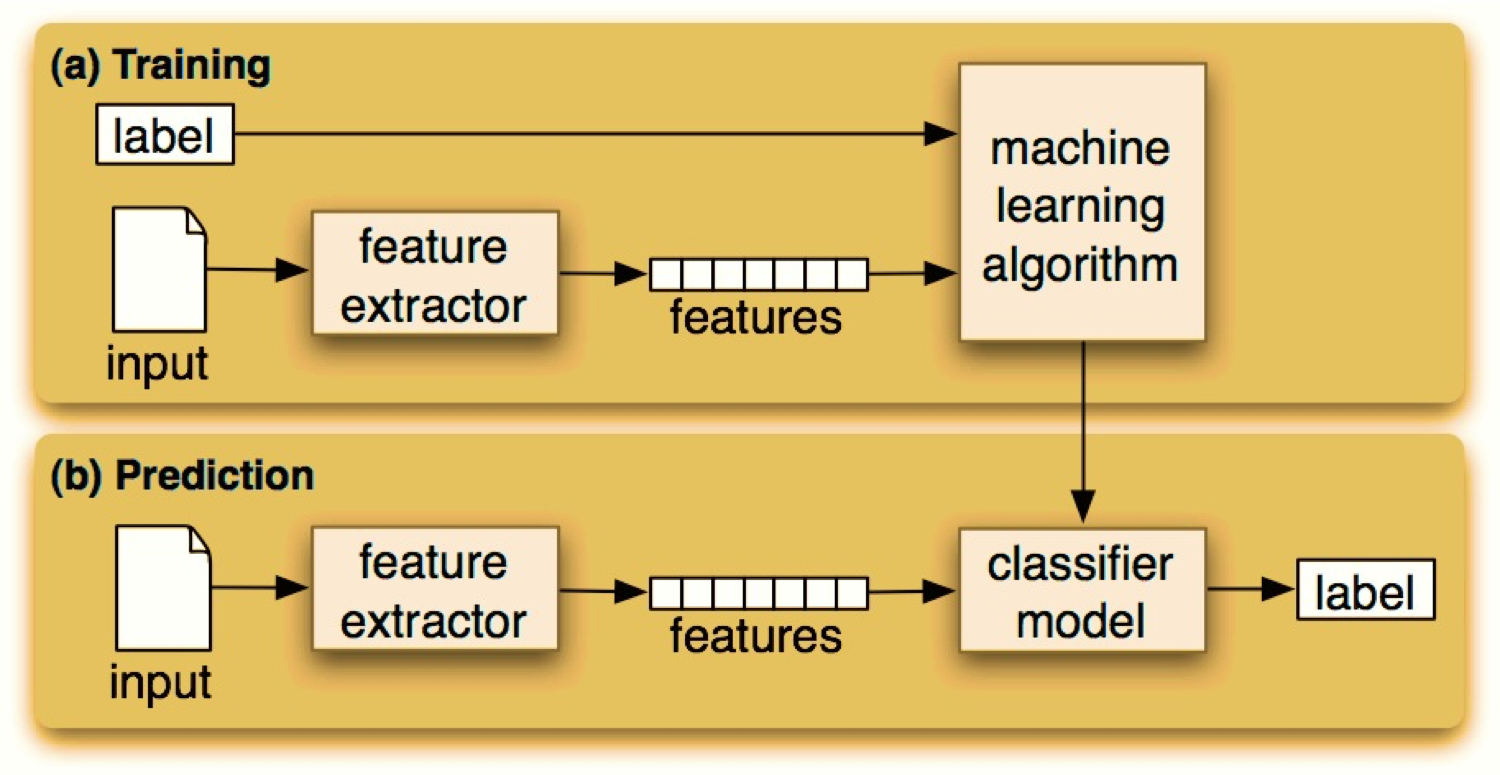

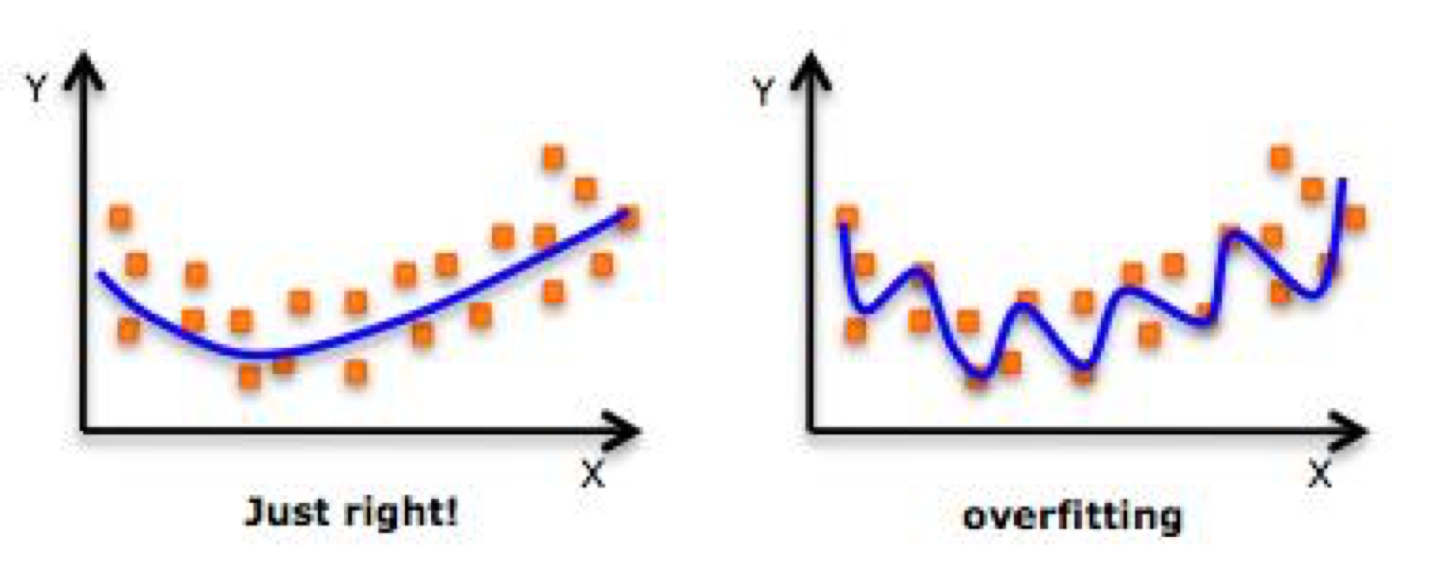

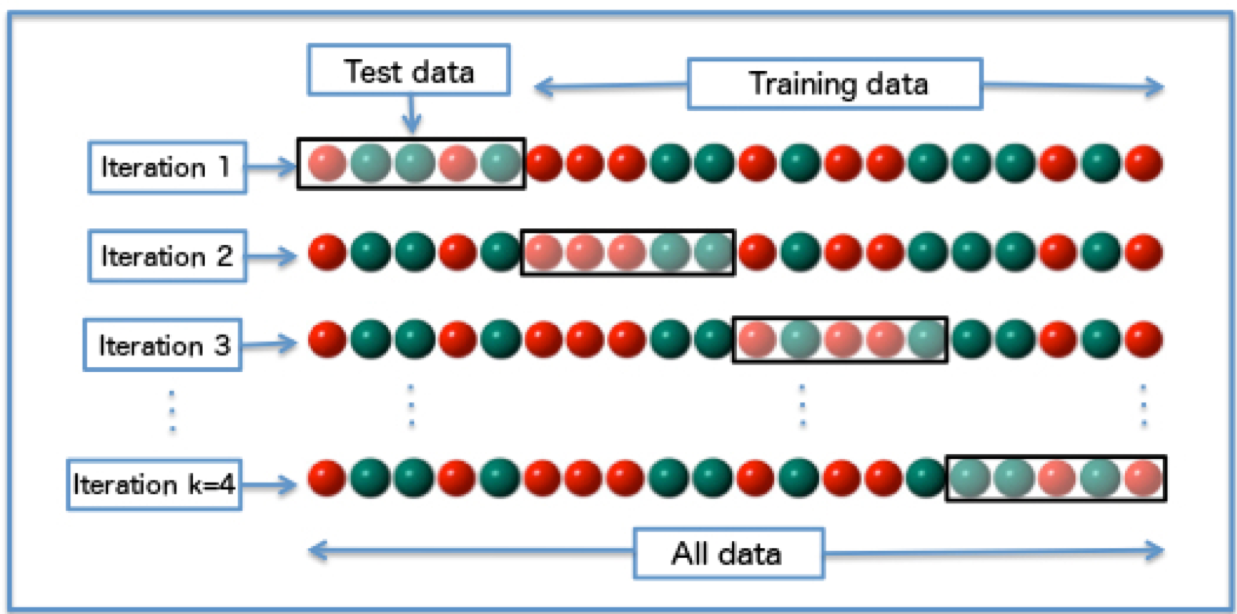

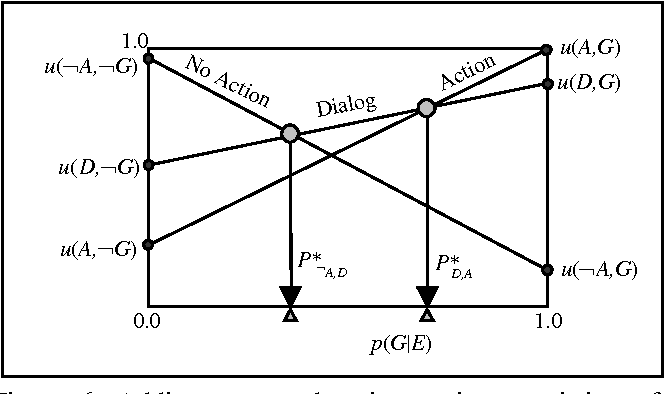

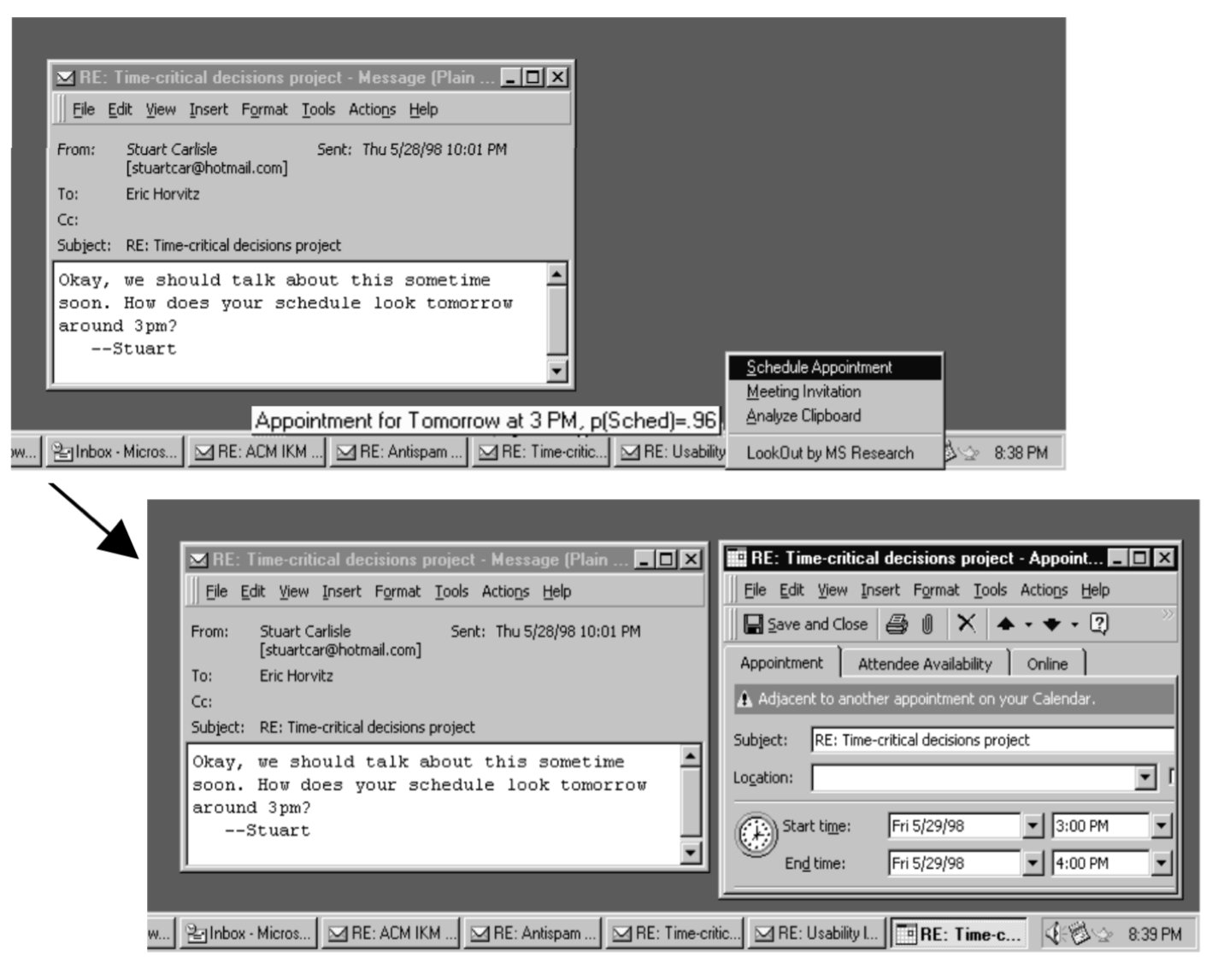

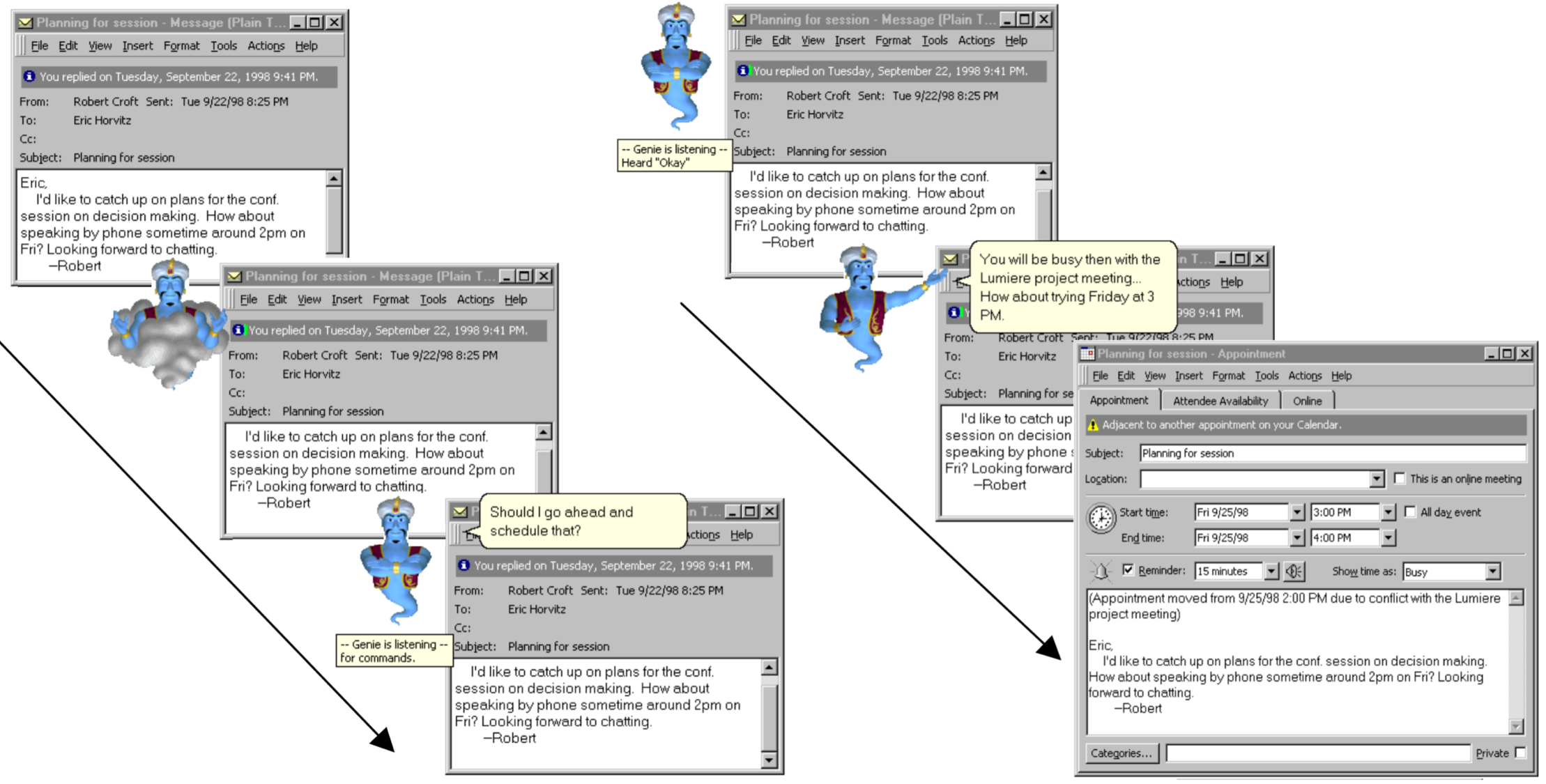

name: inverse
layout: true
class: center, middle, inverse

---
# Google Maps

![Google Maps](img/ml/google.jpg)

.footnote[Picture from [Machine Learning on your Phone](https://www.appypie.com/top-machine-learning-mobile-apps)]

???
fame/shame

neighborhood traffic...

shorter commutes...

---
# Machine Learning and your Phone

Jennifer Mankoff

CSE 340 Winter 2021

.footnote[Slides credit: Jason Hong, Carnegie Mellon University [Are my Devices Spying on Me? Living in a World of Ubiquitous Computing](https://www.slideshare.net/jas0nh0ng/are-my-devices-spying-on-me-living-in-a-world-of-ubiquitous-computing)]

---
layout: false

# Smartphones

Fun Facts about Millennials

.left-column[
![:fa thumbs-down] 83% sleep with phones
]
.right-column[
]

---
# Smartphones

Fun Facts about Millennials

.left-column[
![:fa thumbs-down] 83% sleep with phones

![:fa thumbs-down] 90% check first thing in morning
]
.right-column[
]

---
# Smartphones

Fun Facts about Millennials

.left-column[
![:fa thumbs-down] 83% sleep with phones

![:fa thumbs-down] 90% check first thing in morning

![:fa thumbs-down] 1 in 3 use in bathroom
]
.right-column[
]

---
# Smartphone Data is Intimate

| Who we know           | Sensors               | Where we go   |
|-----------------------|-----------------------|---------------|
| (contacts + call log) | (accel, sound, light) | (gps, photos) |

---
# Example: COVID-19 Contact Tracing

.left-column-half[
]
.right-column-half[
- Install an app on your phone
- Turn on Bluetooth
- Keep track of every Bluetooth ID you see
]

---
# Other Useful Applications of Sensing

.footnote[[LeafSnap](http://leafsnap.com/) uses computer vision to identify trees by their leaves]

---
# Other Useful Applications of Sensing

.footnote[[Vision AI](https://www.aipoly.com/) uses computer vision to identify images for the Blind and Visually Impaired]

---
# Other Useful Applications of Sensing

.footnote[[Carat: Collaborative Energy Diagnosis](http://carat.cs.helsinki.fi/) uses machine learning to save battery life]

---
# Other Useful Applications of Sensing

.footnote[[Imprompdo](http://imprompdo.webflow.io/) uses machine learning to recommend activities to do, both fun and to-dos]

---
# How do these systems work?

.left-column50[
## Old style of app design

<div class="mermaid">
graph TD
I(Input) --Explicit Interaction--> A(Application)
A --> Act(Action)
classDef normal fill:#e6f3ff,stroke:#333,stroke-width:2px;
class U,C,A,I,S,E,Act,Act2 normal
</div>
]

--

.right-column50[
## New style of app design

<div class="mermaid">
graph TD
U(User) --Implicit Sensing--> C(Context-Aware Application)
S(System) --Implicit Sensing--> C
E(Environment) --Implicit Sensing--> C
C --> Act2(Action)
classDef normal fill:#e6f3ff,stroke:#333,stroke-width:2px;
class U,C,A,I,S,E,Act,Act2 normal
</div>
]

---
.left-column-half[
## Types of Sensors

| | | |
|--|--|--|
| Clicks | Key presses | Touch |
| Microphone | Camera | IoT devices |
]

---
.left-column-half[
## Types of Sensors

| | | |
|--|--|--|
| Clicks | Key presses | Touch |
| Microphone | Camera | IoT devices |
| Accelerometer | Rotation | Screen |
| Applications | Location | Telephony |
| Battery | Magnetometer | Temperature |
| Bluetooth | Network Usage | Traffic |
| Calls | Orientation | WiFi |
| Messaging | Pressure | Processor |
| Gravity | Proximity | Humidity |
| Gyroscope | Light | Multi-touch |
| ... | ... | ... |
]

???
Sampled or event based?

--

.right-column-half[
## Contact Tracing

Which sensors might be useful for contact tracing? Why?

Type your answers in chat!
]

---
# Implementing Sensing I: Getting Data

.footnotelarge[Android [Awareness API](https://developers.google.com/awareness/)]

.left-column-half[
## Turn it on

Must enable it: [Google APIs](https://console.developers.google.com/apis/)

Recent updates to documentation (from 2017): February 2020!
]

.right-column-half[
## Set up callbacks

Two types of context sensing: Snapshots / Fences
]

???

---
# Registering Callbacks

- `Managers` (e.g. `LocationManager`) let us create and register a listener
- Listeners can receive updates at different intervals, etc.
- Some sensor-specific settings [https://source.android.com/devices/sensors/sensor-types.html](https://source.android.com/devices/sensors/sensor-types.html)

--

Requires an API key (follow the [“Quick Guide”](https://developers.google.com/places/web-service/get-api-key)).

- When you have your API key, go to your Android manifest and paste it in between the quotation marks labeled API_KEY.
- Once you do that, you'll need to accept permissions and update Google Play services when you run your code.

---
# Snapshots

Capture sensor data at a moment in time

Require a single callback, to avoid hanging while sensor data is fetched:

`onSnapshot(Response response)`

Setting up the callback (just like callbacks for other events)

```java
// Request the currently detected activity; the listener receives the result
setSnapshotListener(Awareness.getSnapshotClient(this).getDetectedActivity(),
    new ActivitySnapshotListener(mUpdate, mResources));
```

---
# Fences

Notify you *every time* a condition is true

Conditional data

3 callbacks: during, starting, stopping

```java
mActivityFenceListener = new ActivityFenceListener(
    // during
    DetectedActivityFence.during(DetectedActivity.WALKING),
    // starting
    DetectedActivityFence.starting(DetectedActivity.WALKING),
    // stopping
    DetectedActivityFence.stopping(DetectedActivity.WALKING),
    this, this, mUpdate);
```

???
What might we use for a location fence?

Headphone fence?

...
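---
# Sketch: Snapshot vs Fence

A minimal sketch in plain Java, not the Awareness API, contrasting the two sensing styles from the Snapshots and Fences slides. `SensingSketch`, `snapshot`, and `fenceEvents` are hypothetical names, and the `walking` array stands in for a stream of activity readings. It models only the `starting` and `stopping` transitions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of the two sensing styles (not the Awareness API).
class SensingSketch {

    // Snapshot style: read the state once, at the moment we ask.
    static boolean snapshot(boolean[] walking, int time) {
        return walking[time];
    }

    // Fence style: watch the whole stream and report every transition.
    static List<String> fenceEvents(boolean[] walking) {
        List<String> events = new ArrayList<>();
        boolean previous = false; // assumption: not walking before the first sample
        for (int t = 0; t < walking.length; t++) {
            if (walking[t] && !previous) {
                events.add("starting@" + t);   // condition just became true
            } else if (!walking[t] && previous) {
                events.add("stopping@" + t);   // condition just became false
            }
            previous = walking[t];
        }
        return events;
    }
}
```

With readings `{false, true, true, false, true}`, a snapshot at index 3 sees "not walking", while the fence-style scan reports `starting@1`, `stopping@3`, `starting@4`. A single snapshot can miss activity that a fence would report.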
---
# Snapshots vs Fences for contact tracing

.left-column60[
<iframe src="https://embed.polleverywhere.com/multiple_choice_polls/i8r4dmGMgyxIYDqgut9Rg?controls=none&short_poll=true" width="500px" height="400px"></iframe>
]

--

.right-column30[
Answer: Fence

We want to be notified about *every* contact so we can record it
]

---
# This is Computational Behavioral Imaging

---
# This is Computational Behavioral Imaging

---
# Types of Context-aware apps

.left-column[
]
.right-column[
**Capture and Access**

- .red[Food diarying] and nutritional awareness via receipt analysis [Ubicomp 2002]
- .bold.red[Audio Accessibility] for deaf people by supporting mobile sound transcription [Ubicomp 2006, CHI 2007]
- .red[Citizen Science] volunteer data collection in the field [CSCW 2013, CHI 2015]
- .red[Air quality assessment] and visualization [CHI 2013]
- .red[Coordinating between patients and doctors] via wearable sensing of in-home physical therapy [CHI 2014]
]

---
# Types of Context-aware apps

.left-column[
]
.right-column[
Capture and Access<BR>
**Adaptive Services (changing operation or timing)**

- .red[Adaptive Text Prediction] for assistive communication devices [TOCHI 2005]
- .red[Location prediction] based on prior behavior [Ubicomp 2014]
- .bold.red[Pro-active task access] on lock screen based on predicted user interest [MobileHCI 2014]
]

---
# Types of Context-aware apps

.left-column[
]
.right-column[
Capture and Access<BR>
Adaptive Services (changing operation or timing)<BR>
**Novel Interaction**

- .red[Cord Input] for interacting with mobile devices [CHI 2010]
- .red[Smart Watch Intent to Interact] via twist'n'knock gesture [GI 2016]
- .red[VR Intent to Interact] via sensing body pose, gaze and gesture [CHI 2017]
- .red[Around Body interaction] through gestures with the phone [MobileHCI 2014]
- .red.bold[Around phone interaction] through gestures combining on and above phone surface [UIST 2014]
]

---
# Types of Context-aware apps

.left-column[
]
.right-column[
![:youtube Interweaving touch and in-air gestures using in-air gestures to segment touch gestures, H5niZW6ZhTk]
]

---
# Types of Context-aware apps

.left-column[
]
.right-column[
Capture and Access<BR>
Adaptive Services (changing operation or timing)<BR>
Novel Interaction<BR>
**Behavioral Imaging**

- .red[Detecting and Generating Safe Driving Behavior] by using inverse reinforcement learning to create human routine models [CHI 2016, 2017]
- .red[Detecting Deviations in Family Routines] such as being late to pick up kids [CHI 2016]
]

---
# Type of App for Contact Tracing

.left-column60[
<iframe src="https://embed.polleverywhere.com/multiple_choice_polls/bLtpQdL1rX2N3LVcIuwvk?controls=none&short_poll=true" width="500px" height="400px"></iframe>
]

--

.right-column30[
Answer: Capture and Access
]

---
# Implementing Sensing II: Using Data

.left-column50[
![:fa bed, fa-7x]
]
.right-column50[
## In class exercise

How might you recognize sleep?

- What recognition question
- What sensors
]

???
(sleep quality? length?...)

How to interpret sensors?

---
# Implementing Sensing II: Using Data

.left-column50[
]
.right-column50[
## In class exercise

- What recognition question (sleep quality? length?...)
- What sensors
- How to interpret sensors?
]

---
# How do we program this?

Write down some rules

Implement them

---
# How do we program this?
Old Approach: Create software by hand

- Use libraries (like jQuery) and frameworks
- Create content, do layout, code up functionality
- Deterministic (code does what you tell it to)

New Approach: Collect data and train algorithms

- Will still do the above, but will also have some functionality based on ML
- *Collect lots of examples and train an ML algorithm*
- *Statistical way of thinking*

---
# This is *Machine Learning*

Machine Learning is often used to process sensor data

- Machine learning is one area of Artificial Intelligence
- This is the kind that’s been getting lots of press

The goal of machine learning is to develop systems that can improve performance with more experience

- Can use "example data" as "experience"
- Uses these examples to discern patterns
- And to make predictions

---
# Two main approaches

![:fa eye] *Supervised learning* (we have lots of examples of what should be predicted)

![:fa eye-slash] *Unsupervised learning* (e.g. clustering into groups and inferring what they are about)

![:fa low-vision] Can combine these (semi-supervised)

![:fa history] Can learn over time or train up front

---
# How Machine Learning is Typically Used

Step 1: Gather lots of data (easy on a phone!)

--

Step 2: Figure out useful features

- Convert data to information (not knowledge!)
- (typically) Collect labels

---
# How Machine Learning is Typically Used

Step 1: Gather lots of data (easy on a phone!)

Step 2: Figure out useful features

Step 3: Select and train the ML algorithm to make a prediction

- Lots of toolkits for this
- Lots of algorithms to choose from
- Mostly treat as a "black box"

---
# Example: Decision tree for predicting premature birth

---
# Example: Deep Learning for Image Captioning

.footnote[[Captioning images. Note the errors.](http://cs.stanford.edu/people/karpathy/deepimagesent/) Deep learning now [available on your phone!](https://www.tensorflow.org/lite)]

???
Note differences between these: one label vs many

---
# Training process

---
# How Machine Learning is Typically Used

Step 1: Gather lots of data (easy on a phone!)

Step 2: Figure out useful features

Step 3: Select and train the ML algorithm

Step 4: Evaluate metrics (and iterate)

???
See how well the algorithm does using several metrics

Error analysis: what went wrong and why

Iterate: get new data, make new features

---
# Evaluation Concerns

Accuracy: Might be too error-prone

---
.left-column[
## Assessing Accuracy
]
.right-column[
Prior probabilities

- Probability before any observations (i.e. just guessing)
- Ex. ML classifier to guess if a person is male or female based on name
- Just assume all names are female (50% will be right)
- Your trained model needs to do better than the prior

Other baseline approaches

- Cheap and dumb algorithms
- Ex. Names that end in a vowel are female
- Your model needs to do better than these too
]

???
We did this to study gender's impact on academic authorship; doctors' reviews

---
.left-column[
## Assessing Accuracy
]
.right-column[
Don't just measure accuracy (percent right)

Sometimes we care about *False positives* vs *False negatives*
]

---
.left-column[
## Assessing Accuracy
## Confusion matrix helps show this
]
.right-column[
|             |              | .red[Prediction]     |                      |
|-------------|--------------|----------------------|----------------------|
|             |              | **Positive**         | **Negative**         |
| .red[Label] | **Positive** | True Positive (good) | False Negative (bad) |
|             | **Negative** | False Positive (bad) | True Negative (good) |

Accuracy is (TP + TN) / (TP + FP + TN + FN)
]

---
.left-column[
## Assessing Accuracy
## Precision
]
.right-column[
|             |              | .red[Prediction]           |                      |
|-------------|--------------|----------------------------|----------------------|
|             |              | **Positive**               | **Negative**         |
| .red[Label] | **Positive** | .red[True Positive (good)] | False Negative (bad) |
|             | **Negative** | .red[False Positive (bad)] | True Negative (good) |

Precision = TP / (TP+FP)

Intuition: Of the items predicted positive, how many are right?
]

---
.left-column[
## Assessing Accuracy
## Recall
]
.right-column[
|        |              | Prediction                 |                            |
|--------|--------------|----------------------------|----------------------------|
| Actual |              | **Positive**               | **Negative**               |
|        | **Positive** | .red[True Positive (good)] | .red[False Negative (bad)] |
|        | **Negative** | False Positive (bad)       | True Negative (good)       |

Recall = TP / (TP+FN)

Intuition: Of all things that should have been positive, how many were actually labeled correctly?
]

---
# Evaluation Concerns

Accuracy: Might be too error-prone

Overfitting: Your ML model is too specific for the data you have

- Might not generalize well

---
# Avoiding Overfitting

To avoid overfitting, typically split data into a training set and a test set

Train the model on the training set, and test on the test set

Often do this through cross validation

---
# How Machine Learning is Typically Used

Step 1: Gather lots of data (easy on a phone!)

Step 2: Figure out useful features

Step 3: Select and train the ML algorithm

Step 4: Evaluate metrics (and iterate)

Step 5: Deploy

---
# What makes this work well?
Typically more data is better

Accurate labels important

Quality of features determines quality of results

.red[*NOT* as sophisticated as the media makes out]

--

.red[*BUT* can infer all sorts of things]

---
# AI / Machine Learning Not As Sophisticated as in Media

A lot of people outside of computer science often ascribe human behaviors to AI systems

- Especially desires and intentions
- Works well for sci-fi, but not for today or near future

These systems only do:

- What we program them to do
- What they are trained to do (based on the (possibly biased) data)

---
# Concerns

Significant Societal Challenges for Privacy

--

Wide Range of Privacy Risks

| Everyday Risks     | Medium Risk         | Extreme Risks     |
|--------------------|---------------------|-------------------|
| Friends, Family    | Employer/Government | Stalkers, Hackers |
| Over-protection    | Over-monitoring     | Well-being        |
| Social obligations | Discrimination      | Personal safety   |
| Embarrassment      | Reputation          | Blackmail         |
|                    | Civil Liberties     |                   |

- It's not just Big Brother
- It's not just corporations
- Privacy is about our relationships with every other individual and organization out there

---
# Concerns

Significant Societal Challenges for Privacy

We will talk more about privacy in a future lecture

---
# Mixed-initiative interfaces

Basically, who is in charge?

- Does person initiate things? Or computer?
- How much does computer system do on your behalf?

Example: Autonomous vehicles

- Some people think Tesla Autopilot is fully autonomous, which leads to risky actions

Why initiative matters

- Potential major shift: instead of direct manipulation, some smarts (intelligent agent) for automation

---
.left-column50[
## Mixed-initiative best practices

- Significant value-added automation
- Considering uncertainty
- Socially appropriate interaction w/ agent
- Consider cost, benefit, uncertainty
- Use dialog to resolve uncertainty
- Support direct invocation and termination
- Remember recent interactions
]
.right-column50[
]

---
.left-column50[
## Mixed-initiative best practices

- Significant value-added automation
- Considering uncertainty
- Socially appropriate interaction w/ agent
- Consider cost, benefit, uncertainty
- Use dialog to resolve uncertainty
- Support direct invocation and termination
- Remember recent interactions
]
.right-column50[
]

???
Can see what agent is suggesting, in terms of scheduling a meeting

---
.left-column50[
## Mixed-initiative best practices

- Significant value-added automation
- Considering uncertainty
- Socially appropriate interaction w/ agent
- Consider cost, benefit, uncertainty
- Use dialog to resolve uncertainty
- Support direct invocation and termination
- Remember recent interactions
]
.right-column50[
]

???
Uses anthropomorphized agent

Uses speech for input

Uses mediation to help resolve conflict

---
.left-column[
## Mixed-initiative best practices
]
.right-column[
Built-in cost-benefit model in system

- If perceived benefit >> cost, then do the action
- Otherwise wait

Note that this is just one point in design space (1999), and still lots of open questions

- Ex. Should “intelligence” be anthropomorphized?
- Ex. How to learn what system can and can’t do?
- Ex. What kinds of tasks should be automated / not?
- Ex. What are strategies for showing state of system?
- Ex. What are strategies for preventing errors?
]

---
# Concerns

Significant Societal Challenges for Privacy

Who should have the initiative?

Bias in Machine Learning

---
.quote[Johnson says his jaw dropped when he read one of the reasons American Express gave for lowering his credit limit:

![:fa quote-left] Other customers who have used their card at establishments where you recently shopped have a poor repayment history with American Express.
]

---
.right-column[
]

---
# Concerns

Significant Societal Challenges for Privacy

Who should have the initiative?

Bias in Machine Learning

Understanding ML

---
# Understanding what is going on: Forming Mental Models

How does a system know I am addressing it?

How do I know a system is attending to me?

When I issue a command/action, how does the system know what it relates to?

How do I know that the system correctly understands my command and correctly executes my intended action?

.footnote[Bellotti et al., CHI 2002 ‘Making Sense of Sensing’]

---
# Wrong location-based rec

???
Why did it not tell me about the Museum?

How does it determine my location?

Providing explanations to these questions can make intelligent systems intelligible

Other examples: caregiving hours by insurance company, etc.

---
# Types of feedback

Feedback: crucial to user’s understanding of how a system works and helping guide future action

- What did the system do?
- What if I do W, what will the system do?
- Why did the system do X?
- Why did the system not do Y?
- How do I get the system to do Z?

---
# Summary

ML and ethics

ML is powerful (but not perfect), often better than heuristics

Basic approach is collect data, train, test, deploy

Hard to understand what algorithms are doing (transparency)

- ML algorithms just try to optimize, but might end up finding a proxy for race, gender, computer, etc.
- But hard to inspect these algorithms
- Still a huge open question

Privacy

- How much data should be collected about people?
- How to communicate this to people?
- What kinds of inferences are ok?
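---
# Sketch: Accuracy, Precision, Recall

A minimal sketch of the formulas from the Assessing Accuracy slides, in plain Java. `ConfusionMatrix` and `fromPredictions` are hypothetical names, and the labels and predictions in the usage note are made-up illustration values, not course data.

```java
// Hypothetical helper that tallies a binary confusion matrix.
class ConfusionMatrix {
    final int tp, fp, tn, fn;

    ConfusionMatrix(int tp, int fp, int tn, int fn) {
        this.tp = tp;
        this.fp = fp;
        this.tn = tn;
        this.fn = fn;
    }

    // Compare each prediction against its true label and count the four outcomes.
    static ConfusionMatrix fromPredictions(boolean[] labels, boolean[] predictions) {
        int tp = 0, fp = 0, tn = 0, fn = 0;
        for (int i = 0; i < labels.length; i++) {
            if (predictions[i] && labels[i]) tp++;       // predicted positive, was positive
            else if (predictions[i]) fp++;               // predicted positive, was negative
            else if (labels[i]) fn++;                    // predicted negative, was positive
            else tn++;                                   // predicted negative, was negative
        }
        return new ConfusionMatrix(tp, fp, tn, fn);
    }

    // Accuracy = (TP + TN) / (TP + FP + TN + FN)
    double accuracy() {
        return (double) (tp + tn) / (tp + fp + tn + fn);
    }

    // Precision = TP / (TP + FP); NaN if nothing was predicted positive
    double precision() {
        return (double) tp / (tp + fp);
    }

    // Recall = TP / (TP + FN); NaN if there were no positive labels
    double recall() {
        return (double) tp / (tp + fn);
    }
}
```

For labels `{T, T, T, F, F, F, F, F}` and predictions `{T, T, F, T, F, F, F, F}`, the counts are TP = 2, FN = 1, FP = 1, TN = 4, so accuracy is 6/8 = 0.75 while precision and recall are both 2/3. A model can look fine on accuracy and still miss a third of the positives.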
---
# End of deck