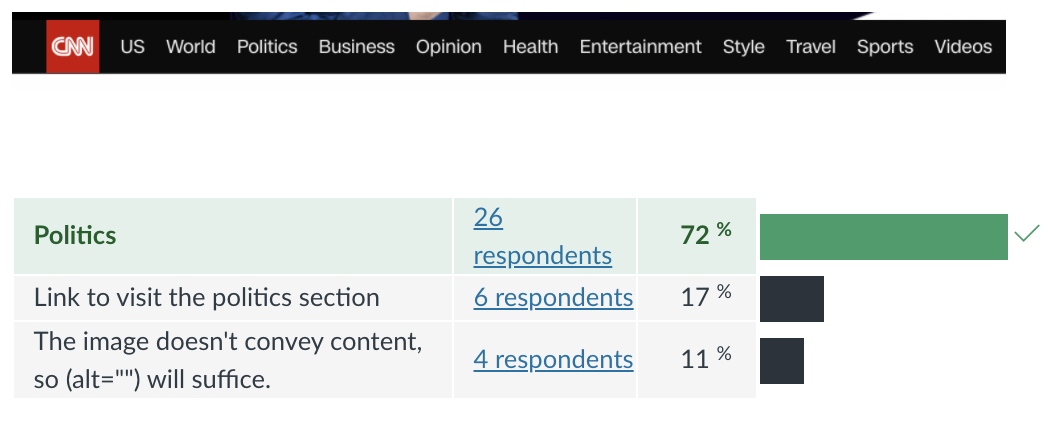

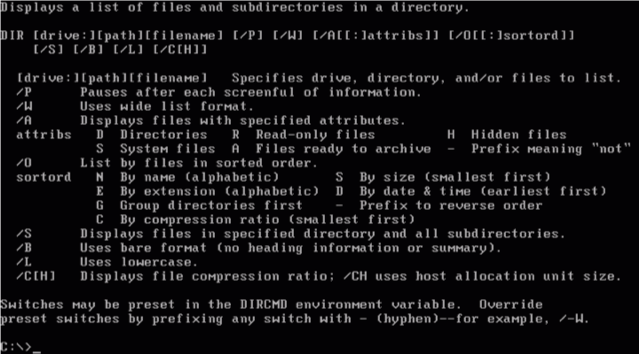

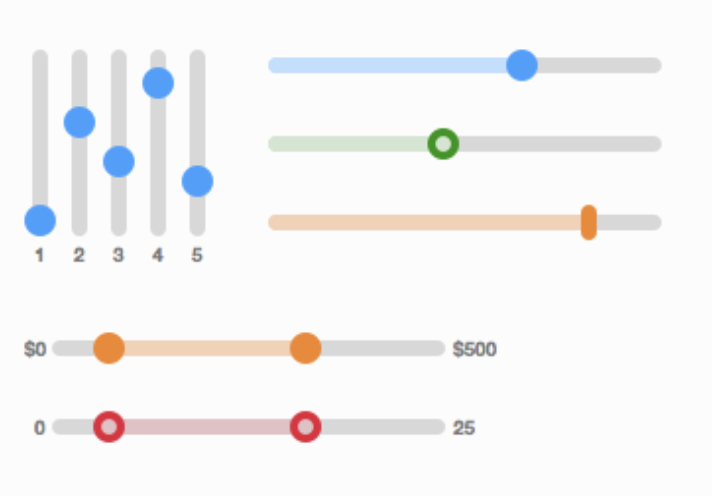

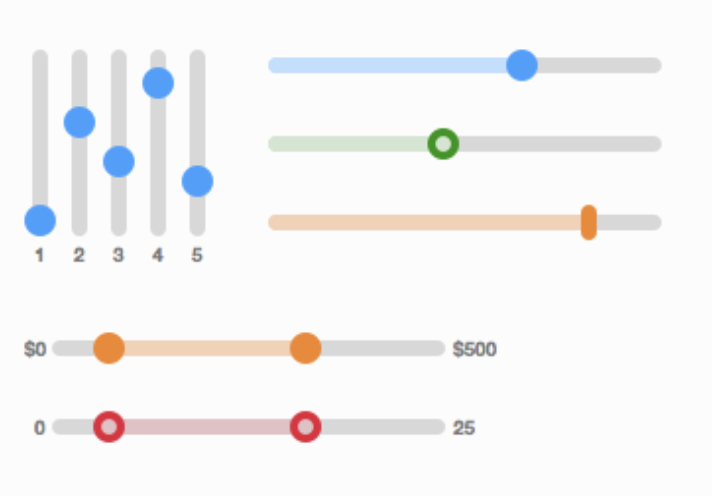

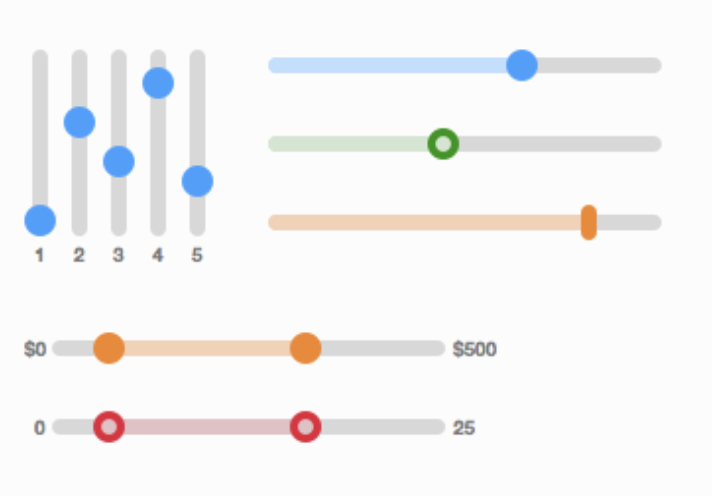

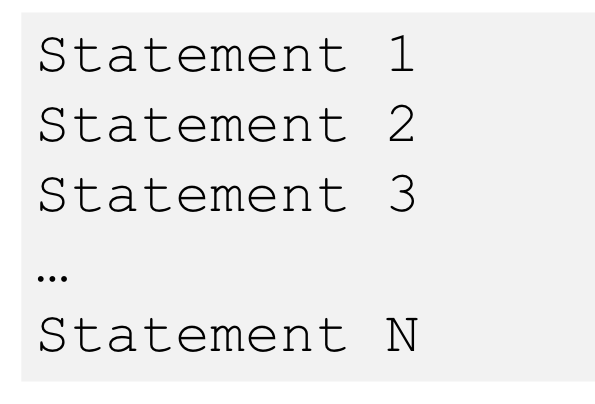

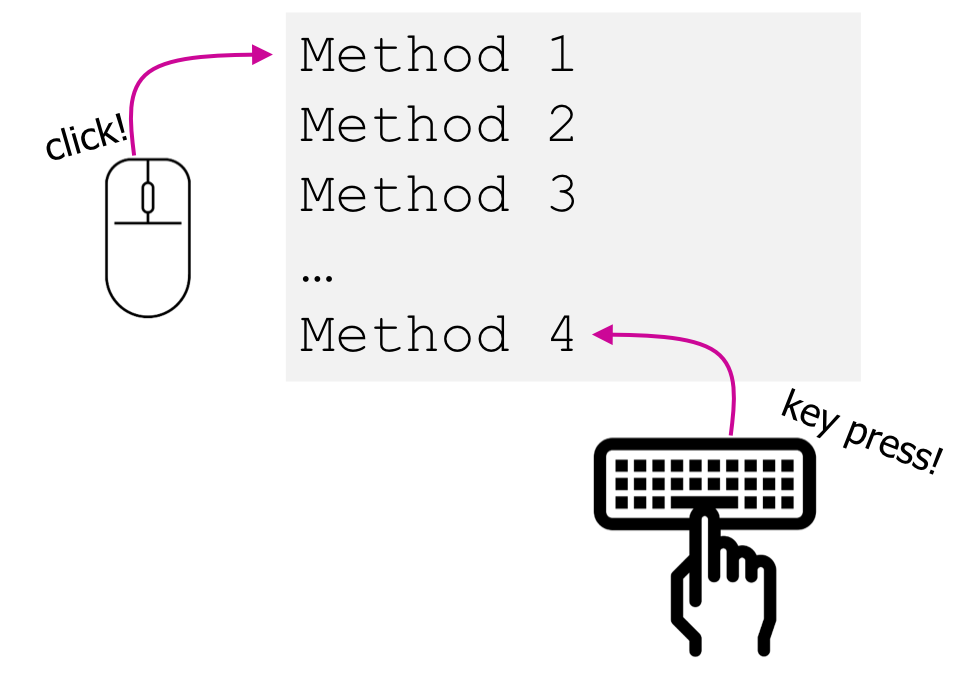

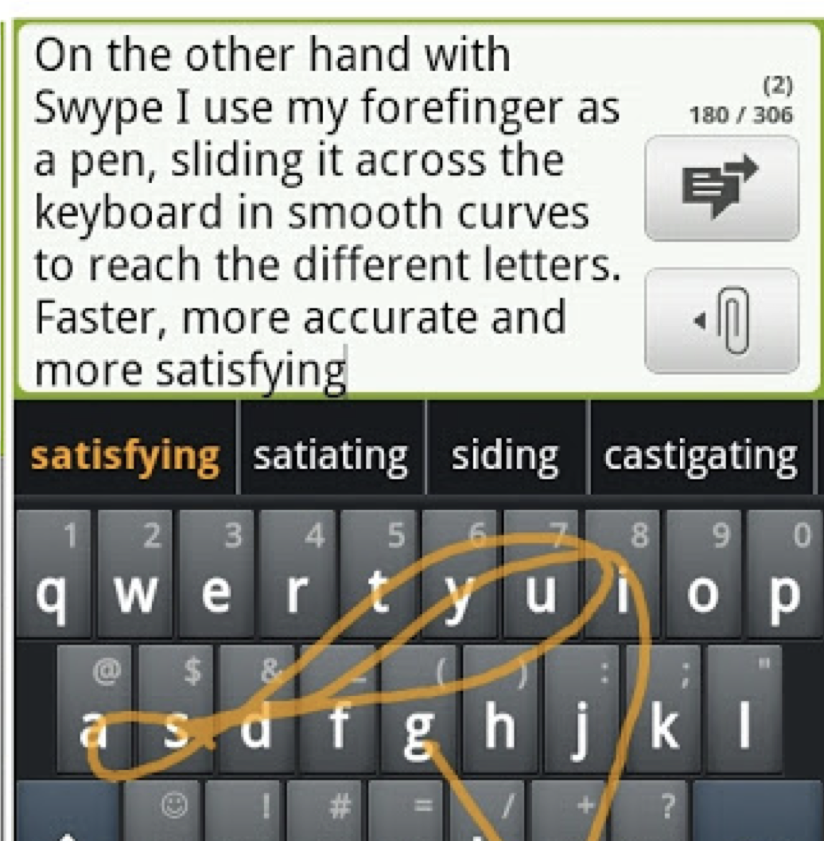

name: inverse
layout: true
class: center, middle, inverse

---

# Write down three things that you reacted to this week

What are some examples?

--

This is event-driven interaction

--

We will be studying the theory and practice of event-driven interactions in Android

---

# Event Handling I - Callbacks, Model View Controller, and Events

Jennifer Mankoff

CSE 340 Winter 2021

---
layout: false

# First, a quick review of the Accessibility Quiz

- Reminder: Required readings are actually required, and you will be tested on them.

--

- ALT Text

What would be the most appropriate alt text for the "Politics" option in the following image?

---
layout: false

# First, a quick review of the Accessibility Quiz

- Reminder: Required readings are actually required, and you will be tested on them.

- ALT Text

What about a spacer?

--

- Set the description to null, or ""

Other things that don't require ALT text?

---

# Automated Testing

See Slides [23](https://courses.cs.washington.edu/courses/cse340/21wi/slides/wk04/android-accessibility.html#23) and [24](https://courses.cs.washington.edu/courses/cse340/21wi/slides/wk04/android-accessibility.html#24) from the [lecture on Android accessibility](https://courses.cs.washington.edu/courses/cse340/21wi/slides/wk04/android-accessibility.html).

>A limitation of automated testing is that it does not catch every accessibility issue. Using only these tests means you could miss issues that come up during manual testing...

Good but incomplete. Need examples for full credit (like whether ALT text is present (automated check) vs whether it is good (requires a human)).

--

Also many wrong answers: automated tests *can* check button size and contrast, for example.

And many less specific answers (i.e. not about accessibility at all)

---

# Situational Disability

Lots of great ideas. I loved the answer that listed a couple of changes for one or the other

>Reading may be difficult in bright sunlight

---

# Situational Disability

Lots of great ideas.
I loved the answer that listed a couple of changes for one or the other

>high contrast font

---

# Situational Disability

Lots of great ideas. I loved the answer that listed a couple of changes for one or the other

>Bigger buttons

--

Simply saying "add a screen reader" is a start, but doesn't necessarily account for additional situational issues (Do I want it reading aloud? Do I have earphones in? Is it noisy outdoors?)

---

[//]: # (Outline Slide)

# Today's goals

- **What is a Model View Controller?**
- What is an event?
- What is a callback?
- ~~How is this all related?~~ (Moved to Wednesday)

---

# What do you think happens to user input?

.left-column30[
<div class="mermaid">
graph LR
ap[Application Program]
t[Toolkit]
w[Window System]
o[OS]
h[Hardware]
classDef yellow font-size:14pt,text-align:center
class ap,w,o,h,hlt,t yellow
</div>
]

.right-column60[
What happens at each level of the hardware stack?
]

---

# What do you think happens to user input?

.left-column30[
<div class="mermaid">
graph LR
ap[Application Program]
t[Toolkit]
w[Window System]
o[OS]
h[Hardware]
classDef yellow font-size:14pt,text-align:center
class ap,w,o,hlt,t yellow
class h green
</div>
]

.right-column60[
What happens at each level of the hardware stack?

- Hardware level: electronics to sense circuits closing or movement
]

---

# What do you think happens to user input?

.left-column30[
<div class="mermaid">
graph LR
ap[Application Program]
t[Toolkit]
w[Window System]
o[OS]
h[Hardware]
classDef yellow font-size:14pt,text-align:center
class ap,h,hlt,t yellow
class o,w green
</div>
]

.right-column60[
What happens at each level of the hardware stack?

- Hardware level: electronics to sense circuits closing or movement
- OS: "Interrupts" that tell the Window system something happened
- Window system: Tells which window received the input
]

---

# What do you think happens to user input?
.left-column30[
<div class="mermaid">
graph LR
ap[Application Program]
t[Toolkit]
w[Window System]
o[OS]
h[Hardware]
classDef yellow font-size:14pt,text-align:center
class ap,w,o,hlt,h yellow
class t green
</div>
]

.right-column60[
What happens at each level of the hardware stack?

- Hardware level: electronics to sense circuits closing or movement
- OS: "Interrupts" that tell the Window system something happened
- Window system: Tells which window received the input
- Toolkit: defines how the app developer will use these events
]

---

# Interacting with the User

.left-column50[
Command line interfaces
]

--

.right-column50[
- Think about the programs you wrote in 142/143 or in prior classes that took user input
- What control flow structure did you use to get input repeatedly from the user?
- Did the system continue to work or did it wait for the user input?
]

---

# Interacting with the User

.left-column50[
Command line interfaces
]

.right-column50[
Mac Desktop interface
]

???

- Event-Driven Interfaces (GUIs)
  - Interaction driven by user
  - UI constantly waiting for input events
  - Pointing & text input, graphical output

---

# Windowing System does the setup for this

.left-column[
<div class="mermaid">
graph LR
ap[Application Program]
hlt[High Level Tools]
t[Toolkit]
w[Window System]
o[OS]
h[Hardware]
classDef yellow font-size:14pt,text-align:center
classDef green font-size:14pt,text-align:center
class ap,o,h,hlt,t yellow
class w green
</div>
]

.right-column[
The Window System layer allows modern UIs to happen. It

- provides each view (app) with an independent drawing interface.
- ensures that the display gets updated to reflect changes
]

--

.right-column[
**But what happens after the app gets the user's input?**
]

---

# Model View Controller (MVC)

.left-column60[
View for a slider? (New definition of View: what does it show the user)
]

.right-column40[
]

---

# Model View Controller (MVC)

.left-column60[
View for a slider?
(What does it show the user)

Controller for a slider? (What is the underlying logic of the interactor?)
]

.right-column40[
]

---

# Model View Controller (MVC)

.left-column60[
View for a slider? (What does it show the user)

Controller for a slider? (What is the underlying logic of the interactor?)

Model for a slider? (What is its state?)
]

.right-column40[
]

---

# Model View Controller (MVC)

.left-column70[
<div class="mermaid">
sequenceDiagram
loop Every time user provides input
Note right of View: User provides input
View->>Controller: Input
Controller->>Model: Change state
Model->>Controller: Update state of View(s)
Controller->>View: Triggers redraw
Note right of View: User sees response
end
</div>
]

---

# Example: Fingerprint entry for door lock

.left-column70[
<div class="mermaid">
sequenceDiagram
Note right of View: User provides input
View->Controller: User Fingerprint ID
Controller->Model: Update Lock to open
Model->Controller: Lock is open
Controller->View: Redraw/open door
Note right of View: User sees response
</div>
]

---

# Model View Controller (MVC)

From [Wikipedia]()

>MVC is a software design pattern commonly used for developing user interfaces which divides the related program logic into three interconnected elements.

- *Model* - a representation of the state of your application (or a single view)
- *View* - the visual representation presented to the user
- *Controller* - communicates between the model and the view
  - Handles changing the model based on user input
  - Retrieves information from the model to display on the view

--

MVC exists within each View as well as for the overall interface

---

# Where is the MVC in your code?

- Model
  - May be stored in the application
  - Persistent state must be stored *outside* the application (filesystem, database, etc.)

---

# Where is the MVC in your code?

- Model
- View (Output) -- we need to show people feedback.
  - What did we learn about how to do this?
  - What causes the screen to update?
  - How are things laid out on screen?

---

# Where is the MVC in your code?

- Model
- View (Output)
- Controller (Input)
  - We need to know when people are doing things. This needs to be event-driven.
- Responding to Users: Event Handling
  - When a user interacts with our apps, Android creates **events**
  - As app developers, we react by "listening" for events and responding appropriately

---

| Procedural | Event Driven |
| :--: | :--: |
| |<br><br><br><br>|
| Code is executed in sequential order | Code is executed based upon events |

---
layout: false

[//]: # (Outline Slide)

# Today's goals

- What is a Model View Controller?
- **What is an event?**
- What is a callback?
- ~~How is this all related?~~ (Moved to Wednesday)

---

# But what is an Event?

A representation of user input

Generally, input is harder than output

- More diversity, less uniformity
- More affected by human properties

---

# Device Independence

- We need device independence to handle a variety of ways to get input
- We need a uniform and higher level abstraction for input (events)

---

# More on events

- Sampled devices
  - Handled as “incremental change” events
  - Each measurable change: a new event with new value
- Device differences
  - Handled implicitly by only generating events they can generate
- Recognition Based Input?
  - Yes, can generate events for this too

---

# Where does an Event come from?

Consider the "location" of an event...

What is different about a joystick, a touch screen, and a mouse?

???

- Mouse was originally just a 1:1 mapping in 2 dimensions == absolute location; bounded
- Joystick is relative (maps movement into rate of change in location); unbounded
- Touch screen is absolute; bounded
- What about today's mouse? Lifting and moving?

--

- Mouse was originally just a 1:1 mapping in 2 dimensions == absolute location; bounded
- Joystick is relative (maps movement into rate of change in location); unbounded
- Touch screen is absolute; bounded

--

What about the modern mouse? Lifting and moving?
--

How about a Wii controller?

---

# Interaction techniques / Components make input devices effective

.left-column[]

.right-column[
Now consider text entry (approximate words per minute):

- 60-80 (keyboards; Twiddler)
- ~20 (soft keyboards)
- ~50? Swype – but is it an input device?
]

---

# Higher level abstraction

Logical Device Approach:

- Valuator → returns a scalar value (like a slider)
- Button → returns integer value
- Locator → returns position on a logical view surface
- Keyboard → returns character string
- Stroke → obtain sequence of points

???

- Can obscure important differences -- hence use inheritance
- Discussion of mouse vs pen -- what are some differences?
- Helps us deal with a diversity of devices
- Make sure everyone understands types of events
- Make sure everyone has a basic concept of how one registers listeners

---

# Not really satisfactory...

Doesn't capture full device diversity

| Event based devices | | Sampled devices |
| -- | -- | -- |
| Time of input determined by user | | Time of input determined by program |
| Value changes only when activated | | Value is continuously changing |
| e.g.: button | | e.g.: mouse |

???

Capability differences

- Discussion of mouse vs pen - what are some differences?

---

# Contents of Event Record

Think about your real world event again. What do we need to know?

**What**: Event Type

**Where**: Event Target

**When**: Timestamp

**Value**: Event-specific variable

**Context**: What was going on?

???

Discuss each with examples

---

# Contents of Event Record

What do we need to know about each UI event?

**What**: Event Type (mouse moved, key down, etc.)

**Where**: Event Target (the input component)

**When**: Timestamp (when did event occur)

**Value**: Mouse coordinates; which key; etc.

**Context**: Modifiers (Ctrl, Shift, Alt, etc.); Number of clicks; etc.

???
Discuss each with examples

---

# Input Event Goals

Device Independence

- Want / need device independence
- Need a uniform and higher level abstraction for input

Component Independence

- Given a model for representing input, how do we get inputs delivered to the right component?

???

---
layout: false

[//]: # (Outline Slide)

# Today's goals

- What is a Model View Controller?
- What is an event?
- **What is a callback?**
- ~~How is this all related?~~ (Moved to Wednesday)

---

# What is a callback?

It's just the method that gets called when an event is delivered

Not unique to Android

We will review the general concept

From [Wikipedia](https://en.wikipedia.org/wiki/Callback_(computer_programming)):

> A callback ... is any executable code that is passed as an argument to other code;
> that other code is expected to call back (execute) the argument at a given time.

---

# Callback Exercise

1. Hopefully you designed your Zoo.
2. Put your enclosure name in the chat now
3. Remind yourself what sound you will make.
4. Wait until I call your enclosure name, then make the sound for the requested number of seconds.

---

# Callback Exercise

As code, imagine that each enclosure has implemented the following interface to define the behavior.
(Note: Listeners are a type of event handling callback in Java/Android)

```java
public interface EnclosureListener {
    public void makeNoise(int numSeconds);
}
```

For example:

```java
public class LionCage implements EnclosureListener {
    ...
    public void makeNoise(int numSeconds) {
        // make the animal noise for the given number of seconds
    }
}
```

---

# Callback Exercise

Further, by telling me the name of your enclosure, you're registering your listener WITH me, the "zookeeper"

```java
public class LionCage implements EnclosureListener {
    ...
    public void makeNoise(int numSeconds) {
        // make the animal noise for the given number of seconds
    }

    public void onCreate(Bundle savedInstanceState) {
        ...
        zookeeper.addEnclosureListener(this);
    }
}
```

---

# Callback Exercise

```java
import java.util.ArrayList;
import java.util.List;

public class Zookeeper {
    // The zookeeper keeps a list of all EnclosureListeners that were registered,
    private final List<EnclosureListener> mEnclosureListeners = new ArrayList<>();

    public final void addEnclosureListener(EnclosureListener listener) {
        if (listener == null) {
            throw new IllegalArgumentException("enclosureListener should never be null");
        }
        mEnclosureListeners.add(listener);
    }

    // And can call on them whenever they need with the right amount of time.
    public void cacophony(int seconds) {
        for (EnclosureListener listener : mEnclosureListeners) {
            listener.makeNoise(seconds);
        }
    }
}
```

---

# Callback Exercise

- The Zookeeper has NO idea what each enclosure will do until the listener is registered and called.
- Each enclosure separately defines how it will react to a `makeNoise` event.
- The "contract" between the zookeeper and the enclosures is the defined `EnclosureListener` interface.

---

# Implementing a callback

There are three ways to implement callbacks in Java

1. Creating a class in a separate file
2. Creating an inner class
3. Creating an anonymous class (like the following)

```java
Arrays.sort(people, new Comparator<Person>() {
    @Override
    public int compare(Person p1, Person p2) {
        return p1.getAge() - p2.getAge();
    }
});
```

---

# Implementing a callback

There are <del>three</del> four ways to implement callbacks in Java

1. Creating a class in a separate file (like `PersonLastNameComparator`)
2. Creating an inner class
3. Creating an anonymous class
4. Using a lambda

```java
Arrays.sort(people, (Person p1, Person p2) -> { return p1.getAge() - p2.getAge(); });
```

---

# Callbacks in Android

- When Android was created, the toolkit developers had *no* idea how every app might want to respond to events
- The toolkit has pre-defined interfaces so apps or components can respond to events such as clicks or touches.
For example:

- The `View` class defines the following listeners as nested interfaces:
  - `View.OnClickListener`
  - `View.OnLongClickListener`
  - `View.OnFocusChangeListener`
  - `View.OnKeyListener`
  - `View.OnTouchListener` (this is used in ColorPicker and Menu by subclassing AppCompatImageView)
  - `View.OnCreateContextMenuListener`

We will come back to these in our next class.

---

# Summary

- MVC: Separation of concerns for user interaction

--

- Events: logical input device abstraction

--

- Callbacks: a programmatic way for our program to get information from, or send information to, the system

---

# Summary: Relating this back to the phone

.left-column30[
<div class="mermaid">
graph LR
ap[Application Program]
t[Toolkit]
w[Window System]
o[OS]
h[Hardware]
classDef yellow font-size:14pt,text-align:center
class ap,w,o,h,hlt,t yellow
</div>
]

.right-column60[
- Hardware level: electronics to sense circuits closing or movement
  - Difference between hardware (Event vs sampled)
  - Sensor based input
- OS: "Interrupts" that tell the Window system something happened
  - Logical device abstraction and types of devices
- Window system: Tells which window received the input
- Toolkit: Packages up Events & calls Callbacks & Redraw
- Application Program:
  - Implements callbacks, stores data
]

---

# End of Deck
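
---

# Appendix: A runnable version of the Callback Exercise

The zoo exercise can be tried outside Android as a plain Java program. The sketch below is one possible arrangement, not the course's reference code: `EnclosureListener`, `LionCage`, `Zookeeper`, `addEnclosureListener`, and `cacophony` come from the slides, while `CallbackDemo`, its `run` method, the shared `log` list, and the owl and frog enclosures are assumptions added for illustration. It registers one listener as a named class, one as an anonymous class, and one as a lambda.

```java
import java.util.ArrayList;
import java.util.List;

// The "contract" between the zookeeper and every enclosure.
interface EnclosureListener {
    void makeNoise(int numSeconds);
}

// A named class that implements the callback.
class LionCage implements EnclosureListener {
    private final List<String> log; // hypothetical: records noises so they can be inspected

    LionCage(List<String> log) {
        this.log = log;
    }

    @Override
    public void makeNoise(int numSeconds) {
        log.add("Lion roars for " + numSeconds + "s");
    }
}

// The zookeeper only knows the interface, never the concrete enclosures.
class Zookeeper {
    private final List<EnclosureListener> mEnclosureListeners = new ArrayList<>();

    public void addEnclosureListener(EnclosureListener listener) {
        if (listener == null) {
            throw new IllegalArgumentException("enclosureListener should never be null");
        }
        mEnclosureListeners.add(listener);
    }

    // Calls every registered listener back, in registration order.
    public void cacophony(int seconds) {
        for (EnclosureListener listener : mEnclosureListeners) {
            listener.makeNoise(seconds);
        }
    }
}

// Hypothetical driver class, not part of the slides.
class CallbackDemo {
    // Registers three listeners, triggers them once, and returns what they logged.
    static List<String> run(int seconds) {
        List<String> log = new ArrayList<>();
        Zookeeper zookeeper = new Zookeeper();

        // 1. Named class
        zookeeper.addEnclosureListener(new LionCage(log));

        // 2. Anonymous class
        zookeeper.addEnclosureListener(new EnclosureListener() {
            @Override
            public void makeNoise(int numSeconds) {
                log.add("Owl hoots for " + numSeconds + "s");
            }
        });

        // 3. Lambda (works because the interface has exactly one method)
        zookeeper.addEnclosureListener(numSeconds -> log.add("Frog croaks for " + numSeconds + "s"));

        zookeeper.cacophony(seconds);
        return log;
    }

    public static void main(String[] args) {
        for (String line : run(3)) {
            System.out.println(line);
        }
    }
}
```

Running `CallbackDemo.main` prints one line per enclosure, in the order the listeners were registered. The zookeeper never changes when a new kind of enclosure is added, which is the same property that lets the Android toolkit deliver events to apps it knows nothing about.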