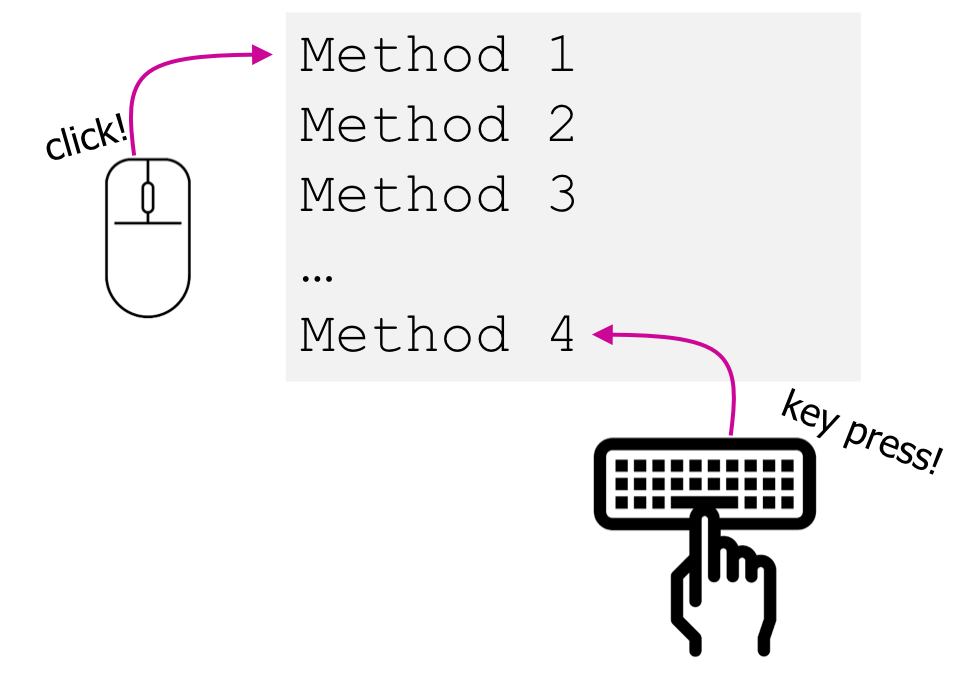

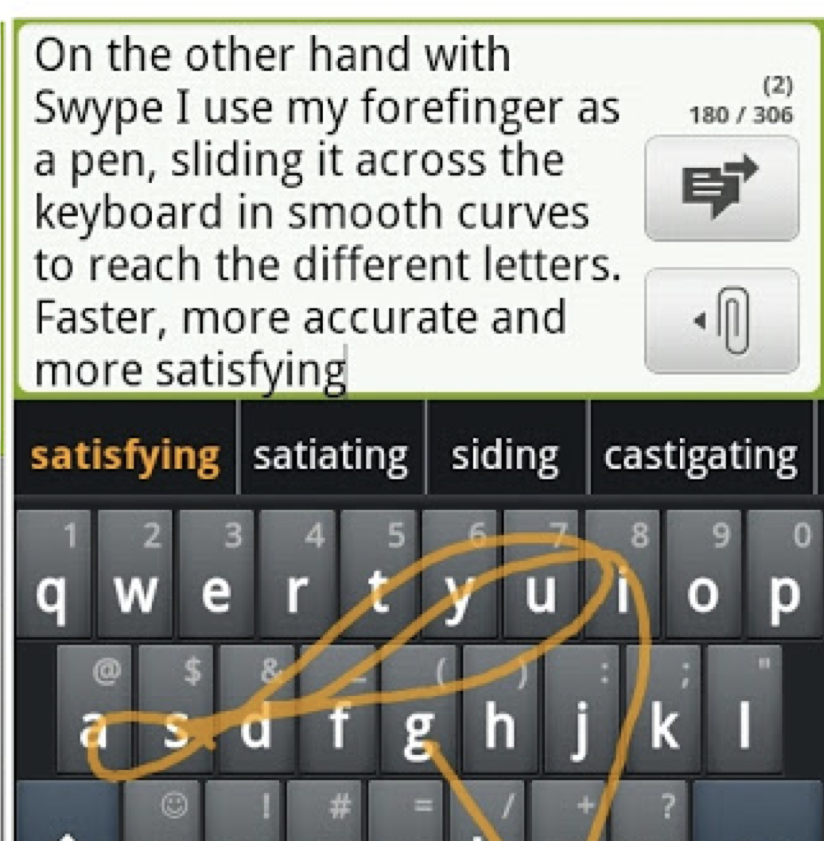

name: inverse
layout: true
class: center, middle, inverse

---
# Write down three things that you reacted to this week

What are some examples?

--

This is event-driven interaction

---
# Model View Controller, Input Devices and Events

Jennifer Mankoff

CSE 340 Winter 2020

---
name: normal
layout: true
class:

---
[//]: # (Outline Slide)
.left-column[
# Today's goals
]
.right-column[
Discuss how input is handled
- Difference between hardware and interaction techniques / Components
- Logical device abstraction and types of devices
- Sensor based input
- Event vs sampled devices
- Events as an abstraction

Canvas quiz on our class experiment

Class Evaluation
]

---
# How do you think an app responds to input?

---
.left-column-half[
# Responding to Users: Model View Controller

<div class="mermaid">
graph TD
View(View<sup>*</sup>) --Input<sup>1</sup>--> Presenter(Controller)
Presenter --Output<sup>5</sup>--> View
Presenter --Updates Model<sup>2</sup>-->Model(Model<sup>0,3</sup>)
Model --State Change<sup>4</sup>-->Presenter

class View,Presenter blue
class User,Model bluegreen
</div>
]
.right-column-half[
Suppose this is a digital phone app

<sup>*</sup> User interacts through View(s) (the interactor hierarchy)<br>
<sup>0</sup> Model State: _Current person:_ Adam; _Lock state:_ closed<br>
<sup>1</sup> Password entry. Trigger _Event Handling_<br>
<sup>2</sup> Change person to Jen; App unlocked<br>
<sup>3</sup> Model State: _Current person:_ Jen; _Lock state:_ open<br>
<sup>4</sup> Change state of View(s)<br>
<sup>5</sup> Trigger _Redraw_ and show
]

???
Sketch out key concepts
- Input -- we need to know when people are doing things. This needs to be event driven.
- Output -- we need to show people feedback. This cannot ‘take over’, i.e., it needs to be multithreaded.
- Back end -- we need to be able to talk to the application.
- State machine -- we need to keep track of state.
- What don’t we need?
  We don’t need to know about the rest of the UI, probably, etc.
- Model View Controller -- this works within components (draw diagram), but also represents the overall structure (ideally) of a whole user interface
- NOTE: Be careful to write any new vocabulary words on the board and define as they come up.

---
.left-column-half[
# Responding to Users: Model View Controller

<div class="mermaid">
graph TD
View(View) --Input<sup>1</sup>--> Presenter(Controller)
Presenter --Output<sup>5</sup>--> View
Presenter --Updates Model<sup>2</sup>-->Model(Model<sup>0,3</sup>)
Model --State Change<sup>4</sup>-->Presenter

class View,Presenter blue
class User,Model bluegreen
</div>
]
.right-column-half[
Suppose this is a fancy speech-recognition based digital door lock instead

<sup>*</sup> User interacts through View(s) (the interactor hierarchy)<br>
<sup>0</sup> Model State: _Current person:_ Adam; _Lock state:_ closed<br>
<sup>1</sup> Password entry. Trigger _Event Handling_<br>
<sup>2</sup> Change person to Jen; App unlocked<br>
<sup>3</sup> Model State: _Current person:_ Jen; _Lock state:_ open<br>
<sup>4</sup> Change state of View(s)<br>
<sup>5</sup> Trigger _Redraw_ and show
]

???
Sketch out key concepts
- Input -- we need to know when people are doing things. This needs to be event driven.
- Output -- we need to show people feedback. This cannot ‘take over’, i.e., it needs to be multithreaded.
- Back end -- we need to be able to talk to the application.
- State machine -- we need to keep track of state.
- What don’t we need? We don’t need to know about the rest of the UI, probably, etc.
- Model View Controller -- this works within components (draw diagram), but also represents the overall structure (ideally) of a whole user interface
- NOTE: Be careful to write any new vocabulary words on the board and define as they come up.
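
---
# Sketch: the numbered MVC steps in code

The numbered steps in the lock example can be traced in a few lines of code. This is a minimal, framework-free sketch in Python, not Android code: the class names (`LockModel`, `LockView`, `LockController`), the method names, and the password `"hunter2"` are all hypothetical and chosen only for illustration. The comments refer to the superscript numbers in the diagram.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LockModel:
    """Model (0, 3): application state plus state-change listeners."""
    current_person: str = "Adam"
    locked: bool = True
    listeners: List[Callable[["LockModel"], None]] = field(default_factory=list)

    def unlock_for(self, person: str) -> None:
        # Step 2: the controller updates the model.
        self.current_person = person
        self.locked = False
        # Step 4: the model announces its state change.
        for listener in self.listeners:
            listener(self)


class LockView:
    """View (*): forwards input to a callback and redraws when asked."""

    def __init__(self) -> None:
        self.on_password: Callable[[str, str], None] = lambda person, password: None
        self.screen = ""

    def enter_password(self, person: str, password: str) -> None:
        # Step 1: user input is delivered as an event to whoever is listening.
        self.on_password(person, password)

    def redraw(self, text: str) -> None:
        # Step 5: show the new state.
        self.screen = text


class LockController:
    """Controller: the only piece that knows about both Model and View."""

    def __init__(self, model: LockModel, view: LockView, passwords: Dict[str, str]) -> None:
        self.model, self.view, self.passwords = model, view, passwords
        view.on_password = self.handle_password            # listen for input events
        model.listeners.append(self.handle_state_change)   # listen for model changes
        self.handle_state_change(model)                     # initial draw

    def handle_password(self, person: str, password: str) -> None:
        # Event handling: only a matching password changes the model.
        if self.passwords.get(person) == password:
            self.model.unlock_for(person)

    def handle_state_change(self, model: LockModel) -> None:
        state = "closed" if model.locked else "open"
        self.view.redraw(f"Current person: {model.current_person}; Lock state: {state}")


model = LockModel()
view = LockView()
controller = LockController(model, view, {"Jen": "hunter2"})
print(view.screen)   # Current person: Adam; Lock state: closed
view.enter_password("Jen", "hunter2")
print(view.screen)   # Current person: Jen; Lock state: open
```

Note that the View and the Model never refer to each other. Swapping the password View for a speech-recognition View would only change which object calls `on_password`.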

---
# MVC for a button (or any View)

MVC exists within each View as well as for the overall interface

Diagram it
- Model
- View
- Controller

---
.left-column-half[
# MVC in Android

<div class="mermaid">
graph TD
View(Passive View) --user events--> Presenter(Presenter: <BR>Supervising Controller)
Presenter --updates view--> View
Presenter --updates model--> Model(Model)
Model --state-change events--> Presenter

class View,Presenter blue
class Model bluegreen
</div>
]
.right-column-half[
Applications typically follow this architecture
- What did we learn about how to do this?
- What causes the screen to update?
- How are things laid out on screen?
]

???
- Relationship of MVC to Android software stack
- Discuss Whorfian effects

--
.right-column-half[
Responding to Users: Event Handling
- When a user interacts with our apps, Android creates **events**
- As app developers, we react by "listening" for events and responding appropriately
]

---
|Procedural | Event Driven |
| :--: | :--: |
||<br><br><br><br>|
|Code is executed in sequential order | Code is executed based upon events|

---
# But what is an Event? Where does it come from?

Generally, input is harder than output
- More diversity, less uniformity
- More affected by human properties

---
# Consider Location

What is different about a joystick, a touch screen, and a mouse?

???
Mouse was originally just a 1:1 mapping in 2 dimensions == absolute location; bounded

Joystick is relative (maps movement into rate of change in location); unbounded

Touch screen is absolute; bounded

What about today's mouse? Lifting?

--
- Mouse was originally just a 1:1 mapping in 2 dimensions == absolute location; bounded
- Joystick is relative (maps movement into rate of change in location); unbounded
- Touch screen is absolute; bounded

--
What about the modern mouse? Lifting?

--
How about a Wii controller?

---
# Is this an input device?

.left-column-half[]

--
.right-column-half[No … it’s an interaction technique. Over 50 WPM!]

???
Who/what/where/etc

Dimensionality – how many dimensions can a device sense?

Range – is a device bounded or unbounded?

Mapping – is a device absolute or relative?

--
.right-column-half[
Considerations:
- Dimensionality – how many dimensions can a device sense?
- Range – is a device bounded or unbounded?
- Mapping – is a device absolute or relative?
]

---
# Interaction techniques / Components make input devices effective

For example, consider text entry speeds:
- 60-80 WPM (keyboards; Twiddler)
- ~20 WPM (soft keyboards)
- ~50 WPM? Swype – but is it an input device?

---
# Modern hardware and software are starting to muddy the waters around this

???
Add OLEDs to keys -> reconfigurable label displays

---
# Is there a higher level abstraction here?

--
Logical Device Approach:
- Valuator -> returns a scalar value
- Button -> returns integer value
- Locator -> returns position on a logical view surface
- Keyboard -> returns character string
- Stroke -> obtain sequence of points

???
- Can obscure important differences -- hence use inheritance
- Discussion of mouse vs pen -- what are some differences?
- Helps us deal with a diversity of devices
- Make sure everyone understands types of events
- Make sure everyone has a basic concept of how one registers listeners

---
# Not really satisfactory...

Doesn't capture full device diversity

| Event based devices | | Sampled devices |
| -- | -- | -- |
| Time of input determined by user | | Time of input determined by program |
| Value changes only when activated | | Value is continuously changing |
| e.g.: button | | e.g.: mouse |

???
Capability differences
- Discussion of mouse vs pen -- what are some differences?

---
# Contents of Event Record

Think about your real world event again. What do we need to know?

**What**: Event Type

**Where**: Event Target

**When**: Timestamp

**Value**: Event-specific variable

**Context**: What was going on?

???
Discuss each with examples

---
# Contents of Event Record

What do we need to know about each UI event?

**What**: Event Type (mouse moved, key down, etc.)

**Where**: Event Target (the input component)

**When**: Timestamp (when the event occurred)

**Value**: Mouse coordinates; which key; etc.

**Context**: Modifiers (Ctrl, Shift, Alt, etc.); Number of clicks; etc.

???
Discuss each with examples

---
# Summary

- MVC: Separation of concerns for user interaction

--
- Events: logical input device abstraction

--
- We model everything as events
  - Sampled devices
    - Handled as “incremental change” events
    - Each measurable change: a new event with new value
  - Device differences
    - Handled implicitly by only generating events they can generate
  - Recognition Based Input?
    - Yes, can generate events for this too

---
layout: false

# Input Event Goals

Device Independence
- Want / need device independence
- Need a uniform and higher level abstraction for input

Component Independence
- Given a model for representing input, how do we get inputs delivered to the right component?

???

---
# Review: Logical Device Approach

- Valuator → returns a scalar value
- Button → returns integer value
- Locator → returns position on a logical view surface
- Keyboard → returns character string
- Stroke → obtain sequence of points

---
# End of Deck