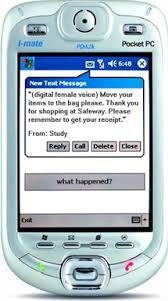

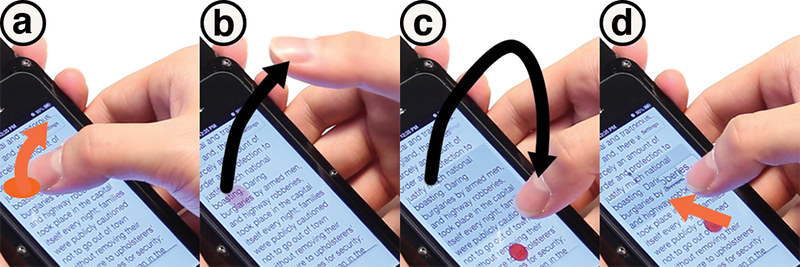

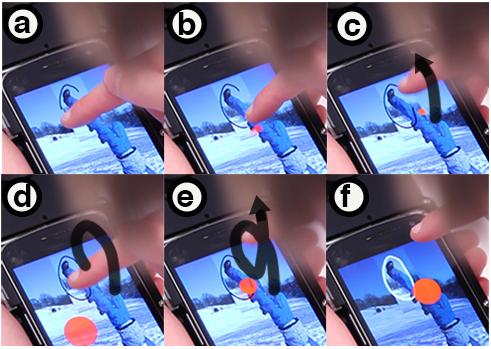

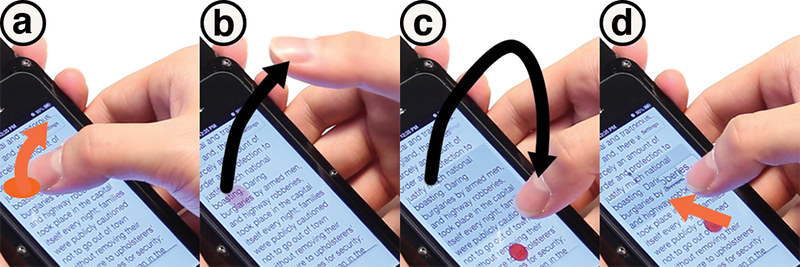

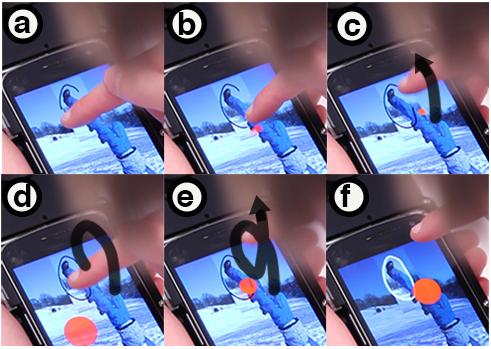

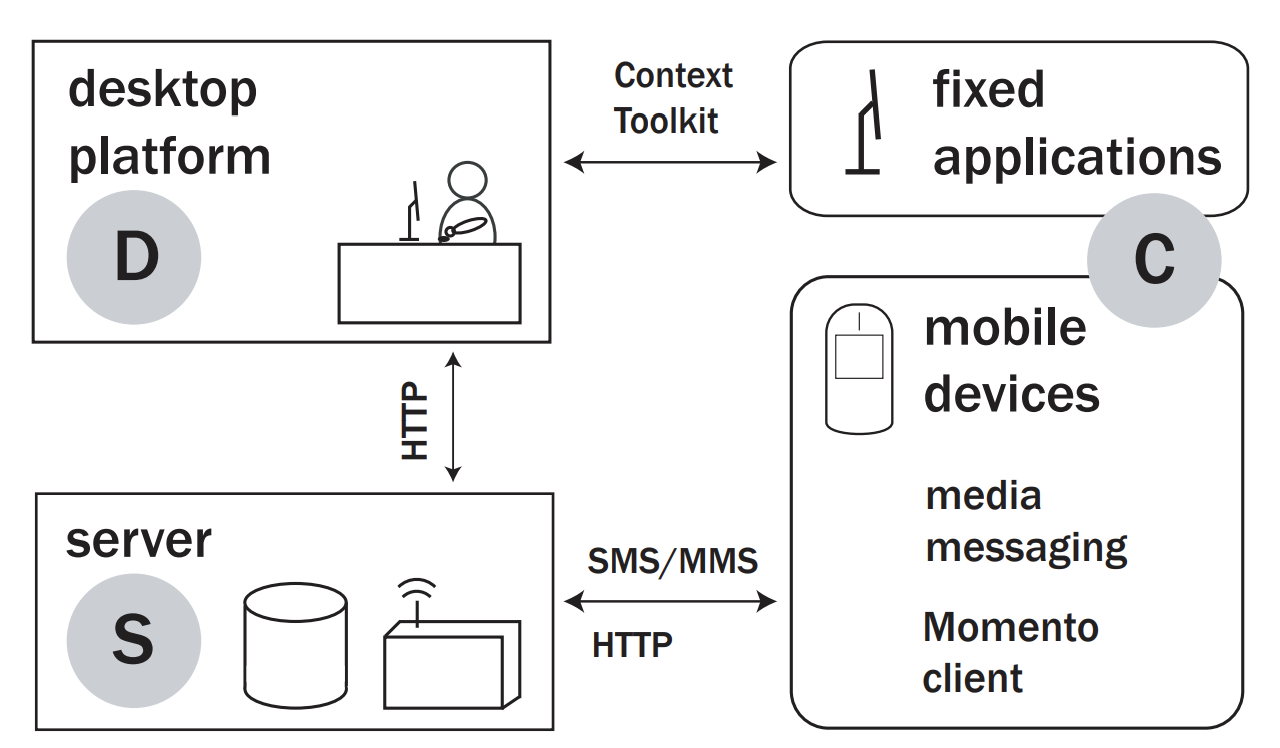

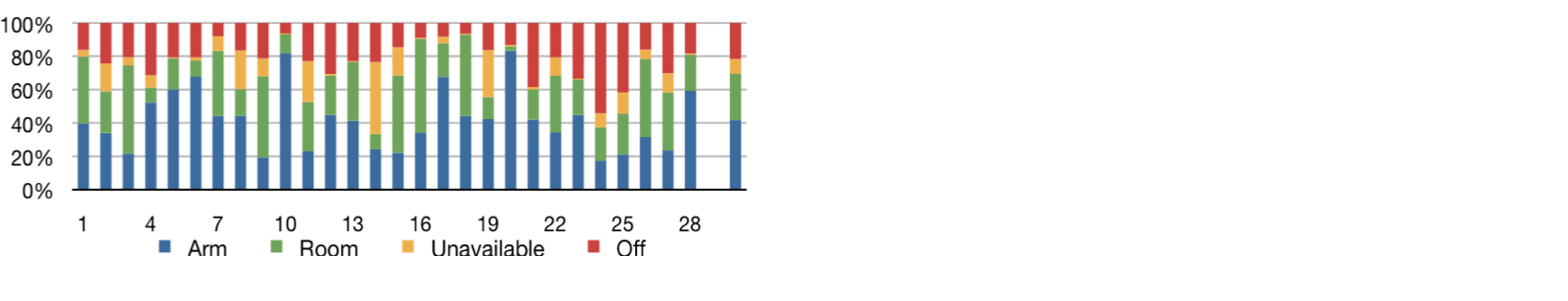

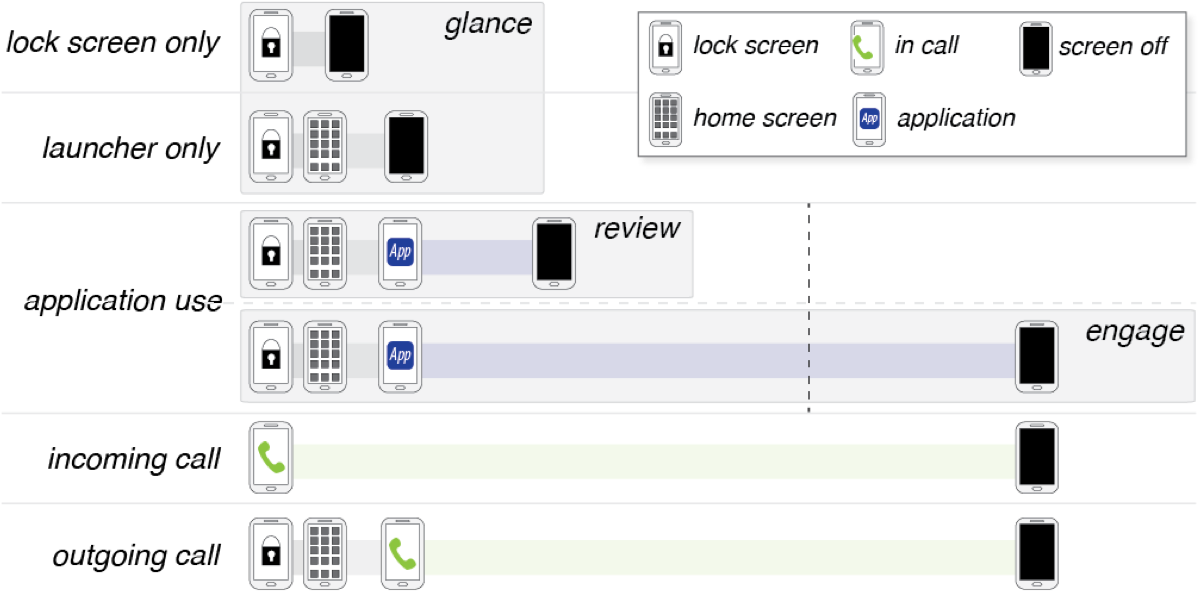

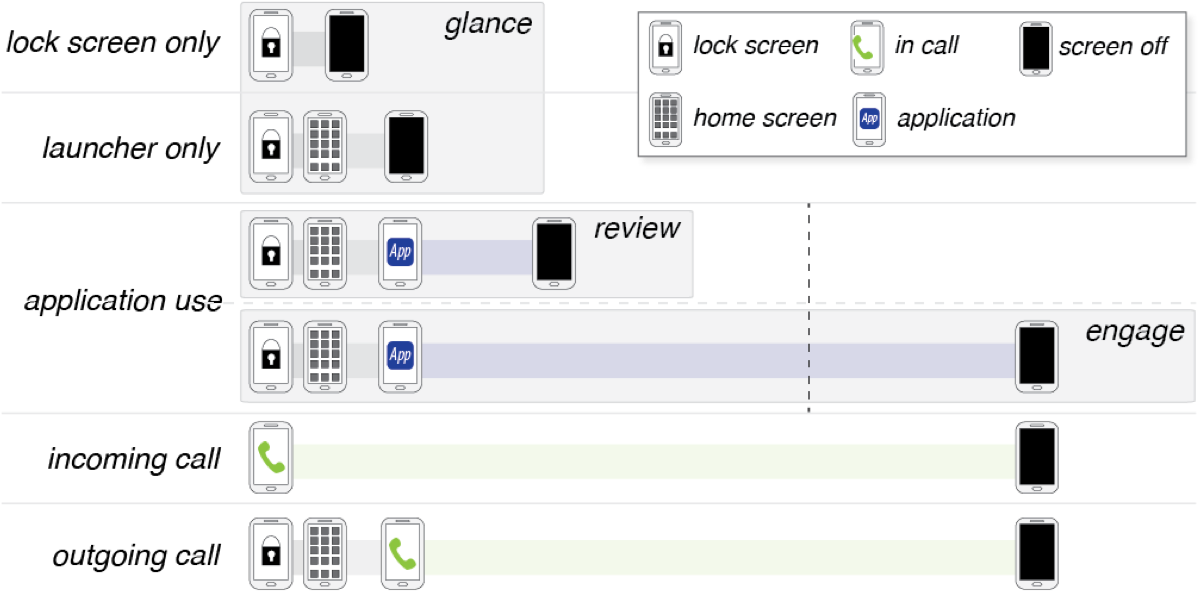

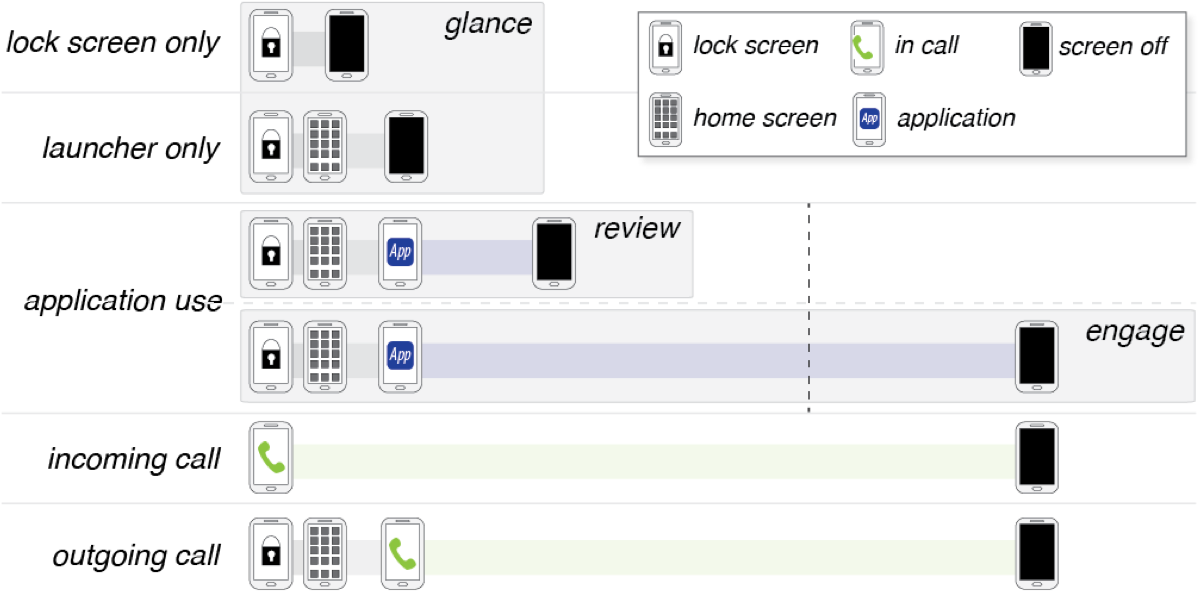

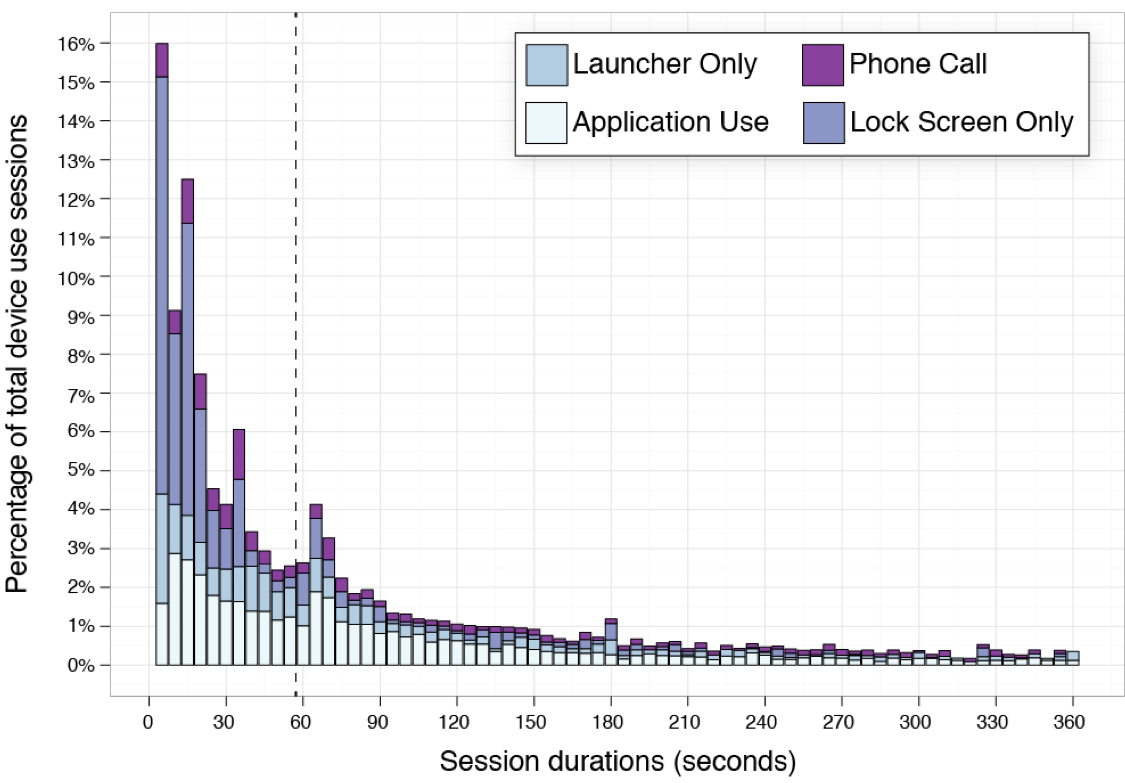

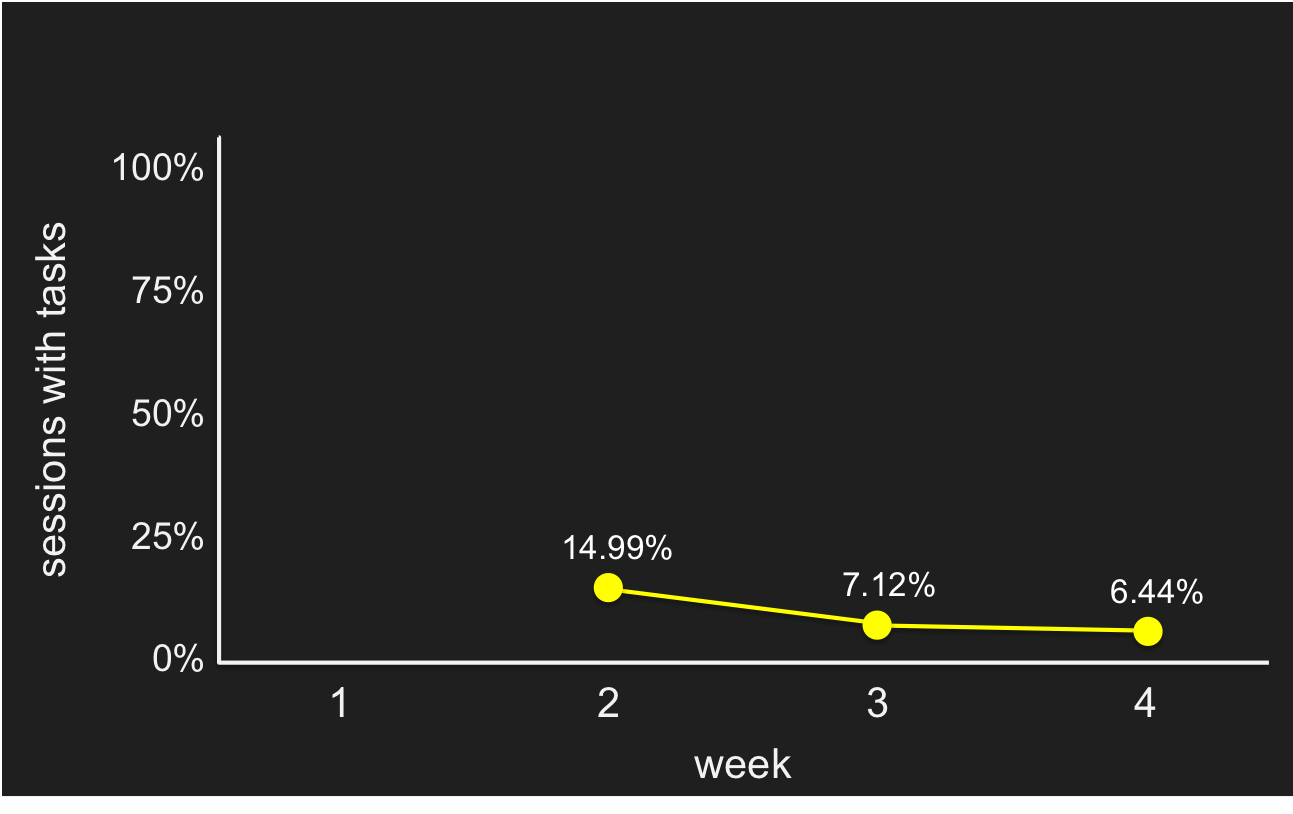

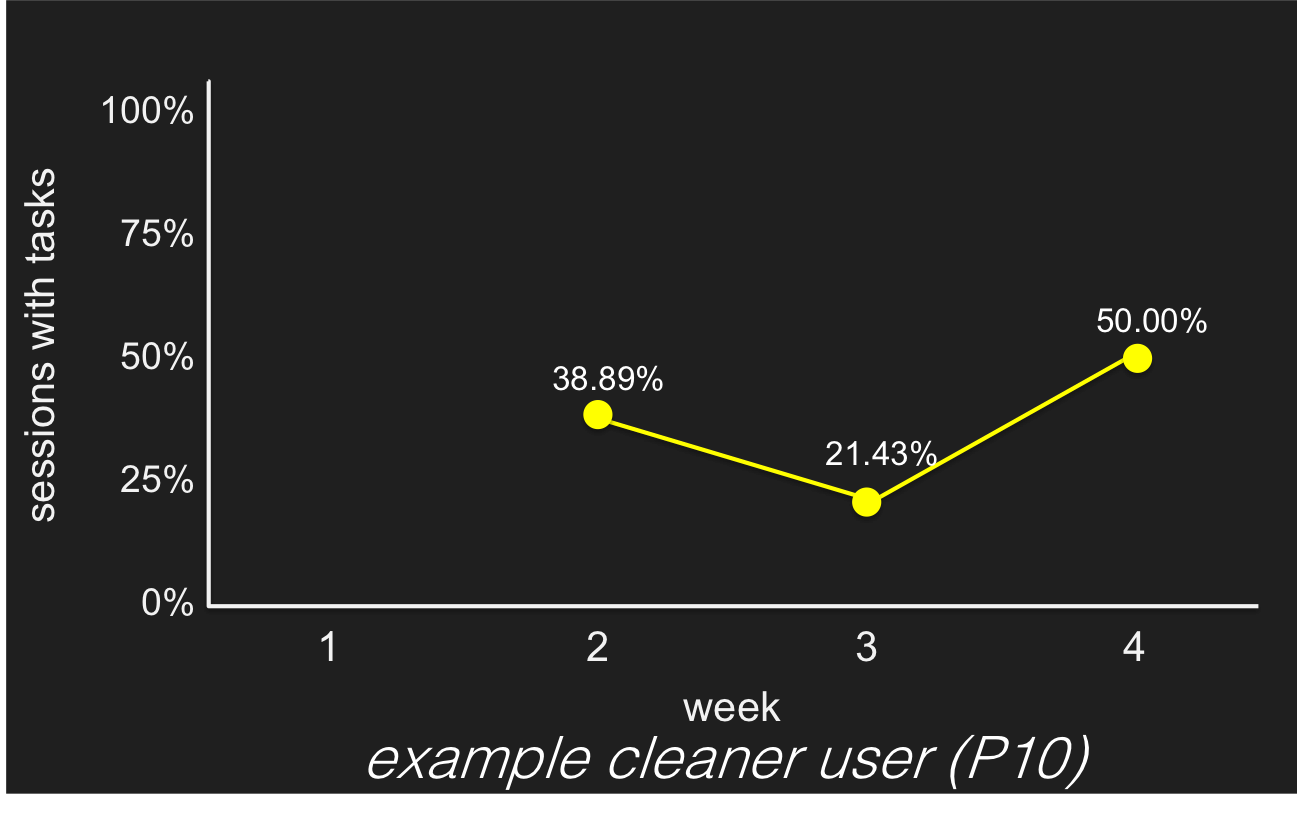

name: inverse
layout: true
class: center, middle, inverse
---
background-image: url(img/context/people-background.png)

# The Physical Phone

Jennifer Mankoff

CSE 340 Spring 2020
---
layout: false

[//]: # (Outline Slide)
.left-column[# Today's goals]
.right-column[
- Talk about myths about phone use
- Talk about what a phone can sense
]
---
.title[Interface Structure]
.body[
<div class="mermaid">
graph TD
I(Input) --Explicit Interaction--> A(Application)
A --> Act(Action)

classDef normal fill:#e6f3ff,stroke:#333,stroke-width:2px;
class U,C,A,I,S,E,Act,Act2 normal
</div>
]
---
.title[Context Aware Computing Interface Structure]
.body[
<div class="mermaid">
graph TD
I(Input) --Explicit Interaction--> A(Application)
A --> Act(Action)
U(User) --Implicit Sensing--> C(Context-Aware Application)
S(System) --Implicit Sensing--> C
E(Environment) --Implicit Sensing--> C
C --> Act2(Action)

classDef normal fill:#e6f3ff,stroke:#333,stroke-width:2px;
class U,C,A,I,S,E,Act,Act2 normal
</div>
]
---
# Example: COVID-19 Contact Tracing

.left-column-half[  ]
.right-column-half[
- Install an app on your phone
- Turn on Bluetooth
- Keep track of every Bluetooth ID you see
]
---
.left-column[  ]
.right-column[
## Context Awareness is about *Human Activity*

- Useful, and necessary, input to context-aware systems
- Easier and easier to collect information about human activity
]
.footnote[[Image credit](https://www.techherd.co/iot-mobile-threats/) ]

???
Improved software and inferencing

Improved sensors
---
.left-column[
## Computational Behavioral Imaging
]
.right-column[  ]
---
.left-column[
## Computational Behavioral Imaging
]
.right-column[   ]
---
.left-column[
## Computational Behavioral Imaging
]
.right-column[   ]
---
.left-column[  ]
.right-column[
## Phones

People already carry them

Capture many aspects of behavior
- Interactions with information: virtual
- Social engagement: social
- Loads of sensors: physical
]
---
.left-column[
## Assumptions
]
.right-column[
We have made a number of .red.bold[WRONG] assumptions about:
- what smartphones are
- how they are used
]
---
.left-column[
## Assumption #1: We all have smart phones
]
.right-column[
What is a smart phone?
]

???
What do you think?
---
.left-column[
## Assumption #1: We all have smart phones
]
.right-column[
What is a smart phone?
- Runs a complete mobile OS
- Offers computing ability and connectivity
- Includes sensors
]
---
# Types of Sensors

.left-column-half[
- Not just touches: clicks, key presses

| | | |
|--|--|--|
|Accelerometer | Rotation | Screen|
|Applications | Location | Telephony|
|Battery | Magnetometer | Temperature|
|Bluetooth | Network Usage | Traffic|
|Calls | Orientation | WiFi|
|Messaging | Pressure | Processor|
|Gravity | Proximity | Humidity |
|Gyroscope | Light | ... Many More |
]
.right-column-half[
Other Kinds of Sensors:
- Microphone
- Camera
- Multi-touch
- Connected Devices?
]

???
Sampled or event based?
---
# Which Sensors might be useful for contact tracing? Why?

.left-column-half[
microphone/camera/multi-touch/IOT PLUS

| | | |
|--|--|--|
|Accelerometer | Rotation | Screen|
|Applications | Location | Telephony|
|Battery | Magnetometer | Temperature|
|Bluetooth | Network Usage | Traffic|
|Calls | Orientation | WiFi|
|Messaging | Pressure | Processor|
|Gravity | Proximity | Humidity |
|Gyroscope | Light | ... Many More |
]
.right-column-half[
Type your answers in chat!
]
---
# How do you program with sensors?

- `Managers` (e.g. `LocationManager`) let us create and register a listener
- Listeners can receive updates at different intervals, etc.
- Some sensor-specific settings

[https://source.android.com/devices/sensors/sensor-types.html](https://source.android.com/devices/sensors/sensor-types.html)
---
# Implementing Sensing

Android [Awareness API](https://developers.google.com/awareness/)

.left-column-half[
## Turn it on

Must enable it: [Google APIs](https://console.developers.google.com/apis/)

Recent updates to documentation (from 2017): February 2020!
]
.right-column-half[
## Set up callbacks

Two types of context sensing: Snapshots / Fences
]

???
---
# Snapshots

Capture sensor data at a moment in time

Require a single callback, to avoid hanging while sensor data is fetched: `onSnapshot(Response response)`

Setting up the callback (just like callbacks for other events)

```java
setSnapshotListener(Awareness.getSnapshotClient(this).getDetectedActivity(),
        new ActivitySnapshotListener(mUpdate, mResources));
```
---
# Fences

Notify you *every time* a condition is true

Conditional data

3 callbacks: during, starting, stopping

```java
mActivityFenceListener = new ActivityFenceListener(
        // during
        DetectedActivityFence.during(DetectedActivity.WALKING),
        // starting
        DetectedActivityFence.starting(DetectedActivity.WALKING),
        // stopping
        DetectedActivityFence.stopping(DetectedActivity.WALKING),
        this, this, mUpdate);
```

???
What might we use for a location fence? Headphone fence? ...
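---
# Sketch: Snapshot vs Fence semantics

To make the difference concrete, here is a minimal, Android-free sketch. It does not use the Awareness API: `FenceSketch`, `snapshot`, and `fenceEvents` are hypothetical names invented for illustration, and activity readings are modeled as a plain list of strings. As a simplification, the sketch reports exactly one event per sample, which may not match the exact firing rules of the real fences.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of snapshot vs. fence behavior; not the Awareness API.
class FenceSketch {

    /** Snapshot: read the value at one moment in time. */
    static String snapshot(List<String> samples, int now) {
        return samples.get(now);
    }

    /** Fence: scan all samples and report starting/during/stopping events for target. */
    static List<String> fenceEvents(List<String> samples, String target) {
        List<String> events = new ArrayList<>();
        boolean inside = false; // were we in the target state at the previous sample?
        for (int t = 0; t < samples.size(); t++) {
            boolean matches = samples.get(t).equals(target);
            if (matches && !inside) {
                events.add("starting@" + t);   // condition just became true
            } else if (matches) {
                events.add("during@" + t);     // condition is still true
            } else if (inside) {
                events.add("stopping@" + t);   // condition just became false
            }
            inside = matches;
        }
        return events;
    }

    public static void main(String[] args) {
        List<String> samples = List.of("STILL", "WALKING", "WALKING", "STILL");
        System.out.println(snapshot(samples, 2));
        System.out.println(fenceEvents(samples, "WALKING"));
    }
}
```

The snapshot answers "what is happening right now?" once, while the fence keeps reporting as the condition changes.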
---
# Snapshots vs Fences for contact tracing

.left-column40[
<iframe src="https://embed.polleverywhere.com/multiple_choice_polls/mdDbVMvvYnDCcu3ugYWzh?controls=none&short_poll=true" width="800" height="600" frameBorder="0"></iframe>
]
--
.right-column30[
Answer: Fence

We want to be notified about *every* contact so we can record it
]
---
# Using Context-Awareness in Apps

.left-column[  ]
.right-column[
Capture and Access:
- .red[Food diarying] and nutritional awareness via receipt analysis [Ubicomp 2002]
- .bold.red[Audio Accessibility] for deaf people by supporting mobile sound transcription [Ubicomp 2006, CHI 2007]
- .red[Citizen Science] volunteer data collection in the field [CSCW 2013, CHI 2015]
- .red[Air quality assessment] and visualization [CHI 2013]
- .red[Coordinating between patients and doctors] via wearable sensing of in-home physical therapy [CHI 2014]
]
---
# What might we do with today's phones?

.left-column[  ]
.right-column[
## Adaptive Services (changing operation or timing)

- .red[Adaptive Text Prediction] for assistive communication devices [TOCHI 2005]
- .red[Location prediction] based on prior behavior [Ubicomp 2014]
- .bold.red[Pro-active task access] on lock screen based on predicted user interest [MobileHCI 2014]
]
---
# What might we do with today's phones?

.left-column[   ]
.right-column[
## Novel Interaction

- .red[Cord Input] for interacting with mobile devices [CHI 2010]
- .red[Smart Watch Intent to Interact] via twist'n'knock gesture [GI 2016]
- .red[VR Intent to Interact] via sensing body pose, gaze and gesture [CHI 2017]
- .red[Around Body interaction] through gestures with the phone [MobileHCI 2014]
- .red.bold[Around phone interaction] through gestures combining on and above phone surface [UIST 2014]
]
---
# What might we do with today's phones?

.left-column[   ]
.right-column[
![:youtube Interweaving touch and in-air gestures using in-air gestures to segment touch gestures, H5niZW6ZhTk]
]
---
# What might we do with today's phones?

.left-column[  ]
.right-column[
## Behavioral Imaging

- .red[Detecting and Generating Safe Driving Behavior] by using inverse reinforcement learning to create human routine models [CHI 2016, 2017]
- .red[Detecting Deviations in Family Routines] such as being late to pick up kids [CHI 2016]
]
---
# What might we do with today's phones?

.left-column[  ]
.right-column[
## General Solutions for Data Collection and Response

- .red[General solution for studying people in the wild] via mobile sensing and interaction [CHI 2007]
- .red[Minimizing user burden] for generating adaptive services via test-time feature ordering [Ubicomp 2016]
]
---
<iframe src="https://embed.polleverywhere.com/multiple_choice_polls/pgjVCzFsgUO4RT0yWQJLF?controls=none&short_poll=true" width="800" height="600" frameBorder="0"></iframe>
---
# Revisiting Assumption #1: We all have smart phones

.left-column[  ]
.right-column[
Does this seem like a smart phone?

No, it's just more input devices

You have to do all the work
]
--
.corner-ribbon.brtl[All Marketing]
---
background-image: url(img/context/phones-background.png)

# Dumb (Feature) Phones

We live in a time of dumb phones

Know almost nothing about me

Explicit preferences

Contacts

Running applications

Hardly knows when I’m mobile/fixed, charging/not charging

Doesn’t know me or what I’m doing

--
.corner-ribbon.purple.tlbr[WHY NOT SMARTER???]
---
# Research Goal: A real smartphone!

.left-column[  ]
.right-column[
Want to build a smart phone that

Collects and learns a model of human behavior with every interaction

From the moment the phone is purchased and turned on

Uses behavior information to improve interaction and the user experience

Do this opportunistically
- Your noise is my signal!
- Big Data of 1
]

???
How close are we to this?
- Amazing amounts of computation at hand
- Memory and storage
- Radios and communication
- Sensors
- Software
---
# Assumption #2: Proximity is standard

We assume that users have their phones with them and turned on 24/7

Which is great for things like health apps and behavior modeling
- Mobile phone is personal and travels with the user
- Proxy for user context
- Proxy for user’s environment context
- Proxy for user’s attention/display device
- Provide always-available service
---
# Assumption #2: Proximity is standard

## How much of the day is your phone on?

Average user: 78-81% [Dey, 2011]
- One-fifth of the time, phone is off
- Can’t sense anything
- Can’t show anything to the user
---
<iframe src="https://embed.polleverywhere.com/multiple_choice_polls/eB1wjPW8LGqgpi9X1mhQS?controls=none&short_poll=true" width="800" height="600" frameBorder="0"></iframe>
---
# Where is your phone right now?

.left-column-half[
Arm's Reach

Same Room

Further Off
]
.right-column-half[
]
---
# When your phone is on, where is it?

.left-column[    ]
.right-column[
- Within arm’s reach (53%)
- Within the same room (35%)
- Further away? (12%)
]
---
# Assumption #2: Proximity is standard

.left-column-half[
## Challenges for interpreting phone data:

***Can't use the phone as a proxy for the user***

It's off about 20% of the time

It's not in the same room about 12% of the time
]
--
.left-column-half[
***How do we implement contact tracing given this?***
]
---
# Assumption #2: Proximity is standard

.left-column-half[
## Challenges for interpreting phone data:

***Can't use the phone as a proxy for the user***

It's off about 20% of the time

It's not in the same room about 12% of the time

***How do we implement contact tracing given this?***
]
.right-column-half[
## May need complementary sensors

Smart watch

Fitbit

Room level sensing
]
---
.left-column[
## Assumption #3: Usage is Notification Driven
]
.right-column[
What do we know about how people use their mobile devices?
- “Always on the phone!”
- “Notifications are ruining my life!”
]
---
# Characterizing Usage: Glance

.left-column-half[  ]
.right-column-half[
![:youtube Glancing at a phone without engaging, 4pXLqDZCFwo]
]
.footnote[Based on 1 month of data from 10 participants]
---
# Characterizing Usage: Review

.left-column-half[  ]
.right-column-half[
![:youtube Reviewing on a phone, vsPkU8fHp-c]
]
.footnote[Based on 1 month of data from 10 participants]
---
# Characterizing Usage: Engage

.left-column-half[  ]
.right-column-half[
![:youtube Reviewing on a phone, NCwj3__BFxQ]
]
.footnote[Based on 1 month of data from 10 participants]
---
# Characterizing Usage

.left-column-half[  ]
.right-column-half[
95% of sessions shorter than 5 minutes; most < 60 secs

Notifications lead to engagement… only 25% of the time!

Self-interruption therefore more common than we would think

Notifications prevent unnecessary engages

No good support for reviews
]
---
.left-column[
## Opportunity: Leverage real knowledge about phone use
]
.right-column[
Engage people when appropriate

Avoid interrupting when not

Make short interactions more powerful
]
---
.left-column[  ]
.right-column[
## Example: pro-active tasks

Provide access to email management, etc. right on lock screen
]
---
.left-column[  ]
.right-column[
## Example: pro-active tasks

Provide access to email management, etc. right on lock screen

Study of phone use (10 users, 4 weeks)

95% of sessions shorter than 5 minutes; most < 60 secs

No good support for quick task completion (just viewing things)
]
---
.left-column[
## All users
]
.right-column[
## Results

25 participants
- 10 nonusers (4% or less of lockscreen views with a task per week)
- 9 regular users (5-10% of lockscreen views with a task per week)
- 5 power users (40-60% of lockscreen views!)
]

???
Regular users mostly used the tasks when they had some down time, or when bored or with nothing better to do.
Power users applied actions to email in more than 1/3 of sessions when tasks were present on their lock screen.
---
.left-column[
## Cleaners
]
.right-column[
## Results

25 participants
- 10 nonusers (4% or less of lockscreen views with a task per week)
- 9 regular users (5-10% of lockscreen views with a task per week)
- 5 power users (40-60% of lockscreen views!)
- 3 'cleaners'
]

???
We also found a special kind of power user called cleaners. They rarely had any unread emails in their inbox and used ProactiveTasks to keep their inbox clear of any unwanted emails.
---
.left-column[
## Assumption #4: Need is Necessary
]
.right-column[
.quote[When asked which device or platform they would not be able to live without, a majority (65%) chose iPhone, while only a few (1%) [...mentioned] facebook. Nearly 15% ... ]
]
---
.left-column[
## Assumption #4: Need is Necessary
]
.right-column[
.quote[When asked which device or platform they would not be able to live without, a majority (65%) chose iPhone, while only a few (1%) [...mentioned] facebook. Nearly 40% ... .red[said they'd rather give up their laptop than go for even a weekend without their iPhone] ]
]
---
.left-column[
## Assumption #4: Need is Necessary
]
.right-column[  ]
---
.left-column[
## Assumption #4: Need is Necessary

## Should we combat this? How?
]
.right-column[
[Hiniker](https://www.alexishiniker.com/) (works at UW):
- Can devices teach self-regulation, rather than trying to regulate children?
- Why do people compulsively check their phones? Can they change this?

[Burke](http://thoughtcrumbs.com/) (works at Facebook):
- [Watching silly cat videos is good for you](https://www.wsj.com/articles/why-watching-silly-cat-videos-is-good-for-you-1475602097)
- [Online social life good for your longevity](https://www.nytimes.com/2016/11/01/science/facebook-longer-life.html) ...
but [The Relationship Between Facebook Use and Well-Being Depends on Communication Type and Tie Strength](https://academic.oup.com/jcmc/article/21/4/265/4161784)
]
---
.left-column[
## Assumptions

- Assumption #1: Phones are smart
- Assumption #2: Proximity is standard
- Assumption #3: Usage is notification driven
- Assumption #4: Need is necessary
]
.right-column[
By removing assumptions, we can recast:
- the notion of what a smart phone is
- how we can use them to improve people’s lives
- how to make (personalized) meaning from (your) big data
]

???
---
# Do you think COVID-19 has challenged the validity of any of these assumptions?

.left-column[
## Assumptions

- Assumption #1: Phones are smart
- Assumption #2: Proximity is standard
- Assumption #3: Usage is notification driven
- Assumption #4: Need is necessary
]
.right-column[
<iframe src="https://embed.polleverywhere.com/multiple_choice_polls/Omnf3hdr8zaHvaGCTpemV?controls=none&short_poll=true" width="800" height="600" frameBorder="0"></iframe>
]

???
---
.title[Challenges to this vision]
.body[
- Battery
- Raw sensors not behavior data
- Not the sensors we always want
- Computational complexity
- Latency in communication
- Basic software framework to support apps that can adapt to user behavior
- Apps that drive innovation
- How people use phones
]
---
# End of Deck
---
---
# Places

Shows you what is nearby

```java
// In MainActivity:
setSnapshotListener(Awareness.getSnapshotClient(this).getPlaces(),
        new PlacesSnapshotListener(mUpdate, mResources));

// In PlacesSnapshotListener:
public void onSnapshot(PlacesResponse response) {
    List<PlaceLikelihood> placeLikelihood = response.getPlaceLikelihoods();
    if (placeLikelihood != null && !placeLikelihood.isEmpty()) {
        // Record each candidate place along with how likely it is
        for (PlaceLikelihood likelihood : placeLikelihood) {
            addPlace(likelihood.getPlace().getName().toString(), likelihood.getLikelihood());
        }
    }
    // String.valueOf avoids a NullPointerException when the list is null
    mUpdate.prependText(String.valueOf(placeLikelihood));
}
```
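---
# Places: picking the most likely place (sketch)

The handler above lists every candidate place. If an app only wants the best guess, one option is to take the entry with the highest likelihood. The sketch below is plain Java with no Android dependency: `PlaceGuess` and `PlacesSketch` are hypothetical stand-ins invented for illustration, not Awareness API types, and the place names and likelihoods are made-up sample values.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical (name, likelihood) pair; stands in for a real place result.
class PlaceGuess {
    final String name;
    final double likelihood;

    PlaceGuess(String name, double likelihood) {
        this.name = name;
        this.likelihood = likelihood;
    }
}

class PlacesSketch {

    /** Returns the highest-likelihood guess, or empty if the list is null or empty. */
    static Optional<PlaceGuess> mostLikely(List<PlaceGuess> guesses) {
        if (guesses == null || guesses.isEmpty()) {
            return Optional.empty();
        }
        return guesses.stream()
                .max(Comparator.comparingDouble((PlaceGuess g) -> g.likelihood));
    }

    public static void main(String[] args) {
        // Made-up sample data for illustration only
        List<PlaceGuess> guesses = List.of(
                new PlaceGuess("Cafe", 0.2),
                new PlaceGuess("Library", 0.7));
        mostLikely(guesses).ifPresent(g -> System.out.println(g.name));
    }
}
```

Returning an `Optional` makes the "no places found" case explicit, so the caller cannot forget to handle it.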