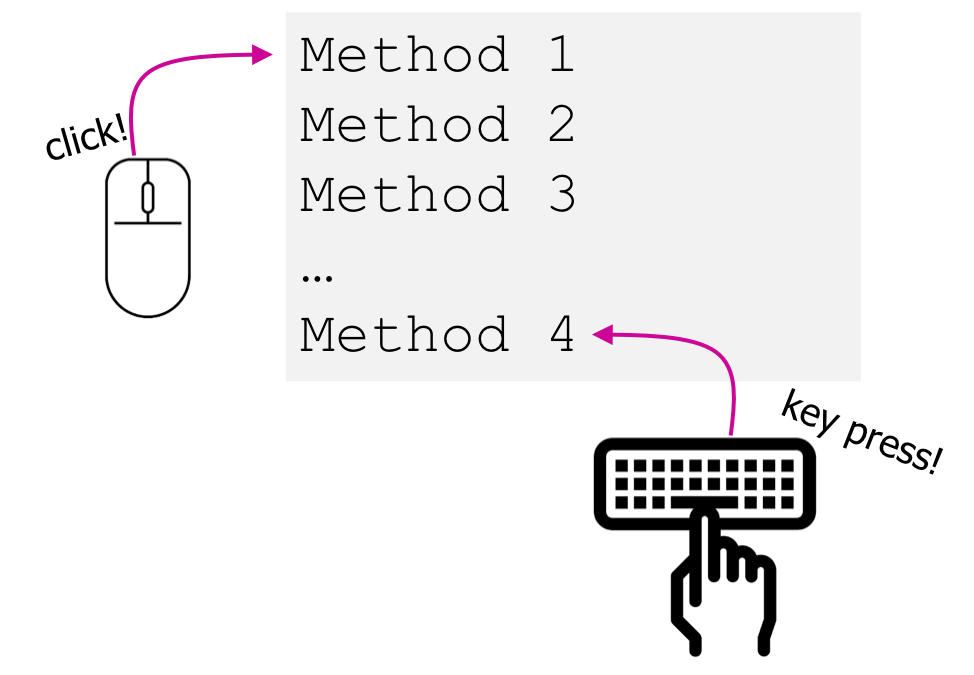

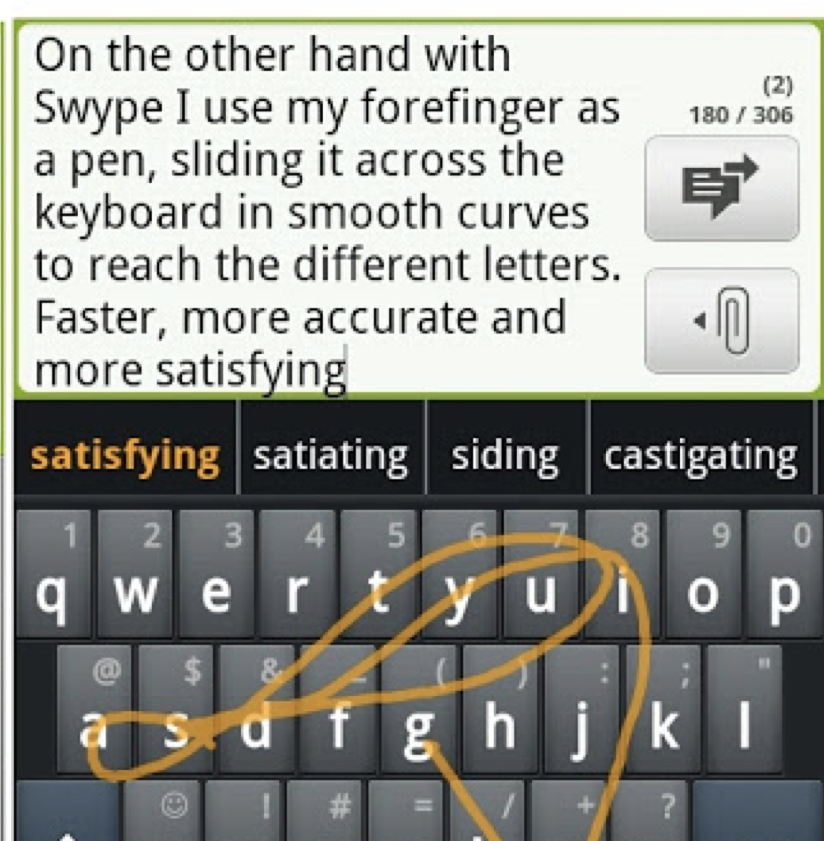

name: inverse
layout: true
class: center, middle, inverse
---
# Write down three things that you reacted to this week

What are some examples?

--

This is event-driven interaction

--

We will be studying the theory and practice of event-driven interactions in Android

---
# Event Handling I - Callbacks, Model View Controller, and Events

Lauren Bricker

CSE 340 Spring 2020

---
layout: false

# Administrivia

- Layout part 3-5
  - Due date TONIGHT at 10pm
  - Reflection is due with code.
  - Lock date (aka when GitGrade and Gradescope will lock) TOMORROW at 10pm
- Assessments
  - [Post Layout Quiz](https://canvas.uw.edu/courses/1370612/quizzes/1236290) is out, due Sunday.
  - Will likely change the format for the Layout Examlet.
- Accessibility is out
- Mid quarter evals are out

---
[//]: # (Outline Slide)
# Today's goals

- What is a callback?
- What is an event?
- What is a Model View Controller?
- How is this all related?

---
# Java 102: `Comparator<E>`

Recall that if you have an array of items that have a "natural sorting order", such as:

```java
String[] names = {"Emma", "Ncuti", "Tina", "Idris", "Awkwafina"};
```

We can sort those things using `Arrays.sort`:

```java
Arrays.sort(names);
System.out.println(Arrays.toString(names));
```

Which will output:

```
[Awkwafina, Emma, Idris, Ncuti, Tina]
```

---
# Java 102: `Comparator<E>`

What if you want to create a new class: [Person](person.zip)

```java
public class Person {
    private String mFirstName;
    private String mLastName;
    private int mAge;

    public Person(String firstName, String lastName, int age) {
        mFirstName = firstName;
        mLastName = lastName;
        mAge = age;
    }

    // Getters used by the comparators on the following slides
    public String getFirstName() { return mFirstName; }
    public String getLastName() { return mLastName; }
    public int getAge() { return mAge; }

    @Override
    public String toString() {
        return mFirstName + " " + mLastName + " - age " + mAge;
    }
}
```

---
# Java 102: `Comparator<E>`

If we had an array of these `Person` objects:

```java
Person[] people = {new Person("Emma", "Watson", 30),
                   new Person("Ncuti", "Gatwa", 27),
                   ... };
```

How would we sort that array using `Arrays.sort`?

--

```java
public class Person implements Comparable<Person> {
    ...
    public int compareTo(Person other) {
        // what would go in here?!?!?!?!?
    }
}
```

Should we choose based on the first name? Last name? Age?

---
# Java 102: `Comparator<E>`

There's another way to set up sorting: by passing `Arrays.sort` an object that implements [`Comparator<E>`](https://docs.oracle.com/en/java/javase/13/docs/api/java.base/java/util/Comparator.html).

A class that implements `Comparator<E>` must implement one method:

```java
public int compare(E object1, E object2); // Compares its two arguments for order.
```

`compare` returns

* a negative integer (often -1) if object1 is "less than" object2
* 0 if object1 and object2 are equivalent
* a positive integer (often 1) if object1 is "greater than" object2

---
# Java 102: `Comparator<E>`

Example:

```java
// this class is defined in PersonLastNameComparator.java
import java.util.Comparator;

public class PersonLastNameComparator implements Comparator<Person> {
    @Override
    public int compare(Person p1, Person p2) {
        return p1.getLastName().compareTo(p2.getLastName());
    }
}
```

Then we could do the following to sort the array by last name:

```java
Arrays.sort(people, new PersonLastNameComparator());
```

---
# Callbacks

The pattern used for Comparators is called a **callback**: we hand `Arrays.sort` an object, and `Arrays.sort` calls its `compare` method back whenever it needs to order two elements.

From [Wikipedia](https://en.wikipedia.org/wiki/Callback_(computer_programming)):

> A callback ... is any executable code that is passed as an argument to other code;
> that other code is expected to call back (execute) the argument at a given time.

---
# Classes, Inner classes, and Anonymous classes

There are three ways to implement callbacks in Java:

1. Creating a class in a separate file (like `PersonLastNameComparator`)
2. Creating an inner class (like `Person.FirstNameComparator`)
3. Creating an anonymous class (like the following)

```java
Arrays.sort(people, new Comparator<Person>() {
    @Override
    public int compare(Person p1, Person p2) {
        return p1.getAge() - p2.getAge();
    }
});
```

---
# Classes, Inner classes, and Anonymous classes

There are <del>three</del> four ways to implement callbacks in Java:

1. Creating a class in a separate file (like `PersonLastNameComparator`)
2. Creating an inner class (like `Person.FirstNameComparator`)
3. Creating an anonymous class (like the one on the previous slide)
4. Using a lambda

```java
Arrays.sort(people, (Person p1, Person p2) -> { return p1.getAge() - p2.getAge(); });
```

---
# Callback Exercise

Note: this is a breakout room exercise

- Please participate!
- TAs will help moderate the activity.

---
# Callback Exercise

1. Imagine we're designing a zoo. Each group is going to design a different zoo enclosure.
2. In your rooms:
   - Agree on and write down the name of your enclosure (what animal it contains).
   - Determine the type of animal in this enclosure.
   - Decide what sound will emanate from this enclosure when you get a "makeNoise for some number of seconds" request.
   - Decide who will turn their mic on in the main room to make the sound.
   - Decide who will type the name of your enclosure in the chat upon your return.
3. When you come back into the main room:
   - One person types the name of your enclosure in the chat.
4. Wait until the instructor calls your enclosure name, then make the sound for the requested number of seconds.

---
# Callback Exercise

As code, I've asked each enclosure to implement the following interface to define its behavior.
(Note: Listeners are a type of event-handling callback in Java/Android.)

```java
public interface EnclosureListener {
    public void makeNoise(int numSeconds);
}
```

For example:

```java
public class LionCage implements EnclosureListener {
    ...
    @Override
    public void makeNoise(int numSeconds) {
        // make the animal noise for the given number of seconds
    }
}
```

---
# Callback Exercise

Further, by telling me the name of your enclosure, you're registering your listener WITH me, the "zookeeper":

```java
public class LionCage implements EnclosureListener {
    ...
    @Override
    public void makeNoise(int numSeconds) {
        // make the animal noise for the given number of seconds
    }

    public void onCreate(Bundle savedInstanceState) {
        ...
        zookeeper.addEnclosureListener(this);
    }
}
```

---
# Callback Exercise

```java
import java.util.ArrayList;
import java.util.List;

public class Zookeeper {
    // The zookeeper keeps a list of all EnclosureListeners that were registered...
    private final List<EnclosureListener> mEnclosureListeners = new ArrayList<>();

    public final void addEnclosureListener(EnclosureListener listener) {
        if (listener == null) {
            throw new IllegalArgumentException("enclosureListener should never be null");
        }
        mEnclosureListeners.add(listener);
    }

    // ...and can call on them whenever it needs to, with the right number of seconds.
    public void cacophony(int seconds) {
        for (EnclosureListener listener : mEnclosureListeners) {
            listener.makeNoise(seconds);
        }
    }
}
```

---
# Callback Exercise

- The Zookeeper has NO idea what each enclosure will do until the listener is registered and called.
- Each enclosure separately defines how it will react to a `makeNoise` event.
- The "contract" between the zookeeper and the enclosures is the `EnclosureListener` interface.

---
# Callbacks in Java

- When the toolkit was created, its developers had *no* idea how every app would want to respond to events.
- The toolkit has predefined interfaces so apps or components can respond to events such as clicks or touches. For example:
  - The `View` class defines the following listeners as nested interfaces:
    - `View.OnClickListener`
    - `View.OnLongClickListener`
    - `View.OnFocusChangeListener`
    - `View.OnKeyListener`
    - `View.OnTouchListener` (this is used in ColorPicker and Menu by subclassing AppCompatImageView)
    - `View.OnCreateContextMenuListener`

We will come back to these in our next class.

---
name: inverse
layout: true
class: center, middle, inverse
---
# Model View Controller, Input Devices and Events

---
name: normal
layout: true
class:
---
# How do you think an app responds to user input?
.left-column30[
<div class="mermaid">
graph LR
ap[Application Program]
hlt[High Level Tools]
t[Toolkit]
w[Window System]
o[OS]
h[Hardware]
classDef yellow font-size:14pt,text-align:center
class ap,w,o,h,hlt,t yellow
</div>
]
.right-column60[
What happens at each level of the software/hardware stack?
]

---
# How do you think an app responds to user input?

.left-column30[
<div class="mermaid">
graph LR
ap[Application Program]
hlt[High Level Tools]
t[Toolkit]
w[Window System]
o[OS]
h[Hardware]
classDef yellow font-size:14pt,text-align:center
class ap,w,o,h,hlt,t yellow
</div>
]
.right-column60[
What happens at each level of the software/hardware stack?

- Hardware level: electronics to sense circuits closing or movement
  - Differences between hardware types (event-based vs. sampled)
  - Sensor-based input
- OS: "interrupts" that tell the window system something happened
  - Logical device abstraction and types of devices
- Window system: tells which window received the input
- Toolkit: defines how the app developer will use these events
  - Events as an abstraction
- High level tools: define standard components that react to events in a certain way
- Application program: can use standard components OR create new interactions
  - May define separate interaction techniques
]

---
# Responding to Users: Model View Controller (MVC)

.left-column30[
<br>
<div class="mermaid">
graph TD
View(View * ) --1-Input--> Presenter(Controller)
Presenter --5-Output --> View
Presenter --2-Updates-->Model(0,3-Model)
Model --4-State Change-->Presenter
classDef edgeLabel font-size:14pt
classDef blue font-size:14pt,text-align:center
classDef bluegreen font-size:14pt,text-align:center
class View,Presenter blue
class User,Model bluegreen
</div>
]
.right-column60[
Suppose this is a digital phone app

<sup>*</sup> User interacts through View(s) (the interactor hierarchy)<br>
<sup>0</sup> Model State: _Current person:_ Lauren; _Lock state:_ closed<br>
<sup>1</sup> Password entry.
Trigger _Event Handling_<br>
<sup>2</sup> Change state: App unlocked<br>
<sup>3</sup> Model State: _Current person:_ Lauren; _Lock state:_ open<br>
<sup>4</sup> Change state of View(s)<br>
<sup>5</sup> Trigger _Redraw_ and show
]

???
Sketch out key concepts
- Input -- we need to know when people are doing things. This needs to be event-driven.
- Output -- we need to show people feedback. This cannot ‘take over’, i.e., it needs to be multithreaded.
- Back end -- we need to be able to talk to the application.
- State machine -- we need to keep track of state.
- What don’t we need? We don’t need to know about the rest of the UI, probably, etc.
- Model View Controller -- this works within components (draw diagram), but also represents the overall structure (ideally) of a whole user interface
- NOTE: Be careful to write any new vocabulary words on the board and define them as they come up.

---
# Responding to Users: Model View Controller (MVC)

.left-column30[
<br>
<div class="mermaid">
graph TD
View(View * ) --1-Input--> Presenter(Controller)
Presenter --5-Output --> View
Presenter --2-Updates-->Model(0,3-Model)
Model --4-State Change-->Presenter
classDef edgeLabel font-size:14pt
classDef blue font-size:14pt,text-align:center
classDef bluegreen font-size:14pt,text-align:center
class View,Presenter blue
class User,Model bluegreen
</div>
]
.right-column60[
Suppose this is a fancy speech-recognition-based digital door lock instead

<sup>*</sup> User interacts through View(s) (the interactor hierarchy)<br>
<sup>0</sup> Model State: _Current person:_ Lauren; _Lock state:_ closed<br>
<sup>1</sup> Password entry. Trigger _Event Handling_<br>
<sup>2</sup> Change person to Lauren; App unlocked<br>
<sup>3</sup> Model State: _Current person:_ Lauren; _Lock state:_ open<br>
<sup>4</sup> Change state of View(s)<br>
<sup>5</sup> Trigger _Redraw_ and show
]

???
Sketch out key concepts
- Input -- we need to know when people are doing things. This needs to be event-driven.
- Output -- we need to show people feedback. This cannot ‘take over’, i.e., it needs to be multithreaded.
- Back end -- we need to be able to talk to the application.
- State machine -- we need to keep track of state.
- What don’t we need? We don’t need to know about the rest of the UI, probably, etc.
- Model View Controller -- this works within components (draw diagram), but also represents the overall structure (ideally) of a whole user interface
- NOTE: Be careful to write any new vocabulary words on the board and define them as they come up.

---
# Model View Controller (MVC)

From [Wikipedia]():

> MVC is a software design pattern commonly used for developing user interfaces which divides the related program logic into three interconnected elements.

- *Model* - a representation of the state of your application
- *View* - a visual representation presented to the user
- *Controller* - communicates between the model and the view
  - Handles changing the model based on user input
  - Retrieves information from the model to display on the view

--

MVC exists within each View as well as for the overall interface

---
# MVC in Android

.left-column30[
<br>
<div class="mermaid">
graph TD
View(Passive View) --user events--> Presenter(Presenter: <BR>Supervising Controller)
Presenter --updates view--> View
Presenter --updates model--> Model(Model)
Model --state-change events--> Presenter
classDef edgeLabel font-size:14pt
classDef blue font-size:14pt,text-align:center
classDef bluegreen font-size:14pt,text-align:center
class View,Presenter blue
class Model bluegreen
</div>
]
.right-column60[
Applications typically follow this architecture
- What did we learn about how to do this?
- What causes the screen to update?
- How are things laid out on screen?
]

???
- Relationship of MVC to Android software stack
- Measure and layout
- Discuss Whorfian effects

--

.right-column60[
Responding to Users: Event Handling
- When a user interacts with our apps, Android creates **events**
- As app developers, we react by "listening" for events and responding appropriately
]

---

|Procedural | Event Driven |
| :--: | :--: |
|Code is executed in sequential order | Code is executed based upon events|

---
# But what is an Event?

Generally, input is harder than output:
- More diversity, less uniformity
- More affected by human properties

---
# Where does an Event come from?

Consider the "location" of an event...

What is different about a joystick, a touch screen, and a mouse?

???
- Mouse was originally just a 1:1 mapping in 2 dimensions, i.e., absolute location; bounded
- Joystick is relative (maps movement into rate of change in location); unbounded
- Touch screen is absolute; bounded
- What about today's mouse? Lifting and moving?

--

- Mouse was originally just a 1:1 mapping in 2 dimensions, i.e., absolute location; bounded
- Joystick is relative (maps movement into rate of change in location); unbounded
- Touch screen is absolute; bounded

--

What about the modern mouse? Lifting and moving?

--

How about a Wii controller?

---
# Is this an input device?

.left-column-half[]

--

.right-column-half[No … it’s an interaction technique. Over 50 WPM!]

???
Who/what/where/etc.

Dimensionality – how many dimensions can a device sense?

Range – is a device bounded or unbounded?

Mapping – is a device absolute or relative?

--

.right-column-half[
Considerations:
- Dimensionality – how many dimensions can a device sense?
- Range – is a device bounded or unbounded?
- Mapping – is a device absolute or relative?
]

---
# Interaction techniques / Components make input devices effective

For example, consider text entry speeds:
- 60-80 WPM (keyboards; Twiddler)
- ~20 WPM (soft keyboards)
- ~50 WPM? Swype – but is it an input device?
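---
# Sketch: absolute vs. relative mapping

The *Where does an Event come from?* notes contrast absolute mappings (touch screen) with relative ones (joystick, modern mouse). Here is a minimal Java sketch of that difference, assuming a hypothetical cursor on a bounded 1080 x 1920 screen; `CursorMapping` and its methods are illustrative names only, not an Android or Java API.

```java
// Hypothetical sketch (not an Android API): one cursor driven by three device mappings.
public class CursorMapping {
    private final int width;   // screen bounds in pixels
    private final int height;
    private double x;          // current cursor position
    private double y;

    public CursorMapping(int width, int height) {
        this.width = width;
        this.height = height;
    }

    // Absolute, bounded (touch screen): the sensed value *is* the position.
    public void touchAt(double touchX, double touchY) {
        x = clamp(touchX, width);
        y = clamp(touchY, height);
    }

    // Relative, position control (modern mouse): the sensed value is a displacement.
    // Lifting and repositioning the mouse produces no displacement, so the cursor stays put.
    public void mouseMoved(double dx, double dy) {
        x = clamp(x + dx, width);
        y = clamp(y + dy, height);
    }

    // Relative, rate control (joystick): deflection in [-1, 1] sets a velocity,
    // so the cursor keeps moving for as long as the stick is held.
    public void joystick(double deflectX, double deflectY, double pixelsPerSecond, double seconds) {
        x = clamp(x + deflectX * pixelsPerSecond * seconds, width);
        y = clamp(y + deflectY * pixelsPerSecond * seconds, height);
    }

    // Keep the cursor on the (bounded) screen.
    private static double clamp(double v, int max) {
        return Math.max(0, Math.min(max, v));
    }

    public double getX() { return x; }
    public double getY() { return y; }

    public static void main(String[] args) {
        CursorMapping cursor = new CursorMapping(1080, 1920);
        cursor.touchAt(300, 400);
        System.out.println(cursor.getX() + ", " + cursor.getY()); // 300.0, 400.0
        cursor.mouseMoved(-50, 25);
        System.out.println(cursor.getX() + ", " + cursor.getY()); // 250.0, 425.0
        cursor.joystick(1.0, 0.0, 200, 0.5);
        System.out.println(cursor.getX() + ", " + cursor.getY()); // 350.0, 425.0
    }
}
```

Holding the stick for 0.5 s at 200 px/s moves the cursor 100 px; holding it longer would keep moving it until the clamp at the screen edge, which is why rate control feels unbounded even on a bounded display.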
---
# Modern hardware and software are starting to muddy the waters around this

???
Add OLEDs to keys -> reconfigurable label displays

---
# Higher level abstraction

Logical Device Approach:
- Valuator (slider) -> returns a scalar value
- Button -> returns an integer value
- Locator -> returns a position on a logical view surface
- Keyboard -> returns a character string
- Stroke -> returns a sequence of points

???
- Can obscure important differences -- hence use inheritance
- Discussion of mouse vs pen -- what are some differences?
- Helps us deal with a diversity of devices
- Make sure everyone understands types of events
- Make sure everyone has a basic concept of how one registers listeners

---
# Not really satisfactory...

Doesn't capture full device diversity

| Event based devices | | Sampled devices |
| -- | -- | -- |
| Time of input determined by user | | Time of input determined by program |
| Value changes only when activated | | Value is continuously changing |
| e.g.: button | | e.g.: mouse |

???
Capability differences
- Discussion of mouse vs pen - what are some differences?

---
# Contents of Event Record

Think about your real-world event again. What do we need to know?

**What**: Event Type

**Where**: Event Target

**When**: Timestamp

**Value**: Event-specific variable

**Context**: What was going on?

???
Discuss each with examples

---
# Contents of Event Record

What do we need to know about each UI event?

**What**: Event Type (mouse moved, key down, etc.)

**Where**: Event Target (the input component)

**When**: Timestamp (when did the event occur)

**Value**: Mouse coordinates; which key; etc.

**Context**: Modifiers (Ctrl, Shift, Alt, etc.); Number of clicks; etc.

???
Discuss each with examples

---
# Input Event Goals

Device Independence
- Want / need device independence
- Need a uniform and higher level abstraction for input

Component Independence
- Given a model for representing input, how do we get inputs delivered to the right component?

???
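---
# Sketch: an event record and sampled devices

The *Contents of Event Record* slides list five things each event carries, and the *Not really satisfactory...* table separates event-based devices from sampled ones. A minimal sketch tying these together, assuming plain Java rather than Android's real `MotionEvent`/`KeyEvent` classes; `InputEvent`, `SampledToEvents`, and the `"volumeSlider"` target are hypothetical names for illustration. A sampled device is read whenever the program chooses, and only a change in value becomes a new event.

```java
import java.util.ArrayList;
import java.util.EnumSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: plain Java, not Android's MotionEvent/KeyEvent API.
public class EventRecordSketch {

    // BUTTON_* would come from event-based devices; this sketch only produces VALUE_CHANGED.
    enum EventType { BUTTON_DOWN, BUTTON_UP, VALUE_CHANGED }

    enum Modifier { CTRL, SHIFT, ALT }

    // One record per event, holding the five pieces of information from the slides.
    static final class InputEvent {
        final EventType type;          // What: the kind of event
        final String target;           // Where: the component the event is for (an id here)
        final long timestampMillis;    // When: time the event occurred
        final double value;            // Value: event-specific data
        final Set<Modifier> modifiers; // Context: modifier keys held down, etc.

        InputEvent(EventType type, String target, long timestampMillis,
                   double value, Set<Modifier> modifiers) {
            this.type = type;
            this.target = target;
            this.timestampMillis = timestampMillis;
            this.value = value;
            this.modifiers = modifiers;
        }

        @Override
        public String toString() {
            return type + " on " + target + " at " + timestampMillis + "ms, value=" + value
                    + ", modifiers=" + modifiers;
        }
    }

    // Wraps a sampled device: the program decides when to read it, and a new
    // VALUE_CHANGED event is produced only when the value differs from the last sample.
    static final class SampledToEvents {
        private final String target;
        private boolean hasSample = false;
        private double lastValue;

        SampledToEvents(String target) {
            this.target = target;
        }

        List<InputEvent> onSample(double value, long timestampMillis) {
            List<InputEvent> events = new ArrayList<>();
            if (!hasSample || value != lastValue) {
                events.add(new InputEvent(EventType.VALUE_CHANGED, target, timestampMillis,
                        value, EnumSet.noneOf(Modifier.class)));
                hasSample = true;
                lastValue = value;
            }
            return events;
        }
    }

    public static void main(String[] args) {
        SampledToEvents slider = new SampledToEvents("volumeSlider");
        double[] samples = {0.5, 0.5, 0.7, 0.7, 0.2};
        List<InputEvent> events = new ArrayList<>();
        for (int i = 0; i < samples.length; i++) {
            // Pretend the program polls the device about every 16 ms.
            events.addAll(slider.onSample(samples[i], 1000L + 16L * i));
        }
        // Five samples, but only three changes (0.5, 0.7, 0.2), so three events print.
        events.forEach(System.out::println);
    }
}
```

Downstream code then only ever sees `InputEvent`s, whether they came from a button or a polled slider, which is one way to pursue the device-independence goal above.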
---
# Summary

- Callbacks: a programmatic way for the system to call into our program, passing information to our code and getting results back

--

- MVC: Separation of concerns for user interaction

--

- Events: logical input device abstraction

--

- We model everything as events
  - Sampled devices
    - Handled as "incremental change" events
    - Each measurable change: a new event with a new value
  - Device differences
    - Handled implicitly by only generating the events a device can generate
  - Recognition-based input?
    - Yes, we can generate events for this too

---
# End of Deck