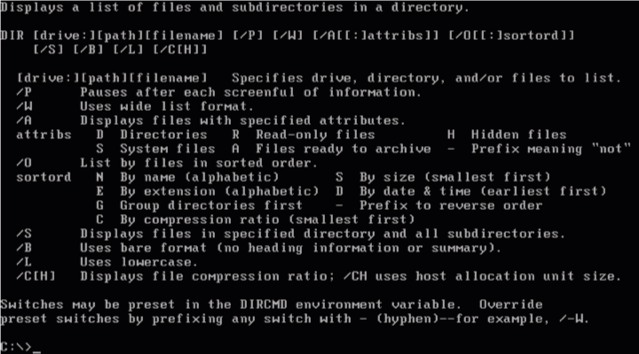

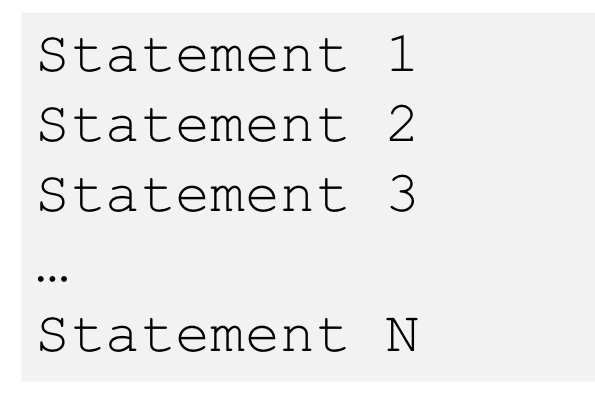

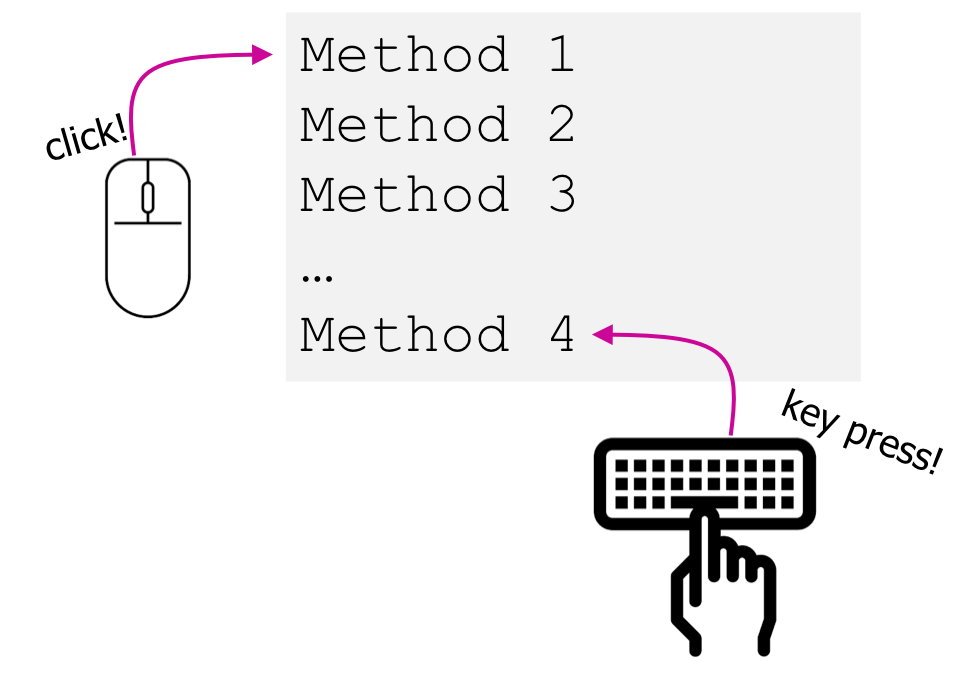

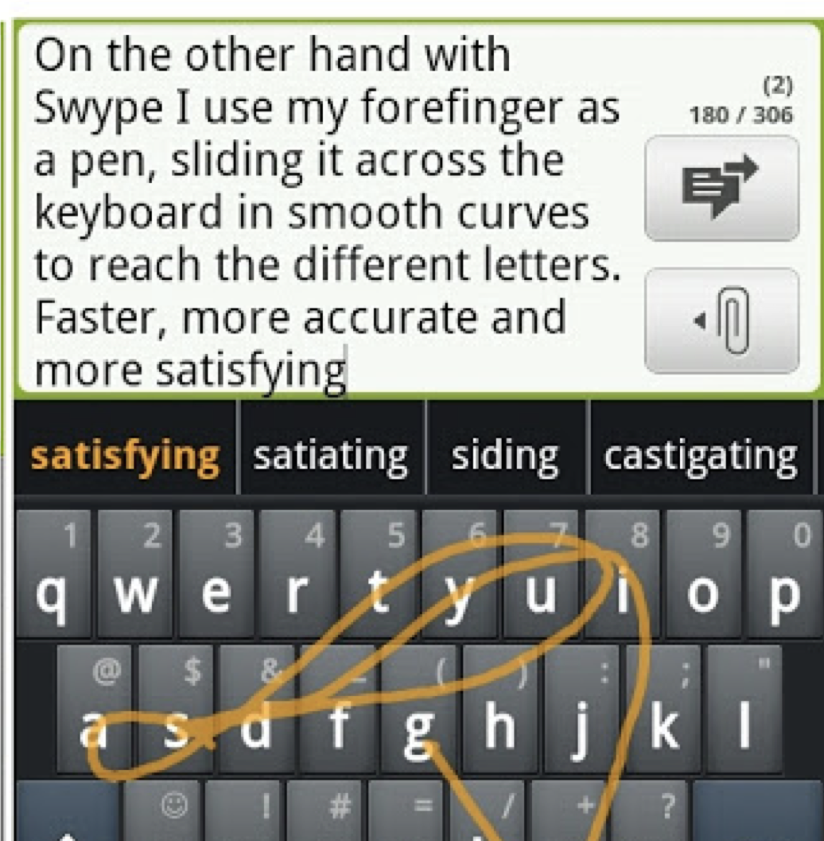

name: inverse
layout: true
class: center, middle, inverse
---
# Event Types and Event Dispatch

Jennifer Mankoff

CSE 340 Spring 2019
---
name: normal
layout: true
class:
---
| Command Line | Windows |
|---|---|
|||

???
- Command Line (Unix shell, DOS prompt)
  - Interaction driven by system
  - User prompted for input when needed
  - Text-based input and output
- Event-Driven Interfaces (GUIs)
  - Interaction driven by user
  - UI constantly waiting for input events
  - Pointing & text input, graphical output
---
[//]: # (Outline Slide)
.left-column[
# Today's goals
]
.right-column[
Discuss how input is handled
- Difference between hardware and interaction techniques / Components
- Logical device abstraction and types of devices
- Sensor based input
- Event vs sampled devices
- Events as an abstraction

Discuss how Android models input
- Event hierarchy
- Input dispatch process
- Listeners
- Picking, capture and bubble
]
---
|Procedural | Event Driven |
|---|---|
|Code is executed in sequential order | Code is executed based upon events|
||<br><br><br><br>|
---
.title[How does the Toolkit Architecture accomplish this?]
.body[
Application’s UI Thread (This is the main thread of your program)

```
while(true){
    // Gets event from the application message queue.
    // If the queue is empty, we just wait here.
    Event e = GetEventFromMessageQueue();

    // Dispatches the event to the appropriate application window
    // (the destination address is in the event data).
    DispatchEventToMessageDestination(e);
}
```
]
--
.body[
Don’t worry: this loop is set up and managed automatically by the UI framework you are using (e.g., .NET, WPF, Android, Swift). You don’t need to write it yourself, but you should know it’s there and how it functions!
]
---
.left-column[
# But what is an Event? Where does it come from?
]
.right-column[
Generally, input is harder than output
- More diversity, less uniformity
- More affected by human properties
]
---
.left-column[
# Is this an input device?
]
.right-column[

]
--
.right-column[
No … it’s an interaction technique. Over 50 WPM!
]

???
Who/what/where/etc

Dimensionality – how many dimensions can a device sense?

Range – is a device bounded or unbounded?

Mapping – is a device absolute or relative?
---
.left-column[
# Interaction techniques / Components make input devices effective
]
.right-column[
For example, consider text entry speeds (WPM):
- 60-80 (keyboards; twiddler)
- ~20 (soft keyboards)
- ~50? Swype – but is it an input device?
]
---
.left-column[
# Modern hardware and software are starting to muddy the waters around this
]

???
Add OLEDs to keys -> reconfigurable label displays
---
.left-column[
# Can never ignore hardware completely
]
.right-column[
Consider Location

What is different about a joystick, a touch screen, and a mouse?
]

???
Mouse was originally just a 1:1 mapping in 2 dimensions == absolute location; bounded

Joystick is relative (maps movement into rate of change in location); unbounded

Touch screen is absolute; bounded

What about today's mouse? Lifting?
---
.left-column[
# Is there a higher level abstraction here?
]
--
.right-column[
Logical Device Approach:
- Valuator -> returns a scalar value
- Button -> returns integer value
- Locator -> returns position on a logical view surface
- Keyboard -> returns character string
- Stroke -> obtain sequence of points
]

???
- Can obscure important differences -- hence use inheritance
- Discussion of mouse vs pen -- what are some differences?
- Helps us deal with a diversity of devices
- Make sure everyone understands types of events
- Make sure everyone has a basic concept of how one registers listeners
---
.left-column[
# Not really satisfactory...
]
.right-column[
Doesn't capture full device diversity

Event based devices
- Time of input determined by user
- Value changes only when activated
- e.g. button

Sampled devices
- Time of input determined by program
- Value is continuously changing
- e.g. mouse
]

???
Capability differences
- Discussion of mouse vs pen - what are some differences?
---
.left-column[
# Contents of Event Record
]
.right-column[
What do we need to know about each event?
]
--
.right-column[
**What**: Event Type (mouse moved, key down, etc)

**Where**: Event Target (the input component)

**When**: Timestamp (when did event occur)

**Value**: Mouse coordinates; which key; etc.

**Context**: Modifiers (Ctrl, Shift, Alt, etc); Number of clicks; etc.
]

???
Discuss each with examples
---
.left-column[
# Responding to Users
]
.right-column[
When a user interacts with our apps, Android creates **events**
]
--
.right-column[
As app developers, we react by "listening" for events and responding appropriately
]
---
# Demo
---
.left-column[## Android Events]
.right-column[
Each kind of event has its own class but they all implement the event interface
- A little hard to find all the parts defined in one place
- Harder to deal with uniformly
- But easily extensible for new event types

.red[Artificial] events are a thing (e.g. window events)
]
---
.title[Android [MotionEvent](https://developer.android.com/reference/android/view/MotionEvent)]
.body[
```java
java.lang.Object
   ↳ android.view.InputEvent
      ↳ android.view.MotionEvent

public final class MotionEvent extends InputEvent implements Parcelable {
    int getAction()                         // up, down etc
    int getActionIndex()                    // multi touch support -- which pointer
    float getX()                            // position
    float getY()                            // position
    int getButtonState()                    // pressed or not, for example
    long getDownTime()
    float getOrientation(int pointerIndex)
    float getPressure()
    float getSize()                         // fingers aren't pixel sized
    // and many more...
}
```
]
---
.left-column[
# Aside: Multiple Hierarchies discussed so far in class
]
.right-column[
Can you think of them?
]

???
- Inheritance hierarchy for interactors
- Inheritance hierarchy for layout
- Inheritance hierarchy for events
- Component hierarchy
---
.left-column[
# Input __Dispatch__ Process
]
.right-column[
Input thread:
- When a user interacts, __events__ are created
- Events go into a queue
]
---
.left-column[
# Input __Dispatch__ Process
]
.right-column[
Input Thread

Dispatch thread:
- Front event comes off queue
- How does a toolkit decide where to send events?
]
---
.left-column[
# How do we decide where to send events?]
.right-column[
Input Thread

Dispatch thread:
- Worked example of touch input

```java
@Override
public boolean onTouchEvent(MotionEvent event) {
    ...
}
```
]

???
(focus) Discuss Quiz on Input Events

(positional) Determine proper component
---
.left-column[
# Input __Dispatch__ Process
]
.right-column[
Input Thread

Dispatch thread

Components:
- Components have to listen for events
- How do components respond?
]

???
- remind them that component/view/interactor are interchangeable in this class
- Update application state if appropriate
- Request repaint if needed
---
# Input Process - Picking

<div class="mermaid">
graph TD
V0((V0)) --> V1((V1*))
V0 --> V4((V4*))
V1 --> V2((V2))
V1 --> V3((V3*))
V4 --> V5((V5))
V4 --> V6((V6*))

classDef bluegreen fill: #d1e0e0,stroke:#333,stroke-width:2px;
classDef blue fill:#e6f3ff,stroke:#333,stroke-width:2px;
classDef green fill:#dbf0db,stroke:#333,stroke-width:4px;

class V0,V2,V5 blue
class V1,V4,V3,V6 bluegreen
</div>

.footnote[*: denotes the element responds to the event]

--
- What `View` objects does dispatch "pick"?
--
- Picked Views = { `V1`, `V3`, `V4`, `V6`}
--
- Order matters!
---
# Input Example - Capture
- Picked Views = { `V1`, `V3`, `V4`, `V6`}
- What happens next?
--
- Dispatch starts at the front of the picked `View` object list
--
- In order, dispatch asks: will you consume this event?
--
- If `true`: the event is consumed and the event propagation __stops__
- If `false`: Move to the next element in the `View` list
--
- What happens when we reach the last `View` in the list and no one has consumed the event?
---
# Input Example - Bubbling
- Picked Views = { `V1`, `V3`, `V4`, `V6`}
--
- Android backtracks through the list, asking each `View` if it wants to consume the event
--
- Who gets asked first?
---
# Input Example - Recap
.three-quarters-width[
|State            | Ordering |    |    |    |
|:----------------|:---------|:---|:---|:---|
|*Picking*        | V1       | V3 | V4 | V6 |
|*Capture Order*  | 1        | 2  | 3  | 4  |
|*Bubbling Order* | 4        | 3  | 2  | 1  |
]
---
.left-column[
# How does *Android* decide where to send events?]
.right-column[
- **capture** (most things don’t): top to bottom, deliver to target object (bottom) (example: `onInterceptTouchEvent()` in Android)
- **pick** to identify objects of interest (or just focus()) (`buildTouchDispatchChildList()` in Android; happens only after capture!)
- **bubble** (bottom to top) (example: `onTouchEvent()` in Android)
- Invoke callback (wait until complete) (we do this by creating a custom listener in Android)
]

.footnote[fascinating to look at [implementation in ViewGroup](https://android.googlesource.com/platform/frameworks/base/+/master/core/java/android/view/ViewGroup.java) -- not very general!]
---
.title[Creating a new type of callback]
.body[
```java
protected MyView.MyListener mListener;

public void setMyListener(MyListener mListener) {
    this.mListener = mListener;
}

// Creates a new named inner interface for listening to interesting events
public interface MyListener {
    void onInterestingEvent(); // can include a parameter
}

// somewhere else in your code, when callback time happens
public void someMethod() {
    // Guard against the case where no listener has been registered yet
    if (mListener != null) {
        mListener.onInterestingEvent();
    }
}
```
]
---
# What is focus useful for?
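---
.title[Sketch: capture, then bubble]
.body[
The capture and bubble passes from the "Input Example" slides can be sketched in plain Java. This is a minimal sketch under stated assumptions, not how Android's `ViewGroup` is implemented: `DispatchSketch`, `Node`, `dispatch`, and `pickedFromSlides` are hypothetical names, and each node's answer to "will you consume this event?" is reduced to a boolean flag.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: none of these types are Android classes.
final class DispatchSketch {

    /** Stand-in for a picked View; the flags model "will you consume this event?". */
    static final class Node {
        final String name;
        final boolean consumesOnCapture;
        final boolean consumesOnBubble;

        Node(String name, boolean consumesOnCapture, boolean consumesOnBubble) {
            this.name = name;
            this.consumesOnCapture = consumesOnCapture;
            this.consumesOnBubble = consumesOnBubble;
        }
    }

    /**
     * Walks the picked list front to back (capture), then back to front (bubble).
     * Returns the name of the node that consumed the event, or null if none did.
     * Every node that gets asked is recorded in trace.
     */
    static String dispatch(List<Node> picked, List<String> trace) {
        for (Node n : picked) {
            trace.add("capture:" + n.name);
            if (n.consumesOnCapture) {
                return n.name; // consumed: propagation stops
            }
        }
        for (int i = picked.size() - 1; i >= 0; i--) {
            Node n = picked.get(i);
            trace.add("bubble:" + n.name);
            if (n.consumesOnBubble) {
                return n.name; // consumed: propagation stops
            }
        }
        return null;
    }

    /** The picked list from the slides; only bubbleConsumer consumes, and only while bubbling. */
    static List<Node> pickedFromSlides(String bubbleConsumer) {
        List<Node> picked = new ArrayList<>();
        for (String name : Arrays.asList("V1", "V3", "V4", "V6")) {
            picked.add(new Node(name, false, name.equals(bubbleConsumer)));
        }
        return picked;
    }

    public static void main(String[] args) {
        List<String> trace = new ArrayList<>();
        String consumer = dispatch(pickedFromSlides("V4"), trace);
        System.out.println(trace + " -> consumed by " + consumer);
    }
}
```

With `V4` as the only consumer, the trace asks `V1`, `V3`, `V4`, `V6` during capture, then `V6` and `V4` during bubbling, matching the orderings in the recap table.
]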
---
.title[Summary]
.body[
- Events: logical input device abstraction
- Event driven code: very different from procedural
- Input dispatching process (toolkit architecture)
  - Picking (alternative: Focus)
  - Capture
  - Bubble
- Listeners are notified about events
- Application callbacks can be implemented as custom listeners
]
---
# End of Deck
---
# Implementing Drag and Drop

Focus? Or Positional?

???
[Android's tutorial is positional](https://developer.android.com/training/gestures/scale#java)
---
# How would you handle input in a circular component?

???
Consumption is based on the bounding box...
---
# Code shown in class
```java
import android.content.Context;
import android.graphics.Canvas;
import android.graphics.Color;
import android.graphics.Paint;
import android.util.AttributeSet;
import android.util.Log;
import android.view.MotionEvent;
import android.widget.ImageView;

public class Counter extends ImageView {
    private int count = 0; // model
    private final Paint paint = new Paint(); // created once, reused on every draw

    public Counter(Context context, AttributeSet attrs) {
        super(context, attrs);
        paint.setColor(Color.WHITE);
        paint.setTextSize(60);
    }

    @Override
    protected void onDraw(Canvas canvas) {
        super.onDraw(canvas);
        canvas.drawText("Click count: " + count, 100, 100, paint);
    }

    @Override
    public boolean onTouchEvent(MotionEvent event) {
        if (event.getAction() == MotionEvent.ACTION_DOWN) {
            Log.i("debug", "onTouch: down");
            count++;      // update the model
            invalidate(); // request a repaint
            return true;  // event consumed
        }
        return false;
    }
}
```
---
# XML shown in class
```xml
<com.example.myapplicationeventdemo.Counter
    android:layout_width="358dp"
    android:layout_height="231dp"
    android:background="@android:color/black"
    android:text="Hello World!"
    app:layout_constraintBottom_toBottomOf="parent"
    app:layout_constraintLeft_toLeftOf="parent"
    app:layout_constraintRight_toRightOf="parent"
    app:layout_constraintTop_toTopOf="parent" />
```
---
.left-column[
# We model everything as events
]
.right-column[
Sampled devices
- Handled as “incremental change” events
- Each measurable change: a new event with new value

Device differences
- Handled implicitly: each device only generates the events it is able to produce
]
---
.title[MVC for a button]
.body[
- Diagram it with respect to reading
- Model
- View
- Controller
]
---
.title[Interactor (Component) Model]
.body[
__Encapsulate interactive components__ (interactors)
- Component library (button, slider, container)
- Interface built as a hierarchy of components

__Components drawn by underlying graphics library__
- Input event generation and dispatch
- Historically mouse & keyboard, now touch, ...

__Bounds management & damage/redraw__
- Model geometry, redraw updated regions only
]
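---
.title[Sketch: a sampled device as "incremental change" events]
.body[
The "We model everything as events" slide says each measurable change in a sampled device becomes a new event carrying the new value. One way that could look is sketched below. Everything here is an assumption made for illustration: `SampledToEvents`, `ChangeEvent`, `toEvents`, and the `threshold` that defines "measurable" are hypothetical names, not part of Android or any toolkit.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: turns polled samples into change events.
final class SampledToEvents {

    /** Minimal event record: when the change was seen, and the new value. */
    static final class ChangeEvent {
        final long timestampMs;
        final float value;

        ChangeEvent(long timestampMs, float value) {
            this.timestampMs = timestampMs;
            this.value = value;
        }

        @Override
        public String toString() {
            return "ChangeEvent(t=" + timestampMs + "ms, value=" + value + ")";
        }
    }

    /**
     * Emits one event each time a sample differs from the last reported value
     * by at least threshold. The first sample only sets the baseline.
     */
    static List<ChangeEvent> toEvents(float[] samples, long[] timesMs, float threshold) {
        if (samples.length != timesMs.length) {
            throw new IllegalArgumentException("samples and timesMs must have equal length");
        }
        List<ChangeEvent> events = new ArrayList<>();
        if (samples.length == 0) {
            return events;
        }
        float lastReported = samples[0];
        for (int i = 1; i < samples.length; i++) {
            if (Math.abs(samples[i] - lastReported) >= threshold) {
                events.add(new ChangeEvent(timesMs[i], samples[i]));
                lastReported = samples[i];
            }
        }
        return events;
    }

    public static void main(String[] args) {
        float[] samples = {0f, 0f, 0.5f, 3f, 3f, 5f};
        long[] timesMs = {0, 10, 20, 30, 40, 50};
        for (ChangeEvent e : toEvents(samples, timesMs, 1f)) {
            System.out.println(e);
        }
    }
}
```

In this run only the samples at 30 ms and 50 ms differ enough from the last reported value, so six polled samples collapse into two events.
]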

layout: true