Homework 2

Due: Tuesday, Oct 29th, 2024 by 10:00 pm

Goals

In this assignment you will use the LinkedList and HashTable modules that you built in Homework 1 in order to finish our implementation of a file system crawler, indexer, and search engine:

- In Part A, you will build a module that reads the content of a file into memory, parses it into a series of words, and builds a linked list of (word, list of positions) information.

- In Part B, you will build modules that convert a series of these linked lists into an in-memory, inverted index.

- In Part C, you will use this in-memory, inverted index to build a query processor that has a console-based interface.

As before, please read through this entire document before beginning the assignment, and please start early! There is a fair amount of coding you need to do to complete this assignment, and it will definitely expose any conceptual weaknesses you have with the prior material on C, pointers, malloc/free, and the semantics of the LinkedList and HashTable implementations.

In-Memory File System Search Engine

Part A: File Parser

You're going to write a module that reads the contents of a text file into memory and then parses the text file to look for words within it. As it finds words, it will build up a HashTable that contains one record for each word. Each record will contain a lowercase copy of the word, and also a sorted linked list. Each record of the linked list contains an offset within the file that the word appeared in (the first character in the file has offset zero).

Our word parser won't be very smart. It will treat as a word any non-zero sequence of alphabetic characters separated by non-alphabetic characters.

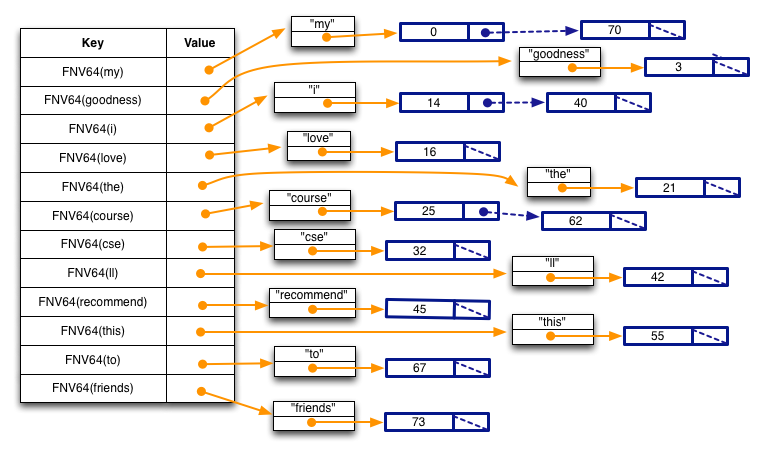

So, graphically, what your module will do is take a text file that contains something like this:

My goodness! I love the course CSE333.\n I'll recommend this course to my friends.\n

(where '\n' represents the newline control character that appears at the end of each line in the input file) and produces a data structure that looks like this:

Specifically, note a few things:

- Each key in the hash table is the result of calling the

hashtable module's

FNVHash64()function, passing the string as the first argument, and thestrlen(string)as the second argument. - Each element in the hash table is a pointer to a heap-allocated structure that contains two fields; a string and a linked list. Note the string is lower-cased, and that our parser is not very smart: because it treats any sequence of alphabetic characters surrounded by non-alphabetic characters as words, the word I'll will be misparsed as the two words i and ll.

- Each element in the linked list is an integer representing the position in the text file at which the word starts; this is both its byte offset and, since we are only handling ASCII files, the number of characters from the start of the file (each ASCII character is exactly 1 byte). So, the word "my" starts at offset 0 in the text file, the word "i" appears twice, once at offset 14 and once at offset 40, and the word "course" appears twice, once at offset 25 and once at offset 62.

- Each list is sorted in ascending order.

Instructions

You should follow these steps:

- Navigate to the directory containing

your cse333 GitLab repository.

Enter

git pullto retrieve new folders containing the starter code for hw2 and a directory containing test data for the remaining parts of the project. Check that you have everything.

bash$ git pull ...git output... bash$ ls cpplint.py exercises gtest hw0 hw1 hw2 projdocs

(Note that you still need the

hw1directory; hw2 won't build properly without it. It's ok if you've deleted your hw0 directory.) - Take a look inside

hw2, and note a few things:- There is a subdirectory called

libhw1/. It has symbolic links to header files and yourlibhw1.afrom../hw1. Therefore you should make sure you have compiled everything in../hw1while working on hw2.) - If you don't think your HW1 solution is up to the job, you

can use ours instead. Our

libhw1.ais in../hw1/solution_binaries; just copy it over your../hw1/libhw1.a. - Just like with HW1, there is a

Makefilethat compiles the project, a series of files (i.e.,test_*) that contain our unit tests, and some files (DocTable.c,DocTable.h,CrawlFileTree.c,CrawlFileTree.h,FileParser.c,FileParser.h,MemIndex.c,MemIndex.h,searchshell.c) that contain our partial implementation of the project. - Type

maketo compile the project, and try running the test suite by running./test_suite. It should fail (and might not even terminate), since most of the implementation is missing!

- There is a subdirectory called

- Also note there is a new directory called

projdocsthat contains a bunch of text files and subdirectories containing more text files. Explore itstest_treesubdirectory and its contents; start with theprojdocs/test_tree/README.TXTfile. - Your job in Part A is to finish the implementation of

FileParser.c. Start by reading throughFileParser.hand make sure you understand the semantics of the functions. Also, look at theWordPositionsstructure defined infileparser.hand compare it to the figure above. The functionParseIntoWordPositionsTable()builds a HashTable that looks like the figure, and each value in the HashTable contains a heap-allocatedWordPositionsstructure. - Similar to HW1, look through

FileParser.cto get a sense of its layout, and look for all occurrences of STEP X (e.g., STEP 1, STEP 2, ...) for where you need to add code. Be sure to read the full file before adding any code, so you can see the full structure of what we want you to do. Once you're finished adding code, run the test suite and you should see some tests start to succeed! - As before, in the subdirectory

solution_binaries/we've provided you with linux executables (i.e.,test_suiteandsearchshell) that were compiled with our complete, working version of HW2. You can run them to see what should happen when your HW2 is working. - Also, as before, you must implement all components as specified

and you may not modify any header files. You are, of course,

free to add additional private (

static) functions when that makes sense.

Part B: File Crawler and Indexer

At a high-level, a search engine has three major components: a crawler, an indexer, and a query processor. A crawler explores the world, looking for documents to index. The indexer takes a set of documents found by the crawler, and produces something called an inverted index out of them. A query processor asks a user for a query, and processes it using the inverted index to figure out a list of documents that match the query.

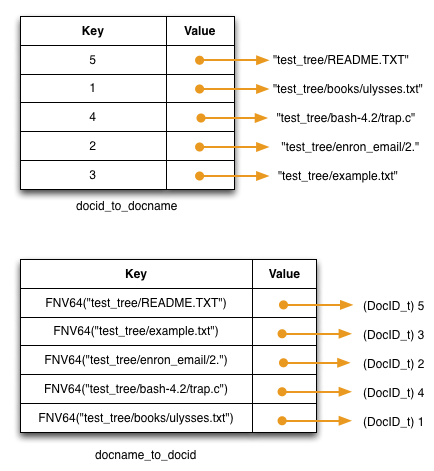

File system crawler: Your file system crawler will be provided with the name of a directory in which it should start crawling. Its job is to look through the directory for documents (text files) and to hand them to the indexer, and to look for subdirectories; it recursively descends into each subdirectory to look for more documents and sub-sub-directories. For each document it encounters, it will assign the document a unique "document ID", or "docID". A docID is just a 64-bit unsigned integer.

Your crawler itself will build two hash tables in memory, adding to them each time it discovers a new text file. The two hash tables map from docID to document filename, and from document filename to docID:

For each document the crawler finds, it will make use of

your part A code to produce a word hashtable using

ParseIntoWordPositionsTable().

Indexer: This is the heart of the search engine. The job

of the indexer is to take each word hashtable produced by

ParseIntoWordPositionsTable(), and fold its contents

in to an inverted index. An

inverted index is easy to understand; it's just a hash table that

maps from a word to a "posting list," and a posting list is just

a list of places that word has been found.

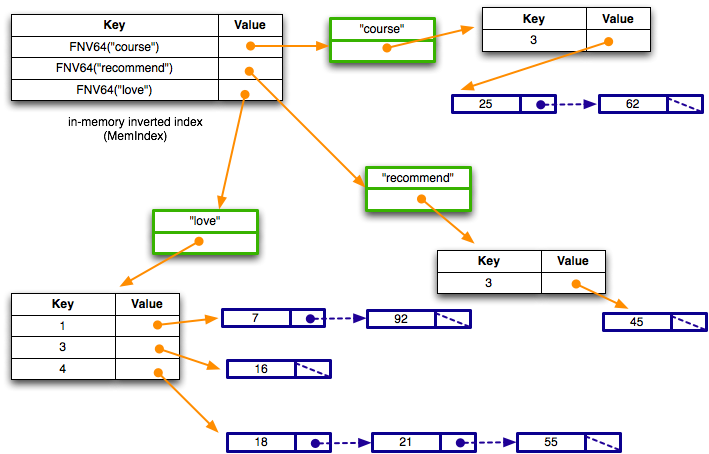

Specifically, the indexer should produce an in-memory hash table that looks roughly like this:

Walking through it, the inverted index is a hash table that maps from a (hash of a) word to a structure. The structure (shown in green) contains the word as a string, and also a HashTable. The HashTable maps from the docID (not the hash of docID) to a LinkedList. The LinkedList contains each position that word appeared in that docID.

So, based on the figure, you can see that the word "course" appeared in a single document with docID 3, at byte offsets 25 and 62 from the start of file. Similarly, the word "love" appears in three documents: docID 1 at positions 7 and 92, docID 3 at position 16, and docID 4 at positions 18, 21, and 55.

Instructions

The bulk of the work in this homework is in this step. We'll tackle it in parts.

- Take a look at

DocTable.h; this is the public interface to the module that builds up the docID-to-docname HashTable and the docname-to-docID HashTable. Make sure you understand the semantics of everything in that header file; note how we can now implement procedural-style class composition! We create a single DocTable structure contains both of these tables, so when you implementDocTable_Allocate(), you'll end up malloc'ing a structure that contains two HashTables, and you'll allocate each of those HashTables. - Take a look inside

DocTable.c; this is our partially completed implementation. Be sure to read the full file. Your job, as always, is to look for the "STEP X" comments and finish our implementation. Once you've finished the implementation, re-compile and re-run thetest_suiteexecutable to see if you pass our tests. If not, go back and fix some bugs! - Now, take a look inside

CrawlFileTree.h; this is the public interface to our file crawler module. Make sure you understand the semantics of everything in that header file. Next, read through the fullCrawlFileTree.cand then complete our implementation. Once you're ready, re-compile and re-run thetest_suiteexecutable to see if you pass more tests. If not, go back and fix some bugs! - Finally, take a look inside

MemIndex.h. This is the public interface to the module that builds up the in-memory inverted index. Make sure you understand the semantics of everything in that header file and note how we implement procedural-style inheritance! Next, read the fullMemIndex.c, and then complete our implementation. (This is the most involved part of the assignment.) Once you're ready, re-compile and re-run the test_suite executable to see if you our tests. If not, go back and fix some bugs!Once you've passed all of the tests, re-rerun the test suites under

valgrindand make sure you don't have any memory leaks.Congrats, you've passed part B of the assignment!

Part C: Query Processor

Now that you have a working inverted index, you're ready to build your search engine. The job of a search engine is to receive queries from a user, and return a list of matching documents in some rank order.

For us, a query is just a string of words, such as:

course friends myThe goal of our search engine is to find all of the documents that contain all of the words. So, if a document has the words "course" and "my" but not the word "friends," it shouldn't match.

To execute a query, first the query

processor must split the query up into a list of words (the

strtok_r() function is useful for this). Next, it

looks up in the inverted index the

list of documents that match the first word. This is our

initial matching list.

Next, for each additional word in the query, the query processor uses the inverted index to get access to the HashTable that contains the set of matching documents. For each document in the matching list, the query processor tests to see if that document is also in the HashTable. If so, it keeps the document in the matching list, and if not, it removes the document from the matching list.

Once the processor has processed all of the words, it's done. Now, it just has to rank the results, sort the results by rank, and return the sorted result list to the user.

For us, our ranking function is very simple: given a document that matches against a query, we sum up the number of times each query word appears in the document, and that's our rank. So, if the user provides the query "foo" and that words appears on a document 5 times, the rank for that document given that query is 5. If the user provides a multi-word query, our sum includes the number of times each of the words in the query appears. So, if the user provides the query "foo bar", the word "foo" appears in the document 5 times, and the word "bar" appears 10 times, the rank of the document for that query is 15. The bigger the sum, the higher the rank, and therefore the "better" the search result.

Instructions

We have provided a mostly empty skeleton file

searchshell.c.

It is up to you to complete it by implementing a program that uses

the Linux console to interact with the user. When you run the

query processor (called searchshell -- you can try a

working searchshell in the

solution_binaries/ directory), it takes from a

command line argument the name of a directory to crawl. After

crawling the directory and building the inverted index, it enters

a query processing loop, asking the user to type in a search

string and printing the results. If the user signals end-of-file

when the program asks for a search string (i.e., control-D from the

linux terminal keyboard), the program should clean up any

allocated resources - particularly memory - and shut down.

When you are ready, try running ./searchshell to

interact with your program and see if your output matches the

transcript from a search session with

our solution. Alternatively, compare your searchshell to

the searchshell we provided in the solution_binaries/

directory. Note that our ranking function does not specify an

ordering for two documents with the same rank. Documents that

have the same rank may be listed in any order, and that might

be different from the ordering in the sample transcript or

produced by the solution_binaries version of

searchshell.

Testing

As with previous homeworks, you compile your implementation

using the make command. This will result in several

output files, including an executable called test_suite.

You can run all of the tests in that suite with the command:

bash$ ./test_suite

You can also run only specific tests by passing command line

arguments into test_suite. For example, to only

test a single index for QueryProcessor, you can type:

bash$ ./test_suite --gtest_filter=Test_QueryProcessor.TestQueryProcessorSingleIndex

In general, you can specify which tests are run for any of the tests in the assignment; you just need to know the names of the tests, which can be obtained by running:

bash$ ./test_suite --gtest_list_tests

These settings can be helpful for debugging specific parts of the

assignment, especially since test_suite can be run with

these settings through valgrind and gdb!

However, you should not debug your code using only the supplied

tests! The test setup and code are complex enough that it can be

hard to isolate problems effectively without spending excessive

amounts of time trying to reverse-engineer the details of the

test_suite code.

Be sure to also run your code on small sample files and

directories where you can predict in advance exactly what data

structures should be created and what their contents should be, and

then use gdb or other tools to verify that things are

working exactly as expected.

Code Quality

In addition to passing tests, your code should be high quality and readable. This includes several aspects:

- Modularity: Your code should be divided into

reasonable modules (e.g., functions) and should not have

excessive redundancies that could be removed by replacing

redundant code with calls to suitable, possibly new,

functions. If you create any additional private (e.g.,

static) helper functions, be sure to provide good comments that explain the function inputs, outputs, and behavior. These comments can often be relatively brief as long as they convey to the reader the information needed to understand how to use the function and what it does when executed. - Readability: Your code should blend smoothly with the code surrounding it. Follow the existing conventions in the code for capitalization; naming of functions, variables, and other items; using comments to document aspects of the code; and layout conventions such as indenting and spacing.

- Automated bug checking: Use the provided tools

(

cpplint.py --clintandValgrind) to look for common coding bugs and fix reported issues before submitting your code. Exception: ifcpplintreports style problems in the supplied starter code, you should leave that code as-is. - Style guide: Refer to the Google C++ Style Guide for advice. Much of the guide applies equally well to C code as well as C++.

- Good development practices: We will look through your git activity (eg, tags and commits) to verify that you are following the development practices described in class. This may include correct tagnames, succinct commit messages, and incremental checkins (eg, commiting after a major milestone like passing a test or implementing a feature).

Submission

When you are ready to turn in your assignment, you should follow

the same procedures you used for hw0 and hw1, except this time tag

the repository with hw2-final. Remember to clean up,

commit, and push all necessary files to your repository before you

add the tag. After you have created and pushed the

tag, remember to test everything in the CSE Linux environment by

creating a new clone of the repository in a separate, empty

directory, checkout the hw2-final tag, and verify that

everything works as expected. Refer to the hw0 turnin instructions

for details, and follow those steps carefully.

When you clone your repository to check it, it normally will not

include hw1/libhw1.a, which is needed to build hw2.

Either run "make" in hw1 to recreate it using your solution to hw1,

or copy the

libhw1.a file in hw1/solution_binaries

and place it in the main hw1 folder to use the sample solution version

of that library.

If you fail to check your work and your project doesn't build properly when the same steps are done by the course staff to grade it, you may lose a huge amount of the possible credit for the assignment even if almost absolutely everything is actually correct.

Grading

We will be basing your grade on several elements:

- The degree to which your code passes the unit tests

contained in

test_suite.c. If your code fails a test, we won't attempt to understand why: we're planning on just including the number of points that the test drivers print out. - We have some additional unit tests that test a few additional cases that aren't in the supplied test drivers. We'll be checking to see if your code passes these.

- The quality and readability of your code. We'll be judging this on several qualitative aspects described above. Both code correctness and code quality matter. Both are weighted significantly in the evaluation of your project.