You build an N-gram model by processing some sample text. For instance, suppose we process this text:

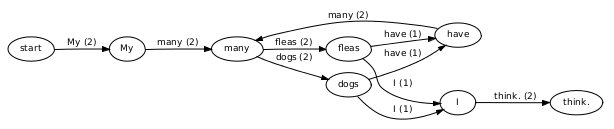

My many dogs have many fleas I think. My many fleas have many dogs I think.The 1-gram model for that text might look like this:

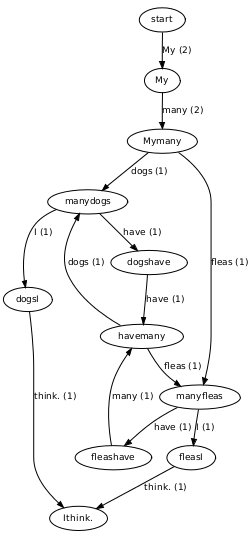

and the 2-gram model like this:

The edge labels include counts of the number of times that edge was traversed when processing the input text.

These are logical depictions. Your implementation needn't associate information with edges and nodes in exactly this way. But, you must produce a graph of this sort, and the edges must be shared_ptrs to node objects.

The second phase is producing synthetic sentences using the model. To do that, you start in the start state. While in a non-terminal state, you choose an outgoing edge at random, with probability proportional to the count of that edge. For instance, in the 2-gram model above, while in state manydogs you'd choose successor states dogsI and dogshave with equal probability. Each time you traverse an edge you output the token that labels that edge.

Important Details

You can run the sample executable on attu like this:

$ cd /cse/courses/cse333/13wi/ex12_files $ ./ex12 3 ./datafiles Constructing model austen Constructing model carroll Constructing model dickens Constructing model doyle Constructing model grimm Constructing model howard Constructing model hugo Constructing model joyce Constructing model kafka Constructing model melville Constructing model shelley Constructing model twain Constructing model whitman Enter model name to generate sentence using that model, list for a list of models, or exit to exit: hugo Caesar and Tacitus are two successive phenomena, a meeting between whom seems to be mysteriously avoided, by the One who, when He sets the centuries on the stage, to say revolt now and then, but merely to distinguish superficial facts, and always preserving the distinction between revolt, the form, and insurrection, the foundation. Enter model name to generate sentence using that model, list for a list of models, or exit to exit: exitThe command caused the application to construct 3-gram models from all xxx.txt files located in directory ./datafiles. A separate 3-gram model was constructed for each individual text file. In this case, the files correspond to the works of the authors they're named after, as available at Project Gutenberg. Once the models are constructed, you can request a new sentence in the style of any of the authors.