We have learned a lot in CSE 143 so far! On the index cards last week, a few people asked to go over what we’ve learned so far. This document reading is pretty long because I tried to cover the content so far in enough detail to be a good reference.

It is not the intent of this reading to be the one source you should use for studying, but rather as an overview of the things we’ve covered in this class so far that are important. It’s very important that you try to spend some time thinking about how the pieces of this class fit together into your own concept map to help you synthesize the material we have learned so far and help integrate new information we will learn in the coming weeks. Similarly, it would not be a fruitful exercise to try to memorize all of the material in this document, but rather you should use it as a refresher for the things you should be more familiar with. Remember, that in this class we never test you on your ability to recite definitions, but rather assess you on your ability to apply the concepts you have learned to solve problems.

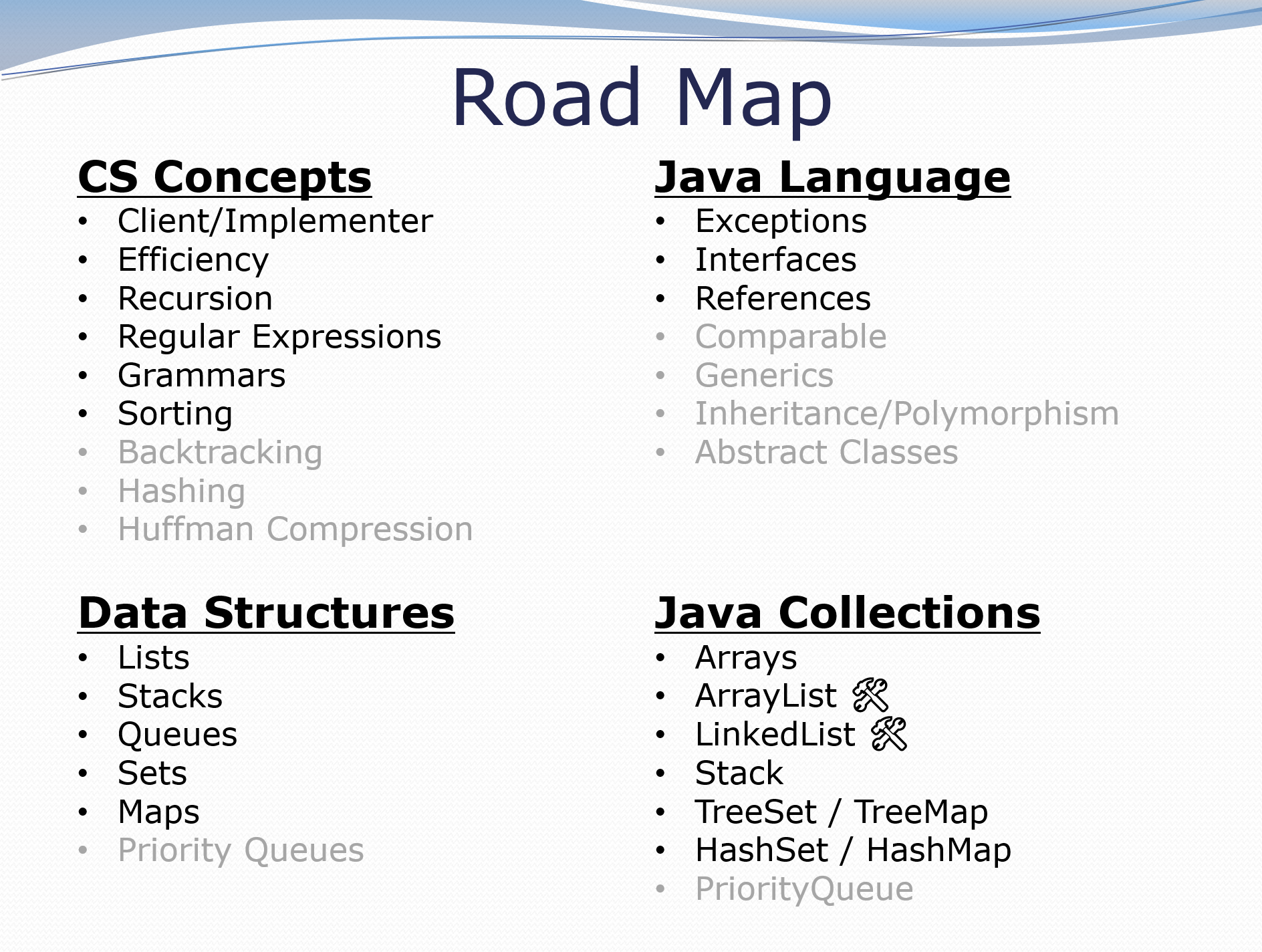

In brief, it might help to look at the slide I have been including at the start of most slide decks that shows what we are currently learning and how it fits into the course

CS Concepts¶

Client/Implementer¶

We started by talking about the idea of separating “what something does” from “how it does it” when talking with the different views of clients and implementers. This is a key idea in CS called abstraction which allows us to manage the details of our programs by encapsulating the lower level details in the class.

Practically, what this means is you should make your fields private to make sure the client doesn’t have access to them and when writing comments for your class, you should avoid exposing “implementation details” to the client. The reason we want to avoid implementation details is we don’t want to bog down the client with “how” and focus more on the “what” (just like how we are clients of radios, but don’t have to know how they work internally).

Efficiency¶

We saw that timing code has limitations because it is not precise so the results are difficult to generalize from. Instead we learned the idea of complexity which allows us to quantify the amount of time a program will need to run by estimating about how many steps will need to be run depending on the size of the input. For example, we saw if we wanted to find the index of a value in an unsorted list of n elements, in the worst case we would have to search through all n elements resulting in a time that is “about n” or what we would call O(n).

Here is a concrete example: suppose we had an array of numbers (assume there are at least 2) and wanted to find the largest difference between any two pairs of numbers. One might implement this looking at every pair of numbers

public static int largestDifference(int[] nums) {

int largestDifference = 0; // Constant work O(1)

for (int i = 0; i < nums.length; i++) { // Runs n times

for (int j = 0; j < nums.length; j++) { // Runs n times

int difference = Math.abs(nums[j] - nums[i]); // Constant work, O(1)

largestDifference = //

Math.max(difference, largestDifference); // Constant work, O(1)

} // Total O(n) for inner loop

} // Total for outer loop is O(n^2)

//

return largestDifference; // Constant work O(1)

} // Total for method is O(n^2)

Some might notice that the solution above does some unnecessary work because it checks the same pair of numbers multiple times, but versions that do this compare pairs approach will have to run in O(n^2) time so we still have this quadratic slow down when n grows.

A much better solution takes advantage of the fact that the largest difference always occurs from the smallest element and the largest element. Using this idea we can write

public static int largestDifference(int[] nums) {

int min = nums[0]; // Constant work O(1)

int max = num[0]; // Constant work O(1)

//

for (int i = 0; i < nums.length; i++) { // Runs n times

min = Math.min(min, nums[i]); // Constant work O(1)

max = Math.max(max, nums[i]); // Constant work O(1)

} // Total for loop is O(n)

//

return max - min; // Constant work O(1)

} // Total for method is O(n)

Now we can see with this solution, if we had an array of size n we would do about n things if this method was called. This is inexact, but gives you an idea about how the solution scales.

Java Knowledge¶

Object Oriented Programming¶

We introduced this new programming paradigm at the beginning of the quarter. Instead of writing a runnable program with a main method, we will instead write objects that manage state (fields) and provide behavior (methods) that a client can create and use in their programs. This is a pretty big shift from the programs we wrote in 142 because now we have to think about what the client does and doesn’t know and how to make sure the state of our object is correct between method calls.

Reference semantics¶

We have spent a fair amount of time talking about the memory model you should have for Java to understand how programs behave in the way they do. We have stressed the difference between a value and a variable. A value is concrete piece of data in a program like an integer, or true/false, or a reference (read phone number) to some object while a variable is a named box that we can store a value in. It’s very important to understand when you have the line of code

a = b;

That we evaluate this by looking inside the box labeled b and store the value inside that box in the box labeled a. This is true regardless if we are working with primitive or reference data.

For a refresher on reference semantics, you should check out this reading. Importantly, try to figure out how this relates to the linked lists we were working with two weeks ago and the discussions we were having about storing Maps with complicated values like Sets.

Abstract Data Types (ADT), Data Structures, and how they appear in Java¶

We commonly have a high-level idea of what something like a List does (it has indices, you can add and remove at any index, it has a dynamic size) which we refer to as an Abstract Data Type or ADT. An ADT is a high-level specification of what a type of collection can do. A data structure is a more concrete notion which actually talks about how you would implement the ADT. For example, we would call array lists and linked lists two data structures that meet the list ADT. Note that we still aren’t quite talking about Java here since you could implement a linked list in pretty much any programming language; this is why we have this concept in the CS Concepts section rather than anything Java specific.

In Java, we can use an interface to represent the idea of an ADT. Interfaces allows us to require certain operations (methods) and what they mean. To implement the interface, we write a class that can meet the specification of the interface. For example, Java has an interface that represents the list ADT (called List) and has classes that implement the List interface called ArrayList and LinkedList.

Below we will describe the ADT and data structures we have learned in this class and their corresponding entities in Java. The signals that we have actually implemented a version of that class to see how it works

- List: An ordered sequence of elements with indices that has dynamic length.

- Operations: add at an index, add at the end, remove at an index, get the value at a particular index, see if the list contains a value, etc.

- Java: Represented by the

Listinterface and implemented byArrayList: Uses an array behind the scenes to store the data.- Pro: Fast access to elements given an index because arrays have “random access”

- Con: Difficult to insert at an index (have to shift everything over), arrays are fixed size so we have to “grow” the array if we run out of space

LinkedList: Uses nodes that are linked together to the store the data- Pro: Really easy to add/remove from the front since we can just change the references. Not fixed size so we never have to copy data over to “grow”

- Con: Lose random access, so to go to an index we have to start at the front and follow the links until we reach the destination.

- Set: A collection of unique values where you can’t determine the order.

- Operations: add a value, remove a value, check if the set contains a value, etc.

- Java: Represented by the

Setinterface and implemented byTreeSet: Haven’t seen how to implement this- Pro: Stores the data in sorted order so it is easy to view

- Con: Just a bit slower than

HashSetwith operations that usually run inO(log(n))time

HashSet: Haven’t seen how to implement this- Pro: Extremely fast. Most operations usually run in

O(1)time (meaning it doesn’t depend on how much data is there) - Con: The data is stored in an order that looks random which makes it harder to understand what is in the Set when printing

- Pro: Extremely fast. Most operations usually run in

- Stack: A very simple ADT that only allows data to be added/removed from the top of the structure (like a stack of trays)

- Operations: push (add at top), pop (remove from top), peek (inspect top element)

- Java: Represented by the

Stackclass

- Queue: A very simple ADT that only allows data to be added at the end and removed from the front (like a line of people)

- Operations: add at end, remove from front, peek (inspect front element)

- Java: Represented by the

Queueinterface and implemented with theLinkedListclass

- Map: A more generic version of an array, which let you use any type as the keys to associate to values.

- Operations: add mapping from key to value, remove the mapping from key to value, get all the keys, get all the values, etc.

- Java: Represented by the

Mapinterface and implemented byTreeMap: Very similar toTreeSetbut implements the map ADT insteadHashMap: Very similar toHashSetbut implements the map ADT instead