Due Date: Friday, February 27, 2009 in class and submit code online (the seventh week)

|

- Bayesian learning

- 1.1. A judge is considering a criminal charge against a man. From the past records, the judge thinks that it is very unlikely (say, 5%) for the man to have committed the crime. However, the man's DNA has been found to match the forensic evidence found at the crime scene. It is known that if the DNAs are indeed the same, the test will report match 99.9% of times; however, it also has 0.5% of chance to report match when the DNAs aren't the same. What should the judge conclude? Explain.

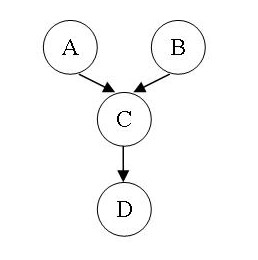

- 1.2. Consider the following Bayesian network:

- Is A independent of B?

- Is A independent of D?

- Is D independent of A given C?

- Is A independent of B given D?

Justify your answers.

- 1.3. Consider a Bayesian network with two Boolean nodes A and B, where A is the parent of B. Suppose you have a training set composed of the following examples in the form (A,B), with "?" indicating a missing value: (0,0), (0, ?), (0, 1), (0, ?), (1, 1), (1, ?). Show the sequence of filled-in values and parameter estimates produced by the EM algorithm, assuming the parameters are initialized ignoring missing values.

- Neural networks:

- 2.1. (Mitchell problem 4.2) Design a two-input perceptron that implements the boolean function A ^ (NOT B). Design a two-layer network of percetrons that implements A XOR B.

- 2.2. (Mitchell problem 4.5) Derive a gradient descent training rule for a single unit with output o, where o=w0+w1*x1+w1*x1*x1+....+wn*xn+wn*xn*xn.

- Naive Bayes for text classification.

- In this project, we will revisit the task of classifying documents into topics as in Project 3 of Homework 2, using the same Reuter dataset. But this time, you should implement the multinomial naive Bayes algorithm instead of KNN. Naive Bayes used to be the de facto method for text classification (now supplanted by SVMs). Try various smoothing parameters for the Naive Bayes learner. What's the accuracy of your learner? Which parameters work best? Any qualitative and/or quantitative difference in the result comparing to that of KNN? Explain.

-

Turn in the following:

- Your code for Problem 3. Submit your files here. The due date is 11:30AM, February 27, 2009. Your code should contain appropriate comments to facilitate understanding.

- A report with your answers to the homework problems. For problem 3, as usual, you should describe in high-level how your code works and answer the questions.

Good luck, and have fun!

|